We’ve all seen it. That one line that follows us from Netflix to YouTube, Amazon to Instagram. A phrase so seemingly innocent, yet so profoundly influential. “Just for you.” It’s the quiet whisper of an algorithm narrowing your world—one click at a time.

In a digital landscape engineered for personalization, designer Yoon Bee Baek is asking: What if the best thing for us is actually not what we like?

Her answer took shape as De.fault, a speculative, subversive Chrome extension that rewires the way we engage with recommendation systems. Developed as a Master’s thesis in the Designer as Entrepreneur program at the School of Visual Arts (SVA), De.fault challenges the core of today’s attention economy with a deceptively simple mission: to reduce bias and broaden human curiosity.

And its impact reached beyond academia. In 2024, De.fault was honored by the Core77 Design Awards — winning in the Speculative Design category for its bold challenge to algorithmic norms.

Breaking the Loop of Predictability

Today, an average of 48 trackers follow us across the web, with social media platforms packing up to 160 per visit. Recommendation algorithms have become the beating heart of nearly every online business model.

We now live in a world where constant surveillance and pre-chewed information feel so routine that phrases like “Are you feeding your algorithm nicely?” no longer sound strange.

Curiosity — once a vital engine of personal and societal growth — is being quietly outsourced to tech companies whose business models rely on predictive comfort, not unexpected discovery.

“This isn’t just about convenience,” Baek says. “It’s about living in a system that drives us to accumulate and accelerate, and question less.”

The result? Filter bubbles. Social rigidity. Algorithmic echo chambers that reduce exploration to a recursive loop of our past behavior. The more we scroll, the smaller our world becomes.

AdTech Got Sneaky. So We Got Sneakier.

Let’s say you’re looking for a better night’s sleep and you search for a mattress. From there, your digital world becomes a carousel of pillows, lamps, more mattresses — all designed to optimize your buying behavior.

“But what if instead of showing you a new mattress,” Baek asks, “the algorithm offered you a TED Talk on good sleep? Or a collection of short, mindful readings for bedtime?”

This subtle redirection is the foundation of De.fault. It doesn’t just recommend differently — it recommends deliberately, with the goal of expanding context, not collapsing it.

How De.fault Works

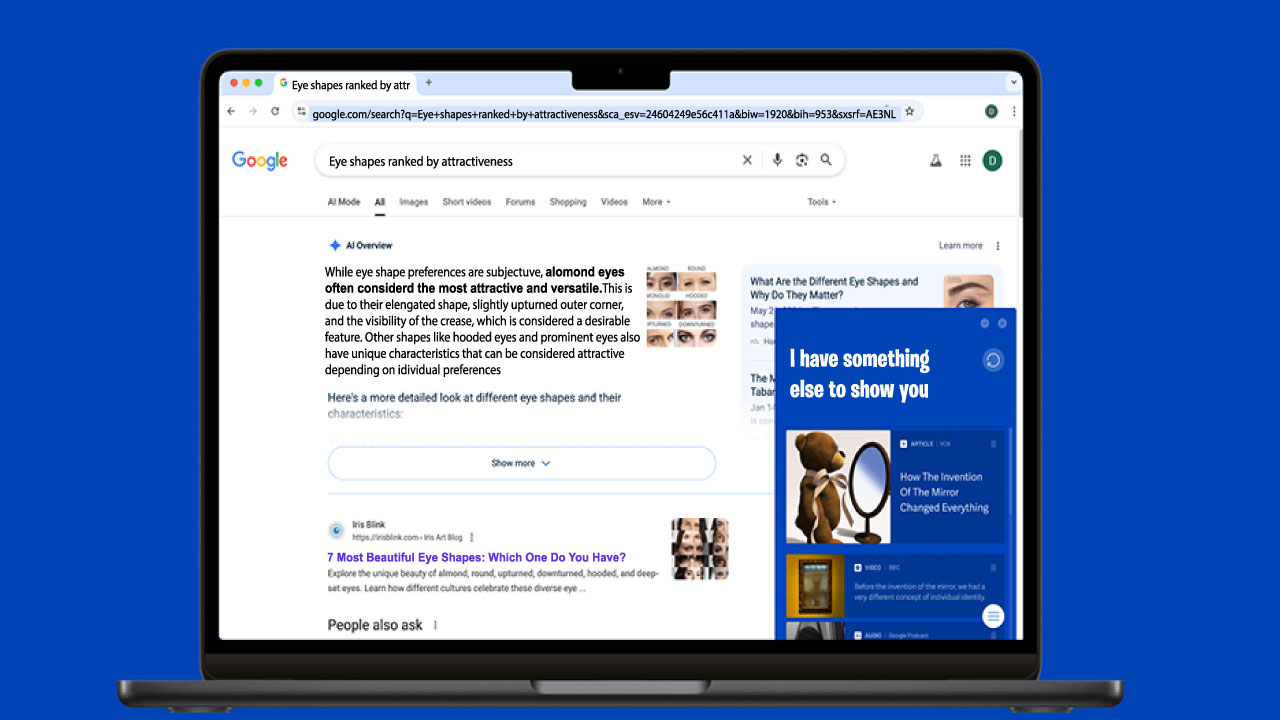

At its core, De.fault is a purposeful de-personalization engine. Built as a Chrome extension, it intercepts recommendation streams and injects unexpected but meaningfully adjacent content. It doesn’t track you. It doesn’t sell you. Instead, it offers interpretive friction — small disruptions that help you re-see the world around you.

So how does it work in practice?

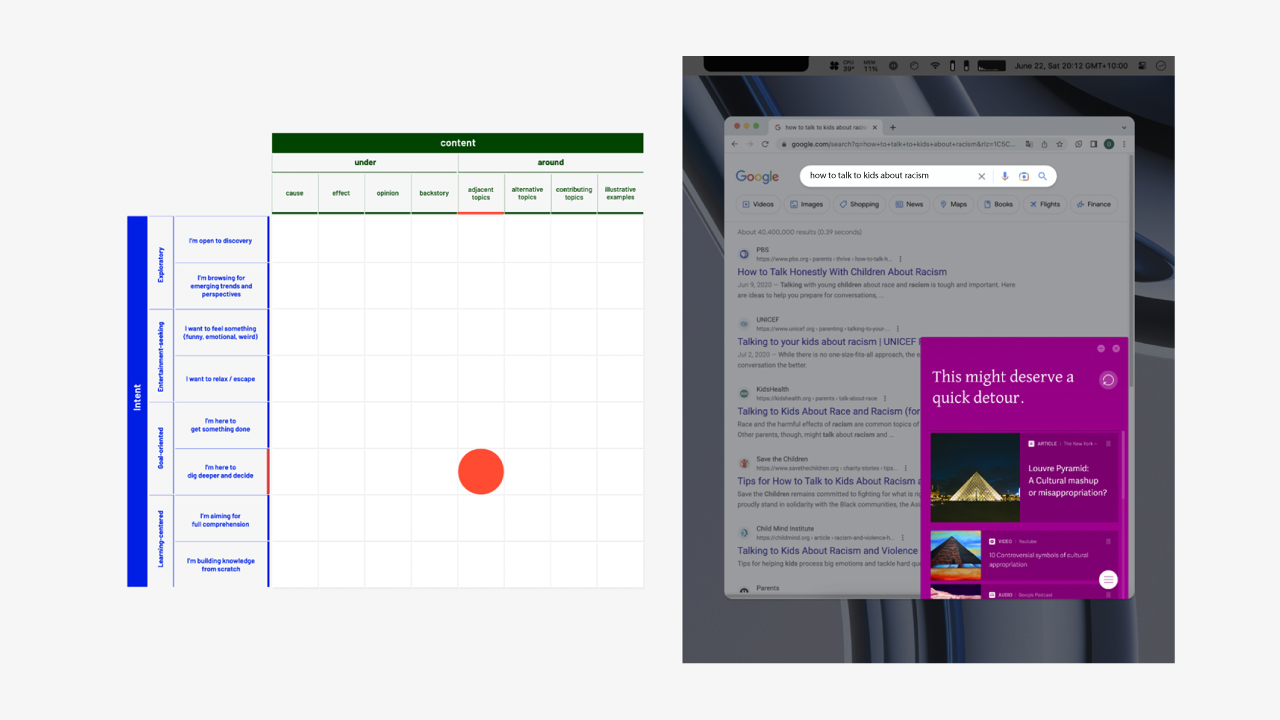

On the internet, there’s always an interplay between two forces: intent and content.

- Intent is the reason behind your search — the curiosity, concern, or goal that brings you online.

- Content is what you get in return — the information the system decides fits your question.

While most recommendation algorithms aim to refine and narrow the gap between the two, De.fault works in the opposite direction. It intentionally looks under or around topics — offering perspective instead of precision. To do this, it uses a simple yet powerful matrix: intersecting intent with content that invites lateral thinking.

Take, for instance, a search like “thank you email that gets replies.” Rather than offering example subject lines or template hacks, De.fault might surface content that prompts critical reflection on the commodification of sincerity, performative politeness, or emotional labor in professional settings.

Another example: the search term “how to talk to kids about racism.” Most systems will direct you to parenting tips or conversation guides. De.fault, however, may intersect that search with adjacent topics, such as examples of cultural appropriation in art or representation in media, broadening the perspective into a wider discourse worth exploring.

Think of it as a talkative friend who always finds a way to tell you something you didn’t expect — but maybe needed to hear. By connecting disparate subjects and offering counter-narratives, De.fault acts as a digital interlocutor, enriching the default lens through which we experience the internet.

Business Plan: From De.fault to Me.fault and We.fault

De.fault is the first expression of Baek’s long-term venture vision — a free and shareable program built within a larger system, with two planned expansions to follow.

- Me.fault – Encouraging personal change by introducing developmental bias — ideas that challenge you just enough to inspire growth.

- We.fault – A collaborative tool to help communities educate each other and dismantle collective assumptions.

Together, they move from simply breaking filter bubbles to building new, intentional ways of thinking and being online.

Why This Matters

The stakes couldn’t be higher. We live in a time where our beliefs, behaviors, and even our moods are being shaped by invisible systems optimized for engagement, not enlightenment. De.fault offers a rare counterproposal: a technology built not to confirm who you are, but to question who you might become.

“The name comes from ‘De,’ as in undoing or unraveling, and ‘Default,’ as in taking the most obvious answer to something,” Baek explains. “Rooted in the project’s commitment to educational responsibility, it’s meant to oppose the rigid defaults we passively adopt and offer a fault line for new ideas to break through.”

In other words: a soft rebellion, written in code.

Watch & Learn

Interested in seeing De.fault in action? Watch the full venture plan here: https://vimeo.com/884794698

Read more about the project and the research behind it at:

Final Thought

De.fault began as a thesis, but it reveals itself as a wake-up call for anyone who’s ever felt like their feed knows them a little too well. In a global culture of optimization, it dares to be inefficient. Unpredictable. Human.

Because maybe the best recommendations aren’t the ones we might also like — but the ones that help us think differently, and live more fully.