Agile and DevOps approaches have become the norm, and Platform Engineering and GitOps are pushing deployment frequency further still. In this high-stakes environment, regression testing, the safety net meant to ensure that new code does not break existing functionality, has often been a bottleneck. Traditional manual regression suites are too slow, too expensive, and too brittle to keep up.

However, a substantial transformation is under way. Regression test automation is changing from an isolated, post-development activity into a sophisticated, continuous, and tightly integrated part of the software development life cycle (SDLC).

The Belitsoft software testing company explores the major developments that will shape regression test automation in 2025. We will look at how testing is evolving to establish a continuous quality loop, how AI and ML are moving from buzzwords to indispensable tools, and how new approaches to test data and maintenance are appearing. We will also look at the emergence of low-code solutions that democratize test automation and the growing significance of non-functional testing. Lastly, we will discuss the overall strategic shift to a business-centric, risk-based approach to quality assurance.

The AI and ML Revolution: From Script Creation to Predictive Analysis

Machine learning (ML) and artificial intelligence (AI) are evolving from experimental features into mainstays of contemporary test automation systems. They have many uses in regression testing, and those uses are growing more sophisticated.

Intelligent Test Case Generation and Optimization

Creating and, more importantly, maintaining test cases is one of the biggest challenges in regression testing. AI is addressing this directly.

- Code Change Analysis: AI-driven tools can now statically analyze code commits to determine what has changed. By mapping these changes to application functionality (typically using control flow graphs or data flow analysis), the AI can determine which parts of the application are most at risk and create or select specific test cases to validate them. As a result, the testing paradigm is changing from “test everything” to “test what matters.”

- Self-Healing Tests: Fixing tests broken by UI changes is a huge maintenance burden in test automation. Even though the functionality is correct, a test may fail if an element’s ID, CSS selector, or XPath changes. ML-based algorithms can now learn multiple properties of each UI element. When a change is detected, the algorithm can automatically heal the script or recommend alternative locators, significantly reducing false failures and maintenance costs.

- Test Suite Optimization: Regression suites grow bloated over time, often containing low-value, redundant, or outdated tests. Machine learning algorithms can examine test execution history, including pass/fail rates, code coverage, execution time, and defect history, to identify candidates for removal. They can also produce a lean, high-impact “smoke” regression suite that executes in minutes, alongside a more complete suite for nightly builds, so that test resources are used efficiently.
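The self-healing idea can be illustrated with a minimal sketch. It does not use ML; instead it assumes a simple attribute-overlap heuristic as a stand-in for a learned model. The `Element` class, the `fingerprint` dictionary, and the 0.5 threshold are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """Simplified stand-in for a DOM element (hypothetical, not a real driver API)."""
    tag: str
    attrs: dict = field(default_factory=dict)


def similarity(fingerprint: dict, element: Element) -> float:
    """Fraction of recorded attributes that still match the candidate element."""
    if not fingerprint:
        return 0.0
    matches = sum(1 for key, value in fingerprint.items()
                  if element.attrs.get(key) == value)
    return matches / len(fingerprint)


def find_with_healing(page: list, fingerprint: dict, threshold: float = 0.5):
    """Return (element, healed); healed is True when the fallback was used."""
    # 1. Try the primary locator first: the id recorded when the test was written.
    primary_id = fingerprint.get("id")
    if primary_id is not None:
        for el in page:
            if el.attrs.get("id") == primary_id:
                return el, False
    # 2. Otherwise pick the element that shares the most recorded attributes.
    best = max(page, key=lambda el: similarity(fingerprint, el), default=None)
    if best is not None and similarity(fingerprint, best) >= threshold:
        return best, True
    return None, False


# Attributes captured at recording time (assumed example data).
fingerprint = {"id": "submit-btn", "text": "Submit",
               "type": "submit", "class": "btn primary"}

# The page after a redesign: the button's id has changed.
page_after_redesign = [
    Element("a", {"id": "help", "text": "Help"}),
    Element("button", {"id": "btn-submit-v2", "text": "Submit",
                       "type": "submit", "class": "btn primary"}),
]

element, healed = find_with_healing(page_after_redesign, fingerprint)
```

In this sketch the renamed button still matches three of the four recorded attributes, so it is found through the fallback and flagged as healed. A real tool would typically log the healed locator for human review rather than accept it silently.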

Visual Testing and UI Validation

Layout, formatting, color, and other visual components are difficult for traditional automation tools to verify. AI-powered visual validation tools use computer vision to compare screenshots of applications to a baseline.

Visual AI can be trained to focus solely on structural and stylistic components while ignoring dynamic or irrelevant content (like news tickers or user-specific data).
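The principle of comparing against a baseline while ignoring dynamic regions can be sketched without any computer vision at all. The example below is a plain pixel comparison, not a visual AI model. It assumes images are represented as lists of rows of `(r, g, b)` tuples, and that ignore regions are given as hypothetical `(x0, y0, x1, y1)` rectangles with exclusive upper bounds.

```python
def diff_ratio(baseline, current, ignore_regions=()):
    """Return the fraction of compared pixels that differ from the baseline."""
    same_shape = len(baseline) == len(current) and all(
        len(a) == len(b) for a, b in zip(baseline, current))
    if not same_shape:
        raise ValueError("images must have the same dimensions")

    def ignored(x, y):
        # A pixel is skipped when it falls inside any ignore rectangle.
        return any(x0 <= x < x1 and y0 <= y < y1
                   for x0, y0, x1, y1 in ignore_regions)

    compared = differing = 0
    for y, (row_a, row_b) in enumerate(zip(baseline, current)):
        for x, (pixel_a, pixel_b) in enumerate(zip(row_a, row_b)):
            if ignored(x, y):
                continue
            compared += 1
            if pixel_a != pixel_b:
                differing += 1
    return differing / compared if compared else 0.0


WHITE, RED = (255, 255, 255), (255, 0, 0)

# A 4x4 all-white baseline screenshot.
baseline = [[WHITE] * 4 for _ in range(4)]

# The current screenshot differs only in the top row (e.g. a news ticker).
current = [row[:] for row in baseline]
current[0][1] = RED

ticker_region = (0, 0, 4, 1)  # covers the whole top row
strict = diff_ratio(baseline, current)
masked = diff_ratio(baseline, current, ignore_regions=[ticker_region])
```

Here the strict comparison reports a difference, while the masked comparison does not, which is the behavior a visual regression check needs for content that legitimately changes between runs.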

Predictive Analytics and Risk Assessment

This is perhaps the most forward-looking use of AI. Predictive analytics selects tests based on what is likely to break, not only on what was changed. Drawing on historical development patterns, the automation system can proactively focus testing on high-risk areas, allocating resources more efficiently and possibly flagging risky areas before bugs are even introduced.

Shift-Left and Shift-Right: Creating a Continuous Quality Loop

The concepts of “Shift-Left” (testing earlier in the development cycle) and “Shift-Right” (testing in production) are coming together to form a continuous, all-encompassing feedback loop for quality.

Automating Unit and API Testing

Although the idea of shift-left is not new, its use is becoming more automated and sophisticated.

- API-First Regression Testing: The user interface (UI) frequently turns into a thin client on top of a complicated network of APIs as applications depend more and more on microservices architectures. Compared to UI-based testing, automating regression tests at the API level is quicker, more reliable, and offers more coverage. Regression test baselines can be automatically generated for each endpoint by tools that integrate with OpenAPI/Swagger specs, ensuring that backend integrity is preserved with each build.

- Unit Test Generation: Tools that use static code analysis can automatically generate unit test skeletons, or even complete test cases with intelligent mock data. This encourages developers to build a strong regression safety net at the most fundamental level.
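As a rough illustration of how regression baselines might be derived from an OpenAPI document, the sketch below walks the `paths` object of an already-parsed spec and emits one test case per operation, expecting the documented 2xx status codes. The function name `baseline_cases` and the shape of the generated cases are assumptions for this example, not the output format of any particular tool.

```python
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def baseline_cases(spec: dict) -> list:
    """Build one regression case per documented operation in an OpenAPI spec."""
    cases = []
    paths = spec.get("paths", {})
    for path in sorted(paths):
        item = paths[path]
        for method in sorted(item):
            if method.lower() not in HTTP_METHODS:
                continue  # skip non-operation keys such as "parameters"
            responses = item[method].get("responses", {})
            # Keep only the success codes the spec documents ("200", "201", ...).
            success = sorted(str(code) for code in responses
                             if str(code).startswith("2"))
            cases.append({
                "method": method.upper(),
                "path": path,
                "expected_status": success,
            })
    return cases


# A tiny, hand-written spec fragment (assumed example data).
spec = {
    "openapi": "3.0.3",
    "paths": {
        "/orders": {
            "get": {"responses": {"200": {"description": "List orders"}}},
            "post": {"responses": {"201": {"description": "Created"},
                                   "400": {"description": "Invalid order"}}},
        },
        "/orders/{id}": {
            "parameters": [],
            "get": {"responses": {"200": {"description": "One order"},
                                  "404": {"description": "Not found"}}},
        },
    },
}

cases = baseline_cases(spec)
```

Each generated case could then be executed against a build and compared with a stored response snapshot. That execution step is deliberately left out here, since it depends on the HTTP client and environment in use.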

Testing in Production

Once considered heretical, testing in production now provides, thanks to advances in methodology, invaluable real-world feedback that a staging environment cannot match.

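- Blue-Green Deployments and Canary Releases: In a canary release, a small subset of users (the canary group) receives the new code while the majority stay on the stable version. Automated regression checks run continuously against the canary, combining synthetic transactions with real-user monitoring. If metrics show a negative deviation, the release is automatically rolled back before it has a significant impact. Blue-green deployments apply the same idea at the environment level: two identical environments run side by side, and traffic is switched to the new one only after it passes validation.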

- Chaos Engineering: First popularized by Netflix, chaos engineering tests a production system’s resilience by deliberately introducing failures (such as stopping servers or limiting network bandwidth). Automating these experiments and their validation checks turns them into regression tests for system stability and fault tolerance. This ensures that the system’s “immune response” to failure continues to function after every new deployment.

- A/B Test Validation: Automated checks can verify that each A/B test variation works correctly from both a functional and an analytical standpoint, preventing a faulty experiment from impairing the user experience.

Because of this ongoing cycle, a bug discovered on the “right,” in production, is immediately shifted “left” to become a new automated test case, preventing its recurrence in the subsequent development cycle.
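The automatic rollback decision described for canary releases can be sketched as a simple comparison of canary metrics against the stable version. The `Metrics` fields, the thresholds, and the three verdict strings below are assumptions chosen for illustration. Real deployment tooling usually applies statistical tests over time windows rather than a single snapshot.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    requests: int
    errors: int
    p95_latency_ms: float

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_verdict(stable: Metrics, canary: Metrics,
                   max_error_delta: float = 0.01,
                   max_latency_ratio: float = 1.2,
                   min_requests: int = 500) -> str:
    """Return "wait", "rollback", or "promote" for the canary release."""
    # Not enough traffic yet to judge the canary either way.
    if canary.requests < min_requests:
        return "wait"
    # Roll back if the canary's error rate is noticeably worse than stable.
    if canary.error_rate - stable.error_rate > max_error_delta:
        return "rollback"
    # Roll back if tail latency has degraded beyond the allowed ratio.
    if canary.p95_latency_ms > stable.p95_latency_ms * max_latency_ratio:
        return "rollback"
    return "promote"


stable = Metrics(requests=100_000, errors=200, p95_latency_ms=180.0)
healthy_canary = Metrics(requests=1_000, errors=3, p95_latency_ms=190.0)
failing_canary = Metrics(requests=1_000, errors=35, p95_latency_ms=190.0)

print(canary_verdict(stable, healthy_canary))
print(canary_verdict(stable, failing_canary))
```

Keeping the decision in a small pure function like this makes the rollback policy itself easy to regression-test, independently of the deployment platform that calls it.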

The Emergence of Low-Code and Codeless Automation Platforms

For many organizations, the lack of qualified automation engineers continues to be a major problem. Platforms for low-code and codeless test automation are becoming a potent way to make test creation more accessible.

- Democratization of Test Creation: Business analysts, product owners, and manual QA engineers can now create complex automated regression tests without ever writing a line of code, thanks to platforms that integrate visual modeling, drag-and-drop interfaces, and natural language processing.

- Improved Collaboration: When the domain experts who are most familiar with the application can author tests directly, the regression suite’s quality and business relevance improve significantly. This promotes a collaborative quality culture in which all team members participate.

- Focus on Logic, Not Syntax: Testers can focus on the “what” (the test scenario and validation points) rather than the “how” (the labyrinthine code required to communicate with the browser). This leads to faster script development and lower entry barriers.

- The Hybrid Future: It is important to remember that the ability to “drop down” into code (such as Python or JavaScript) is still necessary for extremely complicated or customized scenarios. In 2025, the trend is toward hybrid platforms that provide codeless simplicity for 80% of tests and code-level flexibility for the remaining 20%.
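One way to picture the hybrid model is a keyword-driven runner: most tests are plain data that a visual editor could produce, while a small hook lets engineers register custom steps in code. The sketch below is a toy example under that assumption. The keyword names, the `custom_step` decorator, and the step format are all hypothetical and do not reflect any specific platform.

```python
# Built-in keywords that a codeless editor might expose (hypothetical names).
def _set(ctx, key, value):
    ctx[key] = value


def _assert_equals(ctx, key, expected):
    actual = ctx.get(key)
    if actual != expected:
        raise AssertionError(f"{key}: expected {expected!r}, got {actual!r}")


KEYWORDS = {"set": _set, "assert_equals": _assert_equals}


def custom_step(name):
    """Decorator that lets engineers add a code-level step to the keyword set."""
    def register(fn):
        KEYWORDS[name] = fn
        return fn
    return register


@custom_step("apply_discount")
def apply_discount(ctx, percent):
    # The "drop down into code" case: logic too specific for a generic keyword.
    ctx["total"] = round(ctx["total"] * (1 - percent / 100), 2)


def run(steps):
    """Execute declarative steps in order and return the final context."""
    ctx = {}
    for step in steps:
        KEYWORDS[step["do"]](ctx, **step.get("with", {}))
    return ctx


# A test expressed purely as data, as a low-code tool might store it.
checkout_test = [
    {"do": "set", "with": {"key": "total", "value": 200.0}},
    {"do": "apply_discount", "with": {"percent": 15}},
    {"do": "assert_equals", "with": {"key": "total", "expected": 170.0}},
]

final_context = run(checkout_test)
```

The test author only ever sees the data in `checkout_test`, while the custom `apply_discount` step is written once by an engineer and then becomes available as just another keyword.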

Smart Test Data Management

Without the proper data to run it, even the most complex automated test is worthless. Legacy techniques like restoring entire production databases are too slow and insecure, and they risk violating regulations such as the CCPA and GDPR, so test data management (TDM) is receiving increasing attention.

- Synthetic Data Generation: AI is being used to create high-quality, synthetic test data that replicates the relationships and statistical characteristics of production data while excluding any actual personal data. This is compliant, scalable, and quick.

- Data Masking and Subsetting: Automated tools can produce a masked (obfuscated) subset of the database for situations where production data must be used. This maintains security and privacy while offering a manageable, pertinent dataset for testing.

- On-Demand Data Provisioning: By integrating with CI/CD pipelines, TDM tools can automatically provision the necessary test data for every test run, whether synthetic, masked, or a specific dataset. This removes flakiness caused by data state problems and ensures that tests always run against clean, consistent, and appropriate data.
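The masking and synthetic-generation ideas above can be sketched with the Python standard library alone. This is a simplified illustration: it uses a salted hash for deterministic masking and a seeded random generator for synthetic records, rather than an AI model. The field names, plan weights, and salt value are assumptions for the example.

```python
import hashlib
import random


def mask_email(email: str, salt: str = "test-env") -> str:
    """Deterministically replace a real email with a pseudonymous one.

    The same input always yields the same output, so joins across masked
    tables keep working. The salt here is a placeholder, not a real secret.
    """
    digest = hashlib.sha256((salt + email.lower()).encode("utf-8")).hexdigest()
    return f"user_{digest[:12]}@example.test"


def synthetic_customers(count: int, seed: int = 42) -> list:
    """Generate reproducible fake customers with a plausible plan mix."""
    rng = random.Random(seed)  # seeded so every test run sees identical data
    plans = ["free", "pro", "enterprise"]
    return [
        {
            "id": i,
            "email": f"customer{i}@example.test",
            # Weighted choice roughly mimics a skewed production distribution.
            "plan": rng.choices(plans, weights=[70, 25, 5])[0],
            "orders": rng.randint(0, 20),
        }
        for i in range(1, count + 1)
    ]


masked = mask_email("Alice@Corp.com")
customers = synthetic_customers(5)
```

Note that a salted hash is pseudonymization rather than full anonymization, so whether it satisfies a given privacy regulation is a question for the organization's compliance review, not something this sketch settles.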

The Integration with DevOps: CI/CD Pipelines as the Central Nervous System

Regression testing cannot be conducted in isolation. Its deep integration into the CI/CD pipeline is the mechanism that allows velocity without compromising quality.

- Pipeline-Driven Execution: Pipeline events trigger automated regression tests: a simple smoke suite for each pull request, a more extensive suite for a successful build in a staging environment, and a final validation suite prior to production deployment.

- Quality Gates: Test results serve as quality gates. The pipeline can be configured to automatically “fail” and halt the build if a critical regression test fails, keeping faulty code from moving closer to production. This gives developers quick, automated feedback.

- Infrastructure as Code (IaC) for Testing: Tools such as Terraform and Ansible are increasingly used to provision and configure test environments automatically. This reduces “it works on my machine” issues and makes regression test results more reliable by keeping the test environment as close a replica of production as possible.
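The mapping from pipeline events to suites, and the quality gate that stops a build, can be sketched in a few lines. The event names, suite names, and the notion of a "critical" test set are assumptions for illustration. In practice this logic usually lives in the CI system's own configuration rather than in a standalone script.

```python
# Which regression suite runs for which pipeline event (hypothetical names).
SUITE_BY_EVENT = {
    "pull_request": "smoke",
    "staging_deploy": "full",
    "pre_production": "final_validation",
}


def suite_for_event(event: str) -> str:
    """Pick the regression suite that a pipeline event should trigger."""
    try:
        return SUITE_BY_EVENT[event]
    except KeyError:
        raise ValueError(f"no regression suite configured for event {event!r}")


def quality_gate(results: dict, critical: set):
    """Return (passed, blocking_failures) for a finished test run.

    `results` maps test name to True (pass) or False (fail). Only failures
    of tests listed in `critical` block the build.
    """
    blocking = sorted(name for name, ok in results.items()
                      if not ok and name in critical)
    return (not blocking, blocking)


results = {"login": True, "checkout": False, "avatar_upload": False}
passed, blocking = quality_gate(results, critical={"login", "checkout"})

# A CI step would typically turn the verdict into a process exit code,
# where any non-zero value fails the pipeline stage.
exit_code = 0 if passed else 1
```

In this run the failed `avatar_upload` test is reported but does not block the build, while the failed `checkout` test does, which reflects the distinction between critical and non-critical regressions.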

Expansion Beyond Functional Testing: Non-Functional Regression

In order to make sure that accessibility, security, and performance do not deteriorate over time, regression testing is being extended to include non-functional requirements.

- Performance Regression Testing: Automated performance tests, such as those using JMeter scripts, are being incorporated into the continuous integration pipeline as a regression check. A build may now be rejected not only for functional errors but also if it causes a noticeable drop in performance, such as slower response times or lower throughput under load.

- Security Regression Testing: Static Application Security Testing (SAST) and Dynamic Application Security Testing (DAST) tools run automatically as part of the pipeline. They serve as a regression suite for security vulnerabilities, helping to prevent known flaws from being reintroduced and new ones from being created.

- Accessibility Regression Testing: Regression suites increasingly incorporate automated accessibility checks (with tools like axe-core) as digital accessibility becomes a legal and ethical requirement. By automatically checking new pages and components for WCAG compliance issues, these tools can catch accessibility regressions.
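A performance regression check of the kind described above often boils down to comparing a latency percentile against a stored baseline with some tolerance. The sketch below assumes response times have already been collected (for example, exported from a load-test run). The nearest-rank percentile method, the 95th percentile, and the 10% tolerance are illustrative choices, not fixed rules.

```python
import math


def percentile(samples, pct: int) -> float:
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def is_perf_regression(baseline_ms, current_ms,
                       pct: int = 95, tolerance: float = 0.10) -> bool:
    """True if the current percentile exceeds the baseline by more than tolerance."""
    allowed = percentile(baseline_ms, pct) * (1 + tolerance)
    return percentile(current_ms, pct) > allowed


# Response times in milliseconds (assumed example data).
baseline_ms = list(range(100, 200))          # 100 samples: 100..199
slower_ms = [t * 1.3 for t in baseline_ms]   # every request 30% slower

baseline_p95 = percentile(baseline_ms, 95)
regressed = is_perf_regression(baseline_ms, slower_ms)
```

Using a percentile rather than the mean keeps the check sensitive to tail latency, and the tolerance absorbs normal run-to-run noise so that the gate does not fail builds on small fluctuations.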

Strategic Shift: Risk-Based and Business-Critical Regression Testing

The final trend is a strategic change in perspective. Instead of aiming for 100% test automation coverage, which is often a costly and futile endeavor, the goal is now to achieve optimal coverage based on risk and business impact.

Risk-Based Selection of Tests: Analytics are being used by organizations to prioritize what needs to be tested. Among the factors are:

- Impact of a Code Change: Which features were impacted by a commit?

- Business Criticality: How much does this feature increase revenue or user satisfaction?

- Failure History: In the past, which parts of the application have experienced the most bugs?

- Customer Usage Analytics: What are the most frequently used user journeys? (The aim is to test what customers actually use most often.)
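The four factors above can be combined into a single priority score per test. The sketch below uses a simple weighted sum with each factor normalized to the range 0 to 1. The weights, the `Candidate` fields, and the sample values are assumptions made for illustration; an organization would calibrate them from its own data.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    change_impact: float         # 0..1: how much the commit touches this area
    business_criticality: float  # 0..1: revenue or satisfaction impact
    failure_history: float       # 0..1: normalized past defect density
    usage: float                 # 0..1: share of users on this journey


# Illustrative weights that sum to 1.0 (not derived from real data).
WEIGHTS = {
    "change_impact": 0.35,
    "business_criticality": 0.30,
    "failure_history": 0.20,
    "usage": 0.15,
}


def risk_score(candidate: Candidate, weights: dict = WEIGHTS) -> float:
    """Weighted sum of the four risk factors."""
    return sum(weight * getattr(candidate, factor)
               for factor, weight in weights.items())


def select_tests(candidates, budget: int) -> list:
    """Return the names of the `budget` highest-risk tests, riskiest first."""
    ranked = sorted(candidates, key=risk_score, reverse=True)
    return [c.name for c in ranked[:budget]]


candidates = [
    Candidate("profile_avatar", 0.0, 0.2, 0.1, 0.3),
    Candidate("checkout", 1.0, 1.0, 0.6, 0.9),
    Candidate("search", 0.5, 0.8, 0.3, 1.0),
]

selected = select_tests(candidates, budget=2)
```

With these sample values, the checkout and search journeys rank ahead of the avatar upload, so a time-boxed regression run would cover the riskiest areas first.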

Emphasis on User Journeys: Rather than atomized testing of individual features, the focus is on automating end-to-end regression tests that replicate important business workflows and user journeys, such as “search for a product, add to cart, check out.” Making sure the main business process always runs is more valuable than checking each and every UI element individually.

Conclusion: The Autonomous Quality Engine of 2025

The trends for 2025 recast regression test automation as a powerful enabler rather than a bottleneck. It is becoming:

- Intelligent: Driven by AI and ML for test creation, maintenance, and optimization.

- Continuous: Spanning the SDLC from left to right and deeply integrated into CI/CD.

- Inclusive: Accessible to all members of a product team through low-code platforms.

- Holistic: Addressing issues related to accessibility, security, performance, and functionality.

- Strategic: Not just about coverage, but about user value and business risk.

The regression suite is becoming a self-optimizing, dynamic resource. To provide confidence at the speed of contemporary development, it will automatically adapt to the application it tests, anticipate likely failure points, and run exactly the tests that are needed. Companies that invest in these trends will not only accelerate their release cycles but also create software that is more valuable, secure, and robust, making quality assurance a clear competitive advantage. The future of regression testing in the software development industry is autonomous, intelligent, and strategically important.

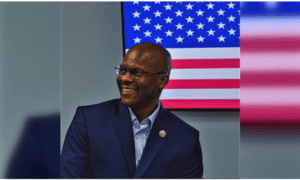

About the Author:

Dmitry Baraishuk is a partner and Chief Innovation Officer at the software development company Belitsoft (a Noventiq company). He has led a department specializing in custom software development for 20 years. The department has delivered hundreds of successful projects in AI software development, healthcare and finance IT consulting, application modernization, cloud migration, data analytics implementation, and more for startups and enterprises in the US, UK, and Canada.