What’s Hugging Face?

Nope, not the cute 🤗 emoji.

TL;DR

Hugging Face is a data science and community platform offering:

- Hugging Face Transformers: tools that let us build, train, and deploy machine learning models using open source technologies.

- A broad community of researchers, data scientists, and machine learning engineers who come together on the platform to get support, share ideas, and contribute to open source projects.

Hugging Face Transformers

Transformers is a widely used open source Python library that provides pre-trained models for a broad range of natural language processing (NLP) tasks.

Why do you need Hugging Face Transformers?

When working on a machine learning problem, adapting and repurposing an existing solution usually gets you to a result faster than starting from scratch.

Reusing existing models not only helps ML engineers and data scientists but also saves businesses computational costs, because adapting a pre-trained model requires far less training than building one from the ground up. Many companies now release open source libraries with pre-trained models, and Hugging Face is built on the same idea.

Hugging Face Platform

The platform aims to democratize NLP and make models accessible to everyone. It offers several open source libraries, including Tokenizers, Datasets, and Transformers. Hugging Face gained mainstream traction after launching the Transformers library along with a wide variety of related tools.

The focus?

NLP.

Hugging Face focuses on NLP tasks, where the goal is not only to recognize words but also to understand their meaning and context.

Computers can’t process information the way humans do, which is why we need a pipeline: a cascading sequence of steps for processing text.
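In Transformers, the `pipeline()` function bundles preprocessing (the tokenizer), the model, and postprocessing into a single call. To make the idea of "a cascading sequence of steps" concrete without downloading a model, here is a toy sketch in plain Python. The vocabulary, the per-token weights standing in for a trained model, and every function name are hypothetical; real tokenizers use subword schemes and real models are neural networks.

```python
import re
from functools import reduce
from typing import Callable

# Hypothetical toy vocabulary; id 0 is reserved for unknown tokens.
VOCAB = {"[UNK]": 0, "i": 1, "love": 2, "hate": 3, "this": 4, "movie": 5}
# Hypothetical stand-in for a trained model: one weight per token id.
WEIGHTS = {2: 1.0, 3: -1.0}

def normalize(text: str) -> str:
    """Step 1: lowercase and trim the raw input."""
    return text.lower().strip()

def tokenize(text: str) -> list[str]:
    """Step 2: split into word tokens (real tokenizers split into subwords)."""
    return re.findall(r"[a-z0-9']+", text)

def encode(tokens: list[str]) -> list[int]:
    """Step 3: map tokens to integer ids, falling back to [UNK]."""
    return [VOCAB.get(tok, VOCAB["[UNK]"]) for tok in tokens]

def model(ids: list[int]) -> float:
    """Step 4: toy 'model' that sums per-token weights."""
    return sum(WEIGHTS.get(i, 0.0) for i in ids)

def postprocess(score: float) -> str:
    """Step 5: turn the raw score into a human-readable label."""
    return "POSITIVE" if score > 0 else "NEGATIVE"

STEPS: list[Callable] = [normalize, tokenize, encode, model, postprocess]

def run_pipeline(text: str) -> str:
    """Feed the output of each step into the next one."""
    return reduce(lambda acc, step: step(acc), STEPS, text)

print(run_pipeline("I LOVE this movie!"))  # POSITIVE
```

Keeping each stage a separate function mirrors why pipelines are useful: you can swap the tokenizer or the model without touching the other steps.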

What’s next for Hugging Face?

To offer more interactive experiences and communication that feels as close to human as possible, many companies are adding NLP technologies to their systems.

That’s where Hugging Face comes into the picture, and in this post we cover Hugging Face and its Transformers library in detail. Hugging Face is moving steadily closer to its goal of democratizing machine learning: making cutting-edge AI/ML accessible to everyone, without anyone having to build models from scratch.

Hugging Face: the future?

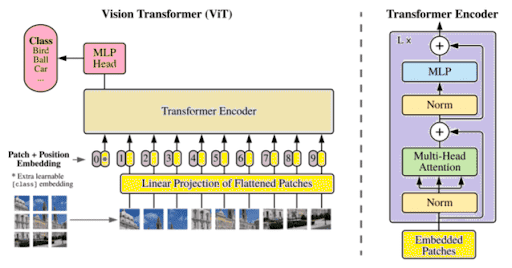

Transformers are evolving beyond NLP into other domains, becoming a general-purpose architecture for computer vision (CV), speech, and even protein structure prediction.

Hugging Face Supported Tasks

Before we dive into how Hugging Face models work under the hood, let’s look at some of the basic NLP tasks the platform supports.

Sequence Classification

The main task is to predict which of a fixed set of classes a sequence belongs to.

It is a predictive modeling problem with a massive range of applications. Real-world examples include understanding the sentiment behind a review, judging whether a sentence is grammatically correct, and detecting spam emails.
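Hugging Face exposes this task through `pipeline("text-classification")` (or `"sentiment-analysis"`), which runs a pre-trained transformer. To show what "predict the class of a sequence" means in a self-contained way, the sketch below swaps in a much older technique, multinomial Naive Bayes with add-one smoothing, applied to the spam-detection example. The training sentences and class names are hypothetical, and this is not how Hugging Face models classify text.

```python
import math
import re
from collections import Counter, defaultdict

def tokenize(text: str) -> list[str]:
    """Lowercase and split into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())

class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayes":
        self.class_counts = Counter(labels)          # documents per class
        self.word_counts = defaultdict(Counter)      # word frequencies per class
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text: str) -> str:
        total_docs = sum(self.class_counts.values())
        best_label, best_score = "", -math.inf
        for label, n_docs in self.class_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            # log P(class) + sum of log P(word | class), smoothed so unseen words are not zero
            score = math.log(n_docs / total_docs)
            for word in tokenize(text):
                score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label

# Hypothetical training data.
texts = ["win a free prize now", "claim your free money",
         "meeting moved to monday", "lunch with the team tomorrow"]
labels = ["spam", "spam", "ham", "ham"]
clf = NaiveBayes().fit(texts, labels)
print(clf.predict("free prize money"))     # spam
print(clf.predict("team meeting monday"))  # ham
```

Working in log space avoids multiplying many small probabilities, which would otherwise underflow on long sequences.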

Question Answering

Providing a suitable answer to a question, given some context.

The idea is to build a system that automatically answers questions posed by humans. Questions can be closed or open-ended, and the system should handle both. Answers can be constructed either by querying a structured database or by searching through an unstructured collection of documents.
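Hugging Face’s `pipeline("question-answering")` is extractive: it returns a span copied from the supplied context. As a rough, dependency-free illustration of answering from unstructured text, the sketch below simply returns the context sentence that shares the most content words with the question. The stop-word list, function names, and example context are hypothetical, and word overlap is a crude stand-in for what a trained model learns.

```python
import re

# Hypothetical stop-word list: words too common to help locate an answer.
STOPWORDS = {"the", "a", "an", "is", "are", "of", "in", "on", "to", "do", "does",
             "what", "who", "where", "when", "which"}

def content_words(text: str) -> set[str]:
    """Lowercase, tokenize, and drop stop words."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def answer(question: str, context: str) -> str:
    """Return the context sentence sharing the most content words with the question."""
    sentences = re.split(r"(?<=[.!?])\s+", context.strip())
    q_words = content_words(question)
    return max(sentences, key=lambda s: len(q_words & content_words(s)))

context = ("The Eiffel Tower is in Paris. Paris is the capital of France. "
           "The Louvre is a large art museum.")
print(answer("What is the capital of France?", context))
# Paris is the capital of France.
```

A real extractive model narrows the result further, to the exact answer span ("Paris") rather than the whole sentence.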

Named Entity Recognition

Named entity recognition (NER) is the task of labeling tokens as entities such as a person, an organization, or a location.

NER is used across many NLP applications to solve real-world problems. For example, one can scan news articles, extract the principal entities they mention, and sort them into predefined classes.
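Token-classification models used for NER commonly emit BIO-style labels, where `B-` marks the first token of an entity, `I-` marks a continuation, and `O` means "not an entity". The sketch below produces the same kind of output with a simple dictionary (gazetteer) lookup instead of a model, so it only finds entities it already knows. The gazetteer entries and function name are hypothetical.

```python
# Hypothetical gazetteer: lowercased entity names (as token tuples) mapped to classes.
GAZETTEER = {
    ("ada", "lovelace"): "PER",
    ("london",): "LOC",
    ("royal", "society"): "ORG",
}
MAX_LEN = max(len(name) for name in GAZETTEER)

def tag(tokens: list[str]) -> list[str]:
    """Greedy longest-match lookup that returns one BIO tag per token."""
    lowered = [t.lower() for t in tokens]
    tags = ["O"] * len(tokens)
    i = 0
    while i < len(tokens):
        # Try the longest possible entity starting at position i first.
        for n in range(min(MAX_LEN, len(tokens) - i), 0, -1):
            label = GAZETTEER.get(tuple(lowered[i:i + n]))
            if label:
                tags[i] = f"B-{label}"
                for j in range(i + 1, i + n):
                    tags[j] = f"I-{label}"
                i += n
                break
        else:
            i += 1  # no entity starts here
    return tags

tokens = "Ada Lovelace lived in London".split()
print(list(zip(tokens, tag(tokens))))
```

A trained NER model replaces the dictionary with learned context, which is what lets it recognize names it has never seen before.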

Summarization

Does anyone remember writing a summary in college?

Same task: given a document, NLP can condense it into a concise text, that is, a short, fluent, and coherent version of the longer original.

Techniques used for Summarization

- Extractive: select the most important sentences and phrases from the source text.

- Abstractive: interpret the context and generate new text that keeps the core information unchanged.
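The `summarization` pipeline in Transformers runs sequence-to-sequence models that generate text. The extractive approach is easier to show without a model: score each sentence by how frequent its words are across the whole document, then keep the top-scoring sentences in their original order. This is a classic frequency-based heuristic, not Hugging Face’s method; the stop-word list and function name are hypothetical.

```python
import re
from collections import Counter

# Hypothetical stop-word list; these words carry little topical signal.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it", "for", "on", "with"}

def content_tokens(text: str) -> list[str]:
    """Lowercase alphabetic tokens with stop words removed."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]

def summarize(text: str, n_sentences: int = 1) -> str:
    """Extractive summary: keep the n sentences with the highest average word frequency."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    freq = Counter(content_tokens(text))

    def score(sentence: str) -> float:
        tokens = content_tokens(sentence)
        # Average rather than sum, so long sentences are not favored automatically.
        return sum(freq[w] for w in tokens) / (len(tokens) or 1)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:n_sentences])  # restore original sentence order
    return " ".join(sentences[i] for i in keep)

doc = ("Transformers are neural networks. Transformers use attention to process text. "
       "The weather was nice.")
print(summarize(doc, 1))  # Transformers are neural networks.
```

Because every output sentence is copied verbatim, extractive summaries stay faithful to the source but can read choppily; that trade-off is what abstractive models try to overcome.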

Translation

Translation is the rendering of text from one language into another.

Replacing individual words is not enough, since the goal is to translate text the way a human translator would. Complex translations call for a corpus that accounts for linguistic typology (for example, differing word order) and for the translation of idioms.
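Modern translation pipelines in Transformers, such as `pipeline("translation_en_to_fr")`, use neural sequence-to-sequence models. The toy sketch below only illustrates the point made above: word-by-word replacement breaks both word order and idioms, while translating whole phrases as units fixes these particular cases. Both the tiny English-to-French lexicon and the phrase table are hypothetical and hand-written.

```python
# Hypothetical word-level English-to-French lexicon.
WORDS = {"the": "la", "red": "rouge", "car": "voiture", "it": "il", "is": "est",
         "raining": "pleuvant", "cats": "chats", "and": "et", "dogs": "chiens"}

# Hypothetical phrase table: multi-word units translated as a whole.
PHRASES = {
    ("the", "red", "car"): "la voiture rouge",  # French puts the adjective after the noun
    ("it", "is", "raining", "cats", "and", "dogs"): "il pleut des cordes",  # idiom
}
LONGEST = max(len(p) for p in PHRASES)

def word_by_word(sentence: str) -> str:
    """Replace each word independently (unknown words pass through)."""
    return " ".join(WORDS.get(w, w) for w in sentence.lower().split())

def phrase_based(sentence: str) -> str:
    """Prefer the longest known multi-word phrase, else fall back to single words."""
    tokens = sentence.lower().split()
    out, i = [], 0
    while i < len(tokens):
        for n in range(min(LONGEST, len(tokens) - i), 1, -1):
            chunk = tuple(tokens[i:i + n])
            if chunk in PHRASES:
                out.append(PHRASES[chunk])
                i += n
                break
        else:
            out.append(WORDS.get(tokens[i], tokens[i]))
            i += 1
    return " ".join(out)

print(word_by_word("the red car"))   # la rouge voiture   (wrong word order)
print(phrase_based("the red car"))   # la voiture rouge
print(word_by_word("it is raining cats and dogs"))  # il est pleuvant chats et chiens
print(phrase_based("it is raining cats and dogs"))  # il pleut des cordes
```

A hand-written phrase table cannot scale to a whole language, which is why learned models trained on large parallel corpora took over.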

Language Modeling

Language modeling is about predicting tokens in a sequence: given some context, the model predicts which words are likely to fill a gap or continue the text. Such tasks fall into two types:

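- Masked Language Modeling: predict a hidden (masked) token using the context on both sides of it.

- Causal Language Modeling: predict the next token using only the tokens that precede it.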
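In Transformers these correspond to the `fill-mask` pipeline (BERT-style models) and the `text-generation` pipeline (GPT-2-style models). The sketch below contrasts the two with simple bigram counts instead of neural networks: the causal predictor sees only the word to the left, while the masked predictor scores each candidate by how well it fits both its left and right neighbors. The corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical three-sentence training corpus.
CORPUS = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the cat lay on the rug",
]

# bigrams[a][b] = how often word b directly follows word a.
bigrams = defaultdict(Counter)
for line in CORPUS:
    tokens = line.split()
    for a, b in zip(tokens, tokens[1:]):
        bigrams[a][b] += 1

VOCAB = sorted({w for line in CORPUS for w in line.split()})

def follows(a: str, b: str) -> int:
    """Bigram count without inserting new keys into the defaultdict."""
    return bigrams[a][b] if a in bigrams else 0

def predict_next(prefix: str) -> str:
    """Causal LM: only the left context (here, just the last word) is visible."""
    last = prefix.split()[-1]
    if last not in bigrams:
        raise KeyError(f"no continuation seen for {last!r}")
    return bigrams[last].most_common(1)[0][0]

def fill_mask(sentence: str, mask: str = "[MASK]") -> str:
    """Masked LM: score each candidate using the words on BOTH sides of the mask."""
    tokens = sentence.split()
    i = tokens.index(mask)
    if i == 0 or i == len(tokens) - 1:
        raise ValueError("this toy model needs a word on each side of the mask")
    left, right = tokens[i - 1], tokens[i + 1]
    return max(VOCAB, key=lambda w: follows(left, w) * follows(w, right))

print(predict_next("the cat sat on"))             # the
print(fill_mask("the [MASK] sat on the mat"))     # cat
print(fill_mask("the cat [MASK] on the rug"))     # sat
```

The right-hand context is what makes masked models strong at understanding tasks, while causal models, which never look ahead, are the natural fit for generating text one token at a time.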

But that’s not all.

The tasks go beyond written text: the same ecosystem also covers computer vision, speech recognition, transcript generation, and more. Even after extensive research, such tasks remain hard for machines, which is where Hugging Face Transformers and pipelines come into action.

Want to learn more, or need access to a fantastic repository of Hugging Face knowledge? Head to Qwak!