In an era where conversational AI is rapidly evolving, VideoSDK has introduced Namo-Turn-Detection-v1 (Namo-v1), an open-source semantic turn detection model designed to change how AI voice agents determine when a person has finished speaking.

Unlike conventional Voice Activity Detection (VAD) systems, which rely solely on silence gaps or audio energy levels, Namo-v1 interprets the meaning of what is being said in real time. Rather than only listening for pauses, it evaluates whether the speaker's words form a complete thought. This semantic layer enables smoother, faster, and more natural conversations between humans and AI.

Breaking the Latency Barrier in Voice AI

For years, developers have battled the frustrating trade-off between “cutting users off too early” and “waiting too long” for responses. Silence-based VAD systems often feel robotic, while ASR endpointing models depend on punctuation or pauses that vary wildly by language.

VideoSDK’s Namo-v1 addresses this with semantic understanding. By analyzing live ASR transcripts, it identifies whether a thought is complete or still ongoing, which yields snappier responses, fewer interruptions, and a consistent user experience across languages.
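The decision described above can be sketched as a small policy that combines a pause with a semantic completeness score. This is a minimal illustration under stated assumptions: `should_respond`, `TurnPolicy`, its thresholds, and `toy_scorer` are all hypothetical, and the toy scorer is a keyword heuristic standing in for the actual Namo-v1 classifier, whose real interface is covered in the documentation linked at the end of this article.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical signature: maps the running ASR transcript to the probability
# (0.0 to 1.0) that the speaker has finished their turn.
EndOfTurnScorer = Callable[[str], float]

# Words that usually signal an unfinished thought (toy heuristic only).
UNFINISHED_ENDINGS = {"and", "but", "so", "because", "um", "uh", "the", "to"}


def toy_scorer(transcript: str) -> float:
    """Placeholder standing in for a semantic model such as Namo-v1.

    This is NOT the real model: it only lowers the score when the transcript
    ends in a conjunction, article, or filler word.
    """
    words = transcript.lower().rstrip(" .,?!").split()
    if not words:
        return 0.0
    return 0.1 if words[-1] in UNFINISHED_ENDINGS else 0.9


@dataclass
class TurnPolicy:
    threshold: float = 0.5      # assumed cut-off for "turn is complete"
    min_silence_ms: int = 200   # short pause required before consulting the scorer
    max_silence_ms: int = 2000  # fallback: respond after a long pause regardless


def should_respond(transcript: str, silence_ms: int,
                   scorer: EndOfTurnScorer, policy: TurnPolicy) -> bool:
    """Return True when the agent should start speaking."""
    if silence_ms >= policy.max_silence_ms:
        return True   # silence-only fallback, like classic VAD endpointing
    if silence_ms < policy.min_silence_ms:
        return False  # the user is still actively speaking
    return scorer(transcript) >= policy.threshold


policy = TurnPolicy()
print(should_respond("I want to book a flight to", 400, toy_scorer, policy))        # False
print(should_respond("I want to book a flight to Paris", 400, toy_scorer, policy))  # True
```

Keeping a long-silence fallback means a low completeness score can delay a reply but never stall the conversation indefinitely.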

Engineered for Real-Time, Global Use

Optimized with ONNX quantization, Namo-v1 achieves inference times of under 19 ms for specialized single-language models and under 29 ms for multilingual variants. Despite its lightweight design (roughly 135 MB to 295 MB depending on the variant), it reaches up to 97.3% accuracy with the specialized models and an average of 90.25% with the multilingual model across 23 supported languages.

This makes Namo-v1 not only faster but also highly scalable for production environments, from contact centers and voice assistants to gaming, telephony, and embedded devices.

Key Features at a Glance:

- Semantic Intelligence: Understands language meaning, not just silence patterns

- Ultra-Fast Inference: under 19 ms (specialized) to under 29 ms (multilingual) inference latency on standard hardware

- Multilingual Coverage: 23 languages supported out of the box

- Enterprise-Ready: ONNX-optimized and plug-and-play with VideoSDK Agents SDK

- High Accuracy: 97.3% for specialized models; 90.25% average for multilingual

Benchmarks that Redefine Efficiency

Performance benchmarks show Namo-v1 achieving a 2.5× speedup over standard models and doubling throughput while maintaining near-identical accuracy. Specialized models such as Korean and Turkish reach 97%+ accuracy, while the multilingual model averages 90.25% accuracy across 23 languages, including English, Hindi, Japanese, and German.
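To check latency and speedup figures like these on your own hardware, a small timing harness is usually enough. The sketch below is a generic, hypothetical helper: `measure_latency_ms`, `summarize`, and `speedup` are illustrative names, not part of VideoSDK's tooling, and `infer` stands for whatever callable wraps the model you load. The throughput value is a single-stream estimate (1000 divided by median latency), not a batched measurement.

```python
import statistics
import time
from typing import Callable, Dict, List


def measure_latency_ms(infer: Callable[[str], object], inputs: List[str],
                       warmup: int = 3) -> List[float]:
    """Time each call to `infer` in milliseconds, after a few untimed warm-up calls."""
    for text in inputs[:warmup]:
        infer(text)  # warm-up: lets caches and lazy initialization settle
    samples = []
    for text in inputs:
        start = time.perf_counter()
        infer(text)
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def summarize(samples_ms: List[float]) -> Dict[str, float]:
    """Median and nearest-rank p95 latency plus a single-stream throughput estimate."""
    ordered = sorted(samples_ms)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) using integer math
    median = statistics.median(ordered)
    return {
        "median_ms": median,
        "p95_ms": ordered[rank - 1],
        "throughput_per_s": 1000.0 / median,  # sequential requests only
    }


def speedup(baseline_ms: float, optimized_ms: float) -> float:
    """How many times faster the optimized model is than the baseline."""
    return baseline_ms / optimized_ms
```

For example, a hypothetical baseline at 50 ms per call compared with an optimized model at 20 ms gives `speedup(50.0, 20.0) == 2.5`. Reporting p95 alongside the median matters for voice agents, since occasional slow calls are what users notice as awkward pauses.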

Open-Source, Developer-Friendly Integration

In line with VideoSDK’s commitment to open collaboration, Namo-v1 is fully open source and available on Hugging Face and GitHub. Developers can experiment with provided inference scripts, Colab notebooks, and documentation to fine-tune models for their own applications.

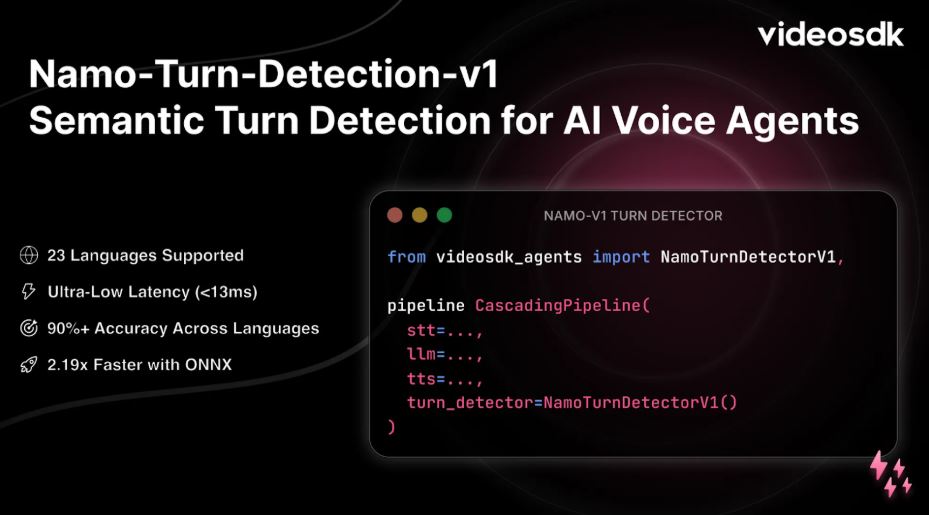

Integrating Namo-v1 into your agent pipeline takes only a few lines of code using VideoSDK’s Agents SDK, making it a drop-in replacement for legacy VAD systems.
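The "drop-in replacement" idea rests on a shared interface: the pipeline calls one method to ask whether the user's turn is over, and the implementation behind it can be swapped. The sketch below illustrates that pattern in plain Python under stated assumptions. `TurnDetector`, `SilenceVAD`, `SemanticDetector`, and `VoiceAgent` are hypothetical names, not VideoSDK Agents SDK classes, and the injected `scorer` callable stands in for the real model; the actual integration API is described in the documentation linked at the end of this article.

```python
from typing import Callable, Protocol


class TurnDetector(Protocol):
    """Hypothetical interface the agent pipeline depends on."""

    def is_turn_complete(self, transcript: str, silence_ms: int) -> bool: ...


class SilenceVAD:
    """Legacy behaviour: end the turn after a fixed pause, ignoring the words."""

    def __init__(self, silence_threshold_ms: int = 800) -> None:
        self.silence_threshold_ms = silence_threshold_ms

    def is_turn_complete(self, transcript: str, silence_ms: int) -> bool:
        return silence_ms >= self.silence_threshold_ms


class SemanticDetector:
    """Semantic behaviour: after a short pause, defer to a completeness scorer."""

    def __init__(self, scorer: Callable[[str], float],
                 threshold: float = 0.5, min_silence_ms: int = 200) -> None:
        self.scorer = scorer  # stand-in for the real model's inference call
        self.threshold = threshold
        self.min_silence_ms = min_silence_ms

    def is_turn_complete(self, transcript: str, silence_ms: int) -> bool:
        return (silence_ms >= self.min_silence_ms
                and self.scorer(transcript) >= self.threshold)


class VoiceAgent:
    """Pipeline code that only knows about the TurnDetector interface."""

    def __init__(self, detector: TurnDetector) -> None:
        self.detector = detector

    def on_pause(self, transcript: str, silence_ms: int) -> str:
        if self.detector.is_turn_complete(transcript, silence_ms):
            return "respond"
        return "keep listening"
```

Swapping `VoiceAgent(SilenceVAD())` for `VoiceAgent(SemanticDetector(scorer=...))` changes no other pipeline code, which is what lets a semantic detector reply sooner after a complete sentence and hold back after an unfinished one.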

Toward Truly Human AI Conversations

VideoSDK envisions a world where AI conversations no longer feel mechanical. With Namo-Turn-Detection-v1, the company aims to close the remaining gap between human and machine communication by enabling real-time, context-aware, and emotionally intelligent interactions.

Future updates will expand into multi-speaker turn-taking, hybrid semantic-prosodic models, and adaptive confidence scoring, ensuring that Namo continues to evolve alongside the next generation of conversational AI systems.

“Namo-v1 represents a leap forward in natural conversational AI,” said the VideoSDK Team. “By bringing semantics into turn detection, we’re helping developers eliminate awkward pauses and interruptions — making AI truly conversational.”

To explore the models and start experimenting, visit:

- Documentation: https://docs.videosdk.live/ai_agents/core-components/turn-detection-and-vad

- Hugging Face Models: https://huggingface.co/videosdk-live/models

- GitHub Repository: https://github.com/videosdk-live/NAMO-Turn-Detector-v1

—————————————————————————–

About VideoSDK

VideoSDK is a developer-first platform enabling scalable, real-time video and voice experiences. From live streaming and video calls to AI voice agents and telephony, VideoSDK provides the infrastructure for building intelligent, interactive, and human-like communication systems.