Abstract

Automated systems are transforming decision-making in industries like finance, insurance, and payments. While these systems improve efficiency, they can also lead to biased outcomes, a lack of transparency, and decisions that are difficult to explain. This article explores what makes these systems trustworthy by highlighting real-world examples of biased models and explaining why fairness and transparency are essential. It also introduces key tools for detecting and reducing bias, looks at how companies are adopting better practices, and reviews new regulations shaping the industry. Drawing from my experience in the payments domain, I provide practical steps for building systems that are accurate, fair, and easy to understand.

Your credit score, job application, and identity verification may already be decided by an AI model. You don’t know how it works, can’t question its logic, and have no clear way to appeal its decisions.

As AI systems take on more responsibility in finance, hiring, and security, the impact on individuals is real. These models influence who gets approved, who gets flagged, and who gets left behind. The challenge isn’t just building accurate systems. It’s building ones that are also fair, explainable, and accountable.

In this article, I’ll explore what makes AI trustworthy in high-stakes environments. You’ll see where bias shows up in real-world systems, how explainability supports compliance and trust, and which tools and frameworks are most effective for evaluating fairness.

Drawing from my experience working in the payments domain, I’ll outline practical steps to align AI development with regulatory and ethical standards.

If you’re working with AI, or relying on systems that use it, you need to know how these decisions are made and how to make them better.

Why Fair AI Matters

AI systems learn from the data they’re trained on. If that data reflects historical inequalities, the model is likely to reflect them too.

Without safeguards, this can lead to outcomes that reinforce discrimination or exclusion. And when these decisions affect access to credit, employment, or identity verification, the consequences are serious.

Consider these real-world examples:

- Credit scoring disparities: A 2021 MIT Technology Review investigation found that Black and Hispanic mortgage applicants were more likely to be denied loans by AI-powered models—even when controlling for financial indicators. Historical bias in credit data played a major role.

- Bias in hiring algorithms: Amazon retired an experimental hiring model after discovering it consistently downgraded resumes that included the word “women’s,” mirroring past gender biases in the tech industry (Reuters).

- Facial recognition inaccuracy: A study from MIT Media Lab found that commercial facial analysis systems misclassified the gender of darker-skinned women up to 35 percent of the time, compared to less than 1 percent for lighter-skinned men.

These aren’t theoretical risks. They’re examples of how biased AI can disadvantage entire groups, even when that was never the intent.

Why Explainability Is Essential

In regulated domains like banking and hiring, explainability isn’t a luxury. It is a requirement.

If a loan is denied or a fraud alert is triggered, users and regulators want to know why. Without transparency, companies struggle to justify their decisions—and customers lose confidence in the system.

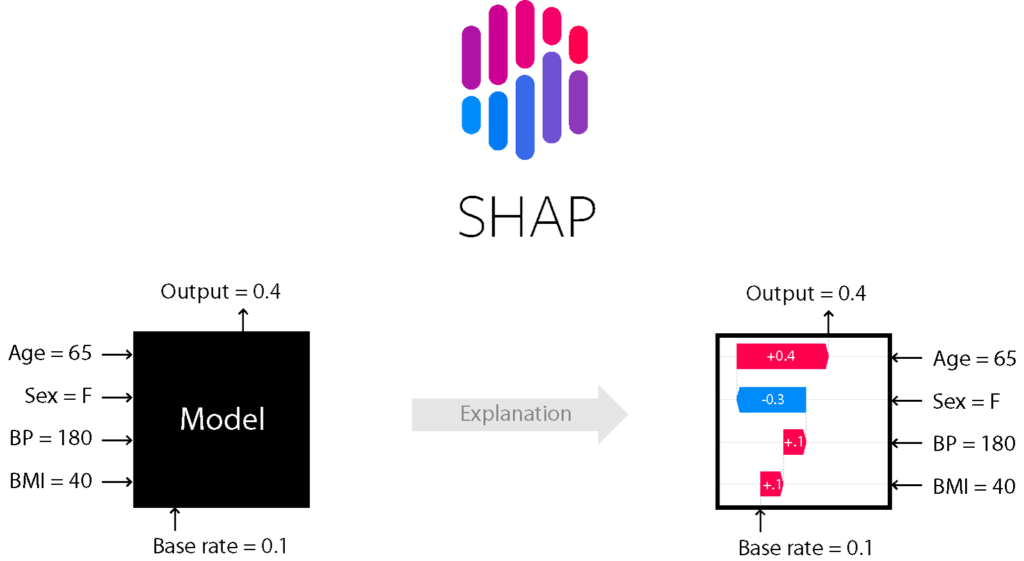

In my experience working in regulated industries (such as insurance), explainability plays a key role in ensuring that AI systems remain accountable and defensible. We’ve integrated explainability into model workflows using tools like SHAP (SHapley Additive Explanations). SHAP breaks down model predictions by showing the contribution of each input feature. For example, in a fraud detection model, we can identify whether transaction amount, location, or merchant behavior led to the alert.
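To make the idea of per-feature contributions concrete, here is a minimal sketch of the Shapley value calculation that SHAP is based on. It does not use the SHAP library or reproduce its API. The `fraud_score` function, its weights, the feature names, and the baseline transaction are all hypothetical and chosen only for illustration.

```python
from itertools import combinations
from math import factorial

# Hypothetical toy fraud score; features and weights are illustrative only.
def fraud_score(features):
    score = 0.0
    if features["amount"] > 1000:
        score += 0.4
    if features["foreign_location"]:
        score += 0.3
    # Interaction: a large amount at a new merchant adds extra risk.
    if features["amount"] > 1000 and features["new_merchant"]:
        score += 0.2
    return score

def shapley_values(model, instance, baseline):
    """Exact Shapley values: each feature's weighted average marginal
    contribution over all subsets of the other features, measured
    relative to a baseline input."""
    names = list(instance)
    n = len(names)

    def value(subset):
        # Features in `subset` take the instance's values; the rest stay at baseline.
        mixed = {k: (instance[k] if k in subset else baseline[k]) for k in names}
        return model(mixed)

    result = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                chosen = set(subset)
                total += weight * (value(chosen | {name}) - value(chosen))
        result[name] = total
    return result

# Assumed "typical" transaction used as the reference point.
baseline = {"amount": 50, "foreign_location": False, "new_merchant": False}
# Assumed transaction that triggered an alert.
flagged = {"amount": 2500, "foreign_location": True, "new_merchant": True}

contributions = shapley_values(fraud_score, flagged, baseline)
for name, contribution in sorted(contributions.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {contribution:+.3f}")
```

The contributions add up to the difference between the flagged transaction's score and the baseline score, which is what lets a reviewer say how much each feature pushed the alert. Exact enumeration like this only works for a handful of features; libraries such as SHAP rely on approximations and model-specific algorithms to scale.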

Explainability supports:

- Compliance with data protection and consumer reporting laws like GDPR and the Fair Credit Reporting Act

- Internal review, allowing product and legal teams to audit model behavior

- Customer trust, by providing transparent reasons behind automated outcomes

A model that can’t explain itself may be accurate, but it won’t be trusted.

Tools for Building Fair and Transparent AI

You can’t fix what you can’t measure. And when it comes to fairness in AI, guessing isn’t good enough.

That’s why I rely on a set of tools tested in real-world settings that help identify bias, explain decisions, and balance accuracy with accountability. Some are better suited for audits. Others shine in stakeholder demos. What matters is knowing which tool to use, and when.

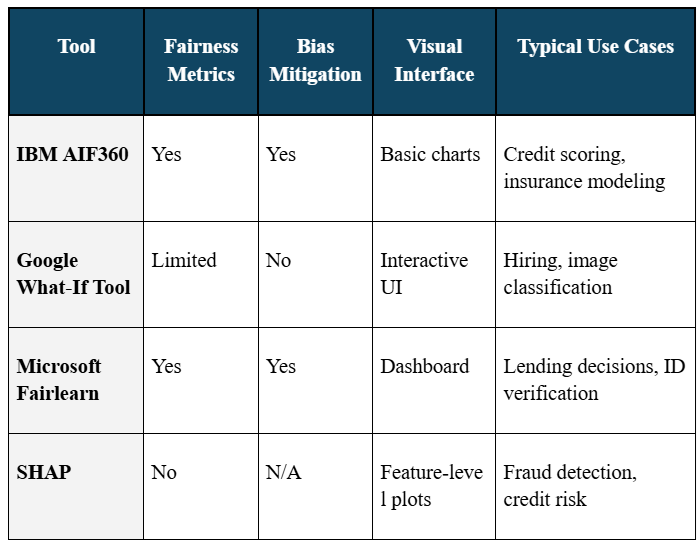

A Practical Comparison of Fairness and Explainability Tools

Here’s how I think about them:

- AIF360 gives you a wide lens on bias in structured data. It’s one of the best for deep-dive audits.

- What-If Tool is great when you need a fast, visual way to test “what happens if…?”

- Fairlearn helps you put fairness constraints directly into training—ideal when you need both compliance and control.

- SHAP doesn’t measure fairness, but it makes your model decisions transparent at a granular level, which is often just as important.

No single tool will solve everything. But together, they give you what you need to move from good intentions to responsible implementation.
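As a rough illustration of what "measuring" fairness can look like, the sketch below computes two common group fairness metrics by hand: the demographic parity difference and the disparate impact ratio. Toolkits like AIF360 and Fairlearn report metrics of this kind, but this example uses plain Python rather than either library. The decisions, the group labels, and the 0.8 threshold (a commonly cited "four-fifths" rule of thumb) are assumptions for the example, not a legal test.

```python
from collections import defaultdict

def selection_rates(decisions, groups):
    """Share of positive decisions (1 = approved) within each group."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for decision, group in zip(decisions, groups):
        total[group] += 1
        approved[group] += decision
    return {g: approved[g] / total[g] for g in total}

def demographic_parity_difference(rates):
    """Gap between the highest and lowest group selection rates (0 = parity)."""
    return max(rates.values()) - min(rates.values())

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1 = parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Hypothetical loan decisions for two illustrative groups.
decisions = [1, 1, 1, 1, 0, 1, 0, 0, 0, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_difference(rates)
ratio = disparate_impact_ratio(rates)

print("selection rates:", rates)
print(f"demographic parity difference: {gap:.2f}")
print(f"disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:  # assumed rule-of-thumb threshold
    print("Ratio is below 0.8; this model would warrant a closer look.")
```

A single number like this does not prove or rule out discrimination, but it gives audits a consistent starting point and a value that can be tracked over time.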

Industry Adoption of Responsible AI Practices

Organizations across sectors are starting to embed fairness and transparency into how they design and deploy AI.

- DeepMind is conducting research on differential privacy and federated learning to reduce dependency on centralized personal data while maintaining performance.

- OpenAI has implemented Reinforcement Learning from Human Feedback (RLHF) in training large language models, helping align AI-generated outputs with human values.

- Meta has taken steps to reduce bias in its ad delivery algorithms after regulators raised concerns over disparities in who sees job and housing ads.

- In my experience working in the payments domain, I’ve seen how fairness checks can be embedded across fraud detection systems. These systems operate at scale, so it’s important they perform consistently across age, gender, and geography.

Across the board, responsible AI is becoming part of how leading companies measure quality, not just compliance.

The Role of Regulation

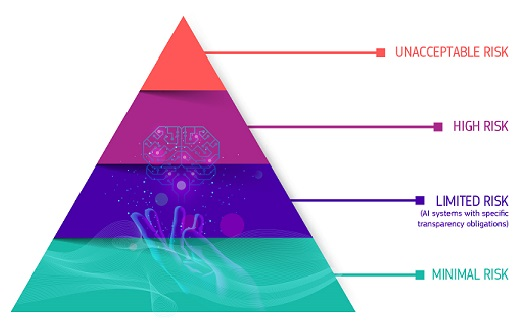

Governments and international bodies are developing guidelines to ensure AI systems operate within clear boundaries—especially when they affect human rights, finance, or safety.

Key frameworks include:

- The European Union AI Act: This proposed law classifies AI systems by risk category. High-risk systems, such as those used in hiring or lending, would be subject to stricter requirements for transparency, fairness, and documentation.

- The U.S. Blueprint for an AI Bill of Rights: Released by the White House in 2022, it outlines non-binding principles for safe and non-discriminatory AI. It emphasizes notice, explanation, and opt-out options for individuals.

- NIST AI Risk Management Framework: This voluntary guide by the National Institute of Standards and Technology helps organizations evaluate, document, and reduce risks in AI systems.

These frameworks are helping companies shift from asking, “Can we deploy this model?” to “Should we—and if so, how?”

Recommendations for Building Trustworthy AI

Based on my experience working on AI systems in fraud detection and credit risk, these five practices are essential for building models that are not only accurate but also fair, explainable, and resilient over time:

1) Audit for bias regularly: Use tools like AIF360 or Fairlearn to evaluate whether your models produce uneven outcomes across demographic groups. Conduct these audits both before deployment and as part of ongoing monitoring.

2) Make transparency part of the design: Incorporate explainability tools such as SHAP early in the development process. Clear, interpretable outputs are critical for internal stakeholders, external partners, and regulators alike.

3) Use diverse and up-to-date training data: Ensure your datasets represent the populations your model is intended to serve. Revisit and update them regularly to reflect changes in user behavior, demographics, or context.

4) Maintain human oversight: In high-impact applications like credit approvals or fraud detection, human reviewers should remain part of the process. Their judgment is essential for handling exceptions and reviewing edge cases.

5) Prepare for regulation: Keep clear documentation of how your models are built, what risks have been identified, and how fairness is being measured. Treat this documentation as an active part of your product lifecycle—not a one-time task.
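The first recommendation, recurring bias audits, can be sketched as a small check that runs on each batch of labeled outcomes. The example below compares false positive rates for a fraud model across groups and marks the batch for review when the gap is too large. The record layout, the age bands, and the `max_gap` threshold of 0.05 are hypothetical; a real audit would use the metrics and tolerances your compliance team has agreed on.

```python
from collections import defaultdict

def false_positive_rates(records):
    """Per-group share of legitimate transactions that were wrongly flagged.

    Each record is (group, is_fraud, was_flagged) with 0/1 values.
    """
    false_positives = defaultdict(int)
    legitimate = defaultdict(int)
    for group, is_fraud, was_flagged in records:
        if is_fraud == 0:
            legitimate[group] += 1
            false_positives[group] += was_flagged
    return {g: false_positives[g] / legitimate[g] for g in legitimate}

def audit_fpr_gap(records, max_gap=0.05):
    """Return per-group rates, the largest gap, and whether review is needed."""
    rates = false_positive_rates(records)
    gap = max(rates.values()) - min(rates.values())
    return {"rates": rates, "gap": gap, "needs_review": gap > max_gap}

# Hypothetical labeled outcomes from one monitoring window.
records = [
    ("18-30", 0, 1), ("18-30", 0, 1), ("18-30", 0, 0), ("18-30", 0, 0), ("18-30", 1, 1),
    ("31-50", 0, 1), ("31-50", 0, 0), ("31-50", 0, 0), ("31-50", 0, 0), ("31-50", 1, 1),
]

report = audit_fpr_gap(records)
print(report)
```

Storing each report alongside the model version also gives you part of the documentation trail described in the fifth recommendation.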

The Path Forward: Designing AI That Deserves Trust

AI is no longer experimental or isolated to niche applications. It is built into how we assess credit, screen candidates, verify identities, and deliver critical services. These systems do more than process information. They shape decisions with real consequences.

In my work across fraud detection and credit risk modeling, I’ve seen how a technically strong model can fall short if it lacks fairness, transparency, or explainability. A model that performs well in aggregate but creates disparities across user groups is not serving its purpose.

Fairness and transparency should be treated as core design requirements. They are essential for regulatory compliance, customer confidence, and long-term performance.

Organizations that take these responsibilities seriously will not only reduce risk. They will be better prepared to meet future standards, answer difficult questions, and earn trust in a competitive landscape.

AI doesn’t need to be perfect. But it must be accountable, explainable, and designed to treat people fairly and consistently.

That is how we build systems that deliver value while supporting the people who rely on them most.

References

- MIT Technology Review (2021). Black and Hispanic mortgage applicants were more likely to be denied loans by AI-powered models, even when their financial profiles were similar to white applicants.

https://news.mit.edu/2022/machine-learning-model-discrimination-lending-0330

- Reuters (2018). Amazon scrapped an AI hiring tool after it showed bias against female candidates, reflecting historical gender imbalance in the tech industry.

https://www.reuters.com/article/world/insight-amazon-scraps-secret-ai-recruiting-tool-that-showed-bias-against-women-idUSKCN1MK0AG/

- MIT Media Lab (2018). A study on commercial facial analysis systems found error rates as high as 35% for darker-skinned women, compared to less than 1% for lighter-skinned men.

https://www.media.mit.edu/articles/study-finds-gender-and-skin-type-bias-in-commercial-artificial-intelligence-systems/

- White House OSTP (2022). The U.S. AI Bill of Rights outlines key protections for individuals affected by automated systems, including notice, explanation, and opt-out rights.

https://www.weforum.org/stories/2022/10/understanding-the-ai-bill-of-rights-protection/

- European Commission (n.d.). The EU AI Act proposes a risk-based framework for regulating AI systems, with specific obligations for high-risk applications.

https://artificialintelligenceact.eu/

- NIST (2023). The AI Risk Management Framework provides guidance for developing trustworthy AI through risk identification, documentation, and continuous evaluation.

https://www.nist.gov/itl/ai-risk-management-framework