Introduction:

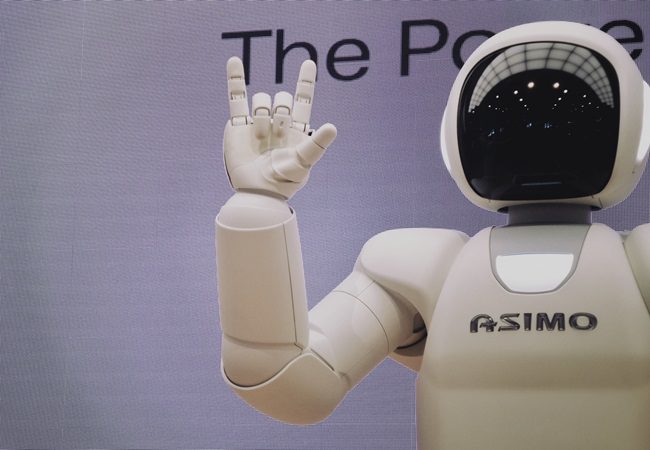

Artificial Intelligence (AI) has progressed rapidly, taking on tasks that once required human intelligence through a complex web of algorithms and models. At the heart of this progress lie neural networks, computational models loosely inspired by the human brain. In this exploration, we will take a deep dive into how neural networks are built, how they learn, and how they contribute to the evolution of AI.

Understanding Neural Networks:

Imagine an intelligent system capable of learning from vast amounts of data, recognizing patterns, and making decisions without explicit programming. This is the essence of neural networks, computational models inspired by the interconnected neurons of the human brain. Neural networks consist of layers of nodes, or artificial neurons, each layer contributing to the overall learning process.

In short, neural networks are the foundation of deep learning, one of the most influential branches of machine learning. They’re the powerhouse behind image recognition, natural language processing, and other complex tasks that were once the exclusive domain of human intelligence.

Layers of Learning:

Input, Hidden, and Output:

Neural networks consist of three main types of layers: the input layer, hidden layers, and the output layer. The input layer receives data, whether it’s an image, text, or any other form of information. The hidden layers process this data, extracting features and patterns. Finally, the output layer produces the result, whether it’s identifying an object in an image or translating text from one language to another.

Each connection between nodes in adjacent layers has a weight, which determines how strongly one node’s output influences the next. During training, these weights are adjusted to reduce the network’s prediction error, fine-tuning its ability to make accurate predictions or classifications.
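To make the flow from input to output concrete, here is a minimal sketch of a forward pass in plain Python. The weights are hand-picked, hypothetical values rather than trained ones, and the bias terms and the ReLU step in the hidden layer are assumptions added for illustration.

```python
def dense_layer(inputs, weights, biases):
    """Compute one weighted sum per neuron (one row of weights per neuron)."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def relu(values):
    """Keep positive values and replace negative ones with zero."""
    return [max(0.0, v) for v in values]


def forward(inputs, hidden_w, hidden_b, output_w, output_b):
    """Input layer -> one hidden layer (with ReLU) -> output layer."""
    hidden = relu(dense_layer(inputs, hidden_w, hidden_b))
    return dense_layer(hidden, output_w, output_b)


# Hypothetical weights: 2 inputs, 2 hidden neurons, 1 output neuron.
hidden_w = [[0.5, -1.0], [1.0, 1.0]]
hidden_b = [0.0, -1.0]
output_w = [[1.0, 2.0]]
output_b = [0.5]

prediction = forward([2.0, 1.0], hidden_w, hidden_b, output_w, output_b)
print(prediction)  # [4.5]
```

Changing any single weight changes the prediction, which is exactly the lever that training pulls.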

Activation Functions:

Firing Up Neurons:

Activation functions are crucial elements within each artificial neuron, determining how strongly it “fires” in response to its weighted inputs. These functions introduce non-linearities to the neural network, allowing it to learn complex relationships and representations. Without them, any stack of layers would collapse into a single linear transformation. Common activation functions include sigmoid, hyperbolic tangent (tanh), and rectified linear unit (ReLU).
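The three functions named above are short enough to write out directly. The sketch below uses only Python's standard library. The two-branch form of sigmoid is an implementation choice made here to avoid overflow for large negative inputs, and the sample inputs are arbitrary.

```python
import math


def sigmoid(x):
    """Squash x into the range (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)  # for negative x, exp(x) cannot overflow
    return e / (1.0 + e)


def tanh(x):
    """Squash x into the range (-1, 1)."""
    return math.tanh(x)


def relu(x):
    """Pass positive values through unchanged and clamp negatives to zero."""
    return max(0.0, x)


# Compare the three functions on a few arbitrary sample inputs.
table = {x: (sigmoid(x), tanh(x), relu(x)) for x in (-2.0, 0.0, 2.0)}
for x, (s, t, r) in table.items():
    print(f"x={x:+.1f}  sigmoid={s:.3f}  tanh={t:+.3f}  relu={r:.1f}")
```

Sigmoid and tanh saturate for large inputs, while ReLU keeps growing for positive ones, which is one reason ReLU is a common default in deep networks.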

Training Process:

Iterative Learning:

The magic happens during the training process, where the neural network learns from labeled data to improve its performance. This iterative learning involves presenting the network with examples, comparing its predictions to the actual outcomes, and adjusting the weights accordingly. The goal is to minimize the difference between the predicted and actual outputs, refining the model’s ability to generalize and make accurate predictions on new, unseen data.
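The loop described above can be sketched with the smallest possible model: a single neuron with one weight and one bias, trained by gradient descent on mean squared error. The dataset, the learning rate, and the number of epochs are all hypothetical choices for illustration.

```python
# Labeled examples (input, target) drawn from the hypothetical rule y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]


def mean_squared_error(w, b):
    """Average squared difference between predictions and targets."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


w, b = 0.0, 0.0          # start from an uninformed model
learning_rate = 0.05     # assumed step size
initial_loss = mean_squared_error(w, b)

for epoch in range(2000):
    # Gradient of the mean squared error with respect to w and b.
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
    # Move each parameter a small step against its gradient.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

final_loss = mean_squared_error(w, b)
print(f"w={w:.3f} b={b:.3f} loss {initial_loss:.3f} -> {final_loss:.6f}")
```

After training, `w` and `b` land close to 2 and 1, the rule that generated the data. A real project would also hold out some examples to check how well the model handles data it has not seen.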

Backpropagation:

Fine-Tuning for Precision:

Backpropagation is the engine that drives the refinement of neural networks. It works out how much each weight contributed to the error by starting at the output layer and propagating the error backward through the hidden layers, applying the chain rule at each step. The result is the gradient of the error with respect to every weight. An optimizer such as gradient descent then nudges each weight in the direction that reduces the error. Repeated over many examples, this feedback loop is the essence of how neural networks learn and improve over time.
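As a rough illustration, the sketch below runs backpropagation by hand on a tiny network with one sigmoid hidden neuron and one linear output neuron. The network shape, the starting weights, and the squared-error loss are assumptions chosen to keep the chain rule readable. The result is compared against a numerical estimate of the same gradients as a sanity check.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w1, w2):
    h = sigmoid(w1 * x)  # hidden activation
    y_hat = w2 * h       # linear output
    return h, y_hat


def loss(x, y, w1, w2):
    _, y_hat = forward(x, w1, w2)
    return 0.5 * (y_hat - y) ** 2


def backprop(x, y, w1, w2):
    """Return (dL/dw1, dL/dw2) using the chain rule, output layer first."""
    h, y_hat = forward(x, w1, w2)
    d_y_hat = y_hat - y        # error at the output
    grad_w2 = d_y_hat * h      # gradient for the output weight
    d_h = d_y_hat * w2         # error propagated back to the hidden neuron
    d_z = d_h * h * (1.0 - h)  # pass through the sigmoid derivative
    grad_w1 = d_z * x          # gradient for the hidden weight
    return grad_w1, grad_w2


# Hypothetical example and starting weights.
x, y, w1, w2 = 1.5, 1.0, 0.4, -0.3
grad_w1, grad_w2 = backprop(x, y, w1, w2)

# Numerical check: nudge each weight slightly and measure the change in loss.
eps = 1e-6
numeric_w1 = (loss(x, y, w1 + eps, w2) - loss(x, y, w1 - eps, w2)) / (2 * eps)
numeric_w2 = (loss(x, y, w1, w2 + eps) - loss(x, y, w1, w2 - eps)) / (2 * eps)

# One gradient descent step using the backpropagated gradients.
loss_before = loss(x, y, w1, w2)
new_w1, new_w2 = w1 - 0.1 * grad_w1, w2 - 0.1 * grad_w2
loss_after = loss(x, y, new_w1, new_w2)
print(loss_before, loss_after)
```

Deep learning frameworks automate this bookkeeping, but the principle is the same: each layer passes the error to the layer before it.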

Overfitting and Regularization:

Striking a Balance:

While the training process enhances a neural network’s ability to make accurate predictions, there’s a risk of overfitting. Overfitting occurs when a model becomes too specialized in the training data and struggles to generalize to new, unseen data. Regularization techniques help strike a balance: dropout randomly disables neurons during training so the network cannot lean too heavily on any one of them, while weight decay penalizes large weights. Both discourage the model from becoming overly complex and improve its performance on data it has not seen.
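Both techniques fit in a few lines. The sketch below shows the "inverted" form of dropout, which rescales the surviving activations, and a gradient descent step with an L2 weight decay term. The function names, the drop probability, and the decay strength are hypothetical choices for illustration.

```python
import random


def dropout(activations, drop_prob, rng, training=True):
    """Inverted dropout: zero each value with probability drop_prob,
    then rescale survivors so the expected activation stays the same."""
    if not training or drop_prob == 0.0:
        return list(activations)  # dropout is switched off at prediction time
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


def sgd_step_with_weight_decay(weights, grads, learning_rate, weight_decay):
    """Gradient descent step where each weight is also pulled toward zero."""
    return [w - learning_rate * (g + weight_decay * w)
            for w, g in zip(weights, grads)]


rng = random.Random(0)  # seeded so the run is repeatable
hidden = [1.0] * 10
dropped = dropout(hidden, drop_prob=0.5, rng=rng)
print(dropped)  # every entry is either 0.0 or 2.0

# With zero gradients, weight decay alone shrinks the weights slightly.
decayed = sgd_step_with_weight_decay(
    [1.0, -2.0], [0.0, 0.0], learning_rate=0.1, weight_decay=0.01
)
print(decayed)
```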

Types of Neural Networks:

Neural networks come in various architectures, each tailored to specific tasks. Convolutional Neural Networks (CNNs) excel in image recognition, sliding small filters across an image to detect features like edges and textures. Recurrent Neural Networks (RNNs) are designed for sequential data, making them suitable for tasks like speech recognition and natural language processing. Long Short-Term Memory (LSTM) networks, a variant of RNNs, use gating mechanisms to mitigate the vanishing gradient problem, enabling them to process long sequences more effectively.
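To show what "using filters to detect features" means in practice, here is a minimal sketch of the sliding-filter operation at the core of a CNN. The tiny image and the hand-written vertical edge filter are hypothetical. In a real CNN the filter values are learned during training rather than written by hand.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image and take a weighted sum at each
    position (no padding; this is the cross-correlation CNNs compute)."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows) for j in range(k_cols))
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]


# A 3x4 image: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Responds where brightness increases from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The feature map is nonzero only in the middle column, exactly where the dark and bright regions meet.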

Transfer Learning:

Knowledge Transfer for Efficiency:

Transfer learning is a strategy that leverages pre-trained models on large datasets and adapts them to new tasks. This approach accelerates the learning process, especially when dealing with limited data for niche applications. By building upon the knowledge gained from broader datasets, transfer learning empowers AI systems to excel in specialized domains.
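One common way to apply this idea is to freeze the layers of a pre-trained model and train only a small new output layer on the target data. The sketch below imitates that pattern in plain Python. `PRETRAINED_W` is a hypothetical stand-in for weights learned on a large dataset, and the small dataset and training settings are likewise made up for illustration.

```python
# Stand-in for weights learned elsewhere on a large dataset (hypothetical).
PRETRAINED_W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]


def frozen_features(x):
    """Reused feature extractor: a ReLU layer whose weights never change."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)))
            for row in PRETRAINED_W]


def predict(head, x):
    return sum(h * f for h, f in zip(head, frozen_features(x)))


def train_head(examples, epochs=500, learning_rate=0.05):
    """Train only the new output weights; PRETRAINED_W is left untouched."""
    head = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for x, y in examples:
            feats = frozen_features(x)
            error = predict(head, x) - y
            head = [h - learning_rate * error * f
                    for h, f in zip(head, feats)]
    return head


# A small target dataset following the hypothetical rule y = x0 + x1.
small_dataset = [
    ([1.0, 0.0], 1.0),
    ([0.0, 1.0], 1.0),
    ([1.0, 1.0], 2.0),
    ([2.0, 1.0], 3.0),
]

head = train_head(small_dataset)
print(predict(head, [3.0, 2.0]))  # close to 5.0
```

Only three numbers are trained here, which is why a handful of examples is enough. The heavy lifting was done when the reused features were learned.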

Challenges in Neural Network Training:

While neural networks have propelled AI to new heights, challenges persist. Vanishing and exploding gradients, where the gradients in the backpropagation process become too small or too large, can hinder training. Researchers continue to explore novel architectures and optimization techniques to address these challenges and push the boundaries of neural network capabilities.
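A back-of-the-envelope sketch shows why depth makes gradients fragile. Backpropagation multiplies roughly one derivative factor per layer, so a factor slightly below or above 1 compounds quickly. The per-layer factors and the layer count below are illustrative assumptions. Gradient clipping, shown at the end, is one widely used mitigation for exploding gradients and is included here as an example, not as the only remedy.

```python
import math


def gradient_scale_through_layers(per_layer_factor, num_layers):
    """Model backpropagation through a deep stack as a repeated product."""
    return per_layer_factor ** num_layers


# 0.25 is the largest slope the sigmoid function can have.
vanishing = gradient_scale_through_layers(0.25, 30)
# A factor of 1.5 per layer stands in for overly large weights.
exploding = gradient_scale_through_layers(1.5, 30)
print(f"vanishing: {vanishing:.2e}  exploding: {exploding:.2e}")


def clip_by_norm(grads, max_norm):
    """Rescale the gradient vector if its length exceeds max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]


clipped = clip_by_norm([30.0, 40.0], max_norm=5.0)
print(clipped)  # direction preserved, length reduced to about 5
```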

Conclusion:

Neural networks are the backbone of modern artificial intelligence, loosely modeled on the way neurons in the human brain connect. From the input layer to the output layer, from activation functions to backpropagation, each component plays a crucial role in the training process. As we continue to refine and expand our understanding of neural networks, the future holds exciting possibilities for AI applications that may come to rival human performance on a growing range of tasks.