In regulated industries, test data is no longer purely an IT issue – it is also a compliance issue. Test data has to mirror production data closely enough to support realistic testing, but it is critical that it does not expose any actual customer or employee information in non-production environments.

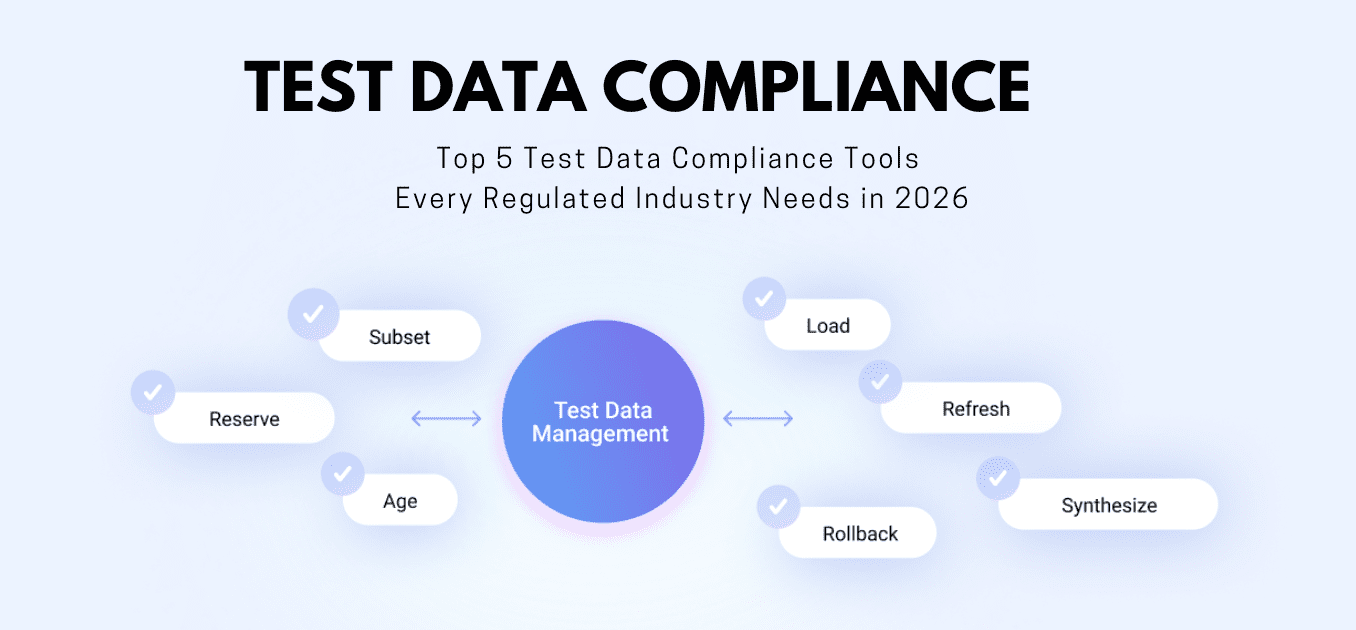

This is why modern Test Data Management (TDM) solutions have become compliance enablers. Today’s platforms do not simply copy data out of production systems; they use data masking, data subsetting, synthetic data generation, and automated data delivery to DevOps and CI/CD pipelines.

Let’s go through the top five test data management tools that organizations are using in 2026 to move fast without crossing any regulatory boundaries.

1) K2view Test Data Management

K2view takes a system-centric approach to ensuring test data compliance. Rather than treating databases as standalone entities, it builds production-like test data from a 360-degree view of systems and business entities, preserving referential integrity across sources even after masking or synthetic data generation.
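To make "preserving referential integrity after masking" concrete, here is a minimal Python sketch of one common technique, deterministic pseudonymization with a keyed hash. It is an illustration only and does not represent K2view's actual implementation. The table layouts, the `MASKING_KEY` value, and the helper names are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical secret for this demo; a real setup would load it from a secrets manager.
MASKING_KEY = b"demo-only-key"


def mask_value(value: str, prefix: str = "CUST") -> str:
    """Map a real identifier to a stable pseudonym using HMAC-SHA256."""
    digest = hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{prefix}-{digest[:12]}"


# Two illustrative "systems" that share a customer identifier.
crm_customers = [
    {"customer_id": "C1001", "name": "Alice Example"},
    {"customer_id": "C1002", "name": "Bob Example"},
]
billing_invoices = [
    {"invoice_id": "INV-1", "customer_id": "C1001", "amount": 120.0},
    {"invoice_id": "INV-2", "customer_id": "C1002", "amount": 75.5},
    {"invoice_id": "INV-3", "customer_id": "C1001", "amount": 10.0},
]


def mask_customers(rows):
    # Replace the identifier with its pseudonym and drop the real name.
    return [{"customer_id": mask_value(r["customer_id"]), "name": "REDACTED"} for r in rows]


def mask_invoices(rows):
    # Only the foreign key is sensitive here; other columns pass through unchanged.
    return [{**r, "customer_id": mask_value(r["customer_id"])} for r in rows]


masked_customers = mask_customers(crm_customers)
masked_invoices = mask_invoices(billing_invoices)
```

Because the same input always yields the same pseudonym, every masked invoice still points at a masked customer, even though the two data sets were masked independently.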

The platform auto-discovers sensitive data and applies intelligent masking to structured and unstructured data sources, then switches to AI-based synthetic data generation when real data is deemed too sensitive to use directly. Everything – data subsetting, refresh, reservation, versioning, rollback, and aging – is operated from a single, self-service environment.

K2view is built with self-service in mind, enabling QA and DevOps teams to obtain the test data they need without depending on central IT. It integrates with CI/CD pipelines and supports deployment on-premises, in the cloud, or in hybrid environments, making it a strong fit for modern, regulated enterprises.

Best for: Large-scale enterprises in regulated industries that require fast, accurate, self-service test data across complex, multi-system environments.

2) Perforce Delphix

Perforce Delphix is largely focused on automating the delivery of compliant test data within modern DevOps pipelines. Its main advantage is data virtualization, which enables users to work with safe, masked copies of production data without creating full physical replicas. This helps reduce storage usage and accelerates environment setup.
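The idea of working with a copy without creating a full physical replica can be illustrated with a toy copy-on-write structure in Python. This is a conceptual sketch only: it says nothing about how Delphix is implemented, and the `VirtualCopy` class and sample rows are hypothetical.

```python
from types import MappingProxyType


class VirtualCopy:
    """A lightweight view over a shared snapshot that stores only its own changes."""

    def __init__(self, snapshot):
        self._snapshot = snapshot  # shared, read-only masked snapshot
        self._changes = {}         # rows written by this environment
        self._deleted = set()      # keys removed by this environment

    def get(self, key):
        if key in self._deleted:
            raise KeyError(key)
        if key in self._changes:
            return dict(self._changes[key])
        return dict(self._snapshot[key])  # fall through to the shared data

    def put(self, key, row):
        self._deleted.discard(key)
        self._changes[key] = dict(row)

    def delete(self, key):
        self._changes.pop(key, None)
        self._deleted.add(key)

    def private_row_count(self):
        # Number of rows this environment stores itself.
        return len(self._changes)


# One masked snapshot, shared by every environment.
masked_snapshot = MappingProxyType({
    1: {"name": "REDACTED-1", "balance": 100},
    2: {"name": "REDACTED-2", "balance": 250},
})

qa_env = VirtualCopy(masked_snapshot)
dev_env = VirtualCopy(masked_snapshot)

qa_env.put(1, {"name": "REDACTED-1", "balance": 0})  # visible only in QA
dev_env.delete(2)                                    # visible only in dev
```

Each environment sees what looks like a full data set, but only the rows it changes take up additional space, and neither environment can affect the other or the shared snapshot.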

Because environments can be provisioned quickly and with little storage overhead, Delphix helps companies shorten test cycles and move testing earlier in the development lifecycle. Its data masking and synthetic data generation capabilities support compliance by removing or replacing sensitive values before they reach development and QA environments. However, its cost and implementation complexity make it better suited to mature, automation-driven DevOps teams than to smaller organizations.

Best for: DevOps-mature companies with extensive testing infrastructures that need virtualized, compliant test data delivered quickly into their pipelines.

3) Datprof Test Data Management

Datprof is designed for teams that want compliance without the complexity that often comes with enterprise-grade platforms. It combines data masking, subsetting, and test data delivery in a streamlined TDM solution that is easier to adopt and implement than many traditional tools.

The platform integrates into CI/CD pipelines and supports automation, allowing teams to generate smaller, right-sized data sets while adhering to data protection laws such as GDPR. Although it does not offer as many advanced features as some larger platforms, its usability and cost profile make it a valuable option for mid-market organizations that want secure test data without heavyweight infrastructure.

Best for: Medium-sized businesses needing secure, automated test data without the overhead and complexity of a typical legacy enterprise product.

4) IBM InfoSphere Optim

IBM InfoSphere Optim is a popular choice in sectors where legacy environments still exist. It is known for isolating meaningful subsets of information, applying effective data obfuscation, and creating right-sized test databases while maintaining referential integrity.
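Subsetting while maintaining referential integrity can be sketched in a few lines of Python. The example below is a generic illustration, not Optim's implementation: it assumes a hypothetical three-table schema (`customers`, `orders`, `order_items`) and follows foreign keys from the selected parents down to their children.

```python
# Hypothetical source tables, already masked.
customers = [{"id": 1}, {"id": 2}, {"id": 3}]
orders = [
    {"id": 10, "customer_id": 1},
    {"id": 11, "customer_id": 2},
    {"id": 12, "customer_id": 3},
    {"id": 13, "customer_id": 1},
]
order_items = [
    {"id": 100, "order_id": 10},
    {"id": 101, "order_id": 11},
    {"id": 102, "order_id": 13},
    {"id": 103, "order_id": 12},
]


def subset_by_customer(customers, orders, order_items, keep_customer_ids):
    """Keep the chosen customers plus every row that depends on them."""
    kept_customers = [c for c in customers if c["id"] in keep_customer_ids]
    kept_customer_ids = {c["id"] for c in kept_customers}

    # Child rows are kept only if their parent row was kept.
    kept_orders = [o for o in orders if o["customer_id"] in kept_customer_ids]
    kept_order_ids = {o["id"] for o in kept_orders}

    kept_items = [i for i in order_items if i["order_id"] in kept_order_ids]
    return kept_customers, kept_orders, kept_items


sub_customers, sub_orders, sub_items = subset_by_customer(
    customers, orders, order_items, keep_customer_ids={1}
)
```

The result is a smaller data set in which no order refers to a missing customer and no order item refers to a missing order, which is the property a right-sized test database needs.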

The product is regarded as stable and reliable, with support for a wide range of databases, operating systems, and mainframe platforms. However, its complexity and cost can make it challenging for smaller teams or organizations that need agile, DevOps-oriented technology and rapid iteration.

Best for: Large-scale organizations running legacy or mainframe systems that have long-term, regulated test data management requirements.

5) Informatica Test Data Management

Informatica Test Data Management extends Informatica’s broader data management portfolio into the test data domain. It provides discovery, masking, subsetting, and synthetic data creation through a self-service interface, helping standardize how test data is prepared and delivered across the organization.

The product integrates tightly with other Informatica tools and supports a broad set of data sources, including databases, big data platforms, and cloud systems. Although it can be more demanding to implement and run than newer, cloud-native tools, it remains a solid choice for firms already invested in the Informatica ecosystem and seeking consistent, integrated TDM capabilities.

Best for: Companies already using Informatica that require standardized, integrated test data management across multiple data platforms.

Conclusion

All five tools on this list help reduce risk and enhance testing speed – but they address different needs. IBM InfoSphere Optim and Informatica Test Data Management continue to be strong options for environments where legacy infrastructure and existing ecosystems are central. Delphix is better suited for testing in DevOps-heavy contexts where virtualized, on-demand test data is critical. Datprof is a good fit for growing teams that want compliance and automation without excessive platform complexity.

The standout tool on the list, however, is K2view. It combines self-service provisioning, intelligent masking, AI-driven synthetic data generation, and referential integrity across systems in a single platform, which makes it especially compelling for organizations in regulated industries that face strict test data compliance demands in 2026 and still need to move quickly.