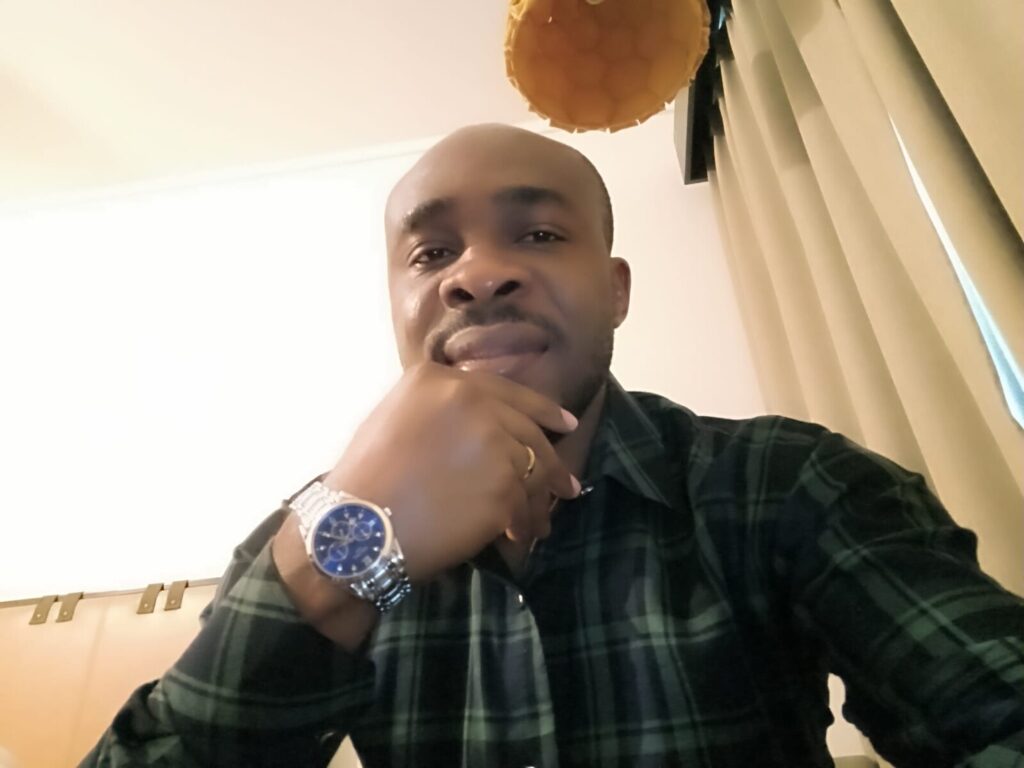

In the ever-shrinking world of nanotechnology, where devices are engineered at scales invisible to the human eye, even the smallest measurement deviation can derail an entire innovation cycle. On this high-precision frontier, Mr. Thompson Odion Igunma has emerged as a key contributor to a notable advance: a comprehensive framework for modeling nanofabrication processes and for reducing noise in metrological measurements.

His co-authored study, published in the International Journal of Multidisciplinary Research and Growth Evaluation, tackles one of the most persistent and often underestimated challenges in nanotechnology: noise. Thermal fluctuations, mechanical vibrations, quantum tunneling effects, and environmental interference have long compromised the accuracy of nanoscale measurements. In nanomanufacturing, these are not minor disruptions. Errors introduced by noise can propagate into the fabrication process itself and compromise the structural integrity of microchips, sensors, biomedical implants, and other cutting-edge nanodevices.

Thompson Odion Igunma

The research, undertaken with collaborators Zamathula Sikhakhane Nwokediegwu and Adeniyi Kehinde Adeleke, integrates advanced computational modeling, experimental validation, and machine learning. At its core is a multi-tiered solution: predictive modeling to simulate the effects of noise, real-time adaptive filtering to remove distortions during measurement, and data-driven noise suppression using artificial intelligence. The researchers also developed digital twin environments that enable real-time feedback and iterative optimization of fabrication processes, tools that are rapidly becoming essential in industrial-scale nanomanufacturing.
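The article does not say which filtering algorithm the study used. Purely as a generic illustration of what "real-time adaptive filtering" can mean, the sketch below implements a textbook least-mean-squares (LMS) noise canceller. It assumes a hypothetical auxiliary sensor (for example, an accelerometer picking up stage vibration) whose output is correlated with the noise in the measurement; the signal shapes, tap count, and step size are illustrative choices, not the authors' method or data.

```python
import math
import random

def lms_cancel(primary, reference, taps=4, mu=0.01):
    """Least-mean-squares (LMS) adaptive noise cancellation (generic textbook sketch).

    primary:   noisy measurement = true signal + noise correlated with `reference`
    reference: hypothetical auxiliary pickup of the noise source (e.g. a vibration sensor)
    Returns e[n] = primary[n] - estimated_noise[n], which converges toward the
    true signal as the filter weights adapt.
    """
    w = [0.0] * taps      # adaptive filter weights, initialised to zero
    buf = [0.0] * taps    # delay line of recent reference samples, newest first
    cleaned = []
    for d, x in zip(primary, reference):
        buf = [x] + buf[:-1]                                   # shift in the newest reference sample
        y = sum(wi * xi for wi, xi in zip(w, buf))             # filter output: estimate of the noise
        e = d - y                                              # residual: the cleaned measurement
        w = [wi + 2 * mu * e * xi for wi, xi in zip(w, buf)]   # LMS weight update
        cleaned.append(e)
    return cleaned

if __name__ == "__main__":
    rng = random.Random(0)
    n = 5000
    # Hypothetical simulation values, not data from the study:
    signal = [math.sin(2 * math.pi * k / 50) for k in range(n)]   # slowly varying profile
    ref = [rng.gauss(0.0, 1.0) for _ in range(n)]                 # vibration sensor reading
    noise = [0.8 * ref[k] + 0.4 * (ref[k - 1] if k else 0.0) for k in range(n)]  # coupled noise
    measured = [s + v for s, v in zip(signal, noise)]
    cleaned = lms_cancel(measured, ref)

    tail = range(n // 2, n)  # evaluate only after the filter has had time to converge
    mse_raw = sum((measured[k] - signal[k]) ** 2 for k in tail) / len(tail)
    mse_lms = sum((cleaned[k] - signal[k]) ** 2 for k in tail) / len(tail)
    print(f"error power before: {mse_raw:.3f}, after LMS: {mse_lms:.3f}")
```

The filter never sees the true signal; it only learns the part of the measurement that is predictable from the reference sensor and subtracts it, which is why the approach depends on having a reference that tracks the noise source.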

What distinguishes Igunma’s contribution is its blend of theory and application. In experimental tests with high-resolution instruments such as scanning electron microscopy (SEM), atomic force microscopy (AFM), and X-ray photoelectron spectroscopy (XPS), the study reported significant reductions in measurement uncertainty. For example, SEM feature deviation narrowed from ±5 nm to ±1 nm after the proposed strategies were applied, an 80% reduction. In AFM scans, surface roughness uncertainty dropped by more than 60%, while XPS results showed clearer chemical peaks and more accurate composition measurements. These advances are more than technical feats; they open the door to reliable, reproducible nanoscale production under real-world conditions.

Beyond the lab, the research has broad global implications. As countries intensify their push toward next-generation technologies such as quantum computing, flexible electronics, and neuromorphic chips, the reliability of their fabrication methods becomes a national priority. For governments and industries investing billions in semiconductor sovereignty, Igunma’s work offers a timely and scalable roadmap for quality assurance. This is particularly important for emerging economies and research institutions seeking to leapfrog into nanotechnology without repeating the costly trial-and-error path of more established labs.

Igunma’s research also reinforces the growing convergence of AI and materials science. The study demonstrates how machine learning can be used not only to predict fabrication outcomes but also to correct them in real time. Paired with deep learning algorithms, adaptive noise suppression and signal averaging allow measurement systems to improve autonomously, learning from previous data sets and becoming more accurate with each iteration. This shift points toward a future in which nanomanufacturing systems could self-regulate, minimizing human error and accelerating time-to-market for complex nanoscale devices.
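Signal averaging, one of the techniques named above, rests on a simple statistical fact: averaging N independent readings whose noise is random and zero-mean shrinks the noise standard deviation by a factor of √N. The sketch below demonstrates this with simulated readings. The 10 nm "true height" and 2 nm noise level are made-up illustrative values, not figures from the study, and the code is not the study's pipeline.

```python
import random
import statistics

# Hypothetical illustrative values, not taken from the study.
TRUE_HEIGHT_NM = 10.0
NOISE_SIGMA_NM = 2.0

def read_height(rng):
    """Simulate one noisy reading: true value plus zero-mean Gaussian noise."""
    return TRUE_HEIGHT_NM + rng.gauss(0.0, NOISE_SIGMA_NM)

def averaged_reading(rng, n):
    """Average n independent readings to suppress random noise."""
    return statistics.fmean(read_height(rng) for _ in range(n))

def spread(rng, n, trials=2000):
    """Standard deviation of the n-reading average across many repeated trials."""
    return statistics.stdev([averaged_reading(rng, n) for _ in range(trials)])

if __name__ == "__main__":
    rng = random.Random(42)
    for n in (1, 4, 16, 64):
        # Theory predicts roughly NOISE_SIGMA_NM / sqrt(n): about 2.0, 1.0, 0.5, 0.25 nm
        print(f"n={n:3d}  spread={spread(rng, n):.3f} nm")
```

Averaging only attacks random noise: a systematic offset such as slow thermal drift survives any number of repeats, which is one reason averaging is typically combined with adaptive or model-based correction rather than used alone.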

More broadly, the study is a call to rethink precision in a digitally interconnected world. As nanoscale technologies spread into every sector, from healthcare to energy and from aerospace to agriculture, ensuring their reliability will require global consensus on standards and metrological protocols. Igunma’s work offers a foundation for such a framework, one that unites computation, engineering, and ethics in the pursuit of trustworthy science.

The promise of quantum sensors, personalized drug delivery systems, ultra-efficient microprocessors, and AI-enhanced diagnostic devices is growing daily. Yet none of these promises can be fulfilled without trustworthy measurement systems at the nanoscale. Mr. Thompson Odion Igunma’s pioneering research addresses this need at its root, helping remove the invisible obstacles that have long impeded progress. His work does not merely contribute to academic literature; it resets the baseline for what is considered possible in nanofabrication and measurement.

By confronting the problem of noise—both literally and metaphorically—Igunma has helped silence one of the most stubborn barriers to innovation, creating new global opportunities for reliability, efficiency, and technological inclusiveness. As governments, industries, and researchers look ahead, his contributions will likely stand as a defining chapter in the evolving story of precision engineering in the 21st century.