The artificial intelligence conversation has become dominated by capability. More parameters. More autonomy. More complex reasoning. Demos that show systems planning, deciding, and acting with minimal human involvement. Yet when these systems move from controlled environments into production, many of them stumble in familiar ways. Latency spikes. Costs surge. Governance gaps appear. Trust erodes.

The difference is rarely the model. It is the system underneath.

It is a pattern Amit Chaudhary has seen repeatedly. A Senior Solutions Architect at a major cloud provider and an IEEE Senior Member, Chaudhary has more than a decade of experience designing scalable, secure, and resilient cloud solutions for enterprise and ISV customers. His expertise sits at the intersection of cloud modernisation, SaaS architecture, and generative AI, with a proven track record of guiding customers through complex transformations and enabling efficient, future-ready cloud platforms.

He approaches the current wave of AI not as a breakthrough to celebrate, but as an execution problem to interrogate. “When AI systems start acting,” he says, “the infrastructure stops being invisible. It becomes the boundary between trust and failure.”

That shift, from intelligence as assistance to intelligence as execution, is forcing the industry to confront a reality it has long deferred: models do not determine real-world outcomes. Systems do.

From Assistive AI to Acting Systems: Why Failure Looks Different Now

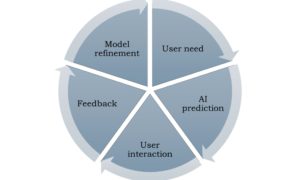

For years, AI systems lived comfortably in advisory roles. They ranked options, flagged anomalies, and suggested next steps. Humans remained the final decision-makers, absorbing uncertainty and correcting mistakes. Acting systems remove that buffer.

Autonomous execution changes how failure manifests. Decisions propagate instantly. Retries compound. Orchestration paths multiply. A system can behave exactly as designed and still produce consequences no one anticipated. What once appeared stable under supervision becomes fragile when machines close the loop. This transition is no longer speculative. Gartner projects that by 2028, 33% of enterprise software applications will include agentic AI, up from less than 1% in 2024, and that 15% of day-to-day work decisions will be made autonomously. That scale fundamentally alters the risk profile of enterprise systems.

Chaudhary’s perspective is shaped by working on systems where automation was already operating without human pacing. In environments handling hundreds of millions of requests per hour, he observed that the earliest failures were not model-related. They surfaced deeper in execution paths: retry loops compounding, routing logic behaving correctly but unexpectedly, and orchestration layers amplifying small timing assumptions into system-wide side effects.
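How quickly retry loops compound can be shown with a little arithmetic. The Python sketch below is illustrative only: it assumes a hypothetical call chain in which every layer independently retries a failing downstream call a fixed number of times, and the function names are invented for this example. Under that assumption, the worst-case load on the failing dependency grows as (r + 1)^n for r retries per layer across n layers.

```python
def worst_case_attempts(retries_per_layer: int, layers: int) -> int:
    """Worst-case calls reaching a failing dependency when each of `layers`
    hypothetical layers retries up to `retries_per_layer` times."""
    # Each layer makes 1 initial attempt plus its retries, and every one of
    # those attempts triggers the full retry behaviour of the layer below.
    return (retries_per_layer + 1) ** layers


def simulate(retries_per_layer: int, layers: int) -> int:
    """Count calls to the dependency by actually nesting retry loops
    against a dependency that always fails."""
    calls = 0

    def call(depth: int) -> bool:
        nonlocal calls
        if depth == layers:
            calls += 1  # the failing downstream dependency is hit
            return False
        for _ in range(retries_per_layer + 1):  # first attempt + retries
            if call(depth + 1):
                return True
        return False

    call(0)
    return calls
```

For example, `worst_case_attempts(3, 3)` returns 64: three modest retries at each of three layers turn one failing request into 64 calls on the dependency. This is why retry policies are often confined to a single layer or capped with a retry budget.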

“The most dangerous failures happen when the system does exactly what it was designed to do,” Chaudhary says. “Autonomy does not introduce chaos. It exposes the chaos that was already latent in the architecture.”

This is where trust first fractures, not because AI behaves irrationally, but because infrastructure was never designed for autonomous pacing.

Why Trust Breaks First in the Infrastructure Layer

As systems act more frequently, they generate more visibility data. Logs, traces, audit records, and telemetry expand to explain automated behaviour and satisfy accountability demands. At the same time, regulatory expectations around data handling and transparency continue to harden.

In practice, privacy, observability, and resilience are no longer separable concerns. They converge at the infrastructure layer, where execution actually happens. Policy frameworks offer intent. Architecture determines outcomes. The industry is already seeing the cost of ignoring this convergence. Gartner predicts that more than 40% of agentic AI projects will be canceled by the end of 2027, driven not by model performance but by escalating costs, unclear business value, and inadequate risk controls.

As automated systems began executing decisions continuously, Chaudhary saw trust erode in a less visible way. Telemetry pipelines expanded rapidly to explain autonomous behavior, but those same pipelines became a source of risk. Execution logs retained more data than teams realized. Observability improved reliability while quietly increasing compliance exposure.

This tension between visibility and restraint is one Chaudhary has evaluated both in practice and as a judge for the Globee Awards for Impact, where systems are assessed on whether they can sustain trust under real operational and regulatory pressure. “You cannot separate governance from execution,” he notes. “If your infrastructure cannot observe itself safely, autonomy becomes a liability.”

Here, trust is not a promise. It is an emergent property of infrastructure.

The Economic Reality Acting AI Systems Cannot Escape

Even when autonomy functions correctly and compliantly, another constraint emerges: cost. Acting systems scale decisions, not teams. Every automated action can trigger orchestration, retrieval, inference, and logging. Without discipline, expense grows faster than value.

This is already a defining pressure. Flexera’s 2025 State of the Cloud report shows that 84% of organizations cite managing cloud spend as their top challenge. Agentic systems amplify this tension by design.

Over time, the constraint that proved hardest to ignore was economic. In high-throughput environments, observability and orchestration layers scaled faster than the systems they were meant to protect. By redesigning execution paths, tightening real-time logging, stripping unnecessary data retention, and bounding telemetry at the infrastructure layer, Chaudhary helped reduce operational overhead significantly, in some cases approaching an order-of-magnitude improvement.

The impact was not only financial. It restored confidence in allowing automated decisions to operate without constant human intervention. “AI systems rarely lose support because they fail technically,” he says. “They lose support because leadership stops trusting what they cost.”

Cost, in this context, becomes a diagnostic signal. Sustainable autonomy requires bounded execution, intentional telemetry, and architectures that surface inefficiency early. Without those controls, trust erodes quietly, long before systems fail outright.
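One way to picture intentional, bounded telemetry is an emitter that decides up front which fields may be retained and how many records may be written per time window. The Python sketch below is a hypothetical illustration, not Chaudhary's implementation: the `BoundedTelemetry` class, its fixed-window budget, the in-memory `sink`, and the example field names are all assumptions chosen for simplicity.

```python
import time
from typing import Any, Callable, Optional


class BoundedTelemetry:
    """Hypothetical emitter: keeps only allowlisted fields and writes at most
    `max_events` records per `window_s` seconds; extra records are counted,
    not stored."""

    def __init__(self, allowed_fields: set, max_events: int, window_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.allowed_fields = allowed_fields
        self.max_events = max_events
        self.window_s = window_s
        self.clock = clock  # injectable so the budget can be tested
        self.window_start = clock()
        self.emitted_in_window = 0
        self.dropped = 0
        self.sink: list = []  # stand-in for a real log backend (assumption)

    def emit(self, record: dict) -> Optional[dict]:
        now = self.clock()
        # Start a fresh budget once the current window has elapsed.
        if now - self.window_start >= self.window_s:
            self.window_start = now
            self.emitted_in_window = 0
        if self.emitted_in_window >= self.max_events:
            self.dropped += 1  # over budget: count the event, retain nothing
            return None
        # Drop every field not explicitly allowlisted (e.g. user data).
        kept: dict[str, Any] = {
            k: v for k, v in record.items() if k in self.allowed_fields
        }
        self.emitted_in_window += 1
        self.sink.append(kept)
        return kept
```

Constructed as `BoundedTelemetry({"action", "latency_ms"}, max_events=100, window_s=1.0)`, an emitted record such as `{"action": "refund", "latency_ms": 41, "customer_email": "a@example.com"}` is stored with only its action and latency; once 100 records have been written in the current window, further records only increment `dropped`. A fixed window is the simplest budget to reason about; a token bucket or sampling would smooth bursts at the cost of a little more state.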

Why Infrastructure Is Now the Product

As AI systems gain the ability to act, intelligence itself is becoming assumed. The differentiator is no longer what a system can decide, but whether it can execute repeatedly without surprising the business, regulators, or customers.

Beyond system design, Chaudhary has contributed publicly to this conversation, outlining architectural patterns for making AI-driven platforms more interpretable and governable. His position remains consistent: trust cannot be bolted on. It must be built into the infrastructure that carries decisions into the real world.

“The next phase of AI will not reward the most autonomous systems,” he says. “It will reward the systems that can act without creating new risk every time they do.”

In that future, infrastructure is no longer a support layer. It is the product. And the reckoning over how trust is engineered into acting systems has already begun.