As generative AI becomes a ubiquitous tool in professional services, from law and medicine to architecture and software development, a new and complex legal landscape is emerging. The central question is no longer “Can AI do the work?” but “Who owns the work, and who is liable when it fails?” For professional firms, the lack of a clear verification strategy is a ticking time bomb. Using a reliable AI detector has become a vital component of risk management, helping firms meet their “Duty of Care” and protect their intellectual property (IP) assets in an increasingly litigious digital world.

The Copyright Conundrum: Can You Own What a Machine Writes?

In many jurisdictions, including the United States, the law is becoming clear: content generated entirely by AI without “significant human creative input” cannot be copyrighted. For a marketing agency, a law firm, or a software house, this is a nightmare scenario. If you deliver a fully AI-generated 50-page report or codebase to a client, that client may not actually own the IP. A competitor could, in theory, take that content and reuse it, leaving the client with no legal recourse.

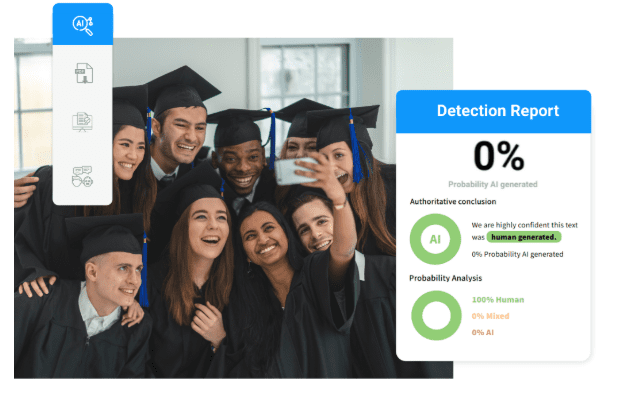

Firms must be able to prove “Human Authorship” to secure copyright protection. This requires a documented trail of human intervention and refinement. By using a detection tool, a firm can record that its deliverables have a “High Human Probability” score, which can serve as supporting evidence alongside that trail in any future IP dispute. It helps demonstrate that the AI was a tool, like a word processor, rather than the “author.”

Liability and the “Hallucination” Risk in Professional Advice

In professional services, accuracy is not just a goal; it is a legal requirement. AI models are known for “hallucinating”: creating fake legal citations, misinterpreting medical data, or suggesting structurally unsound engineering solutions. If a firm publishes or delivers AI-generated advice that contains such an error, the “I didn’t know the AI made it up” defense is unlikely to hold up in court.

The “Duty of Care” requires professionals to verify every piece of information they provide. If a junior associate uses AI to draft a legal brief and the senior partner doesn’t catch the synthetic errors, the firm may be liable for malpractice. A verification layer acts as a safety net. It flags sections of a document that were likely generated by a machine, signaling to the senior reviewer that these specific paragraphs require intense, manual fact-checking.
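The flagging step described above can be sketched in a few lines of Python. This is a minimal illustration, not any particular product's API: `score_paragraph` is a hypothetical stand-in for whatever detector a firm uses, assumed to return a value between 0 and 1, and the 0.7 threshold is an arbitrary placeholder a firm would tune for itself.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class FlaggedParagraph:
    index: int    # position of the paragraph in the document
    score: float  # detector's estimate that the text is machine-generated
    text: str


def flag_for_review(
    document: str,
    score_paragraph: Callable[[str], float],  # hypothetical detector callback
    threshold: float = 0.7,                   # assumed cutoff, not a standard
) -> List[FlaggedParagraph]:
    """Return the paragraphs whose score meets or exceeds the threshold."""
    # Treat blank-line-separated blocks as paragraphs and skip empty ones.
    paragraphs = [p.strip() for p in document.split("\n\n") if p.strip()]
    flagged = []
    for i, para in enumerate(paragraphs):
        score = score_paragraph(para)
        if score >= threshold:
            flagged.append(FlaggedParagraph(i, score, para))
    return flagged
```

A senior reviewer would then receive only the flagged paragraphs, with their positions, as a fact-checking worklist. The score is a probability rather than a verdict, so the list guides the review and does not replace it.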

Contractual Obligations and the Ethics of Disclosure

Clients are becoming increasingly sophisticated. Many are now inserting “AI Disclosure” clauses into their Service Level Agreements (SLAs). They want to know exactly how much of the work they are paying for is being done by a human expert versus a $20-a-month subscription to an LLM.

Failing to disclose AI use when a contract requires it can be considered “Breach of Contract” or even “Fraud.” To maintain ethical standing and contractual compliance, firms must have an internal “AI Audit” process. By running all outgoing work through a detector, the firm can provide a “Transparency Report” to the client. This builds a foundation of honesty and justifies the premium fees charged for human expertise.
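As a rough illustration of what such a “Transparency Report” might summarize, the sketch below computes the share of a document's words that fall in paragraphs a detector scored as likely machine-generated. The input format, the field names, and the 0.7 threshold are all assumptions made for this example; a real report would follow whatever the contract's disclosure clause specifies.

```python
from typing import Dict, List, Tuple


def transparency_report(
    scored_paragraphs: List[Tuple[str, float]],  # (text, detector score in [0, 1])
    threshold: float = 0.7,                      # assumed cutoff, not a standard
) -> Dict[str, float]:
    """Summarize how much of a document was scored as likely AI-generated."""
    total_words = sum(len(text.split()) for text, _ in scored_paragraphs)
    flagged_words = sum(
        len(text.split())
        for text, score in scored_paragraphs
        if score >= threshold
    )
    # Guard against empty documents to avoid dividing by zero.
    share = flagged_words / total_words if total_words else 0.0
    return {
        "total_words": total_words,
        "flagged_words": flagged_words,
        "likely_ai_share": round(share, 3),
    }
```

Weighting by word count rather than paragraph count keeps one long flagged section from being hidden among many short human-written ones.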

The “Plagiarism by Proxy” Threat

AI models are trained on large portions of the public internet. This means they occasionally produce output that is “too close” to existing copyrighted material. While not a direct copy-paste, the structural and thematic mimicry can trigger plagiarism allegations or copyright infringement claims.

Traditional plagiarism checkers often fail to catch these “paraphrased” similarities. However, a tool that identifies the “statistical signature” of an AI can alert a firm that a piece of content is unoriginal in its construction. This allows the firm to rewrite the section, ensuring it is a unique synthesis of ideas rather than a machine-rehash of someone else’s intellectual labor.

Safeguarding Corporate Secrets and Confidentiality

A major risk often overlooked is “Data Leakage.” When employees feed sensitive corporate data into public AI models to generate reports, that data may be retained by the provider and, depending on the service's terms, used to train future models. This can lead to catastrophic leaks of trade secrets or private client information.

A company-wide policy of “Detection and Verification” encourages employees to be mindful of their AI usage. If employees know that all internal and external documents will be screened by an AI content detector, they are less likely to take “lazy shortcuts” that put the firm’s data at risk. It fosters a culture of accountability and professional rigor.

Conclusion: Verification as the Basis of Professional Trust

The future of professional services belongs to those who can master the “Cyborg” model: leveraging AI for speed while maintaining human accountability for quality and ethics. In this transition, verification is the most important bridge.

Investing in a high-grade AI content detector is not about stifling innovation; it is about protecting the very foundations of the profession: Trust, Liability, and Ownership. In a world of synthetic noise, the ability to prove that your thoughts are your own is the ultimate legal and competitive advantage.