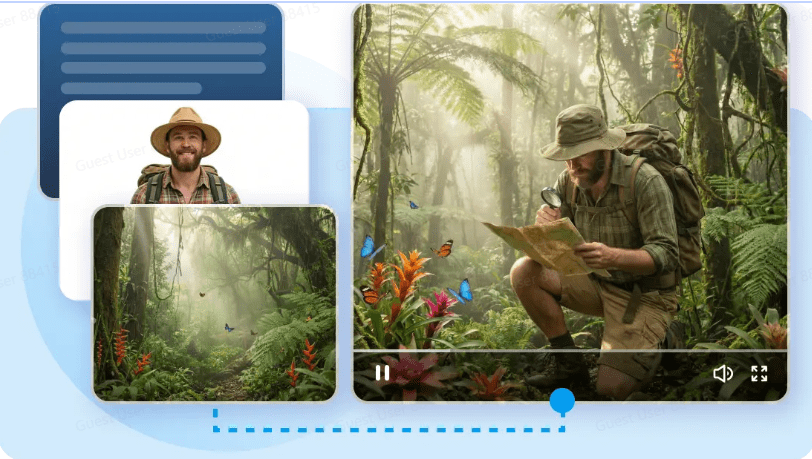

For the past two years, generative AI video has been characterized by a “lottery-like” unpredictability. Creators would enter a prompt, wait several minutes, and cross their fingers, hoping the AI wouldn’t hallucinate a third arm or ignore a crucial camera movement. While visually stunning, these tools often fell short of professional production standards because they lacked the one thing creators value most: controllability.

Everything changed with the global unveiling of Seedance 2.0. Developed by Dreamina, ByteDance’s all-in-one creative powerhouse, this model isn’t just an incremental update; it is an industry-shaking leap that achieves “Top 1” performance across the four pillars of professional AI video: Multimodal Reference, Controllability, Quality, and Workflow Integration. By transitioning from simple “prompting” to “precision engineering,” Seedance 2.0 AI Video Generator is effectively setting the new gold standard for the industry.

I. Multimodal Mastery: Breaking the “Text-Only” Bottleneck

The first major breakthrough of Seedance 2.0 lies in its Top 1 Multimodal Reference Capability. Most competing models are limited by narrow input: they understand either text or a single static image. Seedance 2.0, by contrast, synthesizes information from four distinct modalities: Image, Video, Audio, and Text.

- The Power of 12 Simultaneous References

In a professional setting, a single reference is rarely enough to convey a vision. Seedance 2.0 supports a breakthrough capacity of up to 12 simultaneous reference files. This allows a creator to upload a specific character photo, a video clip of a trending dance move, an audio track for rhythm, and a text prompt for lighting—all at once. The AI doesn’t just “blend” these; it understands the relationship between them.
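As a rough illustration of what assembling such a reference set might look like on the creator's side, here is a minimal Python sketch. It is not the Dreamina or Seedance API: the `Reference` and `ReferenceBundle` names, the `source` field, and the choice to count the text prompt toward the 12-item limit are all assumptions made for this example. Only the four modalities and the limit of 12 come from the description above.

```python
from collections import Counter
from dataclasses import dataclass, field

# The four modalities and the limit of 12 are taken from the article.
MODALITIES = {"image", "video", "audio", "text"}
MAX_REFERENCES = 12


@dataclass(frozen=True)
class Reference:
    """One reference item (hypothetical structure, not an official type)."""
    modality: str
    source: str  # a file path, or the prompt text for the "text" modality

    def __post_init__(self):
        if self.modality not in MODALITIES:
            raise ValueError(f"unsupported modality: {self.modality!r}")


@dataclass
class ReferenceBundle:
    """Collects references and enforces the 12-item limit locally."""
    references: list = field(default_factory=list)

    def add(self, modality: str, source: str) -> "ReferenceBundle":
        # Assumption: every item, including text, counts toward the limit.
        if len(self.references) >= MAX_REFERENCES:
            raise ValueError(f"at most {MAX_REFERENCES} references allowed")
        self.references.append(Reference(modality, source))
        return self  # returning self allows chained calls

    def summary(self) -> dict:
        """Number of references per modality."""
        return dict(Counter(r.modality for r in self.references))


# Usage: the character photo, dance clip, audio track, and lighting prompt
# from the example above, gathered into one bundle.
bundle = (
    ReferenceBundle()
    .add("image", "character.png")
    .add("video", "dance_move.mp4")
    .add("audio", "rhythm_track.mp3")
    .add("text", "warm, low-angle evening lighting")
)
print(bundle.summary())
```

Checking the limit before anything is uploaded means an oversized bundle fails immediately rather than after a long generation wait.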

- “Editing Video Like Editing Photos”

This multimodal depth enables a feature that was once thought impossible: one-sentence video editing. Traditionally, if you wanted to change the background of a video or swap a character’s jacket, you would need hours of rotoscoping and compositing. Seedance 2.0 makes retouching a video as simple as retouching a photo. Users can replace, delete, or add elements while maintaining multi-angle subject consistency. The AI keeps the character’s identity stable across different perspectives, and modifies only the specific elements you’ve requested (such as a style transfer or a costume change).

- Replicating the “Viral Formula”

Beyond basic edits, Seedance 2.0 can learn complex creative dimensions including creative effects, camera movements, actions, and editing styles. If you see a viral “hit” with a specific kinetic camera pan and a unique glitch effect, you can feed that as a reference. The AI “decodes” the underlying elements, allowing you to “one-click replicate” the style with your own original content.

II. Precision Control: Achieving “Top 1” Consistency in Commercial Production

Consistency is where AI video most often falls into the “Uncanny Valley.” If a brand’s logo or a character’s features flicker for even a moment, the illusion of quality is shattered. Seedance 2.0 addresses this with an upgraded architecture focused on three dimensions of consistency: character and object fidelity, typography, and pacing.

- Human and Object High-Fidelity

Commercial production requires an exact match of characters and assets. Seedance 2.0 delivers Top 1 Effect Controllability by precisely restoring character details, facial features, and even voice timbre. Whether you are generating a close-up or a wide shot, the composition and character nuances remain locked, closely mirroring the original reference image.

- The Typography Breakthrough

One of the most notorious failures of AI video models has been the inability to render stable text. Seedance 2.0 features a comprehensive upgrade in Font Consistency. This is a game-changer for marketing agencies and social media managers. The model maintains font style stability and high accuracy, ensuring that brand names or stylistic titles don’t warp or “jitter” during the video.

- Mastering the “Fast Cut”

In the age of short-form content, rhythm is everything. Seedance 2.0 has optimized its control over details and pacing. It can handle “Fast Cut” transitions with a smoothness that feels professional rather than chaotic. The AI understands the weight and timing of a scene, ensuring that every transition aligns with the intended emotional beat.

III. Cinematic Excellence: The “Top 1” Quality Leap

If controllability provides the “bones” of a video, the output quality provides the “soul.” Seedance 2.0 elevates AI video from “experimental footage” to “cinematic storytelling.”

- The “Smart Continuation” Philosophy

Most AI video tools generate isolated moments in time. Seedance 2.0 introduces Intelligent Continuation. It doesn’t just generate a new scene; it “continues the shoot.” By understanding the preceding frames’ lighting, physics, and narrative logic, it can “keep filming” the next scene with seamless continuity. This ensures that the story, character arcs, and camera movements don’t feel “split” or disjointed.

- Native Audio-Visual Synchronization

Lip-syncing has traditionally been a separate, painful post-production step. Seedance 2.0 natively integrates Audio-Visual Sync. Whether it’s a single speaker or a multi-character dialogue, the mouth movements are generated in real-time to match the audio, while ambient sounds (like the clinking of glasses or wind in the trees) are synthesized to match the visual action.

- Physics-First Rendering

By optimizing its understanding of physical laws, Seedance 2.0 produces videos that look “real” to the human eye. Fluids behave correctly, light bounces off surfaces naturally, and human movements adhere to gravity. This removes the “floaty” or “liquid” look that has plagued previous generations of AI video.

IV. The One-Stop Ecosystem: A Top 1 Integrated Workflow

The final piece of the puzzle is the Complete Workflow Integration. In the past, a creator’s desktop would be cluttered with ten different AI tools—one for upscaling, one for voice, one for images. Dreamina eliminates this friction.

By pairing Seedance 2.0 with the Seedream 5.0 Image Model and advanced AI Agents, Dreamina offers a “Double King” configuration. This ecosystem allows a creator to:

- Generate a high-fidelity concept image in Seedream 5.0.
- Use that image as a high-control reference in Seedance 2.0.
- Utilize AI Agents to refine scripts or automate repetitive tasks.

Everything happens within a single interface, ensuring that the “creative spark” isn’t lost in the technical transition between different platforms.

Conclusion: The Era of “Intelligent Imagination”

Seedance 2.0 is more than just a technical update; it is a manifesto for the future of digital media. By achieving Top 1 status in multimodal reference, controllability, quality, and workflow, Dreamina has created a platform where the AI responds more accurately, reasons more intelligently, and produces more natural results.

As the industry moves away from the “black box” of random prompt generation, Seedance 2.0 offers a path toward Professional-Grade AI Production. For creators, agencies, and filmmakers, the message is clear: the age of guessing is over. The age of precision has begun.