Artificial Intelligence (AI) has made remarkable strides in recent years, from powering autonomous vehicles to streamlining industries with machine learning algorithms. But for all its advancements, AI remains firmly within the realm of narrow intelligence, performing specific tasks exceptionally well but lacking the versatility of human thought. Enter Artificial General Intelligence (AGI), an evolving concept that has captured the imagination of technologists, futurists, and ethicists alike.

So, what exactly is AGI?

AGI represents the next frontier in artificial intelligence—a system capable of understanding, learning, and applying knowledge across a broad range of tasks, much like a human being. The key difference between AGI and the AI systems we use today is flexibility. Narrow AI is great at solving pre-defined problems, like voice recognition or image classification, but it falls short when asked to handle tasks it wasn’t explicitly trained to do. AGI, on the other hand, would have the ability to adapt, reason, and problem-solve in unfamiliar domains without requiring additional programming. In essence, AGI could not only mimic human behaviour but also generate new ideas, make decisions, and adapt in real time.

This leap in capability sounds promising, but as we inch closer to AGI, we must ask ourselves: are we truly ready for it?

How far are we from achieving AGI?

The road to AGI is long and filled with technical, ethical, and philosophical hurdles. While the foundational building blocks are being laid, we’re still far from realising a system that can think, reason, and adapt like a human. Some estimates suggest that AGI could become a reality in the next few decades, but it’s crucial to approach these timelines with caution. AGI development isn’t just about scaling up today’s models; it’s about solving fundamental challenges around cognitive architectures, emotional intelligence, and even ethical decision-making.

Think of AGI as uncharted territory in computing: where current AI models excel in specific areas, AGI would need to operate competently across virtually any domain. The sheer scope of what’s needed to make AGI functional is mind-boggling. But perhaps the bigger question isn’t “when” we will achieve AGI but “whether” we’re prepared for the societal and economic changes it will bring.

What happens when AGI enters the real world?

The commercial applications of AGI could be vast. Picture an AGI system running a business’s strategic operations—analysing data, making decisions, and optimising processes in real time, without human intervention. It could handle everything from logistics to creative marketing strategies. The efficiency gains alone would be staggering, potentially revolutionising industries like finance, healthcare, and supply chain management. For example, in healthcare, AGI could take real-time patient data, compare it with millions of other cases, and recommend treatments that even the most skilled human doctors might miss.

But AGI’s impact wouldn’t stop at just doing things better or faster. It would fundamentally change how we approach work, economics, and innovation. A machine that can outperform human beings in almost any intellectual task would force us to rethink not only the future of employment but also how value is created and distributed in society. In a world where machines can think for themselves, what roles are left for us?

AGI: A tool or a threat?

While the potential for AGI is enormous, so too are the risks. AGI isn’t just another technological tool to be optimised for convenience or profit; it’s an entirely new form of intelligence that could reshape societal structures. There’s a danger that if AGI falls into the wrong hands or is poorly regulated, it could be used for malicious purposes—whether that’s automating large-scale cyber-attacks or creating disinformation campaigns with unprecedented efficiency. Moreover, AGI could widen existing inequalities by concentrating power in the hands of those who control the technology.

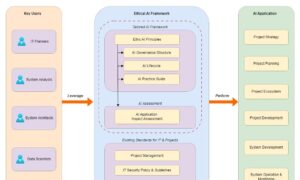

Regulation and ethical guidelines must be part of the conversation from the start. We cannot afford to wait until AGI is fully realised to begin thinking about its societal impacts. Collaboration between businesses, policymakers, and ethicists is crucial to ensure that AGI benefits humanity as a whole, rather than exacerbating global inequalities or being weaponised for harm.

Where do we go from here?

I’m excited by the potential of AGI and the opportunities for innovation it opens up. But with great power comes great responsibility. Businesses and governments alike need to begin preparing for a world where AGI is not just a possibility but a reality.

We need to ask ourselves the tough questions now: How do we build ethical frameworks around AGI? How do we ensure that it’s used to benefit society rather than harm it? And how do we prepare for the massive economic shifts that AGI will inevitably bring?

AGI is coming, whether in ten years or fifty. The real challenge isn’t just developing the technology—it’s ensuring we’re ready to handle the profound changes it will bring to the world.