In today’s digital transformation era, data systems are undergoing a profound shift that is reshaping how enterprises manage and derive value from their information assets. This article, by Shubham Srivastava, offers insights into the evolution from traditional data warehouses to modern lakehouse architectures. The author is an experienced researcher in scalable data solutions, dedicated to pushing the boundaries of contemporary data management. His work emphasizes a holistic approach that integrates flexibility with reliability, setting the stage for the next generation of data platforms.

Unified Data Management Breakthroughs

One of the core innovations highlighted in recent research is the unification of data storage and access. While dependable for structured reporting, traditional architectures struggled to cope with the diversity and sheer volume of modern data workloads. The lakehouse model breaks these barriers by combining the robust, ACID-compliant features of conventional warehouses with the flexible, cost-effective nature of data lakes. This unified system provides a single source of truth, eliminating redundant data copies and streamlining access for real-time analytics and machine learning. By erasing the rigid boundaries between separate storage systems, organizations gain a seamless and efficient data experience.
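To make the idea of ACID guarantees on top of data lake files more concrete, here is a minimal sketch of one common approach: an append-only commit log with optimistic concurrency. The article does not describe a specific mechanism, so everything here is an illustrative assumption, including the hypothetical names `TableLog`, `CommitConflict`, `commit`, and `snapshot`. It is not the implementation of any particular table format.

```python
class CommitConflict(Exception):
    """Raised when a writer tries to commit against a stale table version."""


class TableLog:
    """Hypothetical append-only log of commits over immutable data files."""

    def __init__(self):
        # Each entry is (added_files, removed_files); the list index is the version.
        self._commits = []

    @property
    def version(self):
        return len(self._commits)

    def snapshot(self, version=None):
        """Replay the log up to `version` and return the set of live files."""
        version = self.version if version is None else version
        files = set()
        for added, removed in self._commits[:version]:
            files -= removed
            files |= added
        return files

    def commit(self, read_version, added=(), removed=()):
        """Apply a change atomically, or reject it if the table has moved on.

        Simplification: any stale writer is rejected, even if its change
        would not actually conflict with the commits it missed.
        """
        if read_version != self.version:
            raise CommitConflict(
                f"read version {read_version}, table is at {self.version}"
            )
        self._commits.append((frozenset(added), frozenset(removed)))
        return self.version


log = TableLog()
v1 = log.commit(0, added={"a.parquet"})
v2 = log.commit(v1, added={"b.parquet"}, removed={"a.parquet"})
```

Because readers only ever see files listed by a completed commit, a half-written file is never visible, and earlier versions remain readable by replaying a shorter prefix of the log.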

Accelerated Processing and Performance

Modern data architectures are now defined by their advanced processing capabilities. Innovations in intelligent data skipping, indexing mechanisms, and optimized query engines have dramatically reduced query scan times, by as much as 85% compared to legacy systems. The integration of batch and streaming processes within a single framework not only simplifies data pipelines but also enhances operational efficiency. Enhanced metadata management and unified access protocols ensure that performance remains robust even as data volumes swell. These advancements allow businesses to run complex analytical queries on petabyte-scale datasets with impressive speed, ultimately driving faster insights and decision-making.
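Data skipping can be illustrated with a small sketch. The assumption here, which is not taken from the article, is that the platform keeps minimum and maximum values per data file for a column, so a range query can ignore any file whose range cannot contain matching rows. The names `FileStats`, `files_to_scan`, and `scan_reduction`, as well as the file names and row counts, are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    """Hypothetical per-file statistics for one column."""
    path: str
    min_value: int
    max_value: int
    row_count: int


def files_to_scan(files, lo, hi):
    """Keep only files whose [min, max] range overlaps the query range [lo, hi]."""
    return [f for f in files if f.max_value >= lo and f.min_value <= hi]


def scan_reduction(files, selected):
    """Fraction of rows that never have to be read thanks to skipping."""
    total = sum(f.row_count for f in files)
    scanned = sum(f.row_count for f in selected)
    return 1 - scanned / total


# Made-up example data: four files, each covering a distinct value range.
files = [
    FileStats("part-0.parquet", 0, 99, 1000),
    FileStats("part-1.parquet", 100, 199, 1000),
    FileStats("part-2.parquet", 200, 299, 1000),
    FileStats("part-3.parquet", 300, 399, 1000),
]

# Query: WHERE value BETWEEN 150 AND 180
selected = files_to_scan(files, 150, 180)
reduction = scan_reduction(files, selected)
```

In this toy layout only one of the four files overlaps the query range. How much a real workload benefits depends heavily on how well the data is clustered on the filtered column; the figures here are illustrative and unrelated to the 85% cited above.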

Enhanced Governance and Operational Efficiency

Beyond raw speed, the lakehouse approach depends on a robust governance framework. This framework enforces unified policies, tracks comprehensive data lineage, and implements fine-grained security. Consistent metadata management minimizes operational overhead and reduces the costs of data duplication and poor data quality, while advanced compression and cost-based query optimization improve storage efficiency and performance.

Technological Horizons and Intelligent Automation

A forward-looking aspect of the lakehouse paradigm is its readiness to incorporate emerging technologies. By integrating artificial intelligence into core data operations, these platforms become self-optimizing. They use predictive resource allocation and automated tuning based on workload patterns, dynamically adapting to business needs. Additionally, edge computing processes data near its source, minimizing latency and transfer costs. Early exploration of quantum-ready structures hints at efficient, intelligent data platforms that anticipate challenges before they arise.

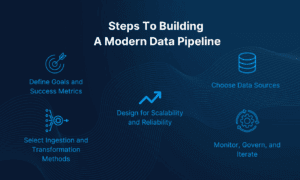

Streamlined Migration and Future Readiness

Another critical innovation is the strategic framework for migrating from traditional data warehouses to lakehouse architectures. When approached methodically, this migration process has been shown to significantly reduce implementation timelines and operational disruptions. A systematic assessment of current data environments and a careful evaluation of technical and operational requirements form the backbone of a successful migration strategy. By focusing on scalability, integration capabilities, and governance standards, organizations can ensure a smooth transition to a platform that is both future-proof and responsive to emerging analytical demands.

Informed Evolution and Sustainable Impact

Innovations in data architecture are not only about immediate performance improvements; they also set the stage for sustainable and adaptive enterprise data strategies. The lakehouse model is a testament to how technological evolution can yield significant cost savings, operational efficiencies, and enhanced analytical capabilities. With the ability to handle diverse workloads ranging from real-time analytics to large-scale machine learning, these architectures are poised to drive the next wave of digital innovation. The strategic integration of AI, edge computing, and emerging quantum-ready technologies promises a future where data platforms are more responsive, efficient, and secure.

In conclusion, Shubham Srivastava’s perspective underscores a pivotal moment in data management innovation. The transformation from traditional data warehouses to lakehouse architectures reflects a broader shift towards systems that combine flexibility with reliability, ensuring that enterprises are well-equipped to navigate the complexities of modern data demands. As organizations evolve in the digital age, the innovations embedded in the lakehouse paradigm are likely to serve as a cornerstone for future breakthroughs in data architecture and analytics.