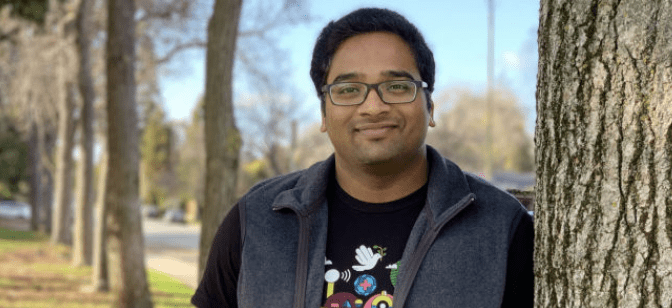

The modern data ecosystem is vast, dynamic, and often overwhelming. With petabytes of data moving through increasingly complex pipelines, the challenge for today’s engineers is not simply scale; it is orchestration. How can organizations design systems that are powerful enough for massive workloads, yet simple enough for broad accessibility? The answer, according to Surya Karri, lies in bridging architecture and usability.

A Staff Software Engineer and Senior IEEE Member, Karri has spent over 15 years transforming the way engineers interact with data infrastructure. His work focuses on designing platforms that make big data workflows more intuitive, efficient, and reliable, empowering both technical and non-technical teams to innovate faster. “Engineering productivity is not just about speed,” he explains. “It’s about building systems that think alongside developers, reducing complexity so creativity can thrive.”

From Code to Composition: Rethinking Workflow Orchestration

The turning point in Karri’s career came with the creation of Spinner Composer, a pioneering no-code, UI-based orchestration platform that reimagined how data pipelines are designed and managed. Developed initially as FloHub in 2019, the system evolved into Spinner Composer as Karri led its integration into Airflow, one of the most widely used open-source workflow engines in the world.

Spinner Composer allowed engineers, analysts, and even product managers to visually create and manage data workflows through a drag-and-drop interface, automatically translating visual configurations into executable code. What once required hours of scripting could now be achieved in minutes. The platform not only improved efficiency but also democratized access to data infrastructure.
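To make the idea of translating a visual configuration into code concrete, here is a minimal sketch. It is not Spinner Composer's implementation, which the article does not describe. The `canvas` dictionary, the `Task` name in the generated text, and both helper functions are hypothetical; the only borrowed convention is the `>>` operator, which Airflow uses to declare that one task runs before another.

```python
from graphlib import TopologicalSorter

# Hypothetical export of a drag-and-drop canvas:
# nodes are tasks (id -> command), edges are (upstream, downstream) pairs.
canvas = {
    "nodes": {
        "extract": "echo extract",
        "transform": "echo transform",
        "load": "echo load",
    },
    "edges": [("extract", "transform"), ("transform", "load")],
}


def execution_order(canvas):
    """Return task ids in an order that respects every edge.

    Raises graphlib.CycleError if the canvas contains a cycle.
    """
    sorter = TopologicalSorter({node: set() for node in canvas["nodes"]})
    for upstream, downstream in canvas["edges"]:
        sorter.add(downstream, upstream)
    return list(sorter.static_order())


def render_pipeline(canvas):
    """Emit pipeline source text: one line per task, then one per dependency.

    `Task` is an assumed placeholder class name, not a real library type.
    """
    lines = []
    for task_id in execution_order(canvas):
        command = canvas["nodes"][task_id]
        lines.append(f"{task_id} = Task({task_id!r}, command={command!r})")
    for upstream, downstream in canvas["edges"]:
        lines.append(f"{upstream} >> {downstream}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_pipeline(canvas))
```

The design point is that the user only edits the graph; ordering, validation (a cycle is rejected before any code is produced), and code generation happen behind the interface.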

“Big data engineering has traditionally been a gated space,” Karri says. “The goal was to open it up, to make workflow creation as simple as storytelling.”

Within six months of its launch, Spinner Composer had more than 180 weekly active users and 65 daily active users. The effect on productivity was immediate: average workflow creation time dropped from 40 minutes to just 12, a 70% reduction.

Engineering for Scale and Reliability

Underneath its intuitive interface, Spinner Composer was built with the rigor of large-scale distributed systems. Karri’s architectural vision emphasized fault tolerance, horizontal scalability, and zero-downtime reliability, the holy trinity of modern data engineering.

The platform used Python Celery workers with a database-backed scheduler to ensure high-availability execution, even across massive workloads. Karri engineered a thread-based monitoring system for asynchronous job cancellation, a complex feature that allowed workflows to be safely terminated across diverse external systems like SparkSQL and Presto.
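A thread-based cancellation monitor of the kind described above might look roughly like the following. This is a sketch under stated assumptions rather than the platform's actual code: `FakeEngineJob` is a hypothetical stand-in for a query running on an external engine, and `run_with_cancellation` and its parameters are invented for illustration. Only Python's standard `threading` module is used.

```python
import threading


class FakeEngineJob:
    """Hypothetical stand-in for a query on an external engine."""

    def __init__(self, duration):
        self.duration = duration
        self.killed = threading.Event()

    def run(self):
        # Blocks until the job "finishes" or is killed, then reports its state.
        was_killed = self.killed.wait(timeout=self.duration)
        return "cancelled" if was_killed else "succeeded"

    def kill(self):
        # A real client would send an engine-specific kill request here.
        self.killed.set()


def run_with_cancellation(job, cancel_requested, poll_interval=0.01):
    """Run `job` while a monitor thread watches the `cancel_requested` event."""
    finished = threading.Event()

    def monitor():
        # Poll until the job is done; kill it as soon as cancellation is requested.
        while not finished.is_set():
            if cancel_requested.wait(timeout=poll_interval):
                job.kill()
                return

    watcher = threading.Thread(target=monitor, daemon=True)
    watcher.start()
    try:
        return job.run()
    finally:
        finished.set()
        watcher.join()


if __name__ == "__main__":
    cancel = threading.Event()
    threading.Timer(0.05, cancel.set).start()  # simulate a user pressing "cancel"
    print(run_with_cancellation(FakeEngineJob(duration=5.0), cancel))
```

Keeping the monitor separate from the job means the blocking call to the external system never has to check for cancellation itself; each engine only needs its own `kill` behavior.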

To enable seamless upgrades, he designed rollover deployments, a system where parallel worker clusters handled transitions without disrupting in-progress workflows. This innovation allowed Spinner Composer to maintain uptime while continuously evolving its architecture.
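The rollover idea can be illustrated with a small in-memory model. The class and method names below (`WorkerCluster`, `RolloverRouter`, `deploy`, `start`, `finish`) are hypothetical and chosen for this example; the sketch assumes only what the description states, namely that an old and a new worker cluster run in parallel and that in-progress workflows are not disrupted.

```python
class WorkerCluster:
    """A group of workers running one version of the platform (hypothetical)."""

    def __init__(self, version):
        self.version = version
        self.in_progress = set()


class RolloverRouter:
    """Sends new workflows to the active cluster while older clusters drain."""

    def __init__(self, initial_cluster):
        self.active = initial_cluster
        self.draining = []

    def deploy(self, new_cluster):
        # The old cluster stops receiving new work but keeps what it is running.
        if self.active.in_progress:
            self.draining.append(self.active)
        self.active = new_cluster

    def start(self, workflow_id):
        # New workflows always land on the newest cluster.
        self.active.in_progress.add(workflow_id)
        return self.active.version

    def finish(self, workflow_id):
        for cluster in [self.active, *self.draining]:
            cluster.in_progress.discard(workflow_id)
        # A draining cluster is retired once its last workflow completes.
        self.draining = [c for c in self.draining if c.in_progress]


if __name__ == "__main__":
    router = RolloverRouter(WorkerCluster("v1"))
    router.start("wf-a")                 # runs on v1
    router.deploy(WorkerCluster("v2"))   # v1 begins draining
    router.start("wf-b")                 # runs on v2
    router.finish("wf-a")                # v1 is now empty and retired
    print(router.active.version, len(router.draining))
```

In this model an upgrade never interrupts a running workflow: the old cluster is removed only after its in-progress set is empty.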

“Reliability at scale doesn’t happen by chance,” Karri notes. “It’s designed into the system from the very beginning—every retry, every rollback, every checkpoint.”

Democratizing Data Engineering

One of the most profound impacts of Karri’s work was its inclusivity. Spinner Composer brought complex data engineering tasks within reach of non-technical teams. Product managers, analysts, and designers could now prototype and deploy data workflows without writing a single line of code.

By reducing technical barriers, Karri’s platform enabled cross-functional collaboration and dramatically improved productivity across departments. “When you give non-engineers the tools to automate their ideas,” he says, “you don’t just save developer hours, you unlock entirely new categories of innovation.”

This human-centered approach to infrastructure design reflects Karri’s broader philosophy of engineering as enablement. His systems are built not just to scale machines, but to scale people, to give teams the autonomy to experiment, iterate, and deliver value faster.

Shaping the Future of Data Systems

As a paper reviewer at the 2025 International Conference on Real World Applications of Agentic AI for Web 3.0 (ICOW3), Karri is at the forefront of discussions about the convergence of automation, AI, and large-scale data infrastructure. His insights bridge two worlds: the precision of systems engineering and the dynamism of AI-driven decision-making.

Looking ahead, he envisions a future where big data systems evolve from passive pipelines into intelligent collaborators that self-optimize and adapt to user intent. “We’re moving toward platforms that learn from the workflows they host,” he says. “Imagine a system that can predict bottlenecks before they occur, allocate compute dynamically, or even recommend better orchestration patterns based on prior performance. That’s where we’re headed.”

A Legacy of Scalable Simplicity

Karri’s contributions extend beyond code; they represent a shift in how data systems are conceived and experienced. His leadership in architecting reliable, user-friendly platforms has influenced how companies approach developer productivity, workflow automation, and infrastructure design.

In an age where data drives every decision, Surya Karri’s work reminds us that true innovation lies not just in scaling technology, but in simplifying it. His philosophy is both pragmatic and visionary: systems should be powerful enough for engineers, accessible enough for anyone, and intelligent enough to evolve with the needs of the business.

As the data landscape grows ever more complex, his message remains clear: the future of big data belongs to platforms that make the complicated effortless.