Tucked behind lines of software code and rows of curated medical imaging data, a group of engineers with roots in India is making quiet but steady gains in how health startups operate. These scientists and data professionals lead their own company, Trinzz, which builds a platform for managing biomedical data. Their focus stays fixed on a single objective: helping medical research move faster and more reliably.

What they built may not draw headlines. Yet inside labs, clinics, and academic centers, teams using their tools say time-consuming workflows are being replaced with something easier to manage, easier to trust, and easier to build on.

Handling Complexity with Technical Simplicity

The startup’s platform was built to organize and support complex biomedical imaging formats such as DICOM, the standard used by imaging systems in nearly every modern hospital, and NIfTI, a format widely used in neuroimaging research. These files contain details critical to clinical research and diagnosis. Yet because of their size and structure, they require significant preparation before machine-learning models can use them.
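To give a sense of what that preparation can involve, the sketch below shows one common step, intensity windowing, which maps raw CT values (Hounsfield units) onto a fixed grey-level range so that the tissue of interest is visible and consistently scaled. It is a simplified illustration in plain Python, not Trinzz's code: the function name `apply_window` and the window values are assumptions, and the exact windowing formula in the DICOM standard differs slightly. A real pipeline would first read pixel data with a library such as pydicom or nibabel and apply the file's rescale slope and intercept.

```python
from typing import List


def apply_window(pixels: List[List[float]], center: float, width: float) -> List[List[int]]:
    """Map raw intensities (e.g. CT Hounsfield units) to 8-bit grey levels.

    Simplified linear window (hypothetical helper, not a library API):
    values below center - width/2 become 0, values above center + width/2
    become 255, and values in between are scaled linearly.
    """
    if width <= 0:
        raise ValueError("window width must be positive")
    low = center - width / 2.0
    high = center + width / 2.0
    out: List[List[int]] = []
    for row in pixels:
        out_row: List[int] = []
        for value in row:
            clipped = min(max(value, low), high)  # clamp into the window
            out_row.append(round((clipped - low) / (high - low) * 255))
        out.append(out_row)
    return out


# Example: a soft-tissue window (center 40 HU, width 400 HU) on a tiny 2x3 slice.
slice_hu = [[-1000.0, -160.0, 140.0], [240.0, 500.0, 1000.0]]
print(apply_window(slice_hu, center=40.0, width=400.0))
# [[0, 0, 191], [255, 255, 255]]
```

Air (-1000 HU) and dense bone (1000 HU) are pushed to the ends of the range, while soft tissue near the window center keeps its contrast, which is the kind of normalization that lets a model see every scan on the same scale.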

The Indian engineers at the center of this effort created tools that can process these files automatically. Their system prepares large volumes of image data for review, labeling, and annotation, all inside a secure digital environment. Radiologists and pathologists, often working across time zones, can now evaluate scans on shared timelines without switching between platforms.

Security stays central to the system’s design. Information is encrypted in transit and at rest, and the infrastructure meets key regulatory and security standards such as HIPAA, ISO 27001, and SOC 2. These precautions make the platform viable for early-stage research trials and hospital-linked clinical teams alike.

Code, Collaboration, and Custom Workflows

Technical flexibility was a top priority for the engineers. Their platform connects to cloud storage providers such as Google Cloud Platform and Amazon Web Services, so institutions can keep working with data already stored in those environments. Uploads, imports, and updates move between teams without friction.

Developers using the system can access open APIs and SDKs, tools often buried within closed enterprise systems. These let users automate workflows, cut unnecessary steps, and keep processes consistent across multiple projects. Teams can also set quality-assurance checkpoints that verify image accuracy before files move on to labeling.
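A quality-assurance checkpoint of this kind can be sketched as a set of simple checks that each scan must pass before it enters the labeling queue. This is a hypothetical illustration, not the platform's actual API: the `ImageRecord` fields, the individual checks, and the `qa_checkpoint` function are all invented for the example.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class ImageRecord:
    """Hypothetical metadata for one scan waiting to enter labeling."""
    image_id: str
    modality: str      # e.g. "CT" or "MR"
    rows: int
    columns: int
    patient_id: str    # assumed to be de-identified upstream


# Each check returns an error message, or None when the record passes.
Check = Callable[[ImageRecord], Optional[str]]

ALLOWED_MODALITIES = {"CT", "MR"}  # assumption: this project only accepts CT and MR


def check_modality(rec: ImageRecord) -> Optional[str]:
    if rec.modality not in ALLOWED_MODALITIES:
        return f"unexpected modality {rec.modality!r}"
    return None


def check_dimensions(rec: ImageRecord) -> Optional[str]:
    if rec.rows <= 0 or rec.columns <= 0:
        return "empty or corrupt pixel grid"
    return None


def check_patient_id(rec: ImageRecord) -> Optional[str]:
    if not rec.patient_id:
        return "missing (de-identified) patient ID"
    return None


def qa_checkpoint(
    records: List[ImageRecord], checks: List[Check]
) -> Tuple[List[ImageRecord], List[Tuple[str, List[str]]]]:
    """Split records into those cleared for labeling and those held for review."""
    passed: List[ImageRecord] = []
    held: List[Tuple[str, List[str]]] = []
    for rec in records:
        # Run every check so reviewers see all problems at once, not just the first.
        errors = [msg for msg in (check(rec) for check in checks) if msg is not None]
        if errors:
            held.append((rec.image_id, errors))
        else:
            passed.append(rec)
    return passed, held


batch = [
    ImageRecord("img-001", "CT", 512, 512, "anon-17"),
    ImageRecord("img-002", "US", 480, 640, "anon-18"),
    ImageRecord("img-003", "MR", 0, 256, ""),
]
ready, held = qa_checkpoint(batch, [check_modality, check_dimensions, check_patient_id])
print([r.image_id for r in ready])
for image_id, errors in held:
    print(image_id, errors)
```

Keeping failed records together with their reasons, rather than discarding them, is what makes it possible to see where revisions are needed before a scan reaches annotators.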

Tracking features within the platform show where delays happen and where revisions are needed. For researchers building models that depend on thousands of consistently labeled images, these checkpoints become a necessary part of delivering trusted results.

A Team That Keeps Projects on Track

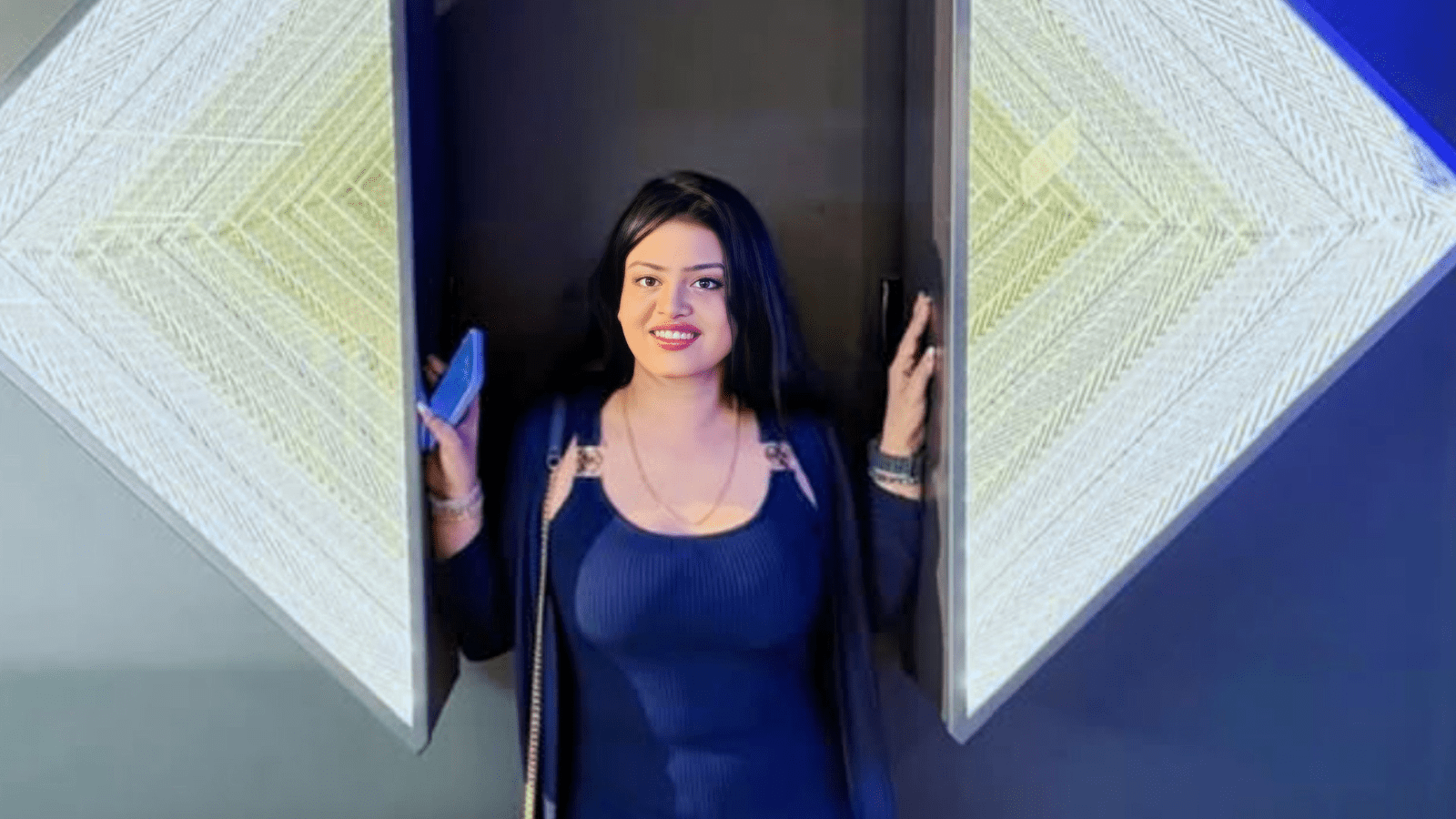

The technical leadership behind this platform includes engineers trained in fields like visual computing, biomedical informatics, and annotation design. Their previous work spans clinical research labs, early-stage ventures, and cross-country medical collaborations. Although the team is now based in Silicon Valley, many of its members began their careers in India, where they studied technologies that many hospitals now rely on.

The team also works directly with certified medical image reviewers, including radiologists and pathologists, who bring essential clinical knowledge to the process. This collaboration helps ensure that final datasets meet scientific standards while staying grounded in real-world clinical use.

Having tools is one thing. Making those tools flexible enough for hospitals and research groups of different sizes is another. By offering structure where teams usually face bottlenecks, this platform does more than speed up tasks; it makes the process more reliable at every point of the workflow.

The engineers leading this effort rarely speak publicly, preferring to let usage and outcomes make the case for their platform. Still, the effect of their work shows in how medical teams report progress. For health researchers juggling complex, high-volume projects, these tools may continue to play an essential role in moving data faster without sacrificing accuracy where it matters most.