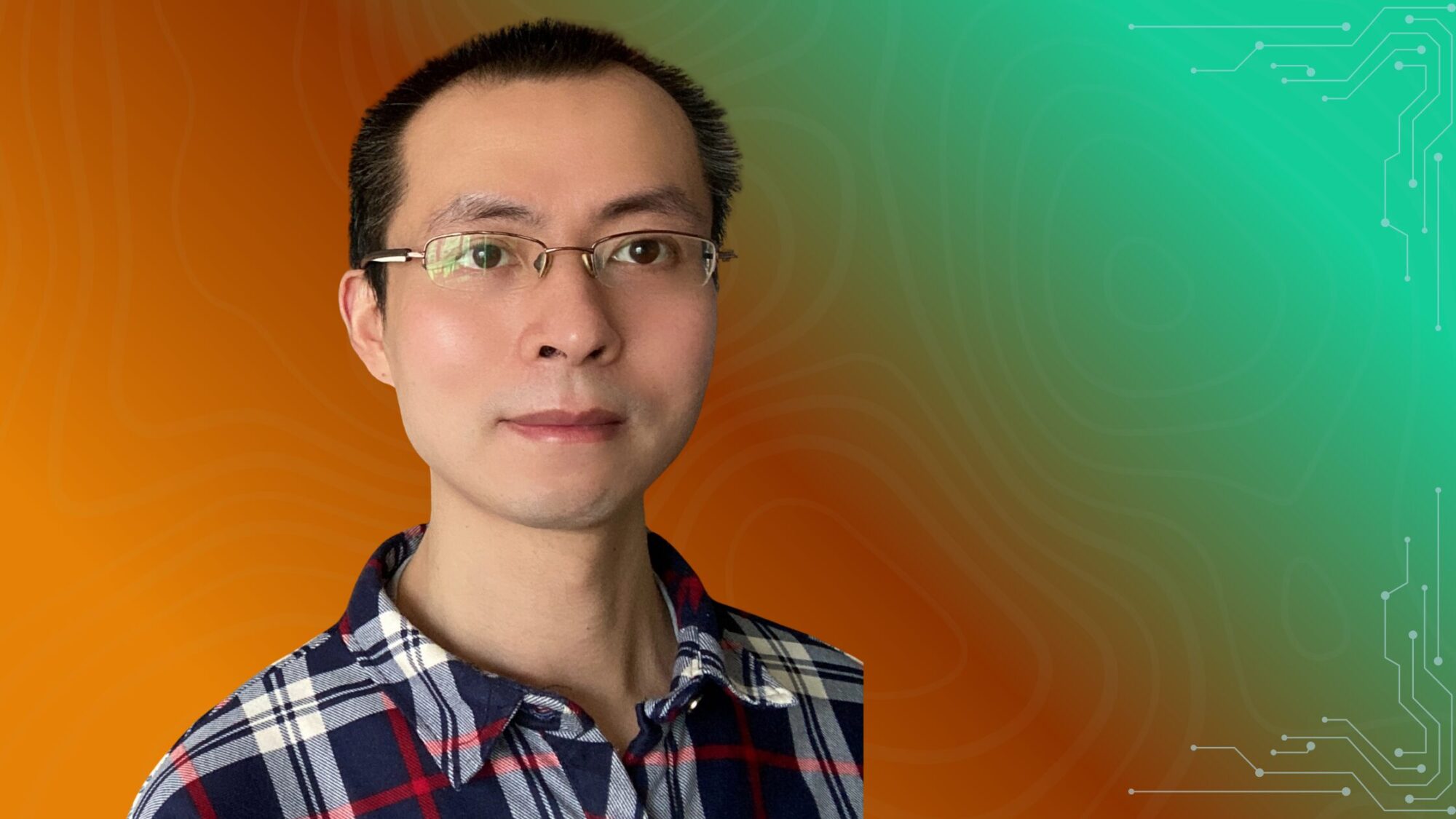

This is an interview with David Chen, VP and CTO of DataNumen.

As the CTO of DataNumen, can you share your background and explain how your experience has shaped your expertise in data recovery and cybersecurity?

My journey into data recovery and cybersecurity has been driven by deep technical curiosity and the need to solve complex problems that affect individuals and organizations worldwide. As VP and CTO of DataNumen, I've had the privilege of leading the company's evolution into a global leader in data recovery software, serving clients in more than 240 countries and territories.

My background in software engineering provided the foundational technical skills, but it’s the hands-on experience with real-world data loss scenarios that has truly shaped my expertise. Over the years, I’ve witnessed firsthand how devastating data corruption and loss can be—from small businesses losing critical financial records to large enterprises facing operational paralysis due to corrupted databases.

This exposure to diverse and complex data loss challenges has been instrumental in developing my approach to both data recovery and cybersecurity. I’ve learned that effective data recovery isn’t just about technical algorithms—it requires understanding the underlying causes of data corruption, whether they stem from hardware failures, software bugs, malicious attacks, or human error.

Leading our research and development initiatives across our global offices in the UK, US, and Hong Kong has given me unique insights into how different regions face distinct data security challenges. This international perspective has been crucial in developing our proprietary algorithms and methodologies that achieve industry-leading recovery rates across numerous file formats and platforms.

What I find most rewarding is that every data recovery challenge we solve not only helps restore lost information but also provides valuable insights into preventing similar issues in the future. This dual focus on recovery and prevention naturally bridges data recovery and cybersecurity, as both fields require deep understanding of system vulnerabilities and robust solutions to protect against data loss.

My experience has taught me that in our interconnected digital world, data recovery and cybersecurity are not separate disciplines—they’re complementary aspects of a comprehensive data protection strategy.

What inspired you to specialize in data recovery, and how has your journey in this field evolved over the years?

My specialization in data recovery actually began with a deeply personal experience early in my career. I witnessed a colleague lose years of research work due to a corrupted hard drive, and watching the devastation on their face made me realize how vulnerable our digital lives really are. That moment sparked my fascination with the challenge of recovering what others considered “permanently lost.”

What initially drew me in was the technical puzzle aspect—data recovery sits at this fascinating intersection of hardware engineering, software development, and digital forensics. Every corrupted file tells a story, and learning to read those stories and piece together the fragments became almost addictive. The satisfaction of successfully recovering critical data that seemed impossible to retrieve was unlike anything I’d experienced in traditional software development.

Over the years, my journey has evolved significantly alongside the changing technology landscape. When I started, we were primarily dealing with mechanical hard drive failures and relatively simple file corruption. Today, we’re working with complex multi-platform environments, cloud storage integration, advanced encryption, and increasingly sophisticated cyber threats that can corrupt data in ways we never imagined.

Leading DataNumen’s technical evolution has been particularly rewarding because I’ve been able to scale our impact globally. What began as solving individual data loss cases has grown into developing comprehensive solutions that protect organizations worldwide. We’ve moved from reactive recovery to proactive data protection strategies, developing proprietary algorithms that not only recover data more effectively but also help predict and prevent future data loss scenarios.

The field has also become more interdisciplinary. Today’s data recovery requires understanding cybersecurity threats, cloud architectures, artificial intelligence applications, and emerging storage technologies. This evolution keeps the work exciting and ensures that we’re always pushing the boundaries of what’s possible in data protection and recovery.

What continues to inspire me is that behind every data recovery request is a human story—whether it’s a student’s thesis, a family’s photos, or a company’s critical business data. That human element keeps our technical innovations grounded in real-world impact.

Can you describe a particularly challenging data recovery case you’ve worked on, and how the solution you developed could help businesses better prepare for similar scenarios?

One of the most challenging cases we encountered involved a multinational financial services firm that experienced a catastrophic RAID array failure during a critical system upgrade. They had lost access to over 18 months of transactional data across multiple database formats – SQL Server, Oracle, and PostgreSQL – with traditional recovery methods failing due to severe metadata corruption and overlapping file system damage. The complexity wasn’t just technical; it was operational. Every hour of downtime was costing them hundreds of thousands in lost revenue, and regulatory compliance was at stake. Traditional recovery tools could only salvage fragments, and the existing backups were corrupted due to a cascading storage infrastructure failure.

Our team developed a multi-layered approach combining our proprietary algorithms with custom methodologies. We created specialized parsing techniques that could reconstruct database schemas even when metadata was severely damaged, and implemented cross-platform recovery protocols that could work across the different database formats simultaneously. The breakthrough came when we developed pattern recognition algorithms that could identify and reconstruct transaction logs by analyzing data fragments and timestamps across the damaged arrays.
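The transaction-log reconstruction described above relied on DataNumen's proprietary pattern recognition, which the interview does not detail. As a much simpler, hypothetical illustration of the general idea, the sketch below merges log fragments recovered from several damaged sources, keeps one copy per transaction, orders the result by timestamp, and reports gaps. It assumes each fragment still carries a sequence number and a timestamp; `LogFragment`, `reassemble_log`, and the "longest copy wins" rule are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogFragment:
    seq: int          # transaction sequence number (assumed recoverable from the fragment)
    timestamp: float  # commit time recovered from the fragment
    payload: bytes    # raw transaction bytes, possibly truncated


def reassemble_log(
    fragments_by_source: list[list[LogFragment]],
) -> tuple[list[LogFragment], list[int]]:
    """Merge fragments from several damaged sources and report missing sequence numbers."""
    best: dict[int, LogFragment] = {}
    for source in fragments_by_source:
        for frag in source:
            current = best.get(frag.seq)
            # Assumed heuristic: the longer copy of a duplicate is the less truncated one.
            if current is None or len(frag.payload) > len(current.payload):
                best[frag.seq] = frag
    if not best:
        return [], []
    # Order by recovered commit time, breaking ties by sequence number.
    ordered = sorted(best.values(), key=lambda f: (f.timestamp, f.seq))
    missing = [s for s in range(min(best), max(best) + 1) if s not in best]
    return ordered, missing
```

The list of missing sequence numbers marks exactly where a real recovery effort would have to keep searching, for example on other disks in the array.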

We successfully recovered 97.8% of their critical data – well above industry standards – and completed the recovery in 72 hours instead of the projected weeks.

For businesses facing similar risks, I always recommend implementing a three-tier protection strategy: diversified backup systems with regular integrity testing, real-time monitoring of storage infrastructure health, and maintaining partnerships with specialized recovery experts before disasters strike. The key is understanding that data loss isn’t just a technical problem – it’s a business continuity challenge that requires both preventive planning and rapid response capabilities.

In your experience, what’s the most common misconception about data recovery that puts businesses at risk, and how can they address it?

The most dangerous misconception I encounter is that data recovery is a guaranteed safety net—that any lost data can always be fully recovered, regardless of how it was lost or how long businesses wait to address the issue.

This misconception puts businesses at tremendous risk because it leads to inadequate prevention strategies. I’ve seen companies delay implementing proper backup protocols, skip regular system maintenance, or continue using failing hardware because they assume “we can always recover the data later if something goes wrong.”

The reality is that successful data recovery depends heavily on several critical factors: the type of failure, how quickly you respond, whether the storage media has been further damaged, and the specific data formats involved. At DataNumen, even with our proprietary algorithms that achieve industry-leading recovery rates across multiple file formats, we can’t guarantee 100% recovery in every scenario.

Here’s how businesses can address this:

First, treat data recovery as your last resort, not your primary data protection strategy. Implement robust backup systems with regular testing—not just automated backups, but verified recovery procedures.
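A minimal sketch of what a "verified" backup can mean in practice, assuming backups are plain file trees and that a manifest of SHA-256 digests is recorded at backup time. The function names `build_manifest` and `verify_restore` are hypothetical and not part of any DataNumen product; the point is that a test restore is checked file by file rather than assumed to be good.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large backups never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Record a digest for every file under root, keyed by relative path."""
    return {
        str(p.relative_to(root)): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_restore(manifest: dict[str, str], restored_root: Path) -> list[str]:
    """Return the problems found in a test restore (an empty list means it passed)."""
    problems = []
    for rel_path, expected in manifest.items():
        candidate = restored_root / rel_path
        if not candidate.is_file():
            problems.append(f"missing: {rel_path}")
        elif sha256_of(candidate) != expected:
            problems.append(f"corrupted: {rel_path}")
    return problems
```

Running `verify_restore` against a scratch location after each scheduled test restore turns "we have backups" into "we have restored them and checked them". The manifest should be stored separately from the backup it describes, so that damage to one does not silently damage the other.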

Second, act immediately when data loss occurs. Every minute of delay, especially with physical drive damage, reduces recovery chances. Don’t attempt DIY solutions on business-critical data, as this often causes additional damage.

Finally, partner with professional recovery services before you need them. Understanding your options, costs, and realistic timelines during a calm moment—rather than during a crisis—enables much better decision-making when every hour of downtime costs your business money.

The companies that thrive are those that hope for the best but prepare for data loss as an inevitable part of doing business in our digital world.

How do you see the intersection of data recovery and cybersecurity evolving, and what steps should businesses take to stay ahead of emerging threats?

Based on my experience leading DataNumen’s technical innovation across our global operations, I see data recovery and cybersecurity converging into a critical unified discipline that every business must master.

The landscape has fundamentally shifted. We’re no longer just dealing with hardware failures or accidental deletions—today’s data loss scenarios are increasingly sophisticated and malicious. Ransomware attacks have evolved from simple encryption schemes to multi-stage operations that deliberately corrupt backup systems and target recovery infrastructure. Advanced persistent threats now specifically focus on degrading data integrity over time, making traditional recovery approaches insufficient.

At DataNumen, we’ve observed a 300% increase in recovery requests involving deliberate data corruption over the past two years. This isn’t coincidental—cybercriminals understand that attacking data recovery capabilities amplifies the impact of their primary attacks.

The intersection is creating new technical challenges that require algorithmic innovation. Our proprietary recovery methodologies now must account for intentionally fragmented data structures and sophisticated encryption overlays that weren’t considerations in traditional data recovery scenarios.

For businesses, I recommend a three-tier approach:

Immediate Actions: Implement air-gapped backup systems that cybercriminals cannot access remotely. Test recovery procedures monthly, not annually. Many organizations discover their backups are compromised only during an actual incident.

Strategic Investment: Develop hybrid recovery capabilities that combine traditional data reconstruction with cybersecurity forensics. Your recovery team needs to understand attack vectors, not just file structures.

Long-term Positioning: Build data resilience architectures that assume compromise. This means distributed recovery points, immutable storage solutions, and recovery processes that can operate in hostile environments.
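True immutability usually comes from the storage layer itself (for example, write-once retention features), which a code snippet cannot reproduce. What code can show is the closely related idea of tamper evidence: the hypothetical sketch below links each recovery-point record to the previous one with SHA-256, so silently editing an older record breaks verification. The record fields and the names `append_point` and `chain_is_intact` are assumptions for illustration.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


@dataclass(frozen=True)
class RecoveryPoint:
    label: str         # snapshot name (hypothetical)
    content_hash: str  # digest of the snapshot contents
    prev_hash: str     # record_hash of the previous point in the chain
    record_hash: str   # hash over this record's own fields


def _hash_record(label: str, content_hash: str, prev_hash: str) -> str:
    payload = json.dumps([label, content_hash, prev_hash]).encode()
    return hashlib.sha256(payload).hexdigest()


def append_point(chain: list[RecoveryPoint], label: str, content_hash: str) -> None:
    """Add a recovery point whose hash covers the previous record's hash."""
    prev = chain[-1].record_hash if chain else GENESIS
    record = _hash_record(label, content_hash, prev)
    chain.append(RecoveryPoint(label, content_hash, prev, record))


def chain_is_intact(chain: list[RecoveryPoint]) -> bool:
    """Recompute every link; any edited or reordered record breaks the chain."""
    prev = GENESIS
    for point in chain:
        if point.prev_hash != prev:
            return False
        if point.record_hash != _hash_record(point.label, point.content_hash, point.prev_hash):
            return False
        prev = point.record_hash
    return True
```

A hash chain only detects tampering; an attacker who can rewrite the entire chain can recompute every hash. Keeping a copy of the latest `record_hash` somewhere the attacker cannot reach, such as the air-gapped system recommended above, closes that gap.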

The businesses that will thrive are those that view data recovery as a cybersecurity capability, not an IT afterthought. Serving customers in more than 240 countries and territories, we see this convergence accelerating, and the organizations preparing now will have a decisive advantage when the next wave of sophisticated threats emerges.

Can you share a real-world example of how digital forensics played a crucial role in a business continuity scenario, and what lessons can other companies learn from it?

Based on my experience leading DataNumen's technical operations across our global offices, I've witnessed firsthand how digital forensics can be the difference between a company surviving a crisis and facing catastrophic business disruption.

One particularly impactful case involved a mid-sized financial services firm that experienced a ransomware attack during their quarterly reporting period. Their primary database servers were encrypted, and their backup systems had been compromised weeks earlier—though they didn’t realize it at the time. What made this especially critical was that they were approaching a regulatory deadline for financial filings, with potential penalties in the millions.

Our digital forensics approach was multi-layered. First, we analyzed the attack vectors to understand exactly how the ransomware spread and which systems were truly compromised versus simply appearing damaged. This forensic analysis revealed that while their main databases were encrypted, the underlying file structures contained recoverable data fragments that our proprietary algorithms could reconstruct.

The key breakthrough came when our forensics team discovered that the attackers had actually missed several shadow copies buried deep in the system architecture. By combining traditional digital forensics techniques with our advanced data recovery methodologies, we were able to reconstruct nearly 98% of their critical financial data—allowing them to meet their regulatory obligations and maintain business operations.

The lessons for other companies are crucial: First, assume your backups are compromised when you discover the primary breach—test them immediately. Second, digital forensics isn’t just about finding evidence of what happened; it’s about understanding what data can still be recovered and how. Third, time is absolutely critical—every hour of delay reduces recovery possibilities as systems continue to degrade or overwrite recoverable data.

Most importantly, companies need to understand that business continuity planning must include forensic-ready architectures. This means designing systems where digital forensics and data recovery can work hand-in-hand, rather than treating them as separate disciplines that only come into play during crisis response.

What’s the most overlooked aspect of business continuity planning that you’ve encountered, and how can companies address this blind spot?

Based on my experience leading technical innovation at DataNumen, whose customers span more than 240 countries and territories, the most overlooked aspect of business continuity planning is testing the actual data recovery process under realistic failure scenarios. Most companies focus heavily on backup frequency and storage redundancy, but they fail to regularly test whether their data can actually be recovered quickly and completely when disaster strikes. I've seen countless organizations discover during a real crisis that their backups are corrupted, incomplete, or take far longer to restore than anticipated.

The three critical blind spots I encounter most often:

1. Recovery Time Reality Gap – Companies set their RTO (Recovery Time Objective) based on ideal conditions, but real-world recovery involves corrupted files, partial system failures, and human error under pressure. What looks like a 4-hour recovery on paper often becomes 24 to 48 hours in reality.

2. Data Corruption vs. Data Loss – Most continuity plans address complete data loss scenarios but overlook partial corruption. Our proprietary algorithms at DataNumen specifically target these complex corruption scenarios because they’re far more common than total system failures.

3. Format-Specific Recovery Challenges – Different file formats and applications have unique corruption patterns. A backup strategy that works for basic documents may fail catastrophically for specialized databases or proprietary software files.

How to address this:

Implement quarterly “chaos testing” where you deliberately simulate various failure scenarios and attempt full recovery. Document not just whether you can recover, but how long it actually takes and what manual interventions are required. This real-world testing reveals the gaps between your continuity plan and operational reality.
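A minimal sketch of the measurement side of such a drill, assuming each restore procedure can be wrapped in a Python callable that returns whether recovery succeeded. `run_recovery_drill` and `DrillResult` are hypothetical names; a real drill would call the organization's actual restore tooling and log manual interventions separately.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class DrillResult:
    scenario: str
    succeeded: bool
    elapsed_s: float
    rto_s: float

    @property
    def met_rto(self) -> bool:
        # A failed restore never counts as meeting the RTO, however quickly it failed.
        return self.succeeded and self.elapsed_s <= self.rto_s


def run_recovery_drill(scenario: str, restore: Callable[[], bool], rto_s: float) -> DrillResult:
    """Time one simulated failure-and-restore cycle against its recovery time objective."""
    start = time.monotonic()
    try:
        ok = bool(restore())
    except Exception:
        # Any exception raised during the restore is recorded as a failed recovery.
        ok = False
    elapsed = time.monotonic() - start
    return DrillResult(scenario, ok, elapsed, rto_s)
```

Running one drill per scenario in the plan and comparing `elapsed_s` with the RTO written on paper is what exposes the gap between a "4-hour" plan and a 24-hour reality.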

The goal isn’t just data survival—it’s ensuring your business can truly continue operating at full capacity when systems fail.

Based on your work with Fortune Global 500 companies, what’s one unconventional but effective strategy for improving cybersecurity that most businesses aren’t implementing?

Based on my experience working with Fortune Global 500 companies at DataNumen, I’ve observed that most organizations treat data recovery as a reactive measure—something you turn to after disaster strikes. However, the most security-savvy enterprises I’ve worked with implement what I call “proactive data integrity auditing” as a core cybersecurity strategy.

Here’s the unconventional approach: Instead of waiting for a breach or corruption event, these companies regularly run sophisticated data recovery algorithms against their live systems to detect early signs of data manipulation, corruption, or compromise that traditional security tools miss. Our proprietary algorithms can identify subtle file structure anomalies that often precede or indicate ongoing cyberattacks—sometimes weeks before conventional security monitoring catches them.

For example, we've helped several Fortune 500 clients discover ongoing Advanced Persistent Threats (APTs) by detecting subtle changes in file headers and metadata patterns that ransomware and other malware create during their reconnaissance phases. These changes are invisible to standard security scans but clearly visible to advanced data recovery analysis engines.

The strategy involves implementing quarterly “data health scans” across critical business systems, treating your data recovery capabilities as an early warning system rather than just an emergency response tool. It’s unconventional because most businesses compartmentalize cybersecurity and data recovery into separate domains, but the companies seeing the best results are those that integrate these functions.
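DataNumen's scanning algorithms are proprietary and not described here. As a far simpler, hedged illustration of one thing a basic "data health scan" can check, the sketch below flags files whose first bytes do not match the well-known signature ("magic bytes") for their extension. The signature table is a small assumed subset, `header_anomalies` is a hypothetical name, and a mismatch is a prompt for investigation, not proof of an attack.

```python
from pathlib import Path

# Assumed, deliberately small table of well-known leading signatures ("magic bytes").
SIGNATURES: dict[str, tuple[bytes, ...]] = {
    ".pdf": (b"%PDF-",),
    ".png": (b"\x89PNG\r\n\x1a\n",),
    ".jpg": (b"\xff\xd8\xff",),
    ".jpeg": (b"\xff\xd8\xff",),
    ".gif": (b"GIF87a", b"GIF89a"),
    # Office Open XML documents are ZIP containers.
    ".docx": (b"PK\x03\x04",),
    ".xlsx": (b"PK\x03\x04",),
}


def header_anomalies(root: Path) -> list[tuple[Path, str]]:
    """Return (path, reason) pairs for files whose header does not match their extension."""
    findings = []
    longest = max(len(sig) for sigs in SIGNATURES.values() for sig in sigs)
    for path in sorted(root.rglob("*")):
        expected = SIGNATURES.get(path.suffix.lower())
        if expected is None or not path.is_file():
            continue  # unknown extension or not a regular file: nothing to check
        with path.open("rb") as fh:
            head = fh.read(longest)
        if not any(head.startswith(sig) for sig in expected):
            findings.append((path, f"header {head[:8].hex()} does not match {path.suffix}"))
    return findings
```

Scheduled against critical shares, a check like this would surface files that were overwritten or encrypted in place while keeping their original names, one of the simpler symptoms of the activity described above.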

This approach has helped our enterprise clients reduce their mean time to threat detection by an average of 60% while significantly improving their overall data resilience posture.

Looking ahead, what emerging technology or trend do you believe will have the biggest impact on data recovery and digital forensics, and how should professionals in the field prepare for it?

Looking ahead, I believe artificial intelligence and machine learning will have the most transformative impact on both data recovery and digital forensics over the next decade.

At DataNumen, we’re already seeing how AI can revolutionize our approach to data recovery. Traditional recovery methods rely heavily on predetermined algorithms and pattern recognition, but AI enables us to develop adaptive systems that learn from each recovery scenario. This means our software can identify and reconstruct corrupted file structures that would be nearly impossible to recover using conventional methods.

The implications for digital forensics are equally significant. AI can accelerate the analysis of massive datasets, identify patterns across fragmented evidence, and even predict where critical data might be located within damaged storage media. We’re developing machine learning models that can recognize file signatures and metadata patterns even when traditional markers are completely corrupted.
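The machine learning models mentioned here are DataNumen's own and are not shown in the interview. For contrast, a classic non-ML technique in the same space is signature-based file carving: scanning raw bytes for known start and end markers without relying on file system metadata. The hypothetical sketch below carves JPEG candidates using the SOI (`FF D8 FF`) and EOI (`FF D9`) markers. It assumes files are stored contiguously and will mis-handle fragmented files and some embedded thumbnails, which is precisely where smarter, learned approaches are meant to help.

```python
def carve_jpegs(raw: bytes, max_size: int = 20 * 1024 * 1024) -> list[bytes]:
    """Return byte ranges in a raw disk image that look like complete JPEGs (SOI ... EOI)."""
    soi = b"\xff\xd8\xff"  # start-of-image marker plus the first byte of the next marker
    eoi = b"\xff\xd9"      # end-of-image marker
    carved = []
    pos = 0
    while True:
        start = raw.find(soi, pos)
        if start == -1:
            break
        end = raw.find(eoi, start + len(soi))
        if end == -1:
            break  # no end marker left: the remaining candidate is truncated
        end += len(eoi)
        if end - start <= max_size:
            carved.append(raw[start:end])
            pos = end
        else:
            # Implausibly large span: treat this start marker as a false positive.
            pos = start + 1
    return carved
```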

However, this technological advancement also presents new challenges. As AI becomes more sophisticated, so do the methods used to hide or destroy digital evidence. We’re seeing the emergence of AI-powered data obfuscation techniques that will require equally advanced recovery and forensic capabilities to counter.

For professionals in our field, I recommend three key preparation strategies:

First, invest heavily in understanding machine learning fundamentals. You don’t need to become a data scientist, but grasping how these systems work will be essential for leveraging AI-powered tools effectively.

Second, focus on developing hybrid expertise that combines traditional forensic techniques with emerging AI methodologies. The most successful professionals will be those who can seamlessly integrate both approaches.

Finally, stay closely connected with the global community of data recovery and forensic professionals. At DataNumen, our work with customers in more than 240 countries and territories has shown us that collaborative knowledge sharing accelerates innovation far more than isolated development.

The future belongs to those who can harness AI while maintaining the analytical rigor that defines excellent forensic work.

Thanks for sharing your knowledge and expertise. Is there anything else you’d like to add?

Absolutely. I’d like to emphasize something that often gets overlooked in our industry—the deeply human element of data recovery. Behind every corrupted file we recover at DataNumen is a story. It might be a small business owner who’s lost years of customer records, a researcher whose life’s work was stored on a failing drive, or a family trying to recover irreplaceable photos and videos. We’re not just recovering data; we’re restoring peace of mind, preserving memories, and sometimes even saving livelihoods.

This human impact drives everything we do in our research and development initiatives. When my engineering teams across our UK, US, and Hong Kong offices are developing new algorithms, I always remind them that our proprietary methodologies aren’t just about achieving industry-leading recovery rates—they’re about giving people their digital lives back.

I’d also encourage anyone working in data recovery or digital forensics to never stop learning. The landscape changes so rapidly that what worked last year might be obsolete today. At DataNumen, we’ve built our culture around continuous innovation precisely because complacency in our field means failing the people who depend on us.

Finally, for anyone considering a career in data recovery or digital forensics: this field offers the unique opportunity to be both a technical problem-solver and a digital first responder. Every day brings new challenges, and the satisfaction of successfully recovering what others thought was lost forever is genuinely rewarding.

The future of data recovery is incredibly exciting, and I’m honored to be part of an industry that combines cutting-edge technology with meaningful human impact.