Introduction

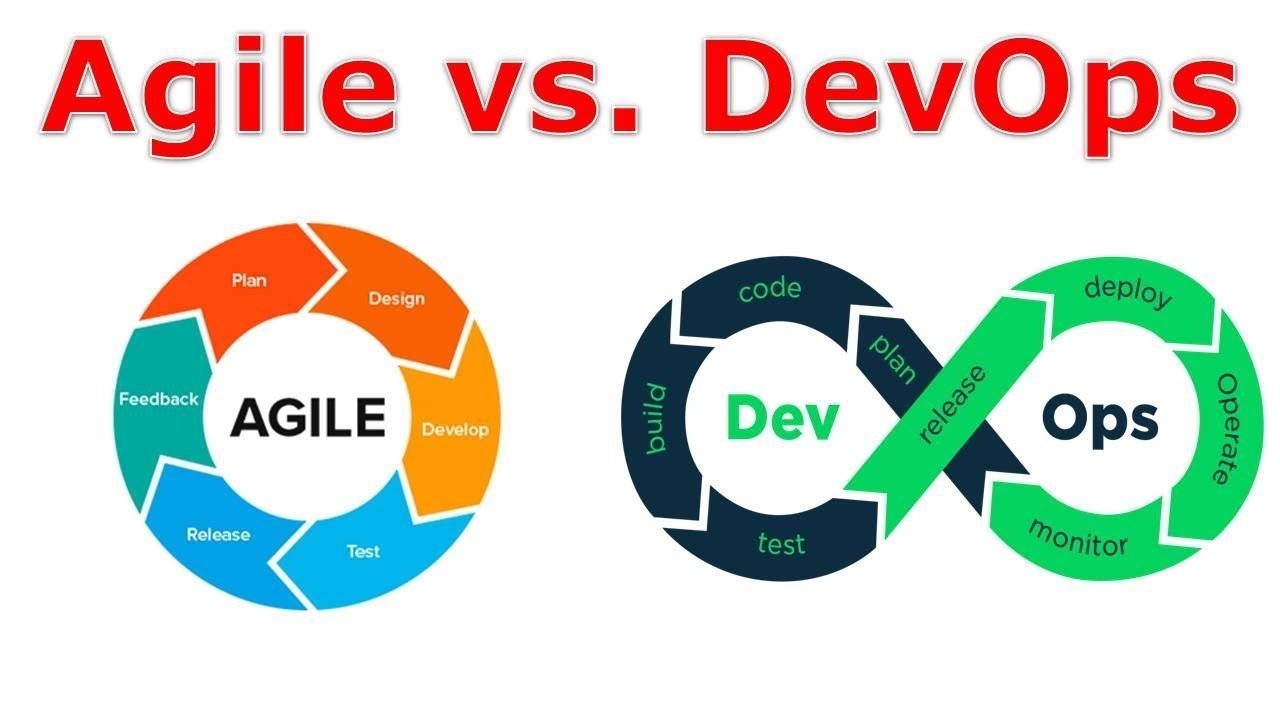

In today’s era of digital transformation, Software Quality Assurance (SQA) is evolving beyond code correctness and test coverage. It now encompasses usability, human-centered design, and the integration of real-world user behavior into the development lifecycle. With the spread of Agile and DevOps practices, usability testing, once treated as a separate phase, is becoming a core part of modern quality engineering.

The Human Element in Software Quality Assurance (SQA)

Traditional QA focused heavily on system functionality, often missing the critical human element. But as software becomes increasingly user-centric—especially in high-stakes sectors like fintech, healthcare, and IoT—testing must account for how humans interact with systems.

Incorporating human factors means testing not just how software works, but how it feels to use. Usability testing evaluates effectiveness (task completion and error rates), efficiency, and user satisfaction, helping teams deliver applications that are intuitive and accessible. This shift matters especially for smart systems and cybersecurity, where a confusing interface can lead users to misconfigure settings or work around security controls, directly weakening the system's protection and effectiveness.
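The metrics named above can be computed directly from test-session records. The sketch below assumes a hypothetical per-session record (`Session`) holding a completion flag, time on task, an error count, and a satisfaction rating on an assumed 1 to 5 scale; the field names and scale are illustrative and not taken from any particular tool.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Session:
    """Hypothetical record of one participant attempting one task."""
    completed: bool      # did the participant finish the task?
    seconds: float       # time on task
    errors: int          # slips or wrong turns observed during the task
    satisfaction: int    # post-task rating on an assumed 1-5 scale


def usability_summary(sessions: list[Session]) -> dict[str, float]:
    """Summarize effectiveness, efficiency, error rate, and satisfaction."""
    if not sessions:
        raise ValueError("need at least one session")
    successes = [s for s in sessions if s.completed]
    return {
        # Effectiveness: share of attempts that ended in task completion
        "completion_rate": len(successes) / len(sessions),
        # Efficiency: mean time on task, counted over successful attempts only
        "mean_time_success": (
            mean(s.seconds for s in successes) if successes else float("nan")
        ),
        "errors_per_session": sum(s.errors for s in sessions) / len(sessions),
        "mean_satisfaction": mean(s.satisfaction for s in sessions),
    }


if __name__ == "__main__":
    runs = [
        Session(True, 40.0, 0, 5),
        Session(True, 60.0, 2, 4),
        Session(False, 120.0, 3, 2),
        Session(True, 50.0, 1, 4),
    ]
    print(usability_summary(runs))
```

Counting time on task only for successful attempts is one common convention; failed attempts often end early or are abandoned, which would otherwise distort the efficiency figure.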

Usability Testing in Agile QA Strategies

Agile QA strategies embrace iterative development, with testing embedded in every sprint. Usability feedback can therefore be folded in continuously: short feedback loops allow rapid refinements, reducing costly rework and improving product quality.

Early user feedback sessions, A/B testing, and scenario-based evaluations are vital tools. They show how people actually interact with the product, and the behavioral data they produce can also feed intelligent testing systems that adapt to observed usage patterns and metrics.
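For A/B testing, a common first check is whether a design change moved the task-completion rate by more than chance would explain. This sketch uses a standard two-proportion z-test, which relies on the normal approximation and so assumes reasonably large samples; the counts in the usage block are made up for illustration.

```python
import math


def two_proportion_z_test(success_a: int, n_a: int,
                          success_b: int, n_b: int) -> tuple[float, float]:
    """Compare task-completion rates of variants A and B.

    Returns (z, two-sided p-value) under H0: both variants share one completion rate.
    """
    if min(n_a, n_b) <= 0:
        raise ValueError("each variant needs at least one participant")
    p_a, p_b = success_a / n_a, success_b / n_b
    # Pooled rate under the null hypothesis
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("all or none of the participants succeeded; test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Illustrative numbers only: 120/400 completions for A, 156/400 for B
    z, p = two_proportion_z_test(120, 400, 156, 400)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference is unlikely to be noise, but it says nothing about why users behaved differently; pairing the test with scenario-based sessions helps explain the result.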

DevOps and Continuous Testing with Human Focus

DevOps and continuous testing practices aim to accelerate delivery cycles without compromising quality. However, the drive for speed must not eclipse the need for user satisfaction. Embedding usability checks into DevOps pipelines allows automated validation of user flows, accessibility compliance, and real-time experience metrics.
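One accessibility check that fits naturally into a pipeline is text contrast. The sketch below implements the WCAG 2.x relative-luminance and contrast-ratio formulas and applies the AA threshold for normal-size text. How colour pairs are extracted from the rendered UI (for example from style sheets or a headless browser) is left out, since that depends on your tooling.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light, per the WCAG 2.x definition."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    # Order does not matter: the lighter colour always goes in the numerator
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa_normal_text(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> bool:
    # WCAG success criterion 1.4.3 (AA) asks for at least 4.5:1 for normal-size text
    return contrast_ratio(fg, bg) >= 4.5


if __name__ == "__main__":
    white = (255, 255, 255)
    for grey in (0x76, 0x77):
        colour = (grey, grey, grey)
        verdict = "pass" if passes_aa_normal_text(colour, white) else "fail"
        print(f"#{grey:02x}{grey:02x}{grey:02x} on white:",
              round(contrast_ratio(colour, white), 2), verdict)
```

The two greys in the usage block differ by a single step per channel yet land on opposite sides of the 4.5:1 line, which is why an automated check is more reliable than eyeballing a design.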

When combined with AI-powered testing and machine learning, DevOps pipelines can even help anticipate usability issues. For instance, predictive analytics can flag potential bottlenecks in UI flows based on historical user interactions or session logs.
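Predictive models need labelled history to learn from, but a simpler descriptive check already catches many flow bottlenecks: count how many sessions survive each step of an intended flow and flag steps where the drop-off exceeds a threshold. The sketch below is that non-ML heuristic, not a predictive model. The session-log format (an ordered list of step names per session), the step names, and the 30% threshold are all assumptions.

```python
from collections import Counter


def flag_bottlenecks(sessions, funnel, max_drop=0.3):
    """Return (from_step, to_step, drop_rate) for transitions losing more than max_drop.

    sessions: iterable of step-name lists, one per session (hypothetical log format).
    funnel:   ordered list of step names describing the intended user flow.
    """
    reached = Counter()
    for steps in sessions:
        visited = set(steps)
        # A session counts at a step only if it also reached every earlier step
        for step in funnel:
            if step not in visited:
                break
            reached[step] += 1

    flagged = []
    for prev, nxt in zip(funnel, funnel[1:]):
        if reached[prev] == 0:
            continue  # nobody got this far, so the drop rate is undefined
        drop = 1 - reached[nxt] / reached[prev]
        if drop > max_drop:
            flagged.append((prev, nxt, round(drop, 3)))
    return flagged


if __name__ == "__main__":
    flow = ["login", "search", "details", "submit"]
    logs = [
        ["login", "search", "details", "submit"],
        ["login", "search", "details", "submit"],
        ["login", "search", "details"],
        ["login", "search", "details"],
        ["login", "search"],
        ["login"],
    ]
    print(flag_bottlenecks(logs, flow))
```

Historical output from a check like this is also the kind of labelled data a predictive model could later be trained on.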

Role of AI, ML, and Data Science in Usability Testing

Modern QA uses data science to turn user data into actionable insights. In software engineering, AI supports automated heuristic evaluations, natural language processing (NLP) of user feedback, and sentiment analysis of user interactions.
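Production systems typically use trained language models for this, but the basic idea of scoring free-text feedback can be shown with a toy lexicon-based scorer. The word lists below are hypothetical and far too small for real use; the sketch only illustrates the mechanics of tokenizing, scoring polarity, and handling simple negation so that negative comments can be triaged first.

```python
import re

# Hypothetical, deliberately tiny lexicons; real systems use trained models
# or curated lexicons with thousands of weighted entries.
POSITIVE = {"easy", "fast", "clear", "intuitive", "love", "helpful"}
NEGATIVE = {"confusing", "slow", "broken", "hard", "crash", "frustrating"}
NEGATORS = {"not", "never", "no"}


def sentiment_score(text: str) -> int:
    """Positive words add 1, negative words subtract 1; a preceding negator flips the sign."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, tok in enumerate(tokens):
        polarity = int(tok in POSITIVE) - int(tok in NEGATIVE)
        if polarity and i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity  # e.g. "not clear" counts as negative
        score += polarity
    return score


def triage(comments: list[str]) -> list[str]:
    """Return comments sorted from most negative to most positive."""
    return sorted(comments, key=sentiment_score)


if __name__ == "__main__":
    feedback = [
        "Search is fast and intuitive",
        "The upload form is confusing and slow",
        "The error message is not clear",
    ]
    for comment in triage(feedback):
        print(sentiment_score(comment), comment)
```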

Machine learning models help detect common user errors, adapt interfaces, and support predictive maintenance, preventing small usability defects from escalating into systemic issues. AI-powered testing can also auto-generate test cases from real user behavior, making test coverage more representative of actual usage.
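A simple, non-ML way to ground generated tests in real behavior is to mine the most common navigation paths from session logs and promote them to end-to-end scenarios. This sketch assumes each session is logged as an ordered list of page names, and it uses a frequency threshold (`min_support`, a hypothetical parameter) to filter out one-off journeys. An ML-based generator would go further, but it would start from the same kind of data.

```python
from collections import Counter
from itertools import groupby


def normalize(path: list[str]) -> tuple[str, ...]:
    """Collapse consecutive repeats (e.g. page reloads) so they do not fragment the counts."""
    return tuple(page for page, _ in groupby(path))


def candidate_test_paths(sessions, top_n=3, min_support=2):
    """Return up to top_n (path, count) pairs seen in at least min_support sessions."""
    counts = Counter(normalize(s) for s in sessions)
    return [(list(path), n) for path, n in counts.most_common(top_n) if n >= min_support]


if __name__ == "__main__":
    logs = [
        ["home", "search", "item", "cart"],
        ["home", "search", "search", "item", "cart"],
        ["home", "help"],
        ["home", "search", "item", "cart"],
        ["home", "help"],
        ["home", "account"],
    ]
    for path, n in candidate_test_paths(logs):
        print(n, " -> ".join(path))
```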

Next-Gen Testing Tools and Self-Healing Automation

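Self-healing automation tools are changing how QA teams maintain test scripts by letting those scripts adapt to UI changes without manual intervention. Instead of relying on a single object identifier, these tools use several element attributes and the surrounding interface context, so a script can still find the right element after its identifier changes. This keeps automated suites running across the SDLC with far less maintenance.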

When combined with Java-based or other open-source testing frameworks, these adaptive tools pair the scripting power of a mature framework with locators that recover from UI changes, helping QA teams stay productive even in fast-changing environments.
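The core idea can be sketched as an ordered list of fallback locators: if the primary identifier no longer matches, the test tries alternative attributes and logs the substitution for later review. This is a deliberately simplified stand-in; commercial self-healing tools typically score candidate elements on many attributes at once. The `Element` type, the `find`/`heal_find` helpers, and the in-memory page are hypothetical placeholders for a real browser-automation API.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Element:
    """Hypothetical stand-in for a UI node; a real tool would query the live DOM."""
    tag: str
    attrs: dict[str, str] = field(default_factory=dict)
    text: str = ""


def find(page: list[Element], attr: str, value: str) -> Optional[Element]:
    """Return the first element whose attribute (or visible text) equals value."""
    for el in page:
        actual = el.text if attr == "text" else el.attrs.get(attr)
        if actual == value:
            return el
    return None


def heal_find(page: list[Element], locators: list[tuple[str, str]], log: list[str]) -> Element:
    """Try (attr, value) locators in priority order; log when a fallback is used."""
    primary = locators[0]
    for attr, value in locators:
        el = find(page, attr, value)
        if el is not None:
            if (attr, value) != primary:
                log.append(f"healed: {primary} -> {(attr, value)}")
            return el
    raise LookupError(f"no locator matched: {locators}")


if __name__ == "__main__":
    # After a release, the button's id changed from "submit-btn" to "btn-submit-v2"
    page = [
        Element("input", {"id": "email"}),
        Element("button", {"id": "btn-submit-v2", "name": "submit"}, "Submit"),
    ]
    locators = [("id", "submit-btn"), ("name", "submit"), ("text", "Submit")]
    healing_log: list[str] = []
    button = heal_find(page, locators, healing_log)
    print(button.attrs["id"], healing_log)
```

Logging each substitution matters: a healed locator keeps the run green, but someone should still confirm that the underlying UI change was intentional.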

Cloud-Native QA and Low-Code/No-Code Testing Platforms

With distributed teams and globally dispersed user bases, cloud-native QA platforms are essential. They offer capabilities like remote usability testing, real-time bug tracking, and cross-platform compatibility assessments at scale.

At the same time, low-code/no-code testing platforms empower non-technical team members—such as UX designers or product managers—to contribute directly to usability testing. This democratizes QA and accelerates development cycles while maintaining test integrity.

Scalable Usability Testing for Enterprise Software

Enterprise systems serve diverse user roles and require scalable QA strategies. Usability testing must span access levels, workflows, device types, and accessibility standards. Frameworks designed for scale often include synthetic monitoring, heatmaps, and session replay tools.

Heatmaps and session replays show how real users behave across enterprise environments, while synthetic monitoring continuously exercises key workflows to catch regressions before users encounter them. These insights help reduce onboarding time, streamline workflows, and improve user productivity, all critical factors in domains like fintech, healthcare, and industrial IoT.
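At its core, a click heatmap is a two-dimensional histogram. The sketch below bins click coordinates into a coarse grid. It assumes coordinates have already been normalized to a fixed viewport size (real tools also have to reconcile different screen sizes and scroll positions), and the grid dimensions are arbitrary choices.

```python
def click_heatmap(clicks, width, height, cols=4, rows=3):
    """Count clicks per grid cell; grid[row][col], with row 0 at the top of the viewport."""
    grid = [[0] * cols for _ in range(rows)]
    for x, y in clicks:
        if not (0 <= x < width and 0 <= y < height):
            continue  # ignore clicks outside the viewport
        col = int(x * cols / width)
        row = int(y * rows / height)
        grid[row][col] += 1
    return grid


def hottest_cell(grid):
    """Return (row, col) of the cell with the most clicks (the first one wins on ties)."""
    return max(
        ((r, c) for r in range(len(grid)) for c in range(len(grid[0]))),
        key=lambda rc: grid[rc[0]][rc[1]],
    )


if __name__ == "__main__":
    clicks = [(10, 10), (20, 30), (150, 150), (399, 299), (500, 10)]
    heat = click_heatmap(clicks, width=400, height=300)
    for row in heat:
        print(row)
    print("hottest cell:", hottest_cell(heat))
```

Comparing grids across user roles or device types is one way to spot workflows where different groups struggle in different places.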

Case Study: Usability in High-Impact Public Sector Applications

Usability isn’t just important for commercial software—it’s critical in public service applications as well. In high-impact government or nonprofit initiatives, software systems must be intuitive and reliable to ensure that users can complete essential tasks under time-sensitive or stressful conditions.

In such scenarios, usability directly influences equity, accessibility, and public trust. QA teams must design testing strategies that reflect real-world user behavior, including diverse user personas, language barriers, and varying levels of digital literacy. For instance, test scenarios might simulate applicants navigating complex eligibility requirements or submitting sensitive data under pressure.
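One way to make persona coverage explicit is to cross every persona with every critical task, so that no combination is silently skipped. The personas, attributes, and scenario names below are hypothetical examples based on the situations described above, not a prescribed set. For large matrices, teams often switch to pairwise selection to keep the number of runs manageable.

```python
from itertools import product

# Hypothetical personas reflecting language, digital-literacy, and device differences
PERSONAS = [
    {"name": "first-time applicant", "language": "es", "digital_literacy": "low", "device": "mobile"},
    {"name": "returning applicant", "language": "en", "digital_literacy": "high", "device": "desktop"},
    {"name": "screen-reader user", "language": "en", "digital_literacy": "medium", "device": "desktop"},
]

# Hypothetical critical tasks for a public-service application portal
SCENARIOS = [
    "check eligibility",
    "submit sensitive documents",
    "resume an interrupted application",
]


def build_test_matrix(personas, scenarios):
    """Cross every persona with every scenario, producing one test-case record each."""
    matrix = []
    for persona, scenario in product(personas, scenarios):
        case = {"scenario": scenario, **persona}
        case["id"] = f"{persona['name']} / {scenario}"
        matrix.append(case)
    return matrix


if __name__ == "__main__":
    for case in build_test_matrix(PERSONAS, SCENARIOS):
        print(case["id"], "|", case["language"], case["device"])
```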

These projects often require comprehensive usability testing that includes stress testing, accessibility audits, and scenario-based walkthroughs to ensure every user, regardless of background or device, can successfully engage with the platform.

Conclusion: The Future of Human Factors in QA

As software ecosystems grow more intelligent and interconnected, human factors in QA will no longer be optional; they will be essential. The fusion of usability testing, AI, automation, and Agile/DevOps frameworks represents a transformative approach to software quality assurance.

Organizations that embrace intelligent testing systems, cloud-native QA, and self-healing automation will not only build better software—they will create better user experiences. In this age of rapid innovation, testing for usability is testing for success.

About the Author

Daniel Raj Jeevaguntala is an internationally recognized expert in software quality assurance and AI-driven test automation, and a distinguished IEEE Senior Member. With a career spanning over 18 years—including 10 years in India and 8 years in the United States—he has delivered impactful innovations in both global and government technology ecosystems.

Daniel is known for pioneering self-healing automation frameworks, advancing predictive maintenance testing through machine learning, and leading cybersecurity testing for cloud and IoT-based systems. He has played critical leadership roles in mission-driven IT projects, mentored over 40 professionals, and influenced enterprise-wide QA strategies. He also serves as a Hackathon Judge, IEEE Conference Session Chair, and peer reviewer and editor for reputed journals. His work continues to shape the future of intelligent software testing and quality engineering on a global scale.