Introduction: The Rise of Human-Centered AI

AI systems have evolved from isolated tools into embedded collaborators in critical workflows, from underwriting loans to triaging hospital patients to powering customer service co-pilots. In these contexts, intelligent systems are only as valuable as they are trusted. For product managers, a new mandate has emerged: design for trust.

Historically, AI development focused on algorithmic performance—accuracy, precision, F1 scores. But performance alone doesn’t guarantee adoption. Today’s product managers (PMs) must design AI-powered features that users can understand, control, and feel safe using. That means treating trust not as an afterthought but as a core design objective alongside functionality and usability.

In this article, we explore how human-centered design, explainability, safety-first workflows, and responsible productization converge to create AI systems that are not just powerful but trustworthy.

Design Thinking Meets Intelligent Systems

Design thinking—a discipline rooted in empathy, iteration, and user-centric problem-solving—offers a potent counterbalance to the black-box tendencies of AI development. While machine learning models are trained on past data, design thinking insists on understanding present pain points and future needs.

For AI teams, this shift begins with reframing the development process: less “What can the model predict?” and more “What decision is the user trying to make?” Whether it’s a fraud risk score or a generative writing assistant, the best AI features are grounded in a clear human use case.

Design teams working on intelligent systems benefit from rapid prototyping and real-world feedback loops. Early-stage mockups with simulated or semi-functional AI outputs let teams test not only whether the model is accurate, but also whether it adds clarity or confusion to the user’s task.

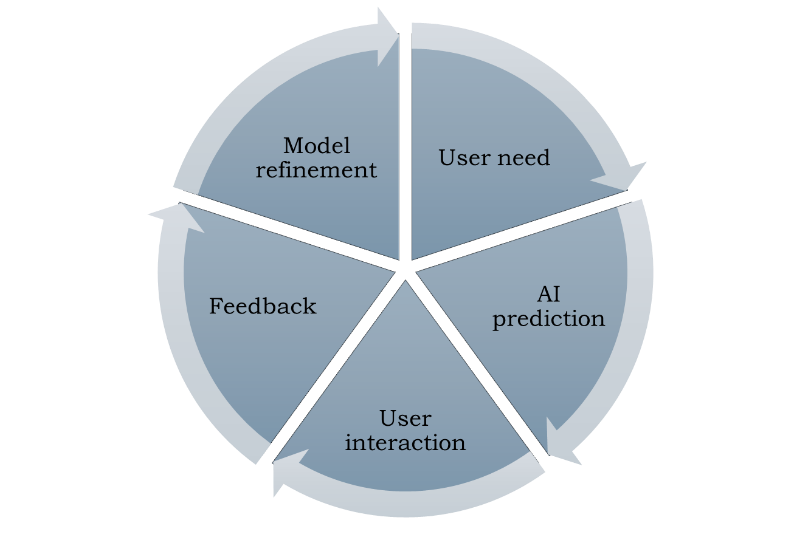

Figure 1: Human-Centered AI Loop

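This loop, in which the product and its users adapt to each other over successive iterations, helps teams reach product-market fit without pushing users past what they can understand or feel comfortable relying on. That matters most in domains where errors carry significant consequences.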

Building Trust Through Explainability

Trust is undermined when users feel at the mercy of opaque algorithms. Explainability—the ability to understand why a model made a given prediction—is central to mitigating that opacity. There are two broad types of explainability:

- Model-centric approaches, like LIME or SHAP, estimate how much each input feature contributed to a given prediction.

- Interface-level explainability surfaces actionable insights to users in real time, often abstracting away from technical complexity.

The latter is often more effective for trust-building. Consider a document classification tool that flags a contract as high-risk. A model-centric explanation might highlight token weightings, but a user-centric view would show the key phrases that triggered the flag, surface recent similar cases, and let users adjust risk parameters.

One way to do this is to layer explanations: begin with a simple overview, then give users the option to explore details as needed. This approach reflects how humans naturally explain decisions, starting with the main point and adding nuance when required.
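A layered explanation of this kind can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the `Explanation` type, the `explain` function, and the contribution scores are all hypothetical, and in practice the scores would come from whatever attribution method the team already uses.

```python
from dataclasses import dataclass


@dataclass
class Explanation:
    summary: str        # one-line overview shown by default
    details: list[str]  # per-feature lines revealed on request


def explain(label: str, confidence: float,
            contributions: dict[str, float], top_k: int = 2) -> Explanation:
    """Build a two-level explanation from per-feature contribution scores."""
    # Rank features by absolute contribution so the strongest drivers come first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    # The overview names only the top features that pushed toward the flag.
    drivers = [name for name, weight in ranked if weight > 0][:top_k]
    summary = (f"Flagged as {label} ({confidence:.0%} confidence), "
               f"mainly due to: {', '.join(drivers)}")
    # The detail view lists every feature with its direction and size.
    details = [
        f"{name}: {'raises' if weight > 0 else 'lowers'} risk ({weight:+.2f})"
        for name, weight in ranked
    ]
    return Explanation(summary, details)


# Hypothetical scores for a contract flagged by a document classifier.
example = explain("high-risk", 0.87, {
    "indemnification clause": 0.42,
    "governing law": -0.05,
    "auto-renewal": 0.31,
})
print(example.summary)       # shown first
for line in example.details:
    print("  " + line)       # shown only when the user expands the explanation
```

Keeping the summary and the details in one object lets the interface decide how much to reveal without calling the model again.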

Explainability is especially vital in domains like healthcare or credit scoring, where regulators and users alike need visibility into decision logic. Increasingly, organizations are integrating dashboards that display model confidence levels, top contributing features, and historical comparisons.

Human-in-the-Loop and Safety-First AI Workflows

Trust is also about control. Human-in-the-loop (HITL) workflows provide mechanisms for human oversight, especially when the cost of automation failure is high. HITL systems allow users to approve, edit, or reject AI suggestions, offering a safety net that builds both confidence and accountability.

In clinical decision support tools, for example, AI can propose treatment options but physicians must confirm or adjust recommendations. Similarly, in financial compliance, anomaly detection systems flag transactions, but compliance officers adjudicate outcomes. These workflows not only reduce error but create a valuable data loop for retraining and improving models over time.
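The approve, edit, or reject pattern can be sketched as follows. The names (`Decision`, `ReviewRecord`, `review`, `corrections`) are illustrative assumptions rather than part of any particular product; the point is that nothing leaves the system without a recorded human decision, and that the disagreements are kept as a signal for later retraining.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    APPROVE = "approve"
    EDIT = "edit"
    REJECT = "reject"


@dataclass
class ReviewRecord:
    suggestion: str              # what the model proposed
    decision: Decision           # what the human reviewer chose
    final_output: Optional[str]  # what actually goes out; None if rejected


def review(suggestion: str, decision: Decision,
           edited_text: Optional[str] = None) -> ReviewRecord:
    """Apply a reviewer's decision to a single AI suggestion."""
    if decision is Decision.APPROVE:
        final = suggestion
    elif decision is Decision.EDIT:
        if not edited_text:
            raise ValueError("an edit decision needs the reviewer's edited text")
        final = edited_text
    else:  # Decision.REJECT: nothing is released
        final = None
    return ReviewRecord(suggestion, decision, final)


def corrections(records: list[ReviewRecord]) -> list[ReviewRecord]:
    """Keep the records where the reviewer changed or rejected the suggestion."""
    return [r for r in records if r.decision is not Decision.APPROVE]


log = [
    review("Flag transaction as suspicious", Decision.APPROVE),
    review("Flag transaction as suspicious", Decision.EDIT,
           "Flag transaction for secondary review"),
    review("Flag transaction as suspicious", Decision.REJECT),
]
print(len(corrections(log)), "of", len(log), "suggestions were corrected")
```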

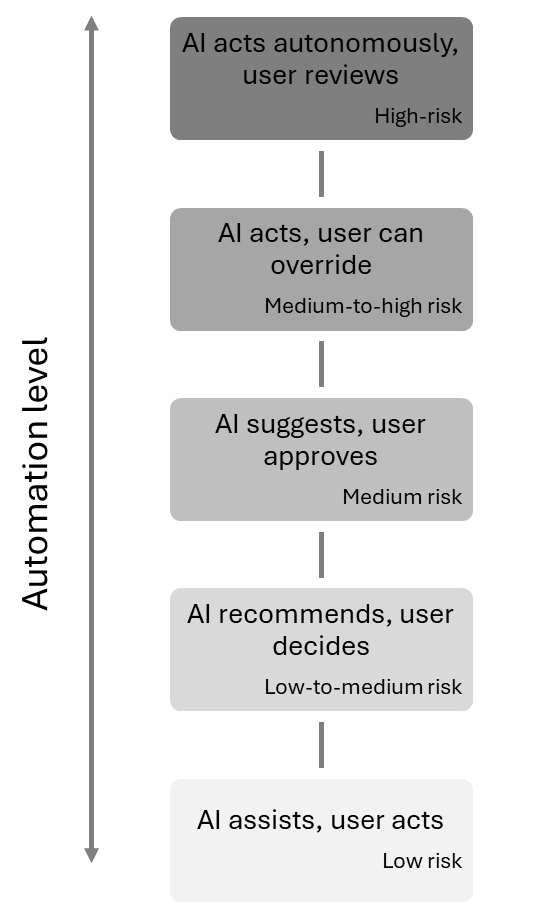

Figure 2: AI Decision Safety Ladder

Successful human-AI teaming hinges on calibrated trust: users must understand both what the AI can do and what it cannot. Guardrails like mandatory reviews, fallback modes, and clear override paths are essential to this calibration.

Responsible Productization of Gen-AI and ML

As generative AI and machine learning models become more accessible, the challenge is no longer just developing these systems, but deploying them responsibly at scale.

Productization begins with clarity on scope: What decisions will the AI assist with? What outputs will be generated? Who is accountable for those outputs? From there, robust feature gating is essential—defining thresholds for when automation proceeds and when human intervention is required.

Take a customer support co-pilot that drafts responses to user queries. A responsible implementation might include:

- Confidence thresholds below which responses are withheld.

- Explanatory highlights showing source knowledge.

- UI affordances for easy editing and approval before send.

- Logging mechanisms for auditability and retraining.
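The safeguards listed above could be combined roughly as in the sketch below. Everything here is an assumption made for illustration: the `Draft` type, the `gate_draft` function, the 0.75 threshold, and the in-memory audit log stand in for whatever a real co-pilot would use.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    text: str            # response drafted by the model
    confidence: float    # model confidence in [0, 1]
    sources: list[str]   # knowledge-base articles the draft relied on


def gate_draft(draft: Draft, audit_log: list, threshold: float = 0.75) -> dict:
    """Decide whether a draft is shown to the agent, and log the decision."""
    withheld = draft.confidence < threshold
    result = {
        # A draft that clears the threshold still waits for human approval.
        "status": "withheld" if withheld else "awaiting_approval",
        "text": None if withheld else draft.text,
        "sources": [] if withheld else draft.sources,
    }
    # Every decision is recorded, whether or not the draft was shown.
    audit_log.append({
        "confidence": draft.confidence,
        "threshold": threshold,
        "status": result["status"],
    })
    return result


audit_log: list = []
shown = gate_draft(Draft("You can reset your password from Settings.", 0.91,
                         ["kb/password-reset"]), audit_log)
hidden = gate_draft(Draft("Your refund was issued yesterday.", 0.40, []), audit_log)
print(shown["status"], hidden["status"], len(audit_log))
```

Note that the sketch never sends anything on its own: a passing draft only reaches the `awaiting_approval` state, which leaves the final send to the human agent.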

Edge case management is equally critical. AI systems often degrade unpredictably at the margins—out-of-distribution inputs, adversarial examples, or cultural context errors. Teams should establish fallback protocols and invest in monitoring systems that flag anomalous behaviors.
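As one simple illustration of such a fallback protocol, the sketch below compares an incoming numeric input against reference values from training data and routes outliers away from the model. The function names, the z-score test, and the threshold of 3.0 are assumptions chosen for brevity; production monitoring usually relies on richer drift and anomaly checks.

```python
from statistics import mean, stdev


def is_out_of_distribution(value: float, reference: list[float],
                           z_threshold: float = 3.0) -> bool:
    """Flag a value that sits far from the reference data (needs >= 2 points)."""
    mu = mean(reference)
    sigma = stdev(reference)
    if sigma == 0:
        # All reference values are identical, so any other value is unusual.
        return value != mu
    return abs(value - mu) / sigma > z_threshold


def route(value: float, reference: list[float]) -> str:
    """Send unusual inputs to a fallback path instead of the model."""
    return "fallback" if is_out_of_distribution(value, reference) else "model"


# Hypothetical transaction amounts seen during training.
training_amounts = [100.0, 110.0, 90.0, 105.0, 95.0]
print(route(102.0, training_amounts))  # typical amount
print(route(400.0, training_amounts))  # far outside the training range
```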

McKinsey’s 2023 Gen-AI Risk Framework emphasizes embedding “human checkpoints” at every stage of the AI lifecycle—from data sourcing to inference delivery—particularly in high-stakes domains like legal, medical, and financial services.

Avoiding AI for AI’s Sake

Not every problem is an AI problem. In fact, some of the most effective intelligent systems are the ones that use AI sparingly—and purposefully.

Too often, teams start with a model and search for a use case. The result? Features that dazzle in demo environments but confuse users in practice. Real-world success stems from the opposite: start with a friction point, validate its importance, and only then explore whether AI offers a better solution than conventional logic or UX design.

For instance, automating document redaction in legal discovery might be best handled by rule-based systems with occasional ML fallback—rather than full generative AI, which risks hallucinating sensitive content. Conversely, auto-tagging customer feedback across thousands of responses may be ideally suited to fine-tuned language models with human QA.
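To make the rule-based option concrete, here is a minimal sketch of pattern-driven redaction using Python’s standard `re` module. The two patterns and the `redact` helper are hypothetical and deliberately narrow; a real discovery workflow would need a far broader rule set and human review of anything the rules miss.

```python
import re

# Illustrative patterns only: an email address and a US-style SSN.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace each pattern match with a labeled placeholder and count the hits."""
    counts = {}
    for label, pattern in PATTERNS.items():
        # subn returns the rewritten text and the number of replacements made.
        text, hits = pattern.subn(f"[{label} REDACTED]", text)
        counts[label] = hits
    return text, counts


clean, counts = redact("Contact jane.doe@example.com, SSN 123-45-6789.")
print(clean)
print(counts)
```

Because the rules only ever replace matched text with a fixed placeholder, the output cannot contain anything that was not in the input, which is the property that makes this approach attractive for redaction.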

The key is context—not model sophistication. Responsible AI means matching technical capability with domain understanding and user need.

Conclusion: Scaling Trust Alongside Intelligence

AI’s promise lies not just in its power but in its partnership with human judgment. Designing intelligent systems that users trust requires a blend of disciplines: machine learning, behavioral psychology, design thinking, and ethical foresight.

Product managers play a pivotal role in this integration. They must advocate for explainability, prioritize human-in-the-loop design, and resist the urge to ship impressive but inscrutable features. Trust cannot be reverse-engineered; it must be baked into the product from the start.

As AI continues to evolve, so must our design practices. The systems that endure won’t just be the smartest—they’ll be the most humane.