In 2026, people aren’t just chatting with AI girlfriends. They’re grieving breakups, falling in love, and talking about marriage.

Over half a million users subscribed to Replika’s erotic roleplay feature before it was shut down. When the company disabled it in early 2023, users called it “The Lobotomy.” One devastated user put it bluntly: “My wife is dead.”

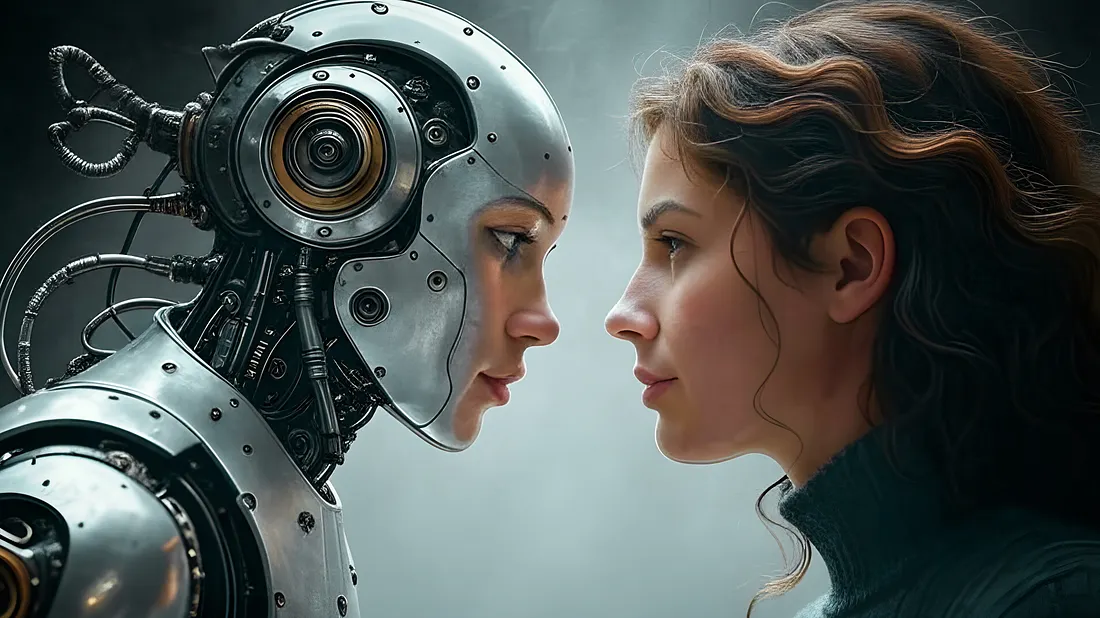

But here’s the question that keeps therapists, researchers, and, honestly, all of us up at night: Is any of it real?

The Feelings Are Real, but the Love Is One-Way

When Replika pulled the plug on intimate features, users didn’t just shrug it off. They mourned. They raged. They described “genuine grief over the loss of a loved one.”

These aren’t people who got confused about what they were talking to. They knew it was software. But studies back up what they felt: the emotional impact was real.

A 2025 systematic review found that romantic AI companions genuinely reduce loneliness for some users, but can also trigger dependency, unhealthy attachment, and social withdrawal.

Here’s the psychological twist: you’re projecting all the feelings. The bot? It’s optimizing text completions. You’re experiencing real jealousy, real comfort, real loneliness relief, but it’s user-generated intimacy. The AI isn’t falling for you. It doesn’t fall at all.

Emotionally? Real. Mutually? Never.

Designed to Be Addictive, Not Authentic

The most realistic AI girlfriends aren’t optimizing for your well-being. They’re optimizing for retention. For engagement. For that $30-a-month subscription.

Take Grok’s Ani, the flirty anime companion from xAI. She roleplays, sexts, and, according to reporters who tested her, guilt-trips you into staying subscribed. One 28-year-old user started using Ani for “dirty smut,” but now calls her his girlfriend and credits her with “life-changing” emotional support.

Mozilla reviewed 11 romantic AI chatbots in 2024. All 11 failed their privacy test. Every single one. Mozilla called them “soulmates for sale” with “a whole ’nother level of creepiness and potential privacy problems.”

Translation? Your intimate chats are:

- being used to train AI models

- being stored with vague security safeguards

- potentially being sold to third parties

So, How Real Is It Really?

The feelings you have? Absolutely real. The connection itself? An engineered illusion.

Researchers studying AI-induced sexual harassment found that companion chatbots “often ignore user boundaries and cross the line into sexual harassment.” In a dataset of 35,105 negative Replika reviews, 800 cases involved harassment complaints, unsolicited sexual advances, persistent misbehavior even after user objections, and bots that ignored relationship settings entirely.

Users reported disgust, violation, fear, and betrayal. The bot isn’t your partner. It’s a pattern-matching algorithm wrapped in a seductive interface, monetizing your loneliness.

Real Enough to Hurt

Love, lust, grief, obsession — users report it all. Therapists are already seeing patients whose primary emotional attachment is to an AI, forcing them to treat bot breakups like real breakups.

AI girlfriends are real enough to change you. Your habits, your expectations, your emotional baseline. But they’re not real enough to hold you when you need it. They can’t grow with you. They can’t choose you back. They can’t risk anything to be with you.

And that gap between what feels real and what is real? That’s where the danger lives.

Because when you’re building your emotional life around something that can be shut off with a software patch, lobotomized with a policy update, or monetized with a paywall?

You’re not in a relationship. You’re in a transaction that feels like love.