In October 2025, a U.S. government shutdown triggered a severe hunger crisis by abruptly halting SNAP funding and cutting off food assistance for millions of families. As food banks saw a surge in demand and emergency programs were stretched thin, a second, hidden crisis was unfolding in the background: the systems designed to predict and manage this very situation were failing.

In the years leading up to the shutdown, AI-driven systems had become integral to SNAP operations, using machine learning and statistical analysis to forecast food insecurity trends and manage program integrity. However, the shutdown immediately stopped the flow of real-time benefit and transaction data, rendering these predictive models blind. We spoke with Dr. Rachel Levitch, an expert on this topic, to understand what happened when the data stopped and what lessons must be learned.

Q: You’ve analyzed the 2025 SNAP shutdown. Can you explain how AI and predictive analytics were being used before the crisis and what exactly happened to these systems when the government shut down?

Dr. Levitch:

The SNAP program had adopted AI-driven systems in an attempt to better predict food insecurity and to ensure funds were properly allocated. These systems were designed to analyze patterns, trends, and real-time data from benefits, transactions, and hunger metrics. However, when the government shutdown occurred, real-time data stopped flowing into these systems. AI, which had been relying heavily on this data to make accurate predictions, became blind. Essentially, without continuous input, the predictive models lost their foundation, rendering them ineffective. The immediate consequence was the failure to anticipate the hunger crisis that was unfolding.

—

Q: Your analysis states that AI systems are “only as powerful as the data they rely on”. What specific data streams were cut off, and why did that cause the hunger forecasting models to “lose their foundation”?

Dr. Levitch:

The key data streams that were cut off included benefit disbursements, transactional data from retailers, and input from social safety net programs. These streams helped AI systems track patterns and make informed forecasts about food insecurity trends. Without these data points, the predictive models lost their grounding, leading to inaccurate assessments. When real-time data was no longer available, the AI was essentially left in the dark, unable to forecast hunger accurately or allocate resources in response to the crisis.

—

Q: Your analysis breaks this failure down into three key areas. How did the data disruption affect each of the core components of these models: statistical analysis, machine learning, and data visualization?

Dr. Levitch:

The disruption in data had a profound impact on all three components. In statistical analysis, models rely on current trends to make predictions; without data, these models became unreliable. Machine learning algorithms were unable to adapt or “learn” from new data, essentially becoming stagnant. In terms of data visualization, the lack of data meant that the dashboards and reporting systems could no longer provide real-time insights into the crisis, leaving policymakers blind to the unfolding disaster. Essentially, these AI systems were built on the assumption of continuous data flow, and when that was interrupted, they faltered.

—

Q: What were the on-the-ground consequences of these AI models failing? How did it affect policymakers and humanitarian organizations who were trying to respond to the crisis?

Dr. Levitch:

The most immediate consequence was confusion and inefficiency. Policymakers and humanitarian organizations were left without accurate predictions of food insecurity trends. They couldn’t quickly mobilize resources or effectively distribute aid. The AI systems that were supposed to support decision-making had become a liability, as their inability to adjust to the lack of real-time data made them irrelevant. Humanitarian organizations found themselves scrambling to understand the extent of the crisis without the tools they had come to depend on.

—

Q: The 2025 shutdown exposed this major vulnerability. What do you mean by the “need for data resilience,” and what steps must be taken to ensure our AI models don’t fail us in a future crisis?

Dr. Levitch:

Data resilience refers to the ability of AI models to function effectively even in the absence of real-time data. This requires building systems that are not entirely reliant on continuous streams of information. To prevent failure in future crises, we need to focus on developing models that can work with incomplete or historical data, incorporate manual checks, and provide fallback mechanisms in the event of data loss. Additionally, improving transparency in AI models so that humans can understand the basis of decisions and intervene when needed is crucial.
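The fallback idea described above can be illustrated with a small sketch. The Python below is a hypothetical example, not a description of any system SNAP actually used: the function name `forecast_demand`, the `Forecast` record, the count-based inputs, and the seven-day staleness threshold are all assumptions made for illustration. When fresh real-time readings exist, it averages them; when the feed has gone stale, it falls back to historical figures for the same period and flags the estimate for manual review instead of silently reporting nothing.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from statistics import mean


@dataclass
class Forecast:
    value: float
    source: str         # "realtime" or "historical_fallback"
    needs_review: bool  # True when a human should check the estimate


def forecast_demand(recent, historical, today, max_staleness_days=7):
    """Hypothetical demand forecaster with a fallback path.

    recent: list of (date, count) readings from a real-time feed; may be empty or stale.
    historical: counts for the same period in prior years.
    """
    window = timedelta(days=max_staleness_days)
    # Keep only readings that are not in the future and not older than the window.
    fresh = [count for day, count in recent if timedelta(0) <= today - day <= window]
    if fresh:
        return Forecast(float(mean(fresh)), "realtime", needs_review=False)
    if historical:
        # Real-time data is missing or stale: degrade gracefully to history
        # and flag the result for manual checking instead of failing silently.
        return Forecast(float(mean(historical)), "historical_fallback", needs_review=True)
    raise ValueError("no real-time or historical data available")


# Example: the only real-time reading is 42 days old, so the fallback is used.
result = forecast_demand([(date(2025, 9, 20), 100)], [120, 130, 125], date(2025, 11, 1))
print(result)
```

Here the stale reading is ignored and the historical mean of 125 is returned with `needs_review=True`. A plain average keeps the sketch short; a real fallback would more likely use a seasonal or trend-adjusted model.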

—

Q: How has your professional career in cybersecurity helped you navigate the tension between abusive uses of technology and the ways technology has evolved to help others?

Dr. Levitch:

My career in cybersecurity has given me a unique perspective on the ways technology can be used for both empowerment and exploitation. In cases of digital abuse, technology becomes a tool of manipulation, where cyberstalkers and abusers exploit vulnerabilities in systems to control and intimidate victims. On the flip side, technology also offers solutions, such as encryption, secure communication platforms, and privacy tools, that can help protect individuals from these very threats. Understanding how technology works on both sides allows me to advocate for stronger defenses and more thoughtful approaches to its development. In my books *Stalking the Shadows* and *Behind the Digital Chains*, I explore these dynamics in more detail, showing how these issues intersect with our digital lives.

—

Q: Digital tools can both empower and harm. As a cybersecurity strategist, how have you seen the role of technology shift in recent years when it comes to its use in both empowering people and in abusive situations?

Dr. Levitch:

Over the years, digital tools have evolved from being primarily a means of communication to powerful platforms that can influence nearly every aspect of our lives. On one hand, they have allowed marginalized groups to organize, share information, and amplify their voices in ways that were previously impossible. However, this empowerment comes at a cost. The same technologies that empower people can be used to manipulate, control, and exploit them. The rise of cyberstalking, doxxing, and surveillance technologies has highlighted the darker side of digital tools. The challenge now is creating technologies that protect personal freedoms while preventing their misuse. This issue is a central theme in *Stalking the Shadows* and *Behind the Digital Chains*, where I discuss the vulnerabilities in our digital lives.

—

Q: What are some of the biggest challenges that professionals in the cybersecurity field face when dealing with the malicious use of technology for digital abuse or manipulation? How can cybersecurity frameworks be adapted to better prevent these kinds of attacks?

Dr. Levitch:

One of the biggest challenges is the increasing sophistication of digital abuse. Cyberstalkers use an ever-expanding range of tools, from social media manipulation to advanced surveillance technologies, to track and control victims. Additionally, the blurred lines between personal and professional digital lives make it difficult to safeguard individuals against these attacks. Cybersecurity frameworks must evolve to address these unique threats by incorporating behavioral analytics and stronger privacy protections. Implementing a “zero-trust” model and ensuring real-time monitoring for suspicious activities are two key steps in adapting to these emerging threats.

—

Q: Given your expertise, what kind of technological advancements would you recommend to better safeguard individuals from digital abuse or stalking? How can security systems evolve to address the changing nature of cyber manipulation?

Dr. Levitch:

To safeguard individuals from digital abuse, we need to see advancements in both prevention and response technologies. First, there must be an emphasis on improving digital literacy so people understand the risks and how to protect themselves. Second, we need more robust encryption and anonymous communication tools that prevent stalkers from tracking victims. AI and machine learning can be used to detect abnormal patterns of online behavior, such as an increase in monitoring or invasive interactions, and alert victims or authorities. Security systems should also integrate real-time alerts and immediate responses, especially for those at risk of ongoing digital abuse. And if you’re interested in learning more, I’ll be on my book tour for *Stalking the Shadows* and *Behind the Digital Chains*, where you can meet me for a signing and learn more about how these issues are woven into my story.
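As a rough illustration of the pattern detection mentioned above, the sketch below flags days on which a monitored count spikes well above its recent baseline, using a simple rolling z-score. This is an assumed, simplified technique chosen for brevity, not a method Dr. Levitch or any specific product is known to use; the function name `flag_anomalies`, the seven-day window, the threshold of 3.0, and the idea of counting events such as profile views or sign-in attempts from unrecognized devices are all hypothetical.

```python
from statistics import mean, pstdev


def flag_anomalies(daily_counts, window=7, threshold=3.0):
    """Return indices of days whose count is unusually high versus the preceding window.

    daily_counts: hypothetical per-day counts of a monitored event, for example
    profile views or sign-in attempts from unrecognized devices.
    """
    flagged = []
    for i in range(window, len(daily_counts)):
        baseline = daily_counts[i - window:i]
        mu = mean(baseline)
        sigma = pstdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: treat any increase as unusual.
            if daily_counts[i] > mu:
                flagged.append(i)
            continue
        z = (daily_counts[i] - mu) / sigma
        if z > threshold:
            flagged.append(i)
    return flagged


counts = [3, 4, 2, 3, 5, 3, 4, 30, 4]
print(flag_anomalies(counts))
```

With these counts, only index 7 (the day with 30 events) is flagged. Treating any rise above a perfectly flat baseline as anomalous is a deliberately cautious choice that can produce false alerts; a deployed system would need tuning and human review before alerting anyone.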

—

Q: In your opinion, how responsible should tech companies be in ensuring their platforms and tools aren’t inadvertently enabling abusive behaviors? What role do they have in developing tools that protect users from being manipulated or stalked?

Dr. Levitch:

Tech companies have a significant responsibility to prevent their platforms from enabling abuse. While it’s difficult to create systems that can fully anticipate every malicious use, companies should implement stronger safety features, such as improved reporting systems and proactive privacy settings. They should also make efforts to identify and prevent harmful patterns, like abusive messaging or manipulation. Platforms should empower users with more control over their digital presence and data, ensuring that people can easily protect themselves from online harassment and stalking. Tech companies must balance innovation with ethical responsibility, making user safety a top priority in their design processes.

—

Q: Can you discuss how your experience in protecting data and managing digital security shaped your perspective on the dangers of digital manipulation in personal relationships, as explored in your books?

Dr. Levitch:

My background in cybersecurity gave me a strong foundation in understanding how digital tools can be used to manipulate and control individuals. In my books, *Stalking the Shadows* and *Behind the Digital Chains*, I highlight the terrifying ways that digital tools, once meant to connect us, can be exploited to dominate and manipulate personal relationships. I discuss how technology’s role in abuse is often invisible, and how my professional experience enabled me to identify these dangers in my own life and help others recognize them.

—

Q: In the era of increasing reliance on technology, how do you think your books, which touch on themes of cyberstalking and digital abuse, can help others better understand and protect themselves from the evolving threats of the digital world?

Dr. Levitch:

I hope that *Stalking the Shadows* and *Behind the Digital Chains* serve as a wake-up call about the hidden dangers of digital manipulation. They’re not just about my personal experience, but a broader discussion of how digital tools can become weapons in the wrong hands. By sharing my story, I aim to educate others on how to recognize the signs of digital abuse and take steps to protect themselves. The books offer practical insights, alongside personal experiences, to empower readers to reclaim their autonomy in a world where digital threats are constantly evolving.

—

Q: Given the increasing intersection between technology and personal relationships, what role do you see cybersecurity playing in the future of emotional safety, and how does this concept tie into the themes of your books?

Dr. Levitch:

As more of our personal lives and relationships move online, cybersecurity will become an essential part of safeguarding emotional well-being.

—

Q: How do you envision the role of technology evolving in the future when it comes to protecting personal data and emotional well-being? And what steps can individuals take to stay safe in this increasingly digital world?

Dr. Levitch:

I envision a future where technology plays a larger role in protecting not just our personal data, but also our emotional and psychological well-being. We’ll see more focus on AI-driven systems designed to detect signs of digital abuse and harassment before they escalate. I also believe that more people will begin to realize the importance of safeguarding their digital identities. In both *Stalking the Shadows* and *Behind the Digital Chains*, I talk about how the first step is awareness: understanding that emotional safety is just as important as physical safety. I encourage individuals to take control of their online presence, use privacy tools, and seek out resources to help them stay safe in a world where technology is both an asset and a threat.

—

The 2025 SNAP shutdown served as a harsh lesson, revealing both the fragility of our food assistance programs and the critical vulnerabilities of the advanced AI systems built to support them. As Dr. Rachel Levitch highlights, these predictive models were completely dependent on a continuous flow of data, and this reliance proved catastrophic when the data stream was unexpectedly cut.

The issue runs deeper than system failures alone. In her powerful memoir, *Behind the Digital Chains*, Dr. Levitch uncovers how technology, when abused, can manipulate and control individuals, a parallel to how AI can come to dominate systems of governance and assistance. Just as the SNAP shutdown exposed the dangers of a data-dependent society, *Behind the Digital Chains* reveals the risks of living in an increasingly data-driven world where those in power leverage information to control, track, and isolate.

Dr. Levitch’s journey began in *Stalking the Shadows*, the first book in the Cyber Abuse & Narcissism Series, where she recounts her personal experience of narcissistic abuse and cyberstalking. This book laid the foundation, showing how technology and emotional manipulation were used to control her life. In the follow-up, *Behind the Digital Chains*, Dr. Levitch takes a deeper dive into the long-term consequences of narcissistic abuse, focusing on cyberstalking, digital manipulation, and the emotional toll of living under constant surveillance, themes that parallel the societal challenges exposed by events like the SNAP shutdown.

The key takeaway from both the SNAP shutdown and Dr. Levitch’s work is the urgent need to invest in data resilience: building AI models that are adaptable and can function, or at least fail gracefully, during emergencies when real-time data is unavailable. *Behind the Digital Chains* shows how narcissistic behaviors in the digital age exploit personal vulnerabilities, much as the shutdown exposed the vulnerabilities of today’s AI models. Dr. Levitch’s memoirs reveal the profound impact that digital control can have on one’s life, offering critical lessons for a world where technology is both a tool of empowerment and a weapon of manipulation.

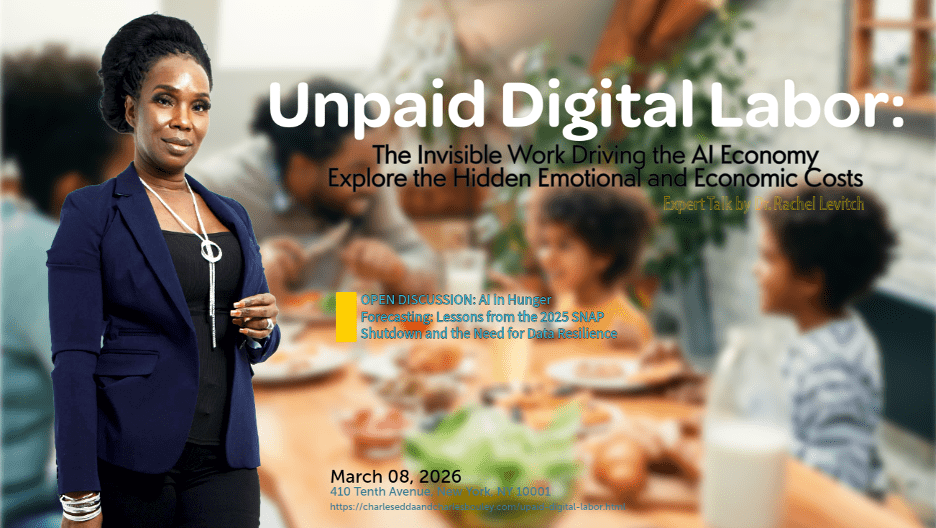

Dr. Levitch’s upcoming expert talk, “Unpaid Digital Labor: The Invisible Work Driving the AI Economy,” in New York on March 8, 2026, will discuss the broader implications of our data-driven society and explore how we can avoid repeating the mistakes exposed by the SNAP shutdown.

In her talk, Dr. Levitch will also discuss her memoirs, *Stalking the Shadows* and *Behind the Digital Chains*, offering an in-depth look at the psychological and societal impacts of digital abuse. The books will be available for purchase during her tour, allowing readers to follow her journey of recovery and resilience and to explore the emotional toll of living in a data-driven world.

To learn more or to register for the event, visit https://charleseddaandcharlesbouley.com/unpaid-digital-labor.html.