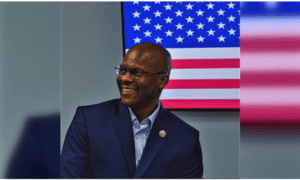

In an increasingly digitized financial landscape, where cybercriminals evolve faster than firewalls and the stakes of a single breach can ripple across continents, one researcher is stepping into the chaos with clarity, conviction, and code. His name is Enoch Oluwabusayo Alonge, a machine learning expert whose recent scholarly work on fraud detection is reshaping how institutions think about cybersecurity and trust in the digital age.

“Fraud isn’t what it used to be,” Alonge begins. “It doesn’t come knocking at the front door with a mask anymore. It slips through the data, blends in with the noise, and adapts faster than static systems can respond. We needed a smarter way, and that’s where machine learning comes in.”

His landmark publication, “Enhancing Data Security with Machine Learning: A Study on Fraud Detection Algorithms,” is more than a research paper. It is a declaration that legacy fraud detection systems, those built on fixed rules and rigid logic, are no longer sufficient in a world of synthetic identities, AI-powered phishing schemes, and real-time transactional manipulation.

“We’re not just dealing with criminals anymore,” he says. “We’re dealing with algorithms built to exploit weaknesses at speeds that manual or rule-based detection simply can’t match. That’s why I focused this study on building intelligent systems, systems that not only detect, but learn, adapt, and explain.”

The study, published in a respected multidisciplinary journal, explores a wide spectrum of machine learning tools, from logistic regression and support vector machines to anomaly detection models, neural networks, and hybrid learning frameworks. But what distinguishes the work is not just its technical depth. It is Alonge’s insistence that fraud detection must also be ethical, interpretable, and scalable.

“Accuracy alone is not enough,” he explains. “A fraud detection model that’s 99 percent accurate but can’t tell you why it flagged a transaction is a liability. Especially in sectors like banking and healthcare where one mistake can ruin a life or destroy trust.”

To that end, Alonge’s research integrates explainable AI tools such as SHAP and LIME, which attribute a model’s prediction to the input features that drove it, so that analysts and regulators can see why a given transaction was flagged. “The future of machine learning isn’t just performance,” he asserts. “It’s transparency.”

And transparency, he notes, is especially critical in systems that directly impact people’s access to money, credit, and identity validation. “When someone is falsely flagged as a fraud risk, the damage goes beyond inconvenience. It touches dignity, access, reputation. We must build systems that respect those boundaries.”

Behind the academic clarity lies an undeniable urgency. Alonge speaks not like someone theorizing from the sidelines, but like someone who has seen the speed and sophistication of modern fraud up close. “These are not isolated breaches,” he warns. “They’re systemic, coordinated, and increasingly automated. One compromised API or one synthetic transaction can escalate into millions in losses within minutes.”

To tackle such high-speed threats, Alonge’s study proposes a layered approach. “We start with supervised learning for known fraud patterns,” he explains. “But the real power comes when we layer in unsupervised learning (clustering, anomaly detection) that finds the patterns we don’t even know to look for yet.”
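The layering described here can be sketched in a few lines of Python. This is a toy illustration, not the pipeline from the study: the two features (amount, transactions per hour), the sample data, the nearest-centroid classifier standing in for the supervised layer, the z-score check standing in for the unsupervised layer, and the threshold of 3 are all assumptions made for the example.

```python
import math
import statistics

# Hypothetical labelled history: ((amount, txns_per_hour), label), 1 = known fraud.
history = [
    ((20, 2), 0), ((35, 1), 0), ((50, 3), 0), ((42, 2), 0), ((28, 1), 0),
    ((900, 1), 1), ((1100, 2), 1), ((950, 1), 1),
]

def centroid(points):
    """Mean of each feature column."""
    return tuple(statistics.mean(col) for col in zip(*points))

# Layer 1 (supervised): nearest-centroid classifier learned from the labels.
legit_centroid = centroid([f for f, y in history if y == 0])
fraud_centroid = centroid([f for f, y in history if y == 1])

def looks_like_known_fraud(txn):
    return math.dist(txn, fraud_centroid) < math.dist(txn, legit_centroid)

# Layer 2 (unsupervised): per-feature z-scores computed without using labels.
columns = list(zip(*[f for f, _ in history]))
stats = [(statistics.mean(col), statistics.stdev(col)) for col in columns]

def is_anomalous(txn, threshold=3.0):
    return any(abs(x - mu) / sd > threshold for x, (mu, sd) in zip(txn, stats))

def review(txn):
    if looks_like_known_fraud(txn):
        return "known_fraud"
    if is_anomalous(txn):
        return "anomaly"
    return "ok"

print(review((1000, 1)))  # known_fraud: resembles the labelled fraud cases
print(review((5, 40)))    # anomaly: small amount, but an unusual transaction rate
print(review((40, 2)))    # ok
```

The second transaction is the interesting one. It looks nothing like the labelled fraud, so the supervised layer lets it through, and only the label-free check catches it.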

He also emphasizes the importance of deep learning in modeling temporal behavior: how people normally spend, log in, or transact over time. “That’s where recurrent neural networks and autoencoders come in. They allow us to detect not just single anomalies, but shifts in behavior that unfold over sequences.”

But even the most powerful models, he notes, are only as good as the data they learn from. One of the major challenges in fraud detection is data imbalance. Fraudulent transactions often represent less than one percent of a dataset. “If your model is just chasing the majority, it’s going to miss the fraud almost every time,” he says.
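A small worked example shows why imbalance is so treacherous. The numbers below are invented for illustration (1,000 transactions, 10 of them fraudulent), and the “model” is simply one that labels everything legitimate.

```python
# Hypothetical toy data: 1,000 transactions, 10 of them fraudulent (1 percent).
labels = [1] * 10 + [0] * 990

# A "model" that always predicts the majority class (0 = legitimate).
predictions = [0] * len(labels)

# Accuracy: share of all transactions labelled correctly.
correct = sum(p == y for p, y in zip(predictions, labels))
accuracy = correct / len(labels)

# Recall: share of actual fraud cases the model caught.
caught = sum(1 for p, y in zip(predictions, labels) if p == 1 and y == 1)
recall = caught / sum(labels)

print(f"accuracy = {accuracy:.2%}")  # accuracy = 99.00%
print(f"recall   = {recall:.2%}")    # recall   = 0.00%
```

The model scores 99 percent accuracy while catching no fraud at all, which is why recall and related metrics matter more than raw accuracy on data like this.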

To combat this, his research employs data balancing techniques such as SMOTE (Synthetic Minority Over-sampling Technique), which generates synthetic examples of the rare class, alongside anomaly-focused feature engineering. “You have to give the model a chance to learn from the rare, because fraud is rare but devastating.”
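The core idea behind SMOTE is to create new minority-class points by interpolating between an existing fraud sample and one of its nearest fraud neighbours. The sketch below is a simplified, standard-library rendering of that idea, not the study’s code and not a substitute for a maintained implementation. The function name `smote_sketch`, the `(amount, hour_of_day)` features, and the sample values are all hypothetical.

```python
import math
import random

def smote_sketch(minority, n_new, k=3, seed=0):
    """Create n_new synthetic points, each on the line segment between a
    minority sample and one of its k nearest minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest neighbours of `base` among the other minority samples
        others = [p for p in minority if p is not base]
        neighbours = sorted(others, key=lambda p: math.dist(base, p))[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment base -> neighbour
        synthetic.append(
            tuple(b + gap * (n - b) for b, n in zip(base, neighbour))
        )
    return synthetic

# Hypothetical fraud samples as (amount, hour_of_day) pairs.
fraud = [(950.0, 2.0), (1020.0, 3.0), (880.0, 1.0), (1100.0, 4.0)]
new_points = smote_sketch(fraud, n_new=6)
print(len(new_points))  # 6
```

Because every synthetic point lies between two real fraud samples, the new data stays inside the region the minority class already occupies rather than duplicating existing rows.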

Perhaps most compelling is Alonge’s attention to real-world deployment. “It’s not enough to prove the model works in theory,” he says. “It must work at scale, in real time, under real constraints.”

His research benchmarks algorithms using live, open-access datasets and applies them in simulated financial environments. He leverages ROC curves, confusion matrices, and k-fold cross-validation, but never loses sight of the practical question: will this system stop fraud before the damage is done?
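For readers unfamiliar with these evaluation tools, here is a minimal sketch of each using only the Python standard library. The labels, scores, and 0.5 threshold are made up for illustration, and the helper names are hypothetical. In practice, heavily imbalanced data usually calls for stratified folds, which this simple splitter does not provide.

```python
def confusion_matrix(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels, with 1 = fraud."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn

def roc_auc(y_true, scores):
    """Area under the ROC curve, computed as the probability that a random
    fraud case outscores a random legitimate one (ties count half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def k_fold_indices(n, k):
    """Yield (train, test) index lists; each index is tested exactly once."""
    folds = [list(range(i, n, k)) for i in range(k)]
    for i in range(k):
        train = [j for f in folds[:i] + folds[i + 1:] for j in f]
        yield train, folds[i]

y_true = [0, 0, 1, 0, 1, 0, 0, 1]
scores = [0.1, 0.4, 0.8, 0.2, 0.35, 0.05, 0.6, 0.9]
y_pred = [int(s >= 0.5) for s in scores]

print(confusion_matrix(y_true, y_pred))    # (2, 1, 1, 4)
print(round(roc_auc(y_true, scores), 3))   # 0.867
print(len(list(k_fold_indices(8, 4))))     # 4
```

The confusion matrix makes the cost of errors visible: here one fraud case slips through (a false negative) and one legitimate customer is wrongly flagged (a false positive).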

“It has to be fast, but it also has to be fair,” he adds. “Too many systems flag false positives, and that hurts the wrong people. We’re not just solving math problems, we’re making decisions about trust.”

Alonge’s commitment to fairness extends beyond algorithms into governance. He insists that any fraud detection system deployed in today’s regulatory environment must be auditable and compliant. “You can’t have a model that just spits out a binary label. Financial institutions need to be able to justify their decisions, not just to customers, but to auditors, regulators, and courts.”

The research outlines how institutions can build model pipelines that are not just accurate, but interpretable and legally defensible. He calls for a convergence of machine learning, legal standards, and user experience design. “Good AI is not just about the output. It’s about the accountability behind it.”

This integration of AI and accountability is where Alonge sees the future headed. “We’re moving toward a world where every financial transaction, every insurance claim, every login attempt is monitored by intelligent systems. The danger is when those systems are opaque, biased, or misaligned with public interest.”

To counter that, his paper recommends not just better algorithms, but better institutions, ones that invest in AI literacy, ethical deployment frameworks, and cross-sector collaboration. “No single company or country can fight fraud alone,” he says. “This has to be a global effort. Fraud is global. AI must be global too.”

That sense of scope is part of what makes Alonge’s voice so vital. He sees the problem not as a narrow technical challenge but as a social one, a systemic threat that requires interdisciplinary solutions. “It’s not just data scientists who need to understand this. It’s executives, policymakers, legal teams, customer support, infrastructure providers. Everyone.”

Despite the complexity of the systems he builds, Alonge never loses sight of the human stakes. “At the end of the day, what we’re really defending is trust,” he says. “Trust in banks. Trust in platforms. Trust in digital identity. That’s the currency. That’s what we lose when fraud wins.”

His study concludes with a clear call to action. He advocates for the adoption of blockchain-enhanced verification, real-time stream analytics, and federated learning models that allow institutions to collaborate on fraud detection without sharing raw user data. “Privacy doesn’t have to be sacrificed for security,” he insists. “We can build systems that protect both.”

He also calls for greater research into quantum-resilient fraud models, especially as quantum computing becomes a credible threat to current encryption standards. “If we don’t future-proof our fraud systems now, we’ll be chasing threats we’re not prepared to stop.”

As global cybercrime surges into trillions in annual damages, the urgency of Alonge’s work cannot be overstated. And yet, for all the technical brilliance and systemic insight, his message remains strikingly grounded.

“I just want to build systems that do the right thing,” he says. “Systems that catch the bad actors without punishing the innocent. That protect data without eroding privacy. That make sense, not just to engineers, but to everyone.”

It is this clarity, this dual fluency in technology and ethics, that makes Enoch Oluwabusayo Alonge a voice to watch and a researcher whose work may well shape the next generation of cybersecurity, not just in theory, but in practice.

“We have the tools,” he says. “Now we need the will to use them wisely.”

And in that quiet but resolute declaration, a new standard for fraud detection is born, not just intelligent, but just.