As software architecture continues its migration from monoliths to microservices, communication, not computation, has emerged as the core challenge of scale. Distributed systems aren’t just collections of services; they are orchestrated conversations. And as latency, observability, and resilience become table stakes, cloud-native messaging systems like Kafka, NATS, and RabbitMQ, alongside RPC frameworks such as gRPC, have become the silent backbone of production-grade systems.

These tools enable asynchronous, loosely coupled communication—essential for building platforms that can evolve independently without introducing cascading failures. However, message buses also bring complexity: retry logic, backpressure, event ordering, and eventual consistency all require careful engineering judgment.
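Backpressure is the least intuitive item on that list. Here is a minimal sketch using only Python's standard `queue` module, assuming a single in-process producer and consumer. A bounded buffer refuses new events once it is full, which gives the producer an explicit signal to slow down instead of letting memory grow without limit. Real brokers apply the same idea through mechanisms such as consumer prefetch limits or flow control. The names `events` and `try_publish` are illustrative only.

```python
import queue

# A bounded buffer between a fast producer and a slower consumer.
# It holds at most 3 pending events.
events = queue.Queue(maxsize=3)


def try_publish(event: int) -> bool:
    """Enqueue an event; return False (a backpressure signal) if the buffer is full."""
    try:
        events.put_nowait(event)
        return True
    except queue.Full:
        return False


accepted = [try_publish(i) for i in range(5)]
print(accepted)  # [True, True, True, False, False]

# Once the consumer drains one event, capacity frees up again.
events.get_nowait()
print(try_publish(99))  # True
```

The producer decides what to do with a `False` result (retry later, drop, or shed load). That decision is exactly the engineering judgment the paragraph above refers to.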

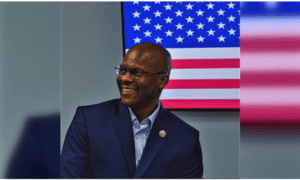

Few engineers understand this intersection better than Aniruddha Maru, VP of Infrastructure at Standard AI, where he leads Site Reliability Engineering (SRE) for a real-time, ML-powered retail platform. With over 16 years of experience spanning software development, mobile engineering, backend APIs, and large-scale infrastructure, Maru’s career is a study in how systems become conversations and how those conversations are made reliable.

From REST to Event Streams: Why Message Buses Matter

In traditional systems, services often communicate via REST, which is synchronous, request/response-oriented, and tightly coupled: the caller waits on the callee and fails when the callee is unavailable. As system complexity increases, so does the fragility of this model. Modern architectures increasingly rely on message-driven communication, where services emit and respond to events without hard dependencies on one another’s availability.
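The following minimal in-process sketch makes the decoupling concrete. The `InMemoryBus` class and the `checkout.completed` topic are hypothetical. Delivery here is synchronous and non-durable: an event published with no subscribers is simply dropped, unlike a real broker that retains messages until consumers come back. What the sketch does show is the shape of the contract. The publisher names a topic, never a consumer, so consumers can be added or removed without changing the publishing side.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[dict], None]


class InMemoryBus:
    """Toy topic-based bus: publishers know topic names, never the consumers."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> int:
        """Deliver the event to every current subscriber; return how many received it."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


bus = InMemoryBus()
received: List[dict] = []

# Publishing with no subscribers does not raise: the publisher has no
# hard dependency on any particular consumer being present.
print(bus.publish("checkout.completed", {"order_id": 1}))  # 0

bus.subscribe("checkout.completed", received.append)
bus.subscribe("checkout.completed", lambda e: None)
print(bus.publish("checkout.completed", {"order_id": 2}))  # 2
print(received)  # [{'order_id': 2}]
```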

This evolution is not just about scale—it’s about resilience and modularity. Maru’s work at Standard AI, where real-time decision-making depends on a constellation of microservices working across both cloud and edge environments, exemplifies this shift. To support their checkout-free retail platform, Maru’s team designed a cloud-native, message-based infrastructure that ingests sensor signals, runs real-time video inference at the edge, and coordinates with backend services — all while maintaining sub-second latency.

Instead of relying on synchronous REST calls or operating a heavyweight self-managed broker, they adopted Hedwig, an open-source inter-service communication bus that runs on the managed messaging services of AWS and GCP. Hedwig validates message payloads against predefined schemas, so contract mismatches are caught early, and it keeps publishers and consumers loosely coupled.
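The sketch below illustrates the general idea of validating a payload against a registered contract before it is published. It is not Hedwig's API. The `SCHEMAS` registry, the `shelf.item_removed` message type, and the `validate`/`publish` helpers are hypothetical. The check also covers only field presence and Python types, whereas real schema languages express much richer constraints.

```python
from typing import Any, Dict, Tuple

# Hypothetical contract registry: (message type, major version) -> required fields and types.
SCHEMAS: Dict[Tuple[str, int], Dict[str, type]] = {
    ("shelf.item_removed", 1): {"store_id": str, "sku": str, "quantity": int},
}


class ValidationError(Exception):
    pass


def validate(message_type: str, version: int, payload: Dict[str, Any]) -> None:
    """Raise ValidationError if the payload does not match the registered contract."""
    schema = SCHEMAS.get((message_type, version))
    if schema is None:
        raise ValidationError(f"unknown message type {message_type} v{version}")
    for field_name, expected in schema.items():
        if field_name not in payload:
            raise ValidationError(f"missing field: {field_name}")
        if not isinstance(payload[field_name], expected):
            raise ValidationError(f"{field_name} should be {expected.__name__}")


def publish(message_type: str, version: int, payload: Dict[str, Any]) -> Dict[str, Any]:
    """Validate before sending, so contract mismatches surface at the publisher."""
    validate(message_type, version, payload)
    # The envelope would be handed to the transport at this point.
    return {"type": message_type, "version": version, "data": payload}


publish("shelf.item_removed", 1, {"store_id": "s1", "sku": "abc", "quantity": 2})
try:
    publish("shelf.item_removed", 1, {"store_id": "s1", "sku": "abc", "quantity": "2"})
except ValidationError as err:
    print(err)  # quantity should be int
```

Rejecting the bad message at publish time means the producing team sees the error, rather than an unrelated consumer failing later.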

For background asynchronous workflows that don’t require immediate responses, Maru also contributes to Taskhawk (including its documentation and Go SDK), a sister project that provides reliable, structured task execution across cloud environments. Like Hedwig, Taskhawk emphasizes schema enforcement and operational simplicity, making it well suited to modern distributed systems.
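Here is a hypothetical sketch of the structured-task pattern, assuming tasks are registered by name and invoked from a JSON message body. The `task` decorator, `send_receipt`, `enqueue`, and `run_worker_once` are illustrative stand-ins, not Taskhawk's actual interface. The transport, meaning the queue that would carry `body` between processes, is omitted.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry mapping task names to functions.
TASKS: Dict[str, Callable[..., Any]] = {}


def task(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register fn under its qualified name so a worker can look it up from a message."""
    TASKS[f"{fn.__module__}.{fn.__name__}"] = fn
    return fn


@task
def send_receipt(order_id: int, email: str) -> str:
    return f"receipt for order {order_id} sent to {email}"


def enqueue(fn: Callable[..., Any], **kwargs: Any) -> str:
    """Serialize a task invocation into a structured JSON message body."""
    return json.dumps({"task": f"{fn.__module__}.{fn.__name__}", "kwargs": kwargs})


def run_worker_once(body: str) -> Any:
    """Decode one message and execute the named task; an unknown name raises KeyError."""
    message = json.loads(body)
    return TASKS[message["task"]](**message["kwargs"])


body = enqueue(send_receipt, order_id=42, email="shopper@example.com")
print(run_worker_once(body))  # receipt for order 42 sent to shopper@example.com
```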

Maru has been the long-time maintainer of both Hedwig and Taskhawk, advancing their core implementations in open-source projects such as hedwig-python and hedwig-go, as well as the official documentation site. These tools now enable teams beyond Standard AI to adopt structured, schema-driven messaging and task-processing patterns at scale.

Engineering Communication as Infrastructure

Maru’s philosophy is that communication should be treated as first-class infrastructure. “You don’t scale microservices by scaling the services. You scale them by improving how they talk to each other,” he notes. This means investing in event schema governance, distributed tracing, and operational fail-safes like dead letter queues and circuit breakers.
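Retries and dead letter queues work together. Transient failures get another attempt, while messages that keep failing are set aside so they cannot block the rest of the stream. The following minimal sketch assumes a simple in-memory list of messages and immediate retries. Production systems would typically add backoff, and managed queues such as Amazon SQS can move messages to a dead letter queue on the broker side via a redrive policy. `consume_with_dlq`, the handler, and the sample messages are hypothetical.

```python
from typing import Any, Callable, Dict, List


def consume_with_dlq(
    messages: List[Dict[str, Any]],
    handler: Callable[[Dict[str, Any]], None],
    max_attempts: int = 3,
) -> List[Dict[str, Any]]:
    """Process each message, retrying up to max_attempts; return the ones that exhausted retries."""
    dead_letters: List[Dict[str, Any]] = []
    for message in messages:
        for attempt in range(1, max_attempts + 1):
            try:
                handler(message)
                break  # success: move on to the next message
            except Exception as err:
                if attempt == max_attempts:
                    # Park the poison message for inspection instead of blocking the stream.
                    dead_letters.append({**message, "error": str(err), "attempts": attempt})
    return dead_letters


seen: Dict[int, int] = {}


def handler(msg: Dict[str, Any]) -> None:
    seen[msg["id"]] = seen.get(msg["id"], 0) + 1
    if msg["id"] == 1 and seen[1] == 1:
        raise TimeoutError("transient")  # fails once, succeeds on retry
    if msg["id"] == 2:
        raise ValueError("bad payload")  # always fails


dlq = consume_with_dlq([{"id": 1}, {"id": 2}, {"id": 3}], handler)
print(dlq)   # [{'id': 2, 'error': 'bad payload', 'attempts': 3}]
print(seen)  # {1: 2, 2: 3, 3: 1}
```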

At Standard AI, his team built internal tooling to visualize message flow, track delivery latency, and enforce event contracts across teams. The goal wasn’t just functional correctness, but observability and trust. If a service fails to respond, the system logs it, retries it, or routes around it without compromising the rest of the pipeline.
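Routing around a failing dependency is often implemented with a circuit breaker. The sketch below is a minimal, single-threaded version with these assumptions: the breaker opens after `threshold` consecutive failures, serves a fallback while open, and permits one trial call once `reset_after` seconds have passed. The `CircuitBreaker` class, its injectable `clock`, and the sample service functions are hypothetical. A fake clock keeps the example deterministic.

```python
import time
from typing import Callable, List, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Opens after `threshold` consecutive failures; while open, calls go straight to the fallback."""

    def __init__(self, threshold: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.threshold = threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback()  # open: route around the failing dependency
            self.opened_at = None  # half-open: allow one trial call through
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.threshold:
                self.opened_at = self.clock()  # (re)open the circuit
            return fallback()
        self.failures = 0  # success closes the circuit
        return result


now = [0.0]
breaker = CircuitBreaker(threshold=2, reset_after=10.0, clock=lambda: now[0])
calls: List[str] = []


def flaky_service() -> str:
    calls.append("service")
    raise ConnectionError("timeout")


def cached() -> str:
    return "cached result"


results = [breaker.call(flaky_service, cached) for _ in range(4)]
print(results)     # ['cached result', 'cached result', 'cached result', 'cached result']
print(len(calls))  # 2 (after two failures the breaker stops calling the service)
now[0] = 11.0
print(breaker.call(lambda: "fresh", cached))  # fresh
```

Because a failed trial call pushes `failures` back over the threshold, the breaker reopens immediately. A successful trial call resets it to the closed state.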

This focus on reliability isn’t new for Maru. His earlier career spans mobile and desktop application development, but it was his transition into backend engineering and DevOps that shaped his approach to distributed communication. Over the years, he’s led major infrastructure transformations using Go, Python, and Kubernetes – skills that now underpin Standard AI’s ability to scale machine learning in real-world retail spaces.

Message Buses in the Age of ML Inference

What makes communication in microservices particularly challenging is the rise of machine learning workloads that require hybrid coordination. Inference doesn’t happen in one place – it often starts at the edge, passes through a message queue, and triggers decisions in a centralized cloud model. Maintaining state and continuity across these asynchronous stages is one of the toughest engineering problems in AI infrastructure today.
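One common way to preserve continuity across asynchronous stages is to carry a correlation ID in each message's metadata and copy it forward at every hop. Distributed-tracing standards such as W3C Trace Context formalize the same idea. The following minimal sketch uses a hypothetical `Envelope` type and a hypothetical edge, queue, and cloud pipeline. It shows how any stage's output can be tied back to the original edge event.

```python
import uuid
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Envelope:
    """Message wrapper whose metadata travels with the payload across every hop."""
    payload: Dict[str, Any]
    correlation_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    hops: List[str] = field(default_factory=list)


def forward(prev: Envelope, stage: str, payload: Dict[str, Any]) -> Envelope:
    """Build the next stage's message, inheriting the correlation ID and hop history."""
    return Envelope(payload=payload, correlation_id=prev.correlation_id, hops=prev.hops + [stage])


# Hypothetical pipeline: edge detection -> queued event -> cloud-side decision.
detected = Envelope(payload={"camera": "cam-7", "label": "item_picked"}, hops=["edge"])
queued = forward(detected, "queue", detected.payload)
decided = forward(queued, "cloud", {"action": "add_to_cart"})

print(decided.correlation_id == detected.correlation_id)  # True
print(decided.hops)  # ['edge', 'queue', 'cloud']
```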

Maru’s team built a hybrid system that supports ML model updates, inference coordination, and telemetry aggregation, all over message buses. From orchestrating retraining pipelines to coordinating edge-device updates through event-driven deployment triggers, the system balances performance with flexibility.

And unlike traditional batch pipelines, this architecture is reactive: it adjusts in real time as new data arrives, services change, or edge conditions evolve.

What Engineers Can Learn from Infrastructure Conversations

Maru’s work underscores a growing realization: the future of microservices is not just modular, it’s conversational. And mastering those conversations means thinking beyond code to events, signals, queues, and fallbacks.

He advocates for a culture where engineers are not just consumers of APIs but designers of communication contracts. Where observability is part of the design doc, not just the incident postmortem. And where infrastructure teams act as communication architects, ensuring that every service speaks with clarity, consistency, and accountability.

In a world increasingly powered by machine-to-machine interaction, message buses are the new nervous system. And thanks to leaders like Aniruddha Maru, we’re learning how to wire it with care.