Introduction

Briefly introduce what LLM (Large Language Model) applications are, and the growing demand for deploying them into production. Mention the importance of hiring skilled LLM engineers for successful deployment.

Key Considerations Before Building LLM Applications

Discuss the factors to consider before starting to build LLM applications, such as data availability, computational resources, and cost implications.

Essential Tools and Technologies for LLM Application Development

Highlight the essential tools, frameworks, and technologies required for developing LLM applications, including pre-trained models like GPT-3.5 Turbo, tools for prompt engineering, and vector databases.

Best Practices for Prompt Engineering and Fine-Tuning

Explain the importance of prompt engineering and fine-tuning in improving the performance and accuracy of LLM applications. Include examples of effective prompt optimization techniques.

Strategies for Efficient LLM Application Deployment

Provide strategies for deploying LLM applications into production, focusing on load balancing, managing cost and latency, and ensuring scalability.

Implementing Retrieval-Augmented Generation (RAG)

Discuss the role of Retrieval-Augmented Generation (RAG) in enhancing the performance of LLM applications by combining retrieval techniques with LLMs.

Real-Time Monitoring and Maintenance

Explore the importance of real-time monitoring and maintenance for LLM applications in production. Highlight tools and practices that ensure optimal performance and quick troubleshooting.

Case Study: Successful Deployment of an LLM Application

Present a case study that illustrates the successful deployment of an LLM application, showcasing the challenges faced, solutions implemented, and the results achieved.

Conclusion

Summarize the key points discussed in the article and provide a call-to-action for businesses looking to hire LLM engineers for their projects.

Building and Deploying LLM Applications for Production

LLM stands for Large Language Model: an AI model that performs natural language processing tasks. LLMs are built on deep learning architectures and trained on large datasets. They help enhance customer service, automate tasks, and speed up content creation. Because of this wide range of applications, LLMs are being deployed across many industries, and organizations are seeking to hire LLM engineers to build and deploy successful applications. This blog post dives into how to build successful LLM apps, outlining key considerations, best practices, efficient strategies, and more.

Key Considerations Before Building LLM Applications

Building efficient LLM applications depends on the following factors:

- Data Availability: A successful AI project depends on the quality and availability of its data. Data security, privacy, and integrity are also paramount.

- Computational Resources: Training models on large datasets requires extensive resources. These include hardware such as specialized GPUs or TPUs, the right software setup, and supporting infrastructure. This equipment must be up to date, compatible, and accessible to engineers.

- Budgetary Constraints: Building a production LLM application can be expensive, from the initial infrastructure setup to the final deployment. Organizations need to set up a budgetary framework and track spending closely.
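A rough cost model helps turn that budgetary framework into numbers. The sketch below assumes a hosted API that bills per token. The `Pricing` class, the `estimate_monthly_cost` helper, and every price in it are hypothetical placeholders, so substitute your provider's published rates and your own traffic figures.

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    """Hypothetical per-token pricing, in USD per 1,000 tokens."""
    input_per_1k: float
    output_per_1k: float


def estimate_monthly_cost(requests_per_day: int,
                          avg_input_tokens: int,
                          avg_output_tokens: int,
                          pricing: Pricing,
                          days: int = 30) -> float:
    """Estimate monthly spend from average token usage per request."""
    cost_per_request = (
        (avg_input_tokens / 1000) * pricing.input_per_1k
        + (avg_output_tokens / 1000) * pricing.output_per_1k
    )
    return cost_per_request * requests_per_day * days


if __name__ == "__main__":
    # Placeholder prices; not any provider's actual rates.
    pricing = Pricing(input_per_1k=0.0005, output_per_1k=0.0015)
    monthly = estimate_monthly_cost(
        requests_per_day=10_000,
        avg_input_tokens=800,
        avg_output_tokens=300,
        pricing=pricing,
    )
    print(f"Estimated monthly cost: ${monthly:,.2f}")
```

With these placeholder numbers, each request costs about $0.00085. At 10,000 requests per day, that comes to roughly $255 per month. Rerunning the estimate whenever prompts grow longer or traffic changes helps surface cost overruns early.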

Essential Tools and Technologies for LLM Application Development

Building LLM applications is a complex process that requires a range of tools and technologies. Listed below are the essential frameworks and tools for LLM application development:

- LLM Models & Platforms: Existing pre-trained LLMs and platforms, such as OpenAI’s GPT-3.5 Turbo and Google’s Gemini, can be leveraged and fine-tuned to perform specific tasks.

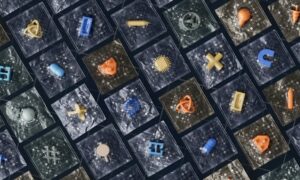

- Prompt Engineering: Prompt engineering helps craft compelling, coherent, and relevant prompts that produce consistent outputs and standardize interactions. For prompt engineering, you can leverage tools such as PromptBase, Hugging Face Transformers, Promptify, and Portkey.

- Vector Databases: Vector databases store data as numerical embeddings. This lets the data be searched by meaning (semantic similarity) rather than by exact keywords, which helps personalize user experiences. Popular options include Pinecone, ChromaDB, and Milvus.

- Additional Tools: Frameworks such as TensorFlow help build scalable and flexible LLM models. Cloud platforms such as AWS or Microsoft Azure can supply the necessary computational resources.
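The following toy, in-memory stand-in illustrates what a vector database does under the hood: it ranks stored vectors by cosine similarity to a query vector. It is not the API of Pinecone, ChromaDB, or Milvus. The `InMemoryVectorStore` class is hypothetical, and the three-dimensional vectors are made up for readability. Real embeddings come from an embedding model and have hundreds or thousands of dimensions.

```python
import math


class InMemoryVectorStore:
    """Toy stand-in for a vector database: stores (id, vector) pairs and
    answers nearest-neighbour queries by brute-force cosine similarity."""

    def __init__(self) -> None:
        self._items: dict[str, list[float]] = {}

    def upsert(self, item_id: str, vector: list[float]) -> None:
        """Insert a vector, or overwrite the existing one with the same id."""
        self._items[item_id] = vector

    @staticmethod
    def _cosine(a: list[float], b: list[float]) -> float:
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0  # treat zero vectors as dissimilar

    def query(self, vector: list[float], top_k: int = 3) -> list[tuple[str, float]]:
        """Return the top_k (id, similarity) pairs, most similar first."""
        scored = [(item_id, self._cosine(vector, v)) for item_id, v in self._items.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


if __name__ == "__main__":
    store = InMemoryVectorStore()
    # Made-up 3-D "embeddings" for three support articles.
    store.upsert("refund-policy", [0.9, 0.1, 0.0])
    store.upsert("shipping-times", [0.1, 0.9, 0.0])
    store.upsert("api-docs", [0.0, 0.1, 0.9])
    print(store.query([0.8, 0.2, 0.0], top_k=2))
```

Production vector databases add approximate nearest-neighbour indexes so that queries stay fast across millions of vectors. The brute-force scan shown here is only practical for small collections.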

Best Practices for Prompt Engineering and Fine-Tuning

Effective prompt engineering depends on your LLM’s nuances and on your application’s preferences and requirements. Well-structured prompts and templates improve the quality and relevance of the output, while fine-tuning helps keep outputs consistent. To improve the performance and accuracy of your LLM applications, consider the following best practices for prompt engineering and fine-tuning:

- Clear & Concise Prompt Creation: Write clear instructions and prompts so that your LLM understands the task. Straightforward, concise prompts help improve the overall quality of responses.

- Few-Shot Prompting: Use the few-shot prompting technique to improve your model’s accuracy. This technique places a few correct, relevant examples in the prompt itself, helping the model generalize to new inputs without any additional training.

- Domain-Specific Datasets: For fine-tuning, use large, varied datasets that are relevant to your specific domain. Insufficient datasets can adversely affect LLM performance.

- Regular Audits: Run testing and monitoring programs at regular intervals. This helps you detect errors and issues quickly, improving the overall user experience and giving you a competitive edge.
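Few-shot prompting can be as simple as assembling a string. The sketch below builds a prompt from an instruction, a handful of input/output examples, and the new input. The `build_few_shot_prompt` helper and the `Input:`/`Output:` layout are illustrative assumptions, not a format required by any particular model.

```python
def build_few_shot_prompt(instruction: str,
                          examples: list[tuple[str, str]],
                          query: str) -> str:
    """Assemble a few-shot prompt: instruction, worked examples, then the new input."""
    lines = [instruction.strip(), ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")  # blank line separates examples
    # End with the new input and an open "Output:" slot for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        instruction="Classify the sentiment of each customer review as positive or negative.",
        examples=[
            ("The checkout was fast and painless.", "positive"),
            ("My order arrived broken and support never replied.", "negative"),
        ],
        query="The app crashes every time I log in.",
    )
    print(prompt)
```

The examples show the model both the task and the expected output format. Chat-style APIs typically accept the same examples as alternating user and assistant messages instead of a single string.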

Strategies for Efficient LLM Application Deployment

This section explores strategies for deploying LLM applications efficiently. Detailed planning and meticulous execution are sometimes not enough; building successful LLM applications also calls for strategies that go beyond the usual framework.

- Optimized Load Management: Distribute incoming traffic across multiple servers (load balancing). This maintains performance during peak hours or sudden surges in activity. It also reduces the cost of downtime and of resolving related issues.

- Cost Optimization: Working under tight budgetary constraints is a major challenge for LLM developers. Choose the right hosting option and optimize the cost of infrastructure and equipment.

- Resolving Latency Issues: Like cost, latency is a persistent challenge. Consider caching, model compression, and hardware acceleration to minimize response times.

- Scalability: Design your LLM application from the start to handle growing user demand, scalability requirements, and peak workloads.
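Two of these strategies, load distribution and caching, can be sketched together. The example below routes requests round-robin across several model backends and serves repeated prompts from a small least-recently-used (LRU) cache. The backends are hypothetical callables standing in for model server replicas, and `RoundRobinRouter` and `LRUCache` are illustrative names. In production, a managed load balancer and a shared cache usually fill this role rather than in-process code.

```python
import itertools
from collections import OrderedDict
from typing import Callable, Optional


class LRUCache:
    """Fixed-size cache that evicts the least recently used entry when full."""

    def __init__(self, capacity: int = 1024) -> None:
        self.capacity = capacity
        self._data = OrderedDict()  # maps prompt -> response, oldest first

    def get(self, key: str) -> Optional[str]:
        if key not in self._data:
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key: str, value: str) -> None:
        self._data[key] = value
        self._data.move_to_end(key)
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used entry


class RoundRobinRouter:
    """Sends each cache miss to the next backend in turn."""

    def __init__(self, backends: list[Callable[[str], str]], cache: LRUCache) -> None:
        self._backends = itertools.cycle(backends)
        self.cache = cache

    def complete(self, prompt: str) -> str:
        cached = self.cache.get(prompt)
        if cached is not None:
            return cached  # cache hit: no backend call, lower latency and cost
        backend = next(self._backends)
        response = backend(prompt)
        self.cache.put(prompt, response)
        return response


if __name__ == "__main__":
    router = RoundRobinRouter(
        backends=[lambda p: f"replica-a: {p}", lambda p: f"replica-b: {p}"],
        cache=LRUCache(capacity=2),
    )
    print(router.complete("What is RAG?"))  # served by replica-a
    print(router.complete("What is RAG?"))  # cache hit, no backend call
    print(router.complete("Define LLM."))   # served by replica-b
```

Exact-match caching only helps when identical prompts repeat. It also assumes a given prompt should always receive the same answer, which may not hold if responses are sampled with a nonzero temperature or depend on user context.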

Implementing Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) combines external information retrieval with existing language models. When a query arrives, RAG retrieves relevant information from an external database or knowledge base and passes it to the model along with the query. The model then uses this information to produce more accurate and relevant outputs, which reduces hallucinations. RAG is most useful in the following scenarios:

- Lacking Context: When the system lacks the knowledge or context needed to respond relevantly.

- Lacking Recent Information: When an LLM is ill-equipped to handle recent information that postdates its training data. RAG can supply up-to-date data at query time without retraining the model.

- Inadequate Domain-Specific Data: When the LLM’s training data lacks domain-specific information. RAG helps bring domain-specific data into the model’s responses.
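The retrieve-then-generate flow described above can be sketched in a few lines. To keep the example self-contained, it scores documents by simple word overlap with the query. This is a deliberately crude stand-in for the embedding similarity a vector database would provide. The sketch also stops at building the augmented prompt rather than calling a model. The function names, the stop-word list, and the prompt wording are all illustrative assumptions.

```python
import re
from collections import Counter

# Small illustrative stop-word list so that filler words do not dominate the overlap score.
STOP_WORDS = {"a", "an", "the", "is", "are", "of", "for", "to", "in", "on", "and", "what", "how"}


def tokenize(text: str) -> list[str]:
    """Lowercase, split into alphanumeric words, and drop stop words."""
    return [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOP_WORDS]


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k documents ranked by word overlap with the query.

    Word overlap stands in for embedding similarity here; documents with no
    overlap at all are dropped.
    """
    query_terms = Counter(tokenize(query))

    def overlap(doc: str) -> int:
        doc_terms = Counter(tokenize(doc))
        return sum(min(count, doc_terms[term]) for term, count in query_terms.items())

    scored = [(overlap(doc), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep input order
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_rag_prompt(query: str, documents: list[str], top_k: int = 2) -> str:
    """Augment the user's question with retrieved context before sending it to an LLM."""
    context = retrieve(query, documents, top_k)
    context_block = "\n".join(f"- {chunk}" for chunk in context) or "- (no relevant context found)"
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say that you don't know.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {query}\nAnswer:"
    )


if __name__ == "__main__":
    knowledge_base = [
        "Annual plans can be refunded within 30 days of purchase.",
        "Monthly plans renew automatically on the billing date.",
        "Support is available 24/7 via chat.",
    ]
    print(build_rag_prompt("What is the refund window for annual plans?", knowledge_base))
```

Replacing `retrieve` with a vector-database query moves this toward a production RAG pipeline, while the prompt-assembly step can stay largely the same. Instructing the model to admit when the context is insufficient is one common way to discourage hallucinated answers.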

Real-Time Monitoring and Maintenance in LLM Models

Real-time monitoring and maintenance is an indispensable part of deploying LLMs into production. Listed below are ways in which you can continuously monitor your models:

- KPI Evaluation: Key performance indicators such as downtime, memory leaks, user analytics, and error rates are essential for evaluation. Set up a system to track these metrics continuously.

- Regular Testing: A key aspect of monitoring is regular, thorough evaluation and testing.

- Scheduled Maintenance: Schedule maintenance in advance so that it does not disrupt user activity and experience. Maintenance tasks should center on retraining and on setting or updating parameters to keep the model relevant.

- Feedback Mechanism: Collect user feedback to improve the user experience. Use surveys and research to identify areas for improvement and potential errors, and to improve the overall performance of the LLM model.

Tools such as LangSmith and Lunary can help you set up monitoring and maintenance systems for LLM applications.
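Whichever tool you choose, the underlying KPI tracking looks roughly like the sketch below. It records the latency and outcome of each request, then compares the error rate and 95th-percentile latency against alert thresholds. The `LLMMetrics` class and its default thresholds are hypothetical choices for illustration and do not reflect the APIs of LangSmith or Lunary.

```python
from dataclasses import dataclass, field


@dataclass
class LLMMetrics:
    """Minimal in-process tracker for two common LLM KPIs: error rate and latency."""
    latencies_ms: list[float] = field(default_factory=list)
    requests: int = 0
    errors: int = 0

    def record(self, latency_ms: float, ok: bool) -> None:
        """Record one request's latency and whether it succeeded."""
        self.requests += 1
        self.latencies_ms.append(latency_ms)
        if not ok:
            self.errors += 1

    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0

    def p95_latency_ms(self) -> float:
        """95th-percentile latency using the nearest-rank method."""
        if not self.latencies_ms:
            return 0.0
        ordered = sorted(self.latencies_ms)
        rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) in integer arithmetic
        return ordered[rank - 1]

    def alerts(self, max_error_rate: float = 0.05, max_p95_ms: float = 2000.0) -> list[str]:
        """Return human-readable alerts for any KPI that breaches its (hypothetical) threshold."""
        messages = []
        if self.error_rate() > max_error_rate:
            messages.append(f"error rate {self.error_rate():.1%} exceeds {max_error_rate:.1%}")
        if self.p95_latency_ms() > max_p95_ms:
            messages.append(f"p95 latency {self.p95_latency_ms():.0f} ms exceeds {max_p95_ms:.0f} ms")
        return messages


if __name__ == "__main__":
    metrics = LLMMetrics()
    for i, latency in enumerate([320, 410, 380, 2900, 450, 500, 390, 420, 610, 3100]):
        metrics.record(latency, ok=(i != 3))  # simulate one failed request
    print(metrics.alerts())
```

In production, these numbers would typically be computed over a sliding time window and exported to a monitoring backend rather than accumulated indefinitely in memory.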

Case Study: Successful Deployment of an LLM Application

Over the years, LLMs have been successfully deployed in a variety of applications that have gained huge market traction and revolutionized the way we carry out tasks. Some of the most successful and popular examples are listed below:

- GPT: OpenAI’s GPT models power ChatGPT, a generative AI chatbot that has taken the world by storm with its human-like text responses.

- PaLM 2: The successor to PaLM, this LLM was the backbone of Google’s Bard, a popular chatbot that rivals ChatGPT and has since been renamed Gemini.

- Llama 2: Built by Meta, Llama 2 is an open-source LLM. The latest versions of Llama can handle various content-related tasks in several languages.

Closing Thoughts

Building LLM applications for production requires expertise, in-depth knowledge, and considerable experience. Organizations can hire remote LLM engineers to deploy LLM applications successfully and navigate the labyrinth of intricate challenges. Put the strategies above into practice to deliver effective applications to your users. Apply cost-cutting and optimization practices to level up your LLM models, implement RAG where necessary, and leverage cutting-edge tools and technologies. Finally, set up real-time monitoring and keep your models continuously updated.