Posts By Noor

-

Finance News

What Steps Can Organizations Take to Improve Audit Readiness?

Regular audits are key to streamlined business operations, especially in finance. They allow you to catch mistakes early, prevent fraud, and...

-

Technology

The Future of Digital Entertainment: How Technology and Online Platforms Are Changing Our World

Digital entertainment is growing faster than ever before. From mobile apps to manga reading platforms, technology has transformed how we watch, read,...

-

Business Reviews

Pedro Vaz Paulo: Transforming Businesses Through Strategic Consulting

In today’s fast-changing business environment, companies need more than ambition to stay competitive. They require structured strategies, efficient operations, and the right...

-

Latest News

The Best CRM in Malaysia 2025: What Real Teams Need

About Malaysia: Malaysia is going digital fast. Internet penetration is 97%, and mobile connections outnumber the population. Business no longer just...

-

Information Technology

Learn Data Science from Scratch and Land Your First Job

Data science has become one of the most in-demand fields in tech today, offering ample opportunities for growth, learning, and career advancement....

-

EdTech

ICTEDUPolicy.org: Shaping the Future of Education Through ICT Integration

In the twenty-first century, education is inseparable from technology. From online learning platforms to artificial intelligence in classrooms, the world is rethinking...

-

Business Reviews

The Future of Business Consulting: How PedroVazPaulo is Driving Digital Transformation and Strategic Growth

In today’s hypercompetitive and digitally driven economy, businesses cannot afford to stand still. Technology is evolving at lightning speed, customer expectations are...

-

Travel Technology

From Blackouts to Camping Trips: Why More Canadians Are Turning to Portable Power Stations

In Canada, electricity is more than a convenience—it’s essential for survival. Heating systems, food storage, communication devices, and even access to clean...

-

Digital Marketing

Exploring 5 Key Forms of Digital Advertising

Online advertising now accounts for a significant share of business-to-customer communication. There are so many types of online ads that it is...

-

Digital Marketing

The Ultimate Guide to Marketing Your Podcast Online

Starting a podcast gives you a chance to make your voice heard, build your brand, and reach listeners worldwide. But...

-

How To

Why Hiring an Expert Lawyer Is One of the Most Important Decisions You Can Make

Legal issues are some of the most stressful challenges you can face in life. Whether you’re starting a business, navigating criminal charges,...

-

420HealthTech

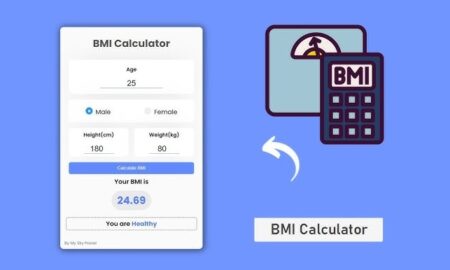

420HealthTechBMI Calculator for Women – Instantly Calculate Your Body Mass Index

Staying on top of health and fitness has never been easier. With a BMI Calculator for Women, you can quickly see whether...

-

HealthTech

How an ACFT Calculator Helps You Prepare for the Army Fitness Test

Did you know that nearly 40% of soldiers struggle to meet the Army Combat Fitness Test (ACFT) standards? If you’re one of...

-

348Technology

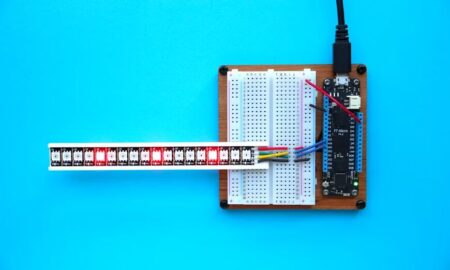

348TechnologyKey Considerations for Choosing Connectors in Electronics

Don’t let connector failures cost you thousands on your next electronics project Choosing the right connector for your application is important....

-

Digital Marketing

iZoneMedia360.com: Redefining Digital Marketing and Media for the Modern Age

In today’s hyperconnected world, businesses can no longer rely on traditional marketing alone. Digital presence is the new storefront, and visibility across...

-

Information Technology

Best Practices for Effective Website Development & Design 2025

Website development is evolving rapidly, and user-centric design makes sites more appealing. Moreover, advanced technologies and robust security measures...

-

Digital Banking

Technology Driving Innovation in Mobile Apps: How Engineering Excellence Powers Product Growth

In the fast-paced digital landscape, mobile applications have become the cornerstone of user engagement, driving businesses to enhance performance, security, and user...

-

Artificial Intelligence

Optimizing Deep Learning Deployment: How AI Infrastructure Is Transforming Efficiency

Artificial intelligence (AI) and deep learning are at the heart of modern technological innovation, powering advancements in autonomous vehicles, cloud computing, and...

-

Technology

Hydraulic Trash Rack Cleaning Machines with Scanners: What's Worth Knowing

In the realm of water management, the efficiency of hydraulic trash rack cleaning machines is paramount. These machines play a crucial role...