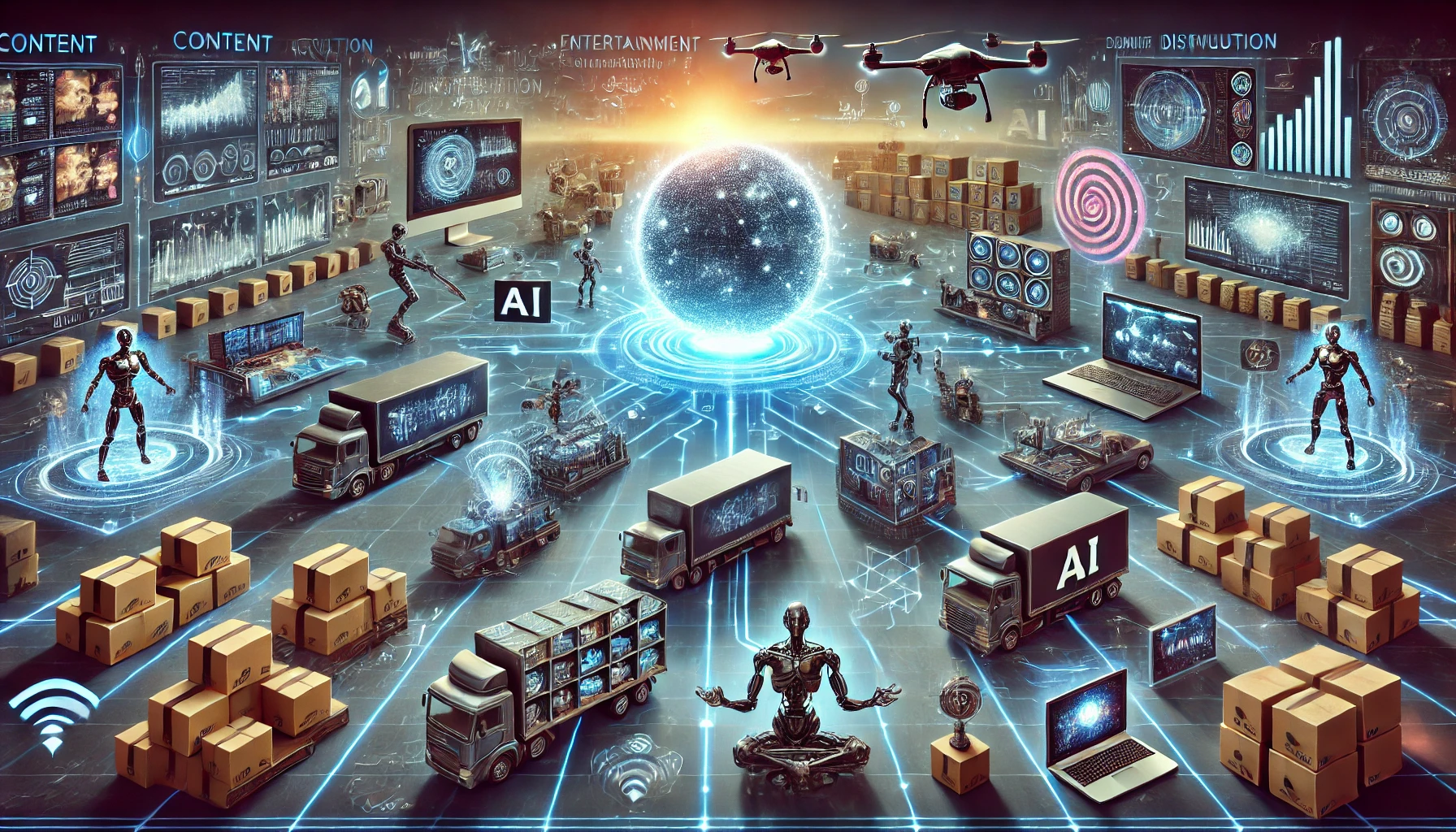

In 2026, the boundary between “playing” a game and “watching” a movie has evaporated. We have entered the era of Generative Entertainment, where content is no longer “static” but “elastic.” As of February 2026, the global market for AI in media and entertainment is projected to reach $4.8 billion, with major studios reporting that AI-powered pipelines have reduced production costs by up to 60%. For a modern Business, the “Blockbuster” model is being replaced by “Niche-at-Scale,” where AI allows a single concept to be spun into thousands of personalized versions. Meanwhile, Digital Marketing has moved into the realm of “Autonomous Fandom,” where virtual influencers with millions of followers are managed by sophisticated AI agents.

The Technological Architecture: The Real-Time Production Stack

By 2026, “Post-Production” is no longer a separate phase; it happens simultaneously with creation.

- Real-Time Rendering & “Act-One”: By February 2026, tools like Runway Gen-4 and OpenAI Sora 2 have largely addressed character consistency across shots. Directors now use performance-capture features such as Runway’s “Act-One” to map human emotional performances onto AI-generated characters in real time, enabling “Live Animation” that can be difficult to distinguish from live-action footage.

- Neural VOD (Video on Demand): Streaming platforms have transitioned to Neural Streaming. Instead of downloading a fixed video file, your device receives a “Scene Graph” and uses local AI to render the visuals. This enables instant “Deepfake Dubbing,” in which actors’ lips move in sync with the dialogue in 50 different languages.

- Spatial Audio 3.0: Using 5G-enabled “Wearable Audio” (Article 68), 2026 media offers “Object-Based Sound.” As you move your head, the audio environment shifts dynamically, making a “Living Room Movie” feel like a front-row seat at the actual filming location.
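At its core, object-based sound means storing each source as a positioned object and recomputing its direction relative to the listener’s head whenever the head turns. The sketch below shows only that geometric step, under stated assumptions: a 2D horizontal plane, a head-yaw angle supplied by some hypothetical head tracker, and a simple constant-power stereo pan standing in for the binaural (HRTF) rendering a real wearable would use. `SoundObject`, `relative_azimuth`, and `stereo_gains` are illustrative names, not any product’s API.

```python
import math
from dataclasses import dataclass


@dataclass
class SoundObject:
    """A sound source placed in the scene, with the listener at the origin."""
    name: str
    x: float  # metres to the right of the listener
    y: float  # metres in front of the listener


def relative_azimuth(obj: SoundObject, head_yaw_deg: float) -> float:
    """Direction of the source relative to where the head points, in degrees.

    0 = straight ahead, +90 = to the listener's right; wrapped into [-180, 180).
    head_yaw_deg is assumed to come from a head tracker (+ = turned right).
    """
    world_az = math.degrees(math.atan2(obj.x, obj.y))  # clockwise from "ahead"
    return (world_az - head_yaw_deg + 180.0) % 360.0 - 180.0


def stereo_gains(rel_az_deg: float) -> tuple[float, float]:
    """Constant-power stereo pan: a crude stand-in for HRTF/binaural rendering."""
    pan = math.sin(math.radians(rel_az_deg))  # -1 hard left, +1 hard right
    theta = (pan + 1.0) * math.pi / 4.0
    return math.cos(theta), math.sin(theta)  # (left, right); L^2 + R^2 == 1


# The violin sits to the listener's right; turning the head right re-centres it.
violin = SoundObject("violin", x=1.0, y=0.0)
for yaw in (0.0, 90.0):
    az = relative_azimuth(violin, yaw)
    left, right = stereo_gains(az)
    print(f"yaw={yaw:.0f}  azimuth={az:.0f}  L={left:.2f}  R={right:.2f}")
```

With the head facing forward the violin plays hard right; after a 90° turn to the right it is centred between both ears. The scene itself never changes, only the per-frame head pose, which is what lets the mix follow the listener.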

Artificial Intelligence: The “Sentient” Storyteller

In 2026, Artificial Intelligence is no longer just a tool for editors; it is a co-writer and a living character.

1. “Sentient” NPCs (Non-Player Characters)

The breakout trend of February 2026 is Memory-First AI in gaming. NPCs in titles from Ubisoft and Rockstar are now powered by “Agentic LLMs.” They don’t follow scripts; they have “Souls” (long-term memory buffers). They remember how you treated them three weeks ago, hold grudges, and can engage in unscripted, natural voice conversations that affect the game’s outcome.
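The “Soul” described above amounts to a persistent, per-player memory that the NPC consults before it responds. The following is a minimal sketch of such a buffer, assuming each interaction is logged with a hypothetical sentiment score in [-1, 1] and that older events fade with exponential decay. The class names, the 30-day half-life, and the grudge threshold are illustrative assumptions, not anything Ubisoft or Rockstar has published; in an agentic setup, the memories returned by `recall` would be fed into the LLM prompt rather than driving a fixed rule.

```python
from dataclasses import dataclass, field


@dataclass
class Memory:
    day: int          # in-game day the event happened
    summary: str      # short text that could be injected into an LLM prompt
    sentiment: float  # hypothetical scale: -1.0 hostile .. +1.0 friendly


@dataclass
class NPCMemory:
    half_life_days: float = 30.0    # illustrative: an event's weight halves every 30 days
    grudge_threshold: float = -0.3  # illustrative cut-off for "holds a grudge"
    events: list[Memory] = field(default_factory=list)

    def remember(self, day: int, summary: str, sentiment: float) -> None:
        clamped = max(-1.0, min(1.0, sentiment))
        self.events.append(Memory(day, summary, clamped))

    def _weight(self, m: Memory, today: int) -> float:
        # Exponential decay: older events count for less but never vanish.
        return 0.5 ** ((today - m.day) / self.half_life_days)

    def attitude(self, today: int) -> float:
        """Decay-weighted sum of past sentiment toward the player."""
        return sum(m.sentiment * self._weight(m, today) for m in self.events)

    def holds_grudge(self, today: int) -> bool:
        return self.attitude(today) <= self.grudge_threshold

    def recall(self, today: int, k: int = 3) -> list[str]:
        """The k most salient memories (strength x recency) for the NPC's prompt."""
        ranked = sorted(
            self.events,
            key=lambda m: abs(m.sentiment) * self._weight(m, today),
            reverse=True,
        )
        return [m.summary for m in ranked[:k]]


blacksmith = NPCMemory()
blacksmith.remember(day=0, summary="Player stole my hammer", sentiment=-1.0)
blacksmith.remember(day=10, summary="Player paid for a sword", sentiment=0.3)
print(blacksmith.holds_grudge(today=21))  # three weeks after the theft → True
print(blacksmith.recall(today=21, k=1))   # → ['Player stole my hammer']
```

Three weeks later, the decayed theft still outweighs the later, milder kindness, so the blacksmith holds a grudge. Months later, both memories have faded and the grudge lapses.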

2. Personalized Cinema (The “VCM” Mode)

2026 marks the rise of Variable Character Movies (VCM). Viewers can now opt into “Full-Generation Mode,” where AI integrates their likeness and voice into the film. You aren’t just watching a hero save the world; you are the hero, with the AI-generated script adjusting the dialogue to match your personality.

3. Agentic Game Design

Game development cycles have been slashed from 5 years to 18 months. Using Agentic UGC (User-Generated Content), players in Roblox or Fortnite can give a high-level voice command, such as “Build me a Neo-Tokyo cyberpunk level with three stealth missions,” and the AI agent handles the level design, asset placement, and logic in seconds.

Digital Marketing: The Virtual Influencer Takeover

Digital Marketing in 2026 is dominated by Synthetic Personas who never sleep.

- The Virtual Megastar: AI influencers like Aisha Neo and Lil Miquela have reached peak cultural relevance this February. With the virtual influencer market hitting $15.9 billion, these 24/7 digital beings give brands “Zero-Scandal” consistency and hyper-targeted engagement that human creators cannot match.

- AEO (Answer Engine Optimization) for “Vibes”: As fans ask their personal AI agents, “What should I watch to feel ‘Cerebral Melancholy’ tonight?”, studios are optimizing their “Emotional Metadata” so that their content surfaces as the top recommendation.

- “Dynamic Trailer” Marketing: In 2026, you don’t see the same movie trailer as your neighbor. AI analyzes your viewing history to generate a personalized trailer that highlights the elements you care about (e.g., focusing on the romance for one user and the action for another).
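A personalized trailer can be framed as a ranking problem: tag each candidate shot with story elements, estimate the viewer’s affinity for each element from their viewing history, and fill a fixed trailer length with the best-matching shots. The sketch below makes that concrete under simplifying assumptions. Hand-written tags, a per-title tag history, and a greedy time-budget fill are all illustrative choices; a production system would more likely use learned embeddings. `Shot`, `tag_affinity`, and `build_trailer` are hypothetical names.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Shot:
    shot_id: str
    seconds: float
    tags: frozenset[str]  # hand-labelled story elements, e.g. {"romance"}


def tag_affinity(history: list[set[str]]) -> dict[str, float]:
    """Fraction of the viewer's watched titles that carry each tag."""
    counts = Counter(tag for title_tags in history for tag in title_tags)
    n = max(len(history), 1)
    return {tag: c / n for tag, c in counts.items()}


def build_trailer(shots: list[Shot], history: list[set[str]], max_seconds: float) -> list[str]:
    """Greedily pick the best-matching shots that fit the time budget."""
    affinity = tag_affinity(history)

    def score(shot: Shot) -> float:
        return sum(affinity.get(t, 0.0) for t in shot.tags)

    chosen: set[str] = set()
    used = 0.0
    # sorted(..., reverse=True) is stable, so ties keep story order.
    for shot in sorted(shots, key=score, reverse=True):
        if used + shot.seconds <= max_seconds:
            chosen.add(shot.shot_id)
            used += shot.seconds
    # Present the selected shots in their original story order.
    return [s.shot_id for s in shots if s.shot_id in chosen]


shots = [
    Shot("kiss", 8.0, frozenset({"romance"})),
    Shot("car_chase", 10.0, frozenset({"action"})),
    Shot("rooftop_fight", 9.0, frozenset({"action"})),
    Shot("dance", 7.0, frozenset({"romance"})),
]
romance_fan = [{"romance", "drama"}, {"romance"}]
action_fan = [{"action"}, {"action", "thriller"}]
print(build_trailer(shots, romance_fan, max_seconds=20.0))  # → ['kiss', 'dance']
print(build_trailer(shots, action_fan, max_seconds=20.0))   # → ['car_chase', 'rooftop_fight']
```

The same pool of footage yields two different 20-second cuts. The greedy fill is a simple heuristic; treating shot selection as a knapsack problem would use the time budget more tightly.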

Business Transformation: From “Content” to “Context”

Internally, the entertainment Business model has shifted toward Experience-as-a-Service.

- The “Infinite Stream” Model: Leading platforms have moved away from “Seasons.” In 2026, some shows are “Infinite,” using AI to generate new daily episodes based on fan feedback and real-world events and turning a TV show into a living, breathing digital soap opera.

- The “Micro-IP” Economy: AI has democratized “Blockbuster” visuals. Small, independent teams are producing studio-quality films for 1/100th of the traditional cost, leading to a surge in “Micro-IP” where niche fanbases fund and co-create their own cinematic universes.

- Hybrid Monetization: In February 2026, the “Subscription vs. Ad-Supported” war has ended in a Hybrid Flow. Viewers can “Pay with Interaction,” allowing AI agents to conduct brief market research in exchange for premium, ad-free “Personalized Cinema” sessions.

Challenges: The “Slop” Backlash and Intellectual Property

The 2026 media revolution faces an “Authenticity Crisis.”

- The “AI Slop” Fatigue: As the internet is flooded with “Vapid Filler” (low-effort AI content), 2026 has seen a massive “Human-Made” movement. Premium brands are now using “100% Human-Curation” labels as a luxury status symbol.

- The “Character DNA” Legal Battle: Who owns the rights to an AI character that evolved based on player interactions? In February 2026, courts are grappling with “Synthetic IP Law,” trying to define where a studio’s copyright ends and a player’s creative “prompting” begins (Article 65).

Looking Forward: Toward “The Holodeck Reality”

As we look toward 2030, “Media” is moving toward “Neural Immersion.” We are approaching a world where Direct-to-Brain interfaces will allow us to experience stories not just through sight and sound, but through simulated “Qualia”—feeling the warmth of a digital sun or the adrenaline of a virtual chase.