Experienced actuary Aashish Verma explains how he cut data errors by 95% and saved hours of manual work

Digital Insurance surveyed 120 insurance leaders in early 2025 and found something surprising: 78% plan to increase tech spending this year, with AI as the top priority. But there’s a problem. Many insurance companies still run some operations on outdated tools like Access databases. These old systems create errors, slow down reporting, and make it nearly impossible to meet modern compliance requirements.

Aashish Verma knows this problem firsthand. As a Senior Actuarial Associate at an insurance company in Philadelphia, he worked on fixing systems after the company completed a major $6.3 billion acquisition of a group insurance business. The company was using Access databases for critical reporting, with data errors reaching 1-2%.

Verma holds two of the highest actuarial credentials: Fellow of the Institute and Faculty of Actuaries (UK) and Member of the American Academy of Actuaries (US). He also holds a Computer Science degree from Panjab University and a Master’s in Business Analytics from the University of Cincinnati. In his earlier consulting role from 2017 to 2022, he supported international insurance clients with regulatory compliance and actuarial consulting work, earning three promotions in under five years.

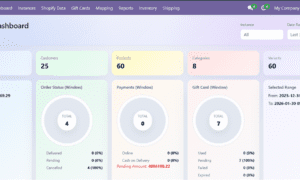

His work on two separate projects delivered significant results. For the Paid Family Leave reporting redesign, he eliminated Access database dependencies and integrated automated validation, reducing data errors to below 0.1%. For the reporting model transformation, he achieved an 85% reduction in update times, from a couple of hours to 5-10 minutes, while adding mid-month reporting capability. In this interview, Verma explains his approach and what other insurance companies can learn from the process.

Parts of your Paid Family Leave reporting process used Access databases, and you cut data errors from 1-2% to below 0.1%. What improvements did you make?

Only certain parts of the PFL reporting process relied on Access databases. The existing setup was functional, but I saw opportunities to improve internal efficiency. Data discrepancies across systems were hitting 1-2%, which left room for improvement in regulatory reporting accuracy. I built a unified reporting framework that eliminated the Access database dependencies where they existed. I integrated automated validation checks throughout the process. The result was getting discrepancies down to below 0.1%, which significantly improved the reliability of our regulatory submissions.
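To make the idea of an automated validation check concrete, here is a minimal sketch of a cross-system reconciliation that produces a discrepancy rate like the 1-2% and 0.1% figures quoted above. It is an illustration only, not Verma's actual framework: the function name `discrepancy_rate`, the claim IDs, the amounts, and the one-cent tolerance are all assumptions made for the example.

```python
from decimal import Decimal


def discrepancy_rate(source, report, tolerance=Decimal("0.01")):
    """Compare two {record_id: amount} mappings (hypothetical layout).

    A record counts as a discrepancy if it is missing from either
    system or if the two amounts differ by more than `tolerance`.
    Returns (rate, sorted list of mismatched record ids).
    """
    all_ids = set(source) | set(report)
    if not all_ids:
        return 0.0, []
    mismatched = sorted(
        rid for rid in all_ids
        if rid not in source
        or rid not in report
        or abs(source[rid] - report[rid]) > tolerance
    )
    return len(mismatched) / len(all_ids), mismatched


# Illustrative data only: an admin-system extract vs. a regulatory report.
admin_system = {
    "C1": Decimal("100.00"),
    "C2": Decimal("250.50"),
    "C3": Decimal("75.25"),
}
regulatory_report = {
    "C1": Decimal("100.00"),
    "C2": Decimal("250.55"),  # amount differs beyond the tolerance
    "C3": Decimal("75.25"),
}

rate, bad_ids = discrepancy_rate(admin_system, regulatory_report)
print(f"{rate:.1%} of records disagree: {bad_ids}")
```

Running a check like this on every reporting cycle, and blocking the submission when the rate exceeds a threshold, is one common way to turn a manual spot-check into an automated control.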

After your company acquired a major group benefits business, you worked on system remediation. What did that involve?

Following the acquisition, we had to remediate the processes, systems, and databases to work within our platform. This was critical for operational continuity and regulatory compliance. The work involved migrating from legacy systems and databases to our own platform. We had to ensure everything functioned properly for the group life and disability insurance business after the transition. It was a complex undertaking that required careful planning and execution to avoid disruptions.

You cut reporting update times by 85% from a couple of hours to 5-10 minutes. How did you achieve that?

I redesigned the reporting models for group life and disability products. The goal was to make them more transparent and informative, with enhanced automation. The new design also integrated functionality to support mid-month close reporting, which wasn’t possible with the old setup. The transformation reduced model update time from a couple of hours down to just 5-10 minutes.

You have a Master’s in Business Analytics with training in machine learning. How does that apply to actuarial work?

My Master’s program at the University of Cincinnati covered machine learning techniques, including linear and logistic regression, time series modeling, natural language processing, neural networks, and random forests. The program also included courses in data management, data visualization using Tableau, and A/B testing. In my final semester, I worked on a real-world capstone project, solving an analytical problem for a Swiss multinational food and drink processing conglomerate. Actuarial work is much broader than just regulatory reporting. It’s fundamentally about pricing, reserving, and capital and solvency modeling in the insurance industry. The field draws heavily on the same principles used in business analytics: understanding correlations between key variables and applying financial modeling and financial analysis. It’s essentially about taking those analytical tools and applying them in a highly specialized insurance context to understand risks, evaluate uncertainty, set sustainable and competitive insurance prices, and maintain the insurer’s financial strength. Traditional statistical methods remain important in actuarial work because they provide the rigor and transparency needed for professional standards. Machine learning is valuable for data analysis and pattern recognition, complementing traditional actuarial approaches. The key is knowing which tools to use for which problems.

What technical skills do you use in your actuarial work?

My Computer Science degree gave me a foundation in programming and quantitative problem-solving. I use SQL for database queries and Tableau for visualization. I also work with tools like SAP and ProVal for financial modeling. In my earlier consulting role with my former employer Aon, I worked on data analysis for pension plans and regulatory reporting. In my current position, I’ve worked on financial modeling, reserve evaluation, and reporting automation. Being able to work directly with data and systems makes a difference in how efficiently you can analyze and solve problems. The actuarial qualifications, FIA from IFoA UK and MAAA in the US, cover the professional standards and methods. The technical skills handle the implementation.

Based on your experience with the major system integration and reporting redesign, what lessons would you share?

System modernization projects need thorough planning. Understanding current processes and dependencies before making changes is critical. We had to maintain operational continuity while transitioning systems, which meant running parallel processes during migration. Building in validation and testing is essential. The automated validation checks we added for PFL reporting were key to reducing errors. And projects always take longer than expected, so realistic timelines matter. The technical work is only part of it. Getting buy-in from the people who use the systems and ensuring they can adapt to changes is just as important as the technical implementation.