Many organizations are spending more on data infrastructure than ever, yet gaining less control over how that money is actually used. Traditional data platforms were designed around a one-size-fits-all model, where very different workloads, SLAs, and business priorities compete for the same compute resources. As AI workloads grow, this approach starts to collapse. Query volumes rise, consumption becomes unpredictable, and cloud budgets begin to fluctuate without a clear mechanism to govern them.

“AI changed the rules,” says Ido Arieli Noga, CEO and co-founder of Yuki. “Workloads are more dynamic, less predictable, and much more expensive to run. One-size-fits-all setups can’t react in real time, so teams either over-provision or lose performance.”

On January 22, 2026, Yuki announced a $6 million seed funding round after operating in stealth for the past year. The round was led by Hyperwise Ventures, with participation from Fresh.fund, Tal Ventures, VelocitX, and Yakir Daniel, co-founder of Spot.io. The funding is intended to accelerate Yuki’s vision of building a control layer for modern data platforms, including Snowflake, Google BigQuery, and Iceberg-based data lakes.

“Data is the only resource in an organization that no one truly manages,” Noga explains. “We know how to store it, but not how to govern it. There are budgets, cloud infrastructure, and teams, but the data itself has no control system.”

Yuki is attempting to change that by introducing automation directly into how workloads are executed. Instead of acting as another monitoring or reporting layer, the platform operates in the execution path itself. Its core model, Yuki Fabric, continuously learns from workload behavior, performance requirements, and cost patterns, and then dynamically routes queries, allocates resources, and adjusts execution behavior in real time.

Most cloud management tools stop at visibility, Noga argues. “They show you what’s happening, but they don’t change anything. We focus on action. We change how workloads run in real time. Optimization becomes automatic instead of manual.”

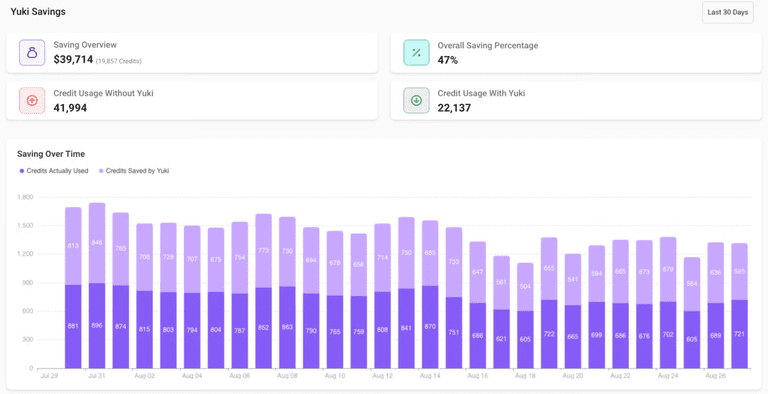

According to the company, early Yuki customers have achieved average savings of 42.6% on their data infrastructure costs, with some large enterprises reportedly saving millions of dollars. But the shift goes beyond cost. “The biggest change is cultural,” says Noga. “Teams stop thinking in warehouse sizes and tuning tricks, and start thinking in workloads and outcomes. Once the system adapts automatically, engineers trust it to use resources wisely and spend more time building products instead of managing infrastructure.”

Investor Yakir Daniel believes the timing is right. “After building Spot, it was easy for me to recognize the pain point Yuki is solving. They’re building the control layer for data cost optimization just as AI is turning data spend into a board-level issue.”

That pain is becoming sharper as companies move toward multi-engine environments. Today, organizations increasingly mix Snowflake, Databricks, ClickHouse, and open-source tools, often while adopting open table formats such as Iceberg. Without a unified control system, this fragmentation leads to duplicated pipelines, duplicated workloads, and growing inefficiencies.

For many teams, Snowflake cost optimization is one of the first places where this lack of control is felt, as warehouse sprawl and unpredictable compute usage drive budgets higher month after month.

“At many companies, the same workload is effectively running twice,” says Noga. “Not because they want it to, but because there’s no control layer above the engines. Yuki sits above them, so teams can handle complexity without constantly re-architecting their stack.”

The rise of Iceberg and similar open formats also shifts how governance must work. When storage and compute are decoupled and multiple engines access the same data, governance can no longer live inside a single platform.

“Permissions, ownership, and auditability have to move to the data layer,” Noga explains. “Once governance becomes data-centric, you also need real-time control over how workloads run on top of it. Otherwise efficiency breaks governance at scale.”

Another design decision behind Yuki is zero-change deployment. The platform does not require code or query modifications. “Most enterprises want to modernize, but they’re under constant delivery pressure,” Noga says. “If a solution requires migrations or refactors, it usually never gets adopted at scale. Zero-change deployment removes friction and lets teams prove value immediately.”

Yuki’s business model follows the same philosophy. Customers only pay a percentage of realized savings. “It changes the risk profile completely,” says Noga. “If we don’t save money, we don’t get paid. That makes adoption easier and aligns incentives on both sides.”

Yuki co-founders | Left to right: Ido Arieli Noga (CEO), Amir Peres (CTO)

Q&A with Ido Arieli Noga, CEO and co-founder, on Yuki’s Mission

1) Please tell us more about yourself.

I’m the CEO and co-founder of Yuki. I’m an efficiency freak by nature: I like to live life to the fullest, and I really don’t like wasting resources or energy. That mindset shapes everything I build, including Yuki: turning unnecessary friction into something simple and efficient.

2) As AI workloads grow, many enterprises are moving away from one-size-fits-all data stacks. Why has the need for a dedicated “control layer” become so urgent now?

AI changed the rules: workloads are more dynamic, less predictable, and much more expensive to run. One-size-fits-all setups can’t react in real time, so teams either over-provision or lose performance.

And the hidden cost is people: too many strong data teams waste hours tuning infrastructure instead of building data pipelines that drive real insights and revenue. A dedicated control layer is urgent because it takes the firefighting off humans, continuously balances cost and performance, and lets teams focus on business value.

3) Yuki reports average customer savings of 42.6%. What typically changes inside an organisation when data infrastructure becomes workload-aware rather than manually managed?

The biggest change is cultural. Teams stop thinking in terms of warehouse sizes and tuning tricks, and start thinking in terms of workloads and outcomes. Once the platform adapts automatically, engineers spend less time managing infrastructure and more time building products, moving faster, and trusting the system to use resources wisely.

4) You recently raised a $6m seed round led by Hyperwise Ventures, with backing from experienced operators in the cloud space. What convinced investors that Yuki is well-timed for today’s market?

Investors saw a clear timing shift: the AI blast created a workload blast. Data platforms suddenly became a lot more dynamic and expensive to run, and manual tuning just doesn’t scale.

At the same time, companies are going multi-engine, mixing Snowflake, Databricks, ClickHouse, and open-source tools. Without the time or knowledge to adapt every use case, they end up duplicating jobs and pipelines, and often running the same workload twice.

Add to that the wave of acquisitions across the data stack, which shows how fast the market is moving and how often the “right” tool changes. Yuki is well-timed because it brings a control layer above the engines, so teams can handle this chaos, cut waste, and keep focus on building customer value instead of constantly re-architecting.

5) The cloud management tools market is estimated at $9.8bn and still expanding. How does Yuki stand out in a field of more than 200 vendors?

Most tools in this space focus on visibility and reporting. Yuki focuses on action. We don’t just show what’s happening; we change how workloads run in real time.

We focus specifically on the data layer and base every decision on actual workload data, not static rules or assumptions. By sitting directly in the execution path and being workload-aware, Yuki optimizes automatically, without dashboards, alerts, or manual tuning.

6) Iceberg adoption is accelerating as companies separate storage from compute. How does this shift alter the economics and governance of enterprise data platforms?

As adoption of open table formats like Iceberg rises, governance becomes the real challenge.

When storage and compute are decoupled and multiple engines access the same data, governance can no longer live inside a single platform. Permissions, ownership, policies, and auditability have to move to the data layer itself. Without that shift, teams lose control quickly: the same data is accessed in different ways, by different engines, with no single source of truth.

This is also where a control layer matters. Once governance is data-centric, you need real-time control over how workloads run on top of it, making sure the right engine is used, policies are respected, and efficiency doesn’t break governance at scale.

7) Your platform deploys without code or query changes. How important is this simplicity for enterprises trying to modernise under tight budgets and delivery pressure?

It’s critical. Most enterprises want to modernise, but they’re under constant delivery pressure and can’t afford risky migrations or long refactors. If a solution requires code or query changes, it usually never gets adopted at scale.

Zero-change deployment removes friction. Teams can get value immediately, prove impact fast, and modernise incrementally, without slowing delivery or burning budget just to “get started.”

8) You charge only a percentage of realised savings. How does this model change the way large organisations evaluate risk and long-term value?

It changes the risk profile completely. Large organisations are used to consumption-based models from vendors like Snowflake and Databricks, where you pay only for what you actually use and get value from.

By charging a percentage of realised savings, we align with that same logic. There’s no upfront risk, no budget guesswork, and no long-term commitment before value is proven. If we don’t create savings, we don’t get paid, which makes the long-term value very clear and easy to justify.
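As a rough illustration of the arithmetic behind a savings-share model, here is a minimal Python sketch. The function name, the 25% share, and the $100,000 baseline are hypothetical values chosen for the example; the article does not state Yuki's actual rate or how it measures a baseline. Only the 42.6% figure comes from the company's reported average.

```python
def savings_share_fee(baseline_cost: float, actual_cost: float, share: float) -> float:
    """Return a fee equal to `share` of realized savings (zero if nothing was saved)."""
    if not 0.0 <= share <= 1.0:
        raise ValueError("share must be between 0 and 1")
    # Savings are only "realized" when actual spend falls below the baseline.
    realized_savings = max(baseline_cost - actual_cost, 0.0)
    return realized_savings * share


# Hypothetical monthly figures, for illustration only.
baseline = 100_000.0               # assumed spend before optimization
actual = baseline * (1 - 0.426)    # spend after the reported 42.6% average saving
fee = savings_share_fee(baseline, actual, share=0.25)  # 25% is an assumed rate

print(f"Savings: ${baseline - actual:,.0f}, fee: ${fee:,.0f}")
print(f"Fee with no savings: ${savings_share_fee(baseline, baseline, 0.25):,.0f}")
```

Under these assumptions the customer keeps most of the savings, and the fee drops to zero whenever spend does not fall below the baseline, which is the "if we don't create savings, we don't get paid" property described above.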

9) With customers in cybersecurity and media, what common mistakes do fast-growing, data-heavy companies make when managing fluctuating workloads?

A common mistake is over-provisioning because cybersecurity and media workloads are naturally spiky and hard to predict. Data volumes and concurrency can jump suddenly, so teams size for peak and keep it running.

That creates a lot of waste during normal periods and pushes teams into constant manual tuning instead of letting the platform adapt automatically in real time.
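To make the cost of sizing for peak concrete, the following Python sketch computes how much provisioned capacity sits idle when a spiky workload runs on a fixed allocation. The demand numbers and the function name are hypothetical, and this is a simplified model of the general over-provisioning problem, not a description of how Yuki measures or removes waste.

```python
def provisioning_waste(hourly_demand: list[float], provisioned: float) -> float:
    """Return the fraction of provisioned capacity left unused over the period."""
    if provisioned <= 0 or not hourly_demand:
        raise ValueError("need positive capacity and at least one demand sample")
    # Demand above the provisioned level cannot be served, so cap it.
    used = sum(min(demand, provisioned) for demand in hourly_demand)
    return 1.0 - used / (provisioned * len(hourly_demand))


# Hypothetical compute units needed per hour: mostly quiet, with one spike.
demand = [2, 2, 2, 2, 16, 2, 2, 2]

# Static approach: size for the peak hour and keep it running all day.
static_waste = provisioning_waste(demand, provisioned=max(demand))

print(f"Idle capacity when sized for peak: {static_waste:.1%}")
```

In this toy profile, a single spike forces a fixed allocation that is idle most of the time; a system that resized capacity each hour to match demand would, in the ideal case, leave none of it unused.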

10) With funding earmarked for R&D expansion in Israel and sales growth in the US, which product capabilities or market trends will matter most for Yuki over the next two years?

Two things will matter most: control and expansion.

On the product side, real-time, workload-aware control will become even more critical as AI and multi-engine setups grow. Customers will expect automatic optimization across engines, stronger governance at the data layer, and decisions driven by live workload behavior, not static rules.

On the market side, continued AI adoption and rising data costs in the US will keep pressure on teams to do more with less. Companies won’t buy more dashboards; they’ll buy systems that actively reduce waste without slowing delivery.

Conclusion

In industries like cybersecurity and media, where workloads are naturally spiky and unpredictable, Yuki often sees the same pattern. “Teams size for peak and keep everything running,” says Noga. “That creates waste and forces constant manual tuning. A control layer lets systems adapt automatically instead of locking companies into permanent over-provisioning.”

Looking ahead, Noga believes two things will define the next phase of enterprise data infrastructure: real-time control and execution-level automation. “Companies won’t buy more dashboards,” he says. “They’ll buy systems that actually change how their infrastructure behaves.”

With fresh funding and growing pressure on data budgets driven by AI adoption, Yuki is positioning itself not as another cloud management tool, but as an emerging control layer for the way enterprise data platforms operate.