The Principal Tech Lead for Knowledge Engineering at Amazon Web Services explains how ontologies and graph databases can solve the data modeling challenges behind applications that claim to understand human feelings.

The AI industry faces an uncomfortable truth: applications promising to understand human emotions, dreams, and surreal experiences are only as good as their underlying data architecture. A chatbot can generate sympathetic-sounding responses. A wellness app can track mood entries. But genuine understanding—the kind that maintains context across sessions, recognizes emotional patterns, and responds appropriately to nuanced psychological states—requires something traditional databases cannot provide.

This architectural challenge came into sharp focus at DreamWare Hackathon 2025, where 29 teams spent 72 hours building applications that attempted to “engineer the surreal.” Projects ranged from AI-powered emotional sanctuaries to systems that transform typing patterns into reactive music. Each faced the same fundamental question: how do you model something as unstructured as a dream?

The DreamWare Challenge

DreamWare Hackathon 2025 asked participants to build dream-like digital experiences—applications where interfaces behave organically, time operates non-linearly, and systems remember feelings rather than just data points. The event attracted submissions that pushed into genuinely novel technical territory.

The winning project, “Garden of Dead Projects” by ByteBusters (4.26/5.00), created an interactive experience exploring abandoned software. “The Neural AfterLife” by NeuraLife (4.15/5.00) built a memory preservation concept using GPT-4 integration with custom Three.js visualizations. “The Living Dreamspace” by The Dreamer (4.15/5.00) analyzed user behavior to generate reactive, emotion-driven music in real time.

What distinguished the top submissions wasn’t just technical polish—it was how thoughtfully they approached the representation problem. An emotion isn’t a row in a database. A dream doesn’t fit into a relational schema. The teams that recognized this architectural reality built more coherent experiences.

The Knowledge Engineering Perspective

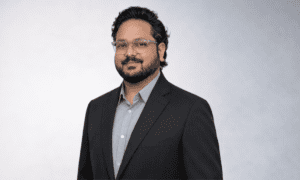

Veera V S B Nunna knows the representation problem intimately. As Principal Tech Lead for the Knowledge Engineering team at Amazon Web Services, he builds the ontologies and knowledge graphs that help AI systems understand complex, interconnected domains—the same architectural patterns that emotional AI applications desperately need.

Nunna’s work at AWS centers on RDF-based ontologies and knowledge graphs designed to make machine learning systems contextually aware. His patent, “Ontology-Based Approach For Modeling Service Dependencies In A Provider Network,” addresses how to model complex, non-obvious relationships between entities—the kind of relationships that exist between emotions, memories, time, and context in human experience.

His credentials span the full stack of cloud data architecture: AWS Certified Machine Learning Specialty, AWS Certified Solutions Architect, AWS Certified Big Data Specialty, and AWS Certified SysOps Administrator. He also holds an IBM Certified Application Developer certification from earlier in his career. Before leading knowledge engineering at AWS, Nunna architected data platforms for enterprise clients including Fannie Mae, FINRA, and state government systems. In those environments, representing complex relationships correctly isn’t optional, and data modeling errors have regulatory consequences.

Nunna’s educational background—a Master of Science in Computer Science from the University of New Mexico and a Bachelor of Technology in Information Technology from GITAM University—provided the theoretical foundation for his work in semantic technologies. His nearly decade-long tenure at AWS has given him practical experience implementing these concepts at global scale.

Nunna received the Amazon Inventor Award for his patent work on service dependency modeling. The core insight: when you need to understand why systems fail, you need to model not just what exists, but how things relate to each other in ways that aren’t immediately visible. A service might depend on another service that depends on a third service, and that dependency chain might only matter under specific conditions. Traditional monitoring misses these relationships. Knowledge graphs capture them.

Apply that thinking to emotional AI, and the problem becomes clear. A user’s anxiety about work might depend on their relationship with a specific colleague, which depends on a past conflict, which only matters when certain project types arise. Flat data models cannot represent this. Knowledge graphs can.
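The conditional dependency chain described above can be sketched in a few lines of Python. This is a minimal illustration, not Nunna’s patented method: the `Edge` type, the `active_chain` function, and the `client_facing_project` context tag are all hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Edge:
    source: str
    relation: str
    target: str
    # Hypothetical: the edge only counts when this tag is in the current context.
    condition: Optional[str] = None


# Illustrative data based on the example in the text.
EDGES = [
    Edge("WorkAnxiety", "dependsOn", "ColleagueRelationship"),
    Edge("ColleagueRelationship", "dependsOn", "PastConflict",
         condition="client_facing_project"),
]


def active_chain(start: str, context: set) -> list:
    """Follow edges from start, skipping edges whose condition is not met."""
    chain, current, seen = [], start, {start}
    while True:
        step = next(
            (e for e in EDGES
             if e.source == current
             and e.target not in seen
             and (e.condition is None or e.condition in context)),
            None,
        )
        if step is None:
            return chain
        chain.append(step)
        seen.add(step.target)
        current = step.target


# With no special context, the chain stops at the colleague relationship.
print([e.target for e in active_chain("WorkAnxiety", set())])
# When the triggering project type is present, the past conflict becomes relevant.
print([e.target for e in active_chain("WorkAnxiety", {"client_facing_project"})])
```

The same facts stored as independent rows would lose the link between the condition and the dependency it activates. Here the condition lives on the edge itself.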

Why Traditional Databases Fail for Emotional Data

Nunna’s work on service dependencies illuminates why emotional AI faces architectural challenges that most developers don’t anticipate. Consider what happens when an AI wellness application tries to remember that a user feels anxious on Monday mornings before important meetings, but calm on Monday mornings when the week is unscheduled. A relational database can store “anxiety + Monday + morning” as a record. But a flat record like that does not capture the conditional logic, the temporal patterns, or the causal relationships that make this understanding useful.

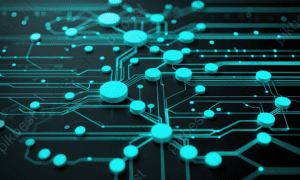

Knowledge graphs solve this through semantic relationships. In RDF (Resource Description Framework), information is stored as triples: subject-predicate-object statements that capture not just data, but meaning. “User-feelsAnxious-MondayMorning” becomes linked to “MondayMorning-precedes-ImportantMeeting” and “ImportantMeeting-triggers-Anxiety.” The graph structure allows AI systems to traverse these relationships and infer context that flat data cannot provide.
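To make the triple idea concrete, here is a minimal sketch that stores the three statements above as plain Python tuples and walks them. It deliberately avoids any RDF library. The helper names `objects` and `reachable` are assumptions made for illustration, and a real system would use a triple store and a query language such as SPARQL instead.

```python
from collections import deque

# The three statements from the text as (subject, predicate, object) tuples.
TRIPLES = {
    ("User", "feelsAnxious", "MondayMorning"),
    ("MondayMorning", "precedes", "ImportantMeeting"),
    ("ImportantMeeting", "triggers", "Anxiety"),
}


def objects(subject, predicate=None):
    """Objects of every triple with this subject (and predicate, if given)."""
    return sorted(
        o for s, p, o in TRIPLES
        if s == subject and (predicate is None or p == predicate)
    )


def reachable(start):
    """Breadth-first traversal: nodes reachable from start, in discovery order."""
    seen, queue = [], deque([start])
    while queue:
        node = queue.popleft()
        for nxt in objects(node):
            if nxt not in seen:
                seen.append(nxt)
                queue.append(nxt)
    return seen


print(objects("MondayMorning", "precedes"))  # ['ImportantMeeting']
print(reachable("User"))  # ['MondayMorning', 'ImportantMeeting', 'Anxiety']
```

Starting from the user, the traversal reaches “Anxiety” only by passing through the meeting, which is the context a single mood record would not carry.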

OWL (Web Ontology Language) extends this further by defining classes and constraints. An ontology for emotional states might define that “Anticipatory Anxiety” is a subclass of “Anxiety,” that it “hasTemporalTrigger” which can be any “FutureEvent,” and that “FutureEvent” objects have “Certainty” and “Stakes” properties affecting anxiety intensity. This isn’t just organization—it’s a machine-readable theory of how emotions work.
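The subclass part of such an ontology can be approximated with a small lookup table. The sketch below is only a stand-in for what an OWL reasoner does with `rdfs:subClassOf`. The `EmotionalState` superclass and the function names are hypothetical additions, not part of any published ontology.

```python
# Child class -> parent class. "EmotionalState" is a hypothetical top-level class.
SUBCLASS_OF = {
    "AnticipatoryAnxiety": "Anxiety",
    "Anxiety": "EmotionalState",
}


def superclasses(cls):
    """All ancestors of cls, nearest first (transitive closure of subClassOf)."""
    found = []
    while cls in SUBCLASS_OF:
        cls = SUBCLASS_OF[cls]
        if cls in found:  # guard against accidental cycles in the table
            break
        found.append(cls)
    return found


def is_a(cls, target):
    """True if cls is target or a (transitive) subclass of target."""
    return cls == target or target in superclasses(cls)


print(superclasses("AnticipatoryAnxiety"))     # ['Anxiety', 'EmotionalState']
print(is_a("AnticipatoryAnxiety", "Anxiety"))  # True
print(is_a("Anxiety", "AnticipatoryAnxiety"))  # False
```

Anything the application knows how to do for “Anxiety” then applies to “Anticipatory Anxiety” without being restated, which is the practical payoff of declaring the hierarchy once.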

The DreamWare project “ECHOES” creates an AI-powered emotional sanctuary, using GPT-4 to generate therapeutic narratives from users’ emotional inputs. It earned the “Best Concept & Depth” category award (4.30/5.00) for what judges described as “exceptional artistic depth and emotional sophistication.” But without structured knowledge representation, an application of this kind starts each session from zero: the AI cannot build a genuine understanding of a user’s emotional patterns over time.

Technical Patterns for Surreal Applications

Drawing on his experience building knowledge infrastructure at AWS, Nunna identifies specific architectural patterns that dream-like applications require—decisions that differ fundamentally from conventional data modeling.

Temporal Flexibility: Dreams don’t follow linear time. Knowledge graphs can model temporal relationships that traditional databases cannot: events that happen “before” other events in emotional significance rather than chronological order, or memories that exist in multiple time contexts simultaneously. RDF’s flexibility allows representing “User-remembers-ChildhoodEvent” alongside “ChildhoodEvent-emotionallyConnectedTo-CurrentAnxiety” without forcing artificial sequencing.

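Semantic Ambiguity: Dream logic permits contradiction. A symbol can mean safety and threat simultaneously. Standard OWL class membership is crisp (an individual either belongs to a class or does not), but a graph can still express this ambiguity: an entity can be asserted as an instance of multiple classes, and each membership statement can carry its own confidence weight, for example through a custom “partiallyInstanceOf” relation or a fuzzy or probabilistic extension of OWL. Rigid schemas tend to reject this kind of overlap.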

Relationship Richness: The DreamWare project “DreamGlobe” (4.07/5.00) lets users share dreams on a global map with AI voice interaction. The voice feature orchestrates music pausing, speech-to-Gemini processing, Google TTS synthesis, and music resumption. This coordination requires understanding relationships between modalities—how voice interrupts music, how emotional content affects response tone, how geographic context influences dream interpretation.
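For the Semantic Ambiguity pattern above, one way to hold contradictory meanings side by side is to attach a confidence weight to each membership statement. The sketch below assumes a hypothetical `Membership` record and invented weights. It is not a feature of standard OWL, where class membership is crisp.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Membership:
    entity: str
    cls: str
    confidence: float  # hypothetical weight between 0.0 and 1.0


# Illustrative data: one dream symbol that reads as both safety and threat.
MEMBERSHIPS = [
    Membership("Ocean", "SafetySymbol", 0.6),
    Membership("Ocean", "ThreatSymbol", 0.7),
]


def classes_of(entity, threshold=0.5):
    """Classes the entity belongs to at or above threshold, strongest first."""
    hits = [
        m for m in MEMBERSHIPS
        if m.entity == entity and m.confidence >= threshold
    ]
    hits.sort(key=lambda m: m.confidence, reverse=True)
    return [(m.cls, m.confidence) for m in hits]


print(classes_of("Ocean"))        # [('ThreatSymbol', 0.7), ('SafetySymbol', 0.6)]
print(classes_of("Ocean", 0.65))  # [('ThreatSymbol', 0.7)]
```

Both readings stay in the graph, and the application decides at query time how much ambiguity to surface.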

Amazon Neptune, Amazon’s managed graph database service, provides the infrastructure for this kind of knowledge representation at scale, and it is technology Nunna works with directly in his role. Neptune supports both RDF and property graph models, allowing hybrid approaches where some relationships are semantically precise (RDF) and others are computationally efficient (property graphs). For applications like “The Living Dreamspace,” which must analyze typing patterns in real time to generate reactive music, that query efficiency matters.

The integration point between knowledge graphs and large language models represents the current frontier. LLMs like GPT-4 generate fluent, contextually appropriate text—but they hallucinate facts and lose context across sessions. Knowledge graphs provide the grounding: verified relationships, consistent entities, structured memory. When “The Neural AfterLife” generates a memory reconstruction, a knowledge graph could ensure consistency with previously established memories, emotional states, and narrative continuity.
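A common shape for this grounding step is to look up what the graph already knows about an entity and place those facts in the prompt before the model is asked to generate anything. The sketch below stops short of calling any LLM. The fact data, the `Memory:` naming scheme, and the function names are all hypothetical, and nothing here reflects how “The Neural AfterLife” is actually built.

```python
# Hypothetical graph contents for a memory-reconstruction application.
FACTS = [
    ("Memory:LakeHouse", "involves", "Grandmother"),
    ("Memory:LakeHouse", "hasEmotion", "Nostalgia"),
    ("Memory:Graduation", "hasEmotion", "Pride"),
]


def facts_about(entity):
    """Every stored statement that mentions the entity as subject or object."""
    return [f"{s} {p} {o}" for s, p, o in FACTS if entity in (s, o)]


def build_grounded_prompt(entity, request):
    """Prefix the request with established facts the model must not contradict."""
    facts = facts_about(entity)
    lines = ["Established facts (do not contradict):"]
    if facts:
        lines.extend(f"- {fact}" for fact in facts)
    else:
        lines.append("- (none recorded)")
    lines.append("")
    lines.append(f"Request: {request}")
    return "\n".join(lines)


print(build_grounded_prompt("Memory:LakeHouse", "Describe this memory."))
```

The resulting string would be sent to whichever model the application uses. Facts about unrelated memories stay out of the prompt, and anything the model generates can later be checked against the same graph.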

Evaluating Technical Execution Through Architecture

For Nunna, evaluating projects that claim emotional intelligence requires looking beneath surface polish to assess architectural foundations. DreamWare’s evaluation criteria weighted Technical Execution at 40%, Concept & Depth at 30%, and Originality & Presence at 30%—but these categories interconnect.

A project with sophisticated UI but flat data architecture will feel hollow on repeated use. The AI responds appropriately in isolation but contradicts itself across sessions. The “emotional memory” is actually session-scoped local storage that evaporates when the browser closes. Technical execution, properly evaluated, includes whether the chosen architecture can deliver on the conceptual promise.

The distinction between impressive demos and production-ready systems often lies in knowledge representation. “DreamTime” by team Dreamer (4.00/5.00) creates 3D rooms that transform based on user-written dreams. In a hackathon demo, generating a single room from a single dream input looks magical. In production, users expect the system to remember their previous rooms, understand relationships between dreams, and maintain narrative consistency—all knowledge graph problems.

Projects that scored highest in Originality & Presence often implicitly solved knowledge representation challenges. “The Neural AfterLife” (4.40/5.00 in Originality) integrates GPT-4 with custom Three.js visualizations and Web Speech APIs. The “seamless, performant” browser experience judges noted requires more than API calls—it requires a coherent model of what the experience should be, maintained consistently across interactions.

Industry Implications

The emotional AI market—wellness applications, AI companions, therapeutic chatbots, creative tools—is expanding rapidly. Consumer expectations are also rising. Users who interact with AI systems daily develop intuitions about what genuine understanding feels like versus sophisticated pattern matching. They notice when an AI “forgets” something they mentioned previously. They sense when responses feel generic rather than contextually appropriate.

Applications that treat emotional data as a flat database problem will hit a ceiling. They can implement features but cannot deliver experiences that feel coherent over time. The AI that remembers you mentioned work stress three weeks ago and connects it to your current sleep issues—that requires knowledge architecture, not just conversation history. The wellness chatbot that recognizes your anxiety patterns are seasonal and adjusts its approach accordingly—that’s a knowledge graph problem.

The stakes extend beyond user experience. Emotional AI applications that make therapeutic claims face increasing regulatory scrutiny. An application that purports to provide mental health support must demonstrate that its understanding is genuine, not just pattern-matched. Knowledge graphs provide auditability: you can trace why the system made a particular inference, what relationships it followed, what evidence it weighted. This transparency matters for clinical applications, enterprise deployments, and consumer trust.
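Auditability in this sense means the system can return the chain of statements behind an inference, not just the conclusion. A minimal sketch, assuming a hypothetical set of triples and an `explain` helper introduced only for illustration:

```python
from collections import deque

# Hypothetical statements linking a reported stressor to a later symptom.
TRIPLES = [
    ("User", "reported", "WorkStress"),
    ("WorkStress", "contributesTo", "PoorSleep"),
    ("PoorSleep", "contributesTo", "LowMood"),
]


def explain(start, goal):
    """Return the triples linking start to goal, or None if no path exists."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for s, p, o in TRIPLES:
            if s == node and o not in visited:
                visited.add(o)
                queue.append((o, path + [(s, p, o)]))
    return None


# Each step of the returned path is a statement a reviewer can inspect.
for step in explain("User", "PoorSleep"):
    print(step)
```

Because the path is made of explicit statements, a reviewer can challenge any single link, which is much harder to do with an opaque model output.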

The most capable LLM applications in 2025 and beyond will be built on knowledge graphs that provide persistent, structured, semantically rich context. This isn’t speculative: AWS, Google Cloud, and Microsoft Azure are all investing in this architecture for enterprise applications. Amazon Neptune, Google Knowledge Graph, and Azure Cosmos DB with Gremlin support all reflect this direction. The same patterns apply to consumer emotional AI, with higher stakes for getting representation wrong.

Building AI That Actually Understands

DreamWare Hackathon 2025 demonstrated both the creative ambition and architectural challenges of emotional AI. Teams built applications that transform feelings into music, preserve memories across digital afterlives, and create globally-shared dreamscapes. Each project, in its own way, confronted the question of how to represent human experience in machine-readable form.

The knowledge engineering perspective offers a path forward: ontologies that define what emotional concepts mean, knowledge graphs that capture relationships between feelings and contexts, and integration patterns that ground generative AI in structured understanding.

Dreams may resist logic, but the systems that help us explore them need coherent architecture. The engineers building the next generation of emotional AI applications would do well to study how knowledge graphs work—and what they make possible that traditional databases cannot.

DreamWare Hackathon 2025 was organized by Hackathon Raptors, a Community Interest Company supporting innovation in software development. The event featured 29 teams competing across 72 hours with $2,300 in prizes. Veera V S B Nunna served as a judge evaluating projects for technical execution, conceptual depth, and originality.