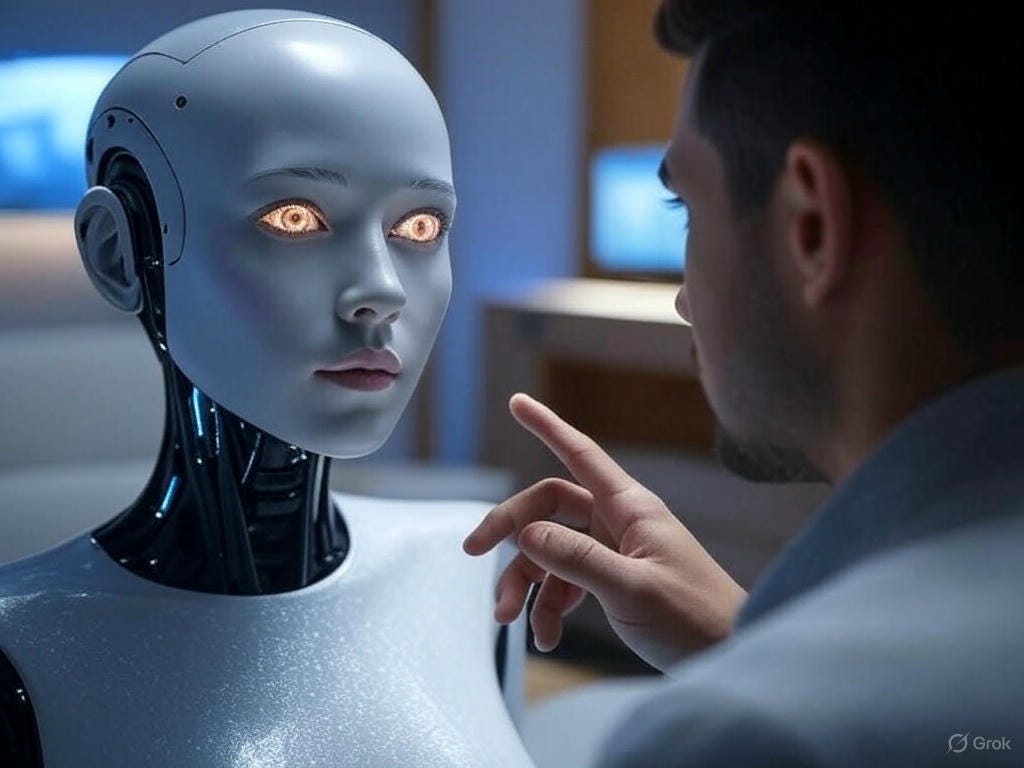

Technology trends often reveal more about human behavior than about technology itself. From social media to smartphones, tools evolve in response to unmet needs. One of the more debated examples of this pattern is the emergence of AI companionship systems. Rather than viewing this trend in isolation, it is more useful to understand it as part of a broader shift in how humans relate to digital systems.

From Utility Software to Relational Technology

Historically, software was built to perform tasks: calculate, store, retrieve, automate. Modern AI systems increasingly occupy a different role. They respond, adapt, and converse. This shift marks a transition from utility-focused tools to relational interfaces—systems designed to interact continuously rather than episodically.

AI companionship platforms sit at the far end of this spectrum. Their value is not derived from efficiency or output, but from interaction quality. This makes them a revealing case study for how technology is adapting to emotional and cognitive human needs.

Why Conversational Persistence Matters

Most digital interactions are transactional. A user searches, clicks, and leaves. Companion-style AI systems require persistence. They must maintain coherence across sessions, manage conversational continuity, and adapt over time.

This persistence exposes technical limitations that simpler applications never encounter. Issues such as context degradation, behavioral repetition, and response alignment become visible only through extended interaction. As a result, these systems often push conversational AI capabilities further than many enterprise tools.
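To make behavioral repetition concrete, here is a minimal sketch of one way a team might flag it: compare the word trigrams in a new reply against those in earlier replies. The function names and the choice of trigram overlap are assumptions for illustration, not a description of how any particular platform works.

```python
def word_ngrams(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Return the set of lowercase word n-grams in a text."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def repetition_score(new_reply: str, past_replies: list[str], n: int = 3) -> float:
    """Fraction of the new reply's n-grams already seen in past replies (0.0 to 1.0)."""
    new_grams = word_ngrams(new_reply, n)
    if not new_grams:
        # Replies shorter than n words have no n-grams to compare.
        return 0.0
    seen: set[tuple[str, ...]] = set()
    for reply in past_replies:
        seen |= word_ngrams(reply, n)
    return len(new_grams & seen) / len(new_grams)


if __name__ == "__main__":
    history = ["I am always here for you"]
    print(repetition_score("I am always happy to listen", history))
```

A score near 1.0 suggests the system is recycling phrasing. Where to set a threshold would depend on the product, and a real system would likely need something more robust than exact word matching.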

Redefining Engagement in Tech Products

Engagement has long been a core metric in technology products, but AI companionship challenges traditional definitions. High engagement may reflect usefulness, but it can also signal over-attachment or a design that favors retention over the user's interests.

This has prompted developers to rethink engagement measurement. Instead of focusing solely on time spent or session frequency, teams increasingly explore metrics related to interaction diversity, topic variability, and natural disengagement, meaning whether users end sessions on their own terms. These approaches suggest a shift toward healthier engagement models that could influence other AI-driven platforms.
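As a rough illustration of what such metrics could look like, the sketch below scores topic variability as the Shannon entropy of per-message topic labels and computes the share of sessions that ended with a deliberate sign-off. The topic labels, the `"user_goodbye"` ending label, and both function names are hypothetical. The sketch assumes some upstream step has already tagged messages and sessions.

```python
import math
from collections import Counter


def topic_entropy(topics: list[str]) -> float:
    """Shannon entropy (in bits) of the topic distribution; higher means more varied."""
    if not topics:
        return 0.0
    counts = Counter(topics)
    total = len(topics)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def natural_disengagement_rate(session_endings: list[str]) -> float:
    """Share of sessions labeled "user_goodbye" (a hypothetical label for a deliberate sign-off)."""
    if not session_endings:
        return 0.0
    return session_endings.count("user_goodbye") / len(session_endings)


if __name__ == "__main__":
    print(topic_entropy(["work", "music", "family", "travel"]))
    print(natural_disengagement_rate(["user_goodbye", "timeout"]))
```

Neither number means much in isolation. The idea is that they would be read alongside time spent and session frequency rather than in place of them.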

AI Companionship and Product Expectations

Another important signal is how users perceive AI behavior. In traditional software, predictability is a strength. In conversational AI, excessive predictability feels artificial. Users expect variation, memory, and responsiveness—even though these qualities are technically constrained.

Meeting these expectations requires complex orchestration between language models, memory layers, and interface design. AI companionship platforms therefore act as stress tests for user expectations around intelligence, responsiveness, and realism.
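One piece of that orchestration can be sketched as a small memory layer that combines long-lived facts with a rolling window of recent turns before each model call. Everything here is a hypothetical simplification: the `MemoryLayer` class, its method names, and the prompt layout are assumptions for illustration, and the language model call itself is left out.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryLayer:
    """Hypothetical memory layer: long-term facts plus a rolling window of turns."""
    max_recent_turns: int = 4
    facts: list[str] = field(default_factory=list)
    turns: list[tuple[str, str]] = field(default_factory=list)  # (speaker, text)

    def remember_fact(self, fact: str) -> None:
        # Store each fact once so the prompt does not grow with duplicates.
        if fact not in self.facts:
            self.facts.append(fact)

    def add_turn(self, speaker: str, text: str) -> None:
        self.turns.append((speaker, text))

    def build_prompt(self, user_message: str) -> str:
        # Only the most recent turns are included; older ones are dropped,
        # which is one simple source of the context degradation users notice.
        if self.max_recent_turns > 0:
            recent = self.turns[-self.max_recent_turns:]
        else:
            recent = []
        lines = ["Known facts about the user:"]
        lines += [f"- {fact}" for fact in self.facts]
        lines.append("Recent conversation:")
        lines += [f"{speaker}: {text}" for speaker, text in recent]
        lines.append(f"user: {user_message}")
        return "\n".join(lines)


if __name__ == "__main__":
    memory = MemoryLayer(max_recent_turns=2)
    memory.remember_fact("Prefers tea over coffee")
    memory.add_turn("user", "Good morning")
    memory.add_turn("assistant", "Good morning! How did you sleep?")
    print(memory.build_prompt("Pretty well, thanks."))
```

The trade-off is visible even at this scale: a small window keeps prompts short but forgets earlier turns, while stored facts persist but have to be chosen and maintained by some other process.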

Cultural Context and Acceptance

Public acceptance of AI companionship did not happen overnight. It reflects years of exposure to conversational agents in customer support, virtual assistants, and entertainment. As these systems improved, the idea of sustained AI interaction became less abstract.

Importantly, acceptance does not necessarily imply replacement of human relationships. For many users, these systems occupy a supplementary role—offering conversation, reflection, or routine interaction within a broader social ecosystem.

This nuance is often missed in surface-level discussions but is critical for understanding adoption patterns.

Risks of Over-Simplified Narratives

Much of the public conversation around AI companionship falls into extremes: either utopian optimism or dystopian concern. Both miss the practical reality. These systems are neither sentient nor inherently harmful; they are complex products shaped by design choices.

Oversimplified narratives obscure the real challenges developers face: managing long-term context, preventing behavioral loops, ensuring transparency, and balancing engagement with responsibility.

Implications for Future AI Products

The lessons learned from AI companionship systems extend far beyond companionship itself. Any AI product involving long-term interaction—education platforms, mental health tools, collaborative work systems—faces similar design challenges.

As AI becomes more conversational and persistent, the industry will need new frameworks for evaluating these systems, keeping them safe, and measuring their success. Companion-style systems offer early insight into what those frameworks might require.

Conclusion

AI companionship is less about romance or novelty and more about the evolution of human–technology interaction. The rise of the AI girlfriend concept signals changing expectations: users increasingly want technology that listens, adapts, and remembers.

For the tech industry, this trend highlights both opportunity and responsibility. Understanding why these systems exist—and what they reveal about user behavior—may be more important than the systems themselves.