Artificial intelligence has transformed the way digital media is created, edited, and shared. One of the most fascinating breakthroughs is hyper-realistic face swap technology. What once required professional studios and complex visual effects can now be done in seconds with a single uploaded image. The results look remarkably convincing, often blending seamlessly with lighting, expression, and movement in a video.

This rapid improvement has raised an important question. Why do today’s AI face swaps look so real, and what scientific advancements made them possible? Understanding the technology behind these lifelike face swaps helps creators, marketers, and businesses use the tools more effectively while appreciating the innovation that powers them.

Why have face swaps improved so dramatically in recent years?

Face swaps have become more convincing because modern AI models can analyze and replicate facial patterns, lighting, and motion with far greater accuracy than earlier technologies.

Over the past decade, artificial intelligence has evolved quickly. Traditional face swap tools relied on simple overlay methods that often appeared artificial. They struggled with facial angles, lighting differences, and expression mismatches. As deep learning models advanced, researchers began training neural networks to recognize highly detailed aspects of the human face.

This shift improved accuracy significantly. A report from MIT Technology Review highlighted that AI models trained on large data sets can now detect more than 100 distinct facial landmarks, compared to fewer than 20 in earlier systems. With that many reference points, the technology can follow movement around the eyes, subtle lip movements, and natural head tilts. These details make face swaps appear far more realistic.

The growth of short-form video platforms also played a role. As demand increased for expressive and visually engaging video content, developers accelerated innovation in facial animation models. The result is a new generation of face swap technology that is accessible to everyday users while offering near-professional quality.

How does AI identify and map facial features so accurately?

Modern AI uses neural networks that detect patterns in human faces and recreate them frame by frame in motion.

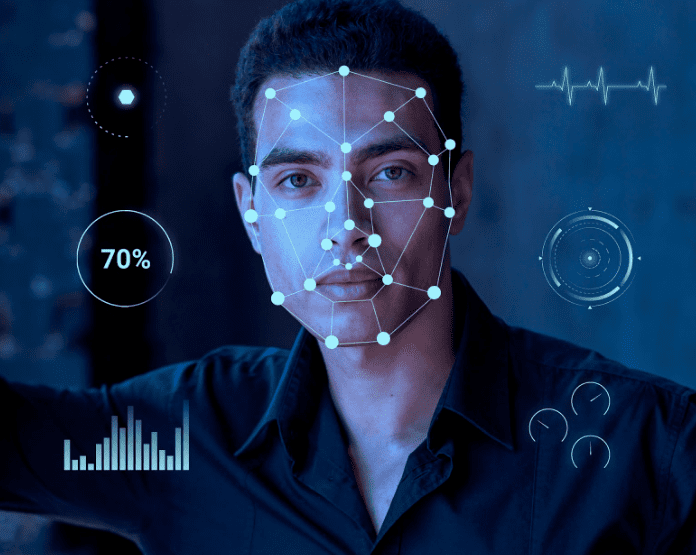

The foundation of hyper-realistic face swapping is facial recognition and landmark detection. Machine learning algorithms are trained on thousands of images to learn how facial structures behave. The process typically involves three core steps:

- Detection. The AI identifies the location of a face within an image or video.

- Mapping. The AI pinpoints exact facial landmarks such as the nose bridge, jawline, cheekbones, and eye corners.

- Reconstruction. The AI blends the new face into the original scene while adjusting for natural movement.
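
To make the mapping step more concrete, here is a minimal sketch of how two sets of landmarks could be aligned before any blending happens. It assumes landmarks are already available as (x, y) pairs, and it fits a simple scale, rotation, and shift between them. The function names `fit_similarity` and `apply_similarity` are hypothetical and are not taken from any particular face swap tool, which may well use a more elaborate alignment.

```python
def fit_similarity(src, dst):
    """Least-squares scale + rotation + translation mapping src landmarks onto dst.

    Points are (x, y) tuples. They are treated as complex numbers so that
    rotation and uniform scale become a single complex multiplication.
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matching landmark pairs")
    p = [complex(x, y) for x, y in src]
    q = [complex(x, y) for x, y in dst]
    p_mean = sum(p) / len(p)
    q_mean = sum(q) / len(q)
    # Centre both point sets, then solve for the complex factor `a`
    # that minimises sum |a * p_i - q_i|^2 over the centred points.
    num = sum((qi - q_mean) * (pi - p_mean).conjugate() for pi, qi in zip(p, q))
    den = sum(abs(pi - p_mean) ** 2 for pi in p)
    if den == 0:
        raise ValueError("source landmarks are all identical")
    a = num / den
    b = q_mean - a * p_mean
    return a, b


def apply_similarity(a, b, points):
    """Map (x, y) points through z -> a * z + b."""
    mapped = []
    for x, y in points:
        z = a * complex(x, y) + b
        mapped.append((z.real, z.imag))
    return mapped
```

In this sketch, `abs(a)` is the scale factor between the two faces and the angle of `a` is the head rotation, so a single pair of numbers describes how the source face must be resized, turned, and moved to sit on the target.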

Convolutional neural networks (CNNs) are especially effective at breaking down images into smaller components. They analyze textures, shadows, and edges to understand the geometry of a face. As these networks process more examples, they learn the subtle differences between expressions. This is why AI can match a smile or raised eyebrow even if the original photo did not show that expression.
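
The sliding-window operation at the heart of a convolutional layer can be sketched in a few lines. This example is only an illustration: it uses a hand-written Sobel kernel to pick out a vertical edge in a tiny grayscale patch, whereas a trained network learns its own kernel values from data. The names `convolve2d`, `SOBEL_X`, and `patch` are chosen for this sketch.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products.

    This is the cross-correlation form used in CNN layers.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hand-written kernel that responds to left-to-right brightness changes.
# In a trained CNN these values would be learned rather than fixed.
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

# Toy 4x4 grayscale patch: dark on the left, bright on the right.
patch = [[0, 0, 9, 9] for _ in range(4)]
edges = convolve2d(patch, SOBEL_X)
```

A large value in `edges` marks a spot where brightness changes sharply, while a flat region produces zero. Stacking many such filters is how a network builds up from edges to facial shapes.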

Realism improves further when the AI analyzes motion. Modern systems evaluate each frame in a video and adapt the face to match every shift in movement. This frame-level precision creates fluid, natural-looking sequences that make the swap nearly undetectable.

Why does lighting play such a big role in realistic face swaps?

AI can adjust lighting and color tones to match the original video, which helps the swapped face blend naturally into the scene.

Lighting has always been one of the biggest challenges in visual effects. If the brightness or color of the swapped face does not match the surrounding environment, the result looks fake. Today’s AI models use advanced shading and color correction techniques to solve this problem.

These systems analyze the video to determine the direction, intensity, and color of the light. They then apply these conditions dynamically to the swapped face. For example, if the original video includes a warm sunset glow, the AI will tint the swapped face accordingly. If a character turns their head and enters a shadow, the AI will adjust brightness to keep the blend seamless.
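
A very simple stand-in for this kind of color adaptation is to match the average and spread of each color channel in the face to those of the scene. The sketch below does that directly on RGB values. It is an assumption-laden simplification (real systems also model light direction and work per frame), and the helper names `match_channel` and `match_lighting` are hypothetical.

```python
from statistics import mean, pstdev


def match_channel(face, scene):
    """Shift and scale one color channel of the face so its mean and
    spread match the same channel sampled from the scene."""
    f_mean, f_std = mean(face), pstdev(face)
    s_mean, s_std = mean(scene), pstdev(scene)
    # Avoid dividing by zero when the face channel is perfectly flat.
    gain = s_std / f_std if f_std > 0 else 1.0
    return [min(255.0, max(0.0, (v - f_mean) * gain + s_mean)) for v in face]


def match_lighting(face_pixels, scene_pixels):
    """Apply match_channel to R, G, and B. Pixels are (r, g, b) tuples in 0..255."""
    channels = [
        match_channel([p[c] for p in face_pixels], [p[c] for p in scene_pixels])
        for c in range(3)
    ]
    return list(zip(*channels))
```

For instance, passing neutral gray face pixels together with pixels sampled from a warm sunset scene returns face pixels shifted toward the scene's stronger red and weaker blue, which is the tinting effect described above in its crudest form.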

A study from the Visual Computing Institute in Germany found that dynamic lighting adjustment increases realism by nearly 60 percent when compared to static blending techniques. This highlights how critical lighting adaptation is for convincing results.

Lighting consistency is one of the main reasons modern face swaps look natural, even when inserted into complex or fast-moving scenes.

How does AI maintain natural expression and movement?

AI models replicate micro-expressions and motion patterns by learning the way facial muscles move and interact in real time.

One of the most impressive aspects of modern face swap videos is expression accuracy. Older tools often created stiff or frozen faces. Today’s systems are capable of producing lifelike emotional reactions, including smiles, smirks, surprise, and eye movement.

This improvement is due to deep learning models that track how different facial muscles interact. For example, a genuine smile engages both the mouth and the muscles around the eyes. Advanced AI recognizes these combinations and applies them to the swapped face.

Motion tracking also plays a critical role. Each frame in the video contains movement data, and the AI must match that movement closely. If the person in the original video tilts their head slightly or laughs suddenly, the swapped face must follow in sync. This is achieved through temporal coherence algorithms that ensure smooth transitions between frames.
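
One of the simplest ways to encourage smooth transitions between frames is to average each landmark with its position in earlier frames. The sketch below uses an exponential moving average for this. It is an illustrative stand-in rather than the method any specific product uses, and `smooth_landmarks` and its `alpha` parameter are names assumed for this example.

```python
def smooth_landmarks(frames, alpha=0.5):
    """Exponential moving average over per-frame landmark lists.

    frames: list of frames, each a list of (x, y) landmarks in the same order.
    alpha:  weight of the current frame; lower values smooth more but lag more.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = []
    prev = None
    for landmarks in frames:
        if prev is None:
            # The first frame has no history, so it passes through unchanged.
            current = [(float(x), float(y)) for x, y in landmarks]
        else:
            current = [
                (alpha * x + (1 - alpha) * px, alpha * y + (1 - alpha) * py)
                for (x, y), (px, py) in zip(landmarks, prev)
            ]
        smoothed.append(current)
        prev = current
    return smoothed
```

The trade-off is visible in `alpha`: a low value removes jitter but makes the face trail behind a sudden laugh or head turn, while a value near 1 follows motion tightly but lets frame-to-frame noise through.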

Tools such as AI face swap by Viggle AI bring these capabilities together, combining facial mapping, motion tracking, and shading to create expressive and realistic videos. These tools allow creators to place themselves or characters into dynamic scenes with minimal effort while maintaining natural movement.

What makes hyper-realistic face swaps so convincing to the human eye?

Face swaps look convincing because they replicate visual cues that our brains rely on for facial recognition and emotional interpretation.

Humans are extremely good at spotting inconsistencies in faces. We instinctively notice when expressions do not match emotions or when lighting seems unnatural. Modern AI succeeds because it aligns with how people process facial information.

Three factors contribute to this realism:

- Consistency across frames. The AI ensures the face matches motion and emotion in every moment.

- Natural textures. Skin texture blending avoids the plastic look of older face swap models.

- Correct proportions. Facial geometry is preserved to avoid distortions or mismatches.
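
Texture blending in particular is easy to illustrate. A common way to avoid a hard seam is to fade the swapped face out toward its edges so the original scene shows through gradually. The sketch below does this on a single row of grayscale pixels. It is a simplified assumption about how such blending can work, and `feather_mask` and `blend_row` are hypothetical helper names.

```python
def feather_mask(width, border):
    """1D mask: 1.0 in the centre, ramping linearly to 0.0 over `border` pixels at each end."""
    mask = []
    for i in range(width):
        edge_dist = min(i, width - 1 - i)
        mask.append(min(1.0, edge_dist / border) if border > 0 else 1.0)
    return mask


def blend_row(face_row, scene_row, mask):
    """Per-pixel alpha blend: mask = 1 keeps the face, mask = 0 keeps the scene."""
    return [m * f + (1.0 - m) * s for f, s, m in zip(face_row, scene_row, mask)]
```

With a border of zero the face is pasted with a hard edge, which is roughly what older overlay tools produced. A wider border trades a little sharpness at the boundary for a seam that is much harder to notice.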

A study from the University of Cambridge found that people perceive faces as real when they align with two conditions: smooth motion transitions and accurate expression mapping. Modern AI excels in both categories, which is why face swap videos appear so authentic.

As AI continues learning from larger data sets, the results become even more precise. The technology is approaching a point where even trained editors may struggle to detect high-quality face swaps without specialized tools.

Conclusion

Hyper-realistic face swap technology has advanced rapidly thanks to improvements in facial recognition, deep learning, motion tracking, and lighting adaptation. These innovations allow AI to create seamless, expressive, and natural-looking videos that rival professional visual effects. Tools like AI face swap by Viggle AI demonstrate how accessible this technology has become, giving creators an easy way to generate lifelike content. As AI continues to evolve, face swaps will grow even more convincing, marking a new chapter in digital media and creative expression.