The accelerated proliferation of artificial intelligence (AI) in organizational decision-making has inaugurated an era of unprecedented operational efficiency and analytical capability. Contemporary analyses of global AI market trajectories point to rapid growth in adoption across diverse sectors, including healthcare, finance, manufacturing, and retail. AI systems now underpin decisions of profound societal consequence, ranging from clinical diagnostics to judicial risk assessments, thereby reshaping fundamental processes of governance and commerce. Enterprises increasingly deploy AI to optimize performance, enhance customer engagement, and refine strategic precision. Nevertheless, this technological ascendancy engenders complex ethical dilemmas that call for rigorous normative frameworks to ensure responsible implementation.

As AI architectures evolve toward greater autonomy, the need for comprehensive ethical governance becomes acute. Empirical studies underscore that effective integration transcends technical proficiency, demanding organizational preparedness, adaptive change management, and principled attention to fairness, transparency, and accountability. Ethical frameworks must mitigate immediate risks of bias and discrimination while anticipating systemic implications, including the exacerbation of social inequities and the erosion of privacy. This article synthesizes interdisciplinary perspectives spanning computer science, jurisprudence, philosophy, and social theory to articulate strategies for ethically aligned AI deployment. By embedding normative considerations within strategic design, organizations can reconcile innovation with societal values, maximizing benefit while minimizing harm.

Core Ethical Principles for AI Decision Systems

1. Fairness

Fairness in AI systems encompasses both procedural justice and distributive justice. Procedural justice ensures that decision-making processes treat individuals and groups equitably, while distributive justice focuses on the outcomes of these decisions. Predictive analytics often perpetuate historical biases embedded in training data, leading to discriminatory practices in areas such as lending, hiring, and customer prioritization. Achieving fairness requires meticulous attention to data representation, algorithmic design, and the social context in which systems operate.

Case Example: In 2018, Reuters reported that Amazon had scrapped an experimental AI recruiting tool after discovering that it systematically downgraded résumés containing the word “women’s”, a pattern the system had learned from ten years of male-dominated hiring data. Similarly, credit scoring algorithms have faced scrutiny for disproportionately denying loans to minority applicants when trained on historically biased datasets.
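One common way to make distributive fairness measurable is to compare selection rates across groups. The minimal Python sketch below computes a disparate impact ratio (the protected group's rate of favorable outcomes divided by the reference group's) on hypothetical screening data and flags ratios under 0.8, the “four-fifths” rule of thumb from US employment-selection guidance. The data, group labels, and function names are illustrative assumptions, and a single ratio is a screening signal rather than proof of discrimination.

```python
from collections import defaultdict

def selection_rates(outcomes, groups):
    """Fraction of favorable outcomes (1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for outcome, group in zip(outcomes, groups):
        totals[group] += 1
        positives[group] += outcome
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(outcomes, groups, protected, reference):
    """Selection rate of the protected group divided by that of the reference group."""
    rates = selection_rates(outcomes, groups)
    return rates[protected] / rates[reference]

# Hypothetical screening decisions: 1 = advanced to interview, 0 = rejected.
outcomes = [1, 0, 0, 1, 0, 1, 1, 1, 0, 1]
groups = ["F", "F", "F", "F", "F", "M", "M", "M", "M", "M"]

ratio = disparate_impact_ratio(outcomes, groups, protected="F", reference="M")
print(f"disparate impact ratio: {ratio:.2f}")  # 0.40 / 0.80 = 0.50
if ratio < 0.8:  # four-fifths rule of thumb
    print("below four-fifths threshold: flag for review")
```

Because the ratio only looks at outcomes, it says nothing about why rates differ; it is best used to decide where a deeper review is needed.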

2. Transparency

Transparency is critical for fostering trust and enabling effective oversight. It involves two dimensions: technical transparency, which explains how algorithms function, and procedural transparency, which clarifies how decisions are reached. In high-stakes domains such as healthcare, finance, and criminal justice, transparency is essential for regulatory compliance and public confidence. However, transparency must be balanced against intellectual property protection and system security. Explainable AI techniques and clear documentation of decision processes are key strategies for achieving this principle.

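Case Example: The European Union’s General Data Protection Regulation (GDPR) requires organizations to give individuals “meaningful information about the logic involved” in solely automated decisions that significantly affect them (Articles 13 to 15, read with Article 22), a safeguard often described as a “right to explanation”, although its precise scope remains debated. Financial institutions using AI for credit approvals have adopted explainable AI models to meet such requirements. Wells Fargo, for example, has applied interpretable machine learning techniques to clarify credit decisions, with the aim of improving customer trust and regulatory alignment.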
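To make the idea of explaining a credit decision concrete, the sketch below assumes a simple additive (logistic) scoring model and reports “reason codes”: the features that lowered an applicant's score the most relative to a reference applicant. The weights, baseline, and feature names are hypothetical and not drawn from any real lender; for complex models, post-hoc attribution methods such as SHAP play a similar role.

```python
import math

# Hypothetical, illustrative coefficients for an additive (logistic) credit model.
WEIGHTS = {"income_k": 0.04, "debt_to_income": -3.0, "late_payments": -0.8}
INTERCEPT = -1.0
# Hypothetical reference applicant against which contributions are measured.
BASELINE = {"income_k": 50.0, "debt_to_income": 0.30, "late_payments": 0.0}

def approval_probability(applicant):
    """Logistic model: sigmoid of intercept plus weighted features."""
    z = INTERCEPT + sum(w * applicant[f] for f, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))

def contributions(applicant):
    """Per-feature effect on the log-odds relative to the baseline applicant."""
    return {f: w * (applicant[f] - BASELINE[f]) for f, w in WEIGHTS.items()}

def reason_codes(applicant, top_n=2):
    """Features that pushed the score down the most, most harmful first."""
    harmful = [(c, f) for f, c in contributions(applicant).items() if c < 0]
    return [f for c, f in sorted(harmful)[:top_n]]

applicant = {"income_k": 40.0, "debt_to_income": 0.55, "late_payments": 3}
print(f"approval probability: {approval_probability(applicant):.3f}")
print("main reasons:", reason_codes(applicant))  # ['late_payments', 'debt_to_income']
```

Because each contribution is simply a weight times a deviation from the baseline, the explanation is exact for this model class, which is one reason lenders favor interpretable models in regulated decisions.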

3. Privacy

Privacy in AI extends beyond traditional data protection to address risks of inference, profiling, and the aggregation of seemingly benign data into sensitive insights. Emerging techniques such as differential privacy and federated learning support privacy-by-design approaches while limiting the loss of model utility. Differential privacy adds carefully calibrated random noise to computations so that their outputs reveal little about any single individual’s record, with a parameter, usually written ε, that trades accuracy against protection; federated learning trains models across distributed devices or institutions without centralizing the raw data. These methods are vital for maintaining confidentiality, particularly in sectors like healthcare where data sovereignty and patient rights are paramount.
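The Laplace mechanism is a standard way to add the calibrated noise that differential privacy relies on. The sketch below releases a count with noise of scale 1/ε, which suffices because adding or removing one person changes a count by at most 1. The records and the values of ε are hypothetical, and the naive floating-point sampling used here has known weaknesses, so production systems should rely on a vetted differential privacy library.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng):
    """Release a count under epsilon-differential privacy via the Laplace mechanism.

    A counting query has sensitivity 1 (one person changes it by at most 1),
    so Laplace noise with scale 1/epsilon is sufficient."""
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)

# Hypothetical patient records (20 of the 60 are flagged as diabetic).
records = [{"age": a, "diabetic": a % 3 == 0} for a in range(30, 90)]
rng = random.Random(7)
for eps in (1.0, 0.1):
    noisy = private_count(records, lambda r: r["diabetic"], eps, rng)
    print(f"epsilon={eps}: noisy count = {noisy:.1f}")
```

Smaller values of ε give stronger privacy guarantees at the cost of noisier answers; the noise is unbiased, so aggregate statistics remain usable.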

Case Example: Google’s use of federated learning in its Gboard keyboard allows predictive text models to improve without centralizing user data, reducing privacy risks. Similarly, Apple employs differential privacy to collect usage statistics while preserving individual anonymity.
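Federated learning deployments such as the Gboard example are typically built on federated averaging: each client improves a shared model on its own data, and the server only averages the resulting parameters. The toy sketch below assumes a one-parameter linear model and two hypothetical clients; it illustrates the data flow, not Google's production system. Shared updates can still leak information, which is why federated learning is often combined with secure aggregation or differential privacy.

```python
# Minimal federated-averaging sketch for a one-parameter model y = w * x.
# Each client trains locally on its own data; only the updated weight is shared.

def local_update(w, data, lr=0.01, epochs=5):
    """Run a few epochs of full-batch gradient descent on mean squared error."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_round(w_global, clients):
    """Average client models, weighted by each client's number of examples."""
    results = [(local_update(w_global, data), len(data)) for data in clients]
    total = sum(n for _, n in results)
    return sum(w * n for w, n in results) / total

# Hypothetical on-device datasets; raw (x, y) pairs never leave their client.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0), (4.0, 12.0), (5.0, 15.0)],
]
w = 0.0
for _ in range(50):
    w = federated_round(w, clients)
print(f"global weight after 50 rounds: {w:.4f}")  # 3.0000
```

Weighting by sample count means clients with more data influence the global model proportionally, mirroring what centralized training on the pooled data would do.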

4. Accountability

Accountability establishes clear lines of responsibility for AI decisions and their consequences. It requires governance structures that define roles across data providers, algorithm developers, system implementers, and decision-makers. Mechanisms for redress, systematic monitoring, and audit trails are essential to uphold accountability. Maintaining detailed records of system behavior and decision logic facilitates compliance and enables corrective action when harm occurs. In complex environments such as healthcare, accountability frameworks must address challenges posed by distributed systems and multi-jurisdictional data flows.
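Audit trails support accountability best when they are tamper-evident. The sketch below assumes a hypothetical `DecisionAuditLog` class that records each automated decision with its model version, inputs, output, and optional human reviewer, and chains entries with SHA-256 hashes so that editing an earlier record breaks verification. Field names are illustrative; a real deployment would also store the latest hash somewhere the log's operators cannot rewrite, since anyone able to rewrite the whole chain can recompute it.

```python
import copy
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(body):
    """SHA-256 over a canonical JSON encoding (sorted keys) of an entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

class DecisionAuditLog:
    """Hypothetical append-only decision log; each entry embeds the previous
    entry's hash, so altering an earlier record breaks verification."""

    def __init__(self):
        self.entries = []

    def record(self, model_version, inputs, output, reviewer=None):
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "inputs": copy.deepcopy(inputs),  # snapshot so later caller edits don't leak in
            "output": output,
            "reviewer": reviewer,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash and check each link to the previous entry."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

log = DecisionAuditLog()
log.record("credit-model-v1.3", {"applicant_id": "A-001", "score": 0.42}, "declined")
log.record("credit-model-v1.3", {"applicant_id": "A-002", "score": 0.81}, "approved",
           reviewer="analyst-7")
print("chain intact:", log.verify())  # chain intact: True
log.entries[0]["output"] = "approved"  # simulate after-the-fact tampering
print("chain intact:", log.verify())  # chain intact: False
```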

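Case Example: The COMPAS algorithm, used in U.S. criminal justice to predict recidivism, faced criticism for racial bias after a 2016 ProPublica analysis found that Black defendants who did not reoffend were nearly twice as likely as white defendants who did not reoffend to be labeled higher risk. The controversy sparked calls for accountability and transparency in algorithmic decision-making; in State v. Loomis (2016), for example, the Wisconsin Supreme Court allowed COMPAS scores to be considered at sentencing only when accompanied by written cautions about their limitations. Other jurisdictions introduced audit mechanisms and human oversight requirements.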
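Much of the COMPAS debate turned on error rates rather than overall accuracy. A basic audit compares false positive rates (people who did not reoffend but were labeled high risk) across groups, as in the sketch below; the data and group labels are hypothetical. Later research showed that when base rates differ between groups, equal error rates and equal calibration generally cannot both be achieved, so auditors must decide which criterion a given context requires.

```python
def false_positive_rate(y_true, y_pred):
    """Share of actual negatives (did not reoffend) that were labeled high risk."""
    preds_for_negatives = [p for t, p in zip(y_true, y_pred) if t == 0]
    if not preds_for_negatives:
        return float("nan")  # undefined when a group has no negatives
    return sum(preds_for_negatives) / len(preds_for_negatives)

def fpr_by_group(y_true, y_pred, groups):
    """False positive rate computed separately for each group."""
    rates = {}
    for g in sorted(set(groups)):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        rates[g] = false_positive_rate([y_true[i] for i in idx], [y_pred[i] for i in idx])
    return rates

# Hypothetical audit sample: y_true = 1 if the person reoffended,
# y_pred = 1 if the tool labeled them high risk. First six rows are group A.
y_true = [0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 1, 1]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0, 0, 0, 1, 1]
groups = ["A"] * 6 + ["B"] * 6
print(fpr_by_group(y_true, y_pred, groups))  # {'A': 0.5, 'B': 0.25}
```

The same loop can be pointed at false negative rates to check the other half of error-rate balance.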

Implementation Strategies and Best Practices

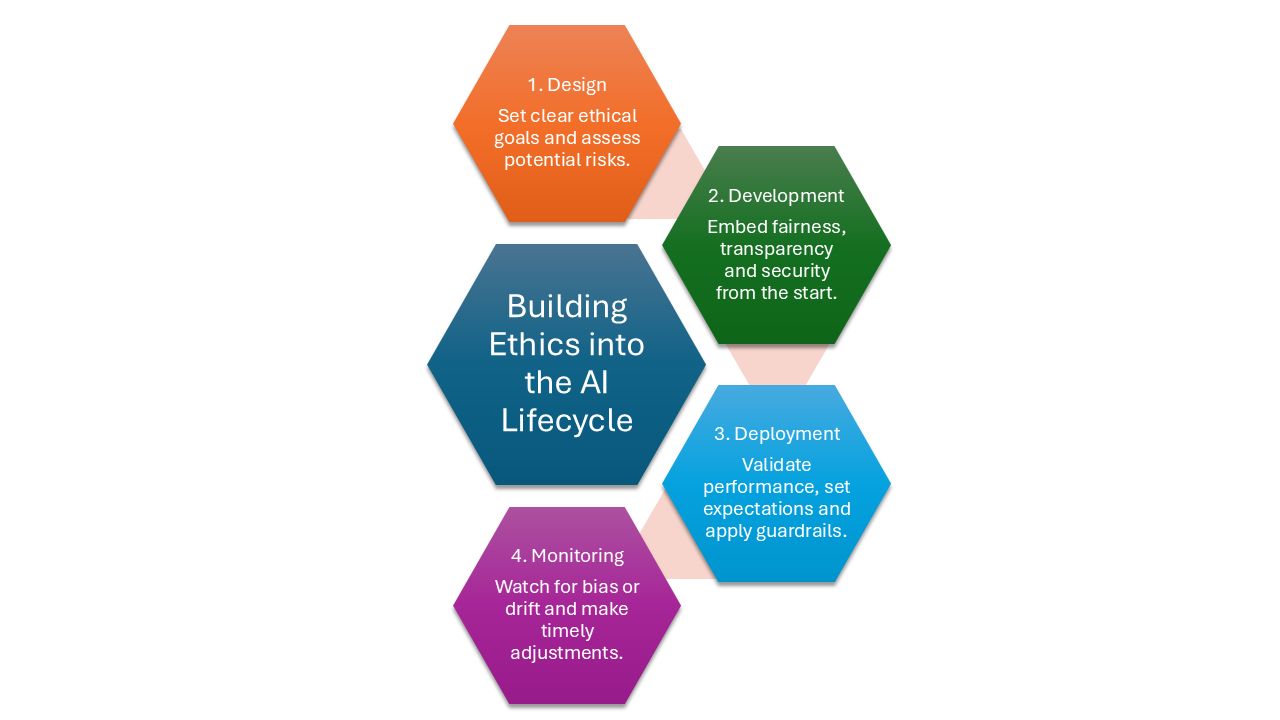

Integrating ethical standards into AI practice requires a systematic approach spanning the entire lifecycle, from design through deployment and ongoing monitoring. Leading organizations increasingly embed ethical considerations into AI strategies, aligning technological innovation with Environmental, Social, and Governance (ESG) objectives. Ethical Impact Assessments, akin to environmental impact assessments, should be conducted early to evaluate risks, benefits, and unintended consequences for diverse stakeholders, and repeated periodically so that their conclusions remain valid as systems, data, and social contexts change.

Technical implementation calls for dedicated methods. Fairness-oriented techniques such as adversarial debiasing, reweighting, and fair representation learning can reduce discriminatory outcomes, while explainable AI techniques enhance transparency. Privacy-preserving approaches, including differential privacy and federated learning, should be integrated into data collection and model training. Organizations should also establish explicit ethical benchmarks, such as fairness metrics tracked alongside accuracy, to guide development.
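Reweighting is the simplest of these fairness methods to illustrate. The sketch below follows the reweighing scheme associated with Kamiran and Calders, assigning each training example the weight P(group) × P(label) / P(group, label) so that group membership and outcome become statistically independent in the weighted data. The hiring data are hypothetical; the resulting weights could then be passed to a learner, for instance through the `sample_weight` argument that many scikit-learn estimators accept in `fit`.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Reweighing: w(g, y) = P(g) * P(y) / P(g, y).

    (group, label) pairs that are rarer than independence would predict get
    weights above 1; over-represented pairs get weights below 1."""
    n = len(labels)
    count_g = Counter(groups)
    count_y = Counter(labels)
    count_gy = Counter(zip(groups, labels))
    return [
        (count_g[g] / n) * (count_y[y] / n) / (count_gy[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]

def weighted_positive_rate(group, groups, labels, weights):
    """Weighted share of positive labels within one group."""
    num = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    den = sum(w for g, w in zip(groups, weights) if g == group)
    return num / den

# Hypothetical historical hiring labels: 1 = hired.
groups = ["F", "F", "F", "F", "M", "M", "M", "M"]
labels = [1, 0, 0, 0, 1, 1, 1, 0]
weights = reweighing_weights(groups, labels)
print([round(w, 3) for w in weights])
for g in ("F", "M"):
    print(g, round(weighted_positive_rate(g, groups, labels, weights), 3))
```

Unweighted, the hiring rates are 0.25 for F and 0.75 for M; after reweighing both groups have the same weighted rate, so a model trained on the weighted data no longer sees group membership as predictive of the label.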

Governance structures play a pivotal role. AI ethics committees, escalation mechanisms, and embedded ethical reviews foster accountability. Organizational culture and leadership significantly influence success, requiring training programs and change management strategies that prioritize ethics alongside business goals. Stakeholder engagement must be substantive, incorporating advisory boards, feedback loops, and public forums to ensure inclusivity and responsiveness. Documenting these interactions demonstrates a commitment to ethical integrity and helps produce AI systems that deliver innovation while safeguarding societal values.

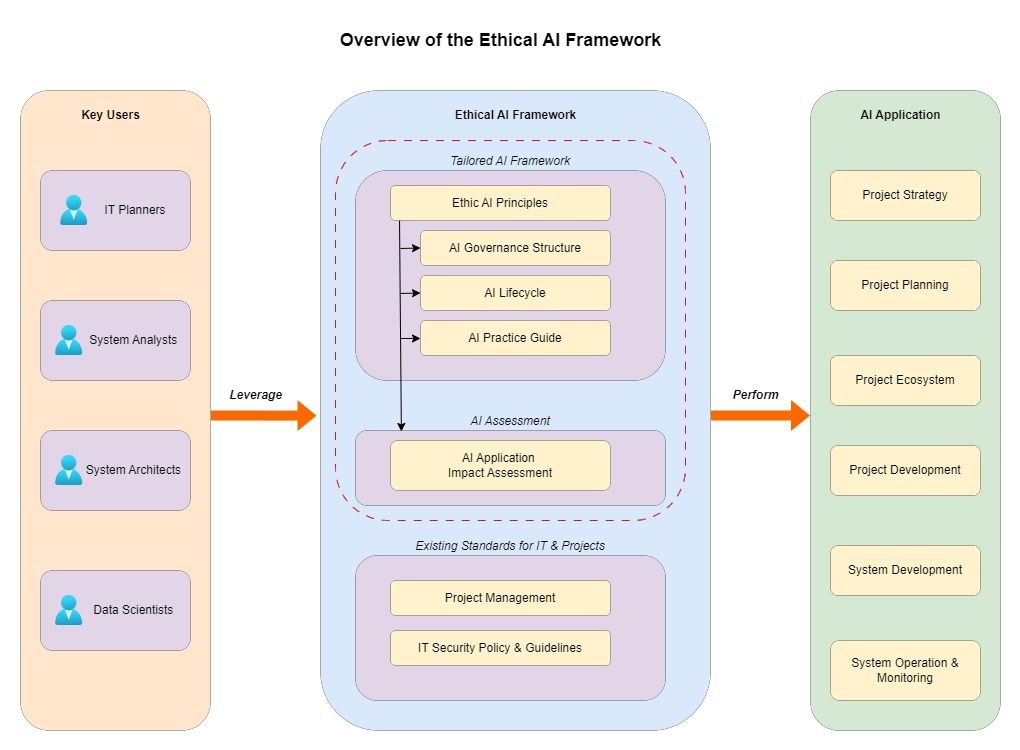

Applying the Ethical AI Framework in IT Projects

The Ethical AI Framework serves as a comprehensive guide for organizations adopting AI and big data analytics in IT projects and services. It is designed to support both technical teams during development and maintenance, and leadership in establishing governance structures that ensure accountability and public trust.

1. Governance and Accountability

Governance and accountability form the backbone of an Ethical AI Framework, ensuring organizations adopt AI responsibly and transparently. Effective governance establishes clear roles, responsibilities, and oversight mechanisms across the AI lifecycle, from design to deployment. It involves conducting ethical impact assessments, monitoring compliance, and implementing escalation procedures for addressing risks. Accountability requires organizations to safeguard public interest by evaluating societal impacts, mitigating unintended consequences, and maintaining transparency in decision-making processes. By embedding these practices, organizations can build trust with stakeholders, comply with regulatory standards, and demonstrate a commitment to ethical principles while fostering innovation and sustainable growth.

Case Example: Microsoft’s Responsible AI Standard outlines governance processes for risk assessment and ethical compliance, ensuring accountability across product teams.

2. Strategic Integration

Strategic integration ensures that ethical principles are embedded at the earliest stages of AI adoption rather than added as an afterthought. IT planners and executives should reference the Ethical AI Framework during strategy formulation, ecosystem design, and technology selection. This proactive approach aligns AI initiatives with corporate values, regulatory requirements, and long-term sustainability goals. By incorporating fairness, transparency, privacy, and accountability into strategic planning, organizations can mitigate risks, enhance stakeholder trust, and ensure compliance. Early integration also facilitates smoother implementation, reduces costly retrofits, and positions AI systems as both innovative and ethically responsible within dynamic business environments.

Case Example: Salesforce’s Office of Ethical and Humane Use integrates ethics into product strategy, influencing design decisions from inception.

3. Continuous Monitoring

Continuous monitoring is essential to maintain ethical integrity throughout the AI lifecycle. The Ethical AI Framework advocates for ongoing evaluation beyond initial deployment, ensuring systems adapt to evolving technologies and societal contexts. This involves implementing regular audits, conducting ethical impact assessments, and establishing stakeholder feedback loops to identify emerging risks and unintended consequences. Monitoring should include performance reviews, bias detection, and compliance checks aligned with regulatory standards. By embedding these processes into governance structures, organizations can proactively address ethical challenges, maintain transparency, and reinforce accountability. Continuous oversight ensures AI systems remain trustworthy, fair, and aligned with organizational and societal values.
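One concrete input to continuous monitoring is a drift check on model inputs or scores. The sketch below computes the population stability index (PSI) between a baseline distribution and current production data, then maps it to an action using cut-offs of 0.1 and 0.25, which are common rules of thumb in credit risk practice rather than formal standards. The score bands and counts are hypothetical; in practice the same monitoring cycle would also recompute fairness metrics per group.

```python
import math

def population_stability_index(expected_counts, actual_counts, eps=1e-6):
    """PSI = sum over bins of (a - e) * ln(a / e), using bin proportions."""
    e_total, a_total = sum(expected_counts), sum(actual_counts)
    psi = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_pct = max(e / e_total, eps)  # floor avoids log(0) for empty bins
        a_pct = max(a / a_total, eps)
        psi += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return psi

def drift_status(psi, warn=0.1, alert=0.25):
    """Map PSI to an action using common rule-of-thumb cut-offs."""
    if psi < warn:
        return "stable"
    if psi < alert:
        return "monitor"
    return "escalate for review"

# Hypothetical score distributions (counts per score band).
baseline = [100, 200, 400, 200, 100]   # at validation time
this_month = [50, 100, 300, 300, 250]  # in production
value = population_stability_index(baseline, this_month)
print(f"PSI = {value:.3f} -> {drift_status(value)}")
```

A high PSI does not by itself mean the model is unfair or wrong, but it signals that the population has shifted enough to warrant the audits and reviews described here.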

Case Example: IBM’s AI Ethics Board conducts periodic reviews of deployed systems to maintain compliance and adapt to emerging risks.

Organizations adopting the Ethical AI Framework pursue two critical goals at once: driving technological innovation and embedding fairness, transparency, and societal responsibility. This proactive approach not only enhances stakeholder trust but also mitigates reputational and regulatory risks, helping AI systems deliver sustainable value aligned with ethical principles and long-term corporate objectives.