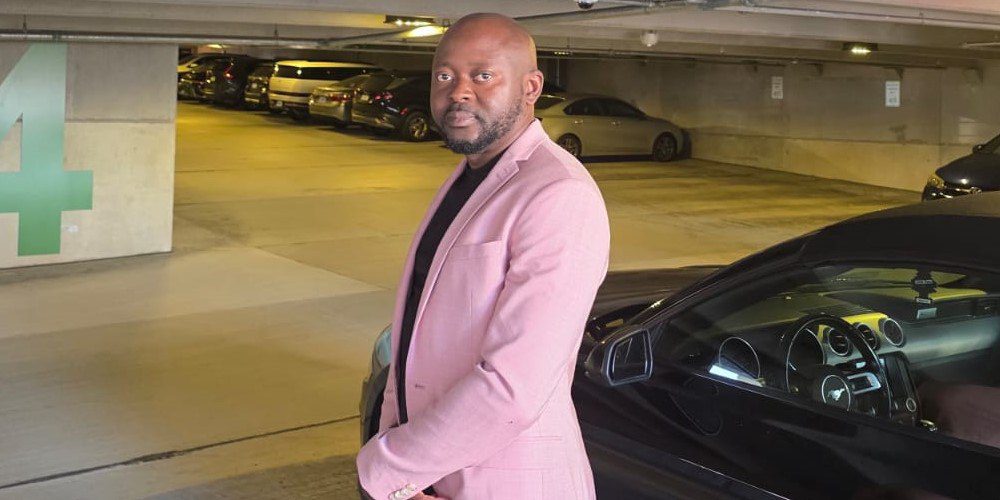

Olusesan Ogundulu, an expert in data modernization and cloud architecture, provides a guide to turning bad data into business value

The world of data is often presented as a goldmine, a source of endless insights and opportunities. But what if a significant portion of that treasure is, in fact, fool’s gold? Reports indicate that poor data quality is costing the U.S. economy an estimated $3.1 trillion annually.

This staggering figure highlights a fundamental problem for businesses: the failure to effectively manage and transform raw information into a reliable asset. In an era where data-driven decisions are paramount, this represents a critical threat to efficiency, innovation, and profitability.

To navigate this challenge and understand how companies can turn this colossal loss into a competitive advantage, we turn to Olusesan Ogundulu, a seasoned Senior Data Engineer with over 10 years of experience who specializes in designing and implementing complex data solutions in the Azure cloud. His expertise is rooted in a pragmatic approach to solving real-world business problems and a commitment to modernizing data infrastructure. Olusesan’s experience has earned him the trust of both technical and non-technical stakeholders. He is often brought into high-impact projects at the architecture and strategy phase to design scalable, future-proof solutions. He has also mentored junior engineers, defined coding and design standards for data teams, and shared his approaches through internal workshops and knowledge-sharing sessions.

Olusesan, the number is staggering. From your perspective, what does that statistic truly represent for a business on a day-to-day level, and what is your immediate impression of the scale of this challenge?

I think, first of all, that statistic represents the time wasted by analysts cleaning bad data instead of gaining insights, the flawed decisions made by leadership because they’re working with an incomplete or inaccurate picture, and the missed opportunities for innovation because the foundation of the data is unstable.

Ultimately, this number is a symptom of a deeper, more systemic issue: many companies have focused on simply collecting vast amounts of data without investing in the underlying infrastructure to ensure its quality, governance, and reliability. The role of a data engineer is to be the architect of that reliable infrastructure, turning that “cost of bad data” into a clear return on investment.

As a specialist who has helped companies move from old data systems to modern cloud platforms, what are some of the biggest challenges in that kind of migration, and what’s the key to making it a success?

Navigating legacy systems often presents the most significant challenges, particularly with systems like IBM Cognos Report Studio and DB2. We often had to deal with limited documentation or inconsistent data. In some cases, it was necessary to reverse-engineer the logic and decipher business rules embedded in old workflows before any modernization could occur.

Another major obstacle was change management—ensuring that stakeholders trusted the new cloud solutions over the legacy systems they had used for years. To overcome this, we focused on careful planning, clear communication, and proving the value of the new system through early, tangible wins.

A crucial factor for success was adaptability. The ability to quickly learn and master new platforms, like Azure and Snowflake, has been essential in keeping pace with the rapidly changing field of data engineering. It’s about bridging the gap between traditional and modern technology while focusing on the business context behind the technical requirements.

As a Senior Data Engineer at Alvarez & Marsal, you worked on optimizing data storage and processing. This work resulted in a significant reduction in storage costs. What is your go-to strategy for doing that?

I stick to a three-pronged approach: optimizing data pipelines, right-sizing storage, and implementing robust data governance. First, by modernizing legacy ETL processes and moving them to a serverless cloud architecture, we can significantly reduce processing time and computational costs. Second, we design data storage solutions with a focus on efficiency, often using tiered storage like Azure Data Lake, which allows for a significant reduction in storage costs.

Finally, we implement a data governance framework to actively reduce data quality issues. Poor data quality leads to wasted storage and processing, so by addressing it at the source, we can make the entire system more efficient. Ultimately, the key is to not just process data faster, but to process and store only the data that is clean, relevant, and well-governed.

During your time at MUFG Fund Services, a global leader in solutions for the alternative investment management industry, you also led a project to migrate reports from older platforms to a modern system. Can you describe the primary challenges you encountered and how that experience shaped your core philosophy on data modernization?

That project was a perfect example of a common data modernization challenge: moving away from legacy systems that, while functional, were no longer efficient or scalable. The main hurdles were a combination of technical and organizational issues.

Technically, we had to contend with the complexity of the legacy DB2 database and the intricacies of the existing report logic in IBM Cognos Report Studio. The challenge was to meticulously reverse-engineer and validate the logic to ensure that every single report was perfectly replicated in the new SSRS environment.

Organizationally, change management was a significant factor. We had to convince our stakeholders to trust the new system, which was critical for user adoption. This meant focusing on clear communication and demonstrating the value of the migration through tangible improvements, such as faster report generation and more flexible access to data.

This experience solidified my core philosophy: a successful data project isn’t just about the technology. It’s about combining technical expertise with strategic planning and effective communication to build trust. Ultimately, my goal is to design solutions that are not only robust and efficient but also transparent and trusted by the people who rely on them every day.
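To make the report validation step in this answer more concrete, here is a minimal Python sketch of a parity check between a legacy report extract and its migrated counterpart. It is an illustration only, not the method used on the project: the function name `compare_reports`, the `fund_id` key, and the sample rows are all hypothetical, and a real check would read from the actual DB2 and SSRS outputs.

```python
from typing import Any


def compare_reports(
    legacy: list[dict[str, Any]],
    migrated: list[dict[str, Any]],
    key: str,
) -> dict[str, Any]:
    """Reconcile two report extracts row by row, matching rows on `key`."""
    legacy_by_key = {row[key]: row for row in legacy}
    migrated_by_key = {row[key]: row for row in migrated}

    # Rows present on one side only.
    missing = sorted(legacy_by_key.keys() - migrated_by_key.keys())
    unexpected = sorted(migrated_by_key.keys() - legacy_by_key.keys())

    # Rows present on both sides whose column values differ.
    mismatched: dict[Any, dict[str, tuple[Any, Any]]] = {}
    for k in legacy_by_key.keys() & migrated_by_key.keys():
        old, new = legacy_by_key[k], migrated_by_key[k]
        diffs = {
            col: (old.get(col), new.get(col))
            for col in old.keys() | new.keys()
            if old.get(col) != new.get(col)
        }
        if diffs:
            mismatched[k] = diffs

    return {"missing": missing, "unexpected": unexpected, "mismatched": mismatched}


# Hypothetical sample extracts (illustrative values only).
LEGACY_ROWS = [
    {"fund_id": "F1", "nav": 100, "status": "open"},
    {"fund_id": "F2", "nav": 200, "status": "open"},
    {"fund_id": "F3", "nav": 300, "status": "closed"},
]
MIGRATED_ROWS = [
    {"fund_id": "F1", "nav": 100, "status": "open"},
    {"fund_id": "F2", "nav": 205, "status": "open"},
    {"fund_id": "F4", "nav": 400, "status": "open"},
]

RESULT = compare_reports(LEGACY_ROWS, MIGRATED_ROWS, key="fund_id")
```

A report would count as replicated only when all three parts of the result are empty. Surfacing the specific differing values, rather than a pass/fail flag, also gives stakeholders evidence they can inspect, which supports the trust-building described above.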

At TD Bank, a global leader in Canadian banking, you were a Senior Data Analyst and a critical player in migrating SSIS packages to Azure Data Factory and creating automated workflows. This work was crucial for one of the largest banks in Canada. How did you approach this project, and what kind of impact did these specific developments have on the company’s data operations and business users?

The key to success was close collaboration with both business users and stakeholders to elicit requirements and provide reporting solutions. I created pipelines and automated workflows using triggers in Azure Data Factory, which was a significant step in modernizing our data processes. I also migrated SQL databases to Azure Data Lake and developed Tableau reports and dashboards with drill-down and drill-through functionality to meet business needs. This work helped streamline data operations, improve efficiency, and enable faster, data-informed decisions.

You have a clear vision for the future of data engineering, particularly with the incorporation of AI and automation. You’ve recently worked on automating data quality checks using a rules engine with metadata-driven validation logic. Can you elaborate on this project and explain how you see AI and automation reshaping the role of data engineers?

My current focus is on incorporating AI and automation into data workflows to reduce manual processes and improve data quality. The project involving the metadata-driven validation logic is foundational to this vision. It lays the groundwork for future AI-based anomaly detection, which will allow for more proactive and intelligent data quality monitoring. I see the future of data engineering not just as moving data efficiently, but as making it intelligent. The role will evolve to integrate machine learning and AI more seamlessly into data pipelines, allowing for advanced use cases like predictive analytics and anomaly detection.
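For readers unfamiliar with the idea, a metadata-driven rules engine can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the implementation described in the interview: the check names (`not_null`, `in_range`, `allowed_values`), the `Rule` class, and the sample rows are all hypothetical. The point is that the rules are data, so a new check can be added by editing metadata instead of pipeline code.

```python
from dataclasses import dataclass
from typing import Any, Callable

# Hypothetical check types: each takes a value and the rule's parameters
# and returns True when the value passes.
CHECKS: dict[str, Callable[[Any, dict], bool]] = {
    "not_null": lambda value, params: value is not None,
    "in_range": lambda value, params: (
        value is not None and params["min"] <= value <= params["max"]
    ),
    "allowed_values": lambda value, params: value in params["values"],
}


@dataclass
class Rule:
    column: str
    check: str
    params: dict


def validate(rows: list[dict], rules: list[Rule]) -> list[dict]:
    """Apply every rule to every row; return one record per failed check."""
    failures = []
    for index, row in enumerate(rows):
        for rule in rules:
            value = row.get(rule.column)
            if not CHECKS[rule.check](value, rule.params):
                failures.append(
                    {"row": index, "column": rule.column,
                     "check": rule.check, "value": value}
                )
    return failures


# Rules expressed as metadata. Here it is an in-memory list; in practice it
# might live in a configuration table or file (an assumption).
RULE_METADATA = [
    {"column": "account_id", "check": "not_null", "params": {}},
    {"column": "balance", "check": "in_range", "params": {"min": 0, "max": 1_000_000}},
    {"column": "currency", "check": "allowed_values", "params": {"values": ["USD", "CAD"]}},
]
RULES = [Rule(**entry) for entry in RULE_METADATA]

# Hypothetical sample data.
SAMPLE_ROWS = [
    {"account_id": "A1", "balance": 250.0, "currency": "USD"},
    {"account_id": None, "balance": 90.0, "currency": "CAD"},
    {"account_id": "A3", "balance": -5.0, "currency": "EUR"},
]

FAILURES = validate(SAMPLE_ROWS, RULES)
for failure in FAILURES:
    print(failure)
```

The failure records produced by a design like this are also a natural input for the anomaly detection mentioned above, since they give a structured history of which columns fail which checks.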

Beyond the technical skills, you’ve also been a mentor and a teacher, setting new standards for the teams you’ve worked with. How do you approach knowledge sharing and professional growth, and what advice would you give to those who want to do more than just write code?

I believe that knowledge sharing is a critical part of professional growth and making a lasting impact. While I haven’t published a formal paper or book, I actively share my approaches internally through detailed documentation, technical walkthroughs, and knowledge-sharing sessions. My advice to aspiring data engineers is to cultivate a strong foundation in both traditional and modern tools. You must be adaptable and committed to continuous learning as the field is rapidly changing. Focus on understanding the business context behind the technical requirements, and don’t underestimate the power of collaboration with cross-functional teams. Finally, find opportunities to share what you’ve learned, whether through internal training, blogs, or community forums, to contribute to the broader data engineering community.