Every second, millions of trading messages move through global markets. High-frequency strategies generate thousands of orders in microseconds. Digital asset activity increased from 586 million transactions in 2020 to more than 2 billion in 2021, with a reported value of approximately $1.4 trillion that year (Office of Financial Research, 2023).

Each trade sets off a chain of actions. Confirmation, clearing, settlement, regulatory reporting, and surveillance must all run without delay. Multiplied across today’s volumes, the result is a torrent that legacy systems were not designed to handle.

Front-office platforms now operate in real time. Post-trade often does not. Many firms still rely on monolithic, batch-based systems designed for an earlier era. The question is no longer whether to modernize but how: specifically, how to design post-trade systems that can handle a million messages per second.

In the sections that follow, I explain why post-trade matters, where legacy systems fall short, and what a modern architecture should look like.

The Hidden Engine of Finance

The spotlight usually falls on the trading floor, but market stability depends on what happens after the trade.

Post-trade is the process that ensures a transaction is completed correctly. Clearing aligns obligations between buyer and seller. Settlement guarantees the transfer of cash and securities. Custody provides safekeeping of assets. Reporting gives authorities transparency. Surveillance monitors for manipulation and fraud.
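
To make the clearing step concrete, here is a minimal sketch of multilateral netting, one common clearing function: many gross trades collapse into a single net delivery and payment per participant. It is a simplified illustration under stated assumptions. The `Trade` type and `net_obligations` function are hypothetical, cash is held in integer cents, and fees, fails, and margin are ignored.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Trade:
    """Hypothetical gross trade record; cash is kept in integer cents to avoid float error."""
    buyer: str
    seller: str
    security: str
    quantity: int
    price_cents: int


def net_obligations(trades):
    """Collapse gross trades into one net securities and cash obligation per participant.

    Positive securities = units to receive, negative = units to deliver.
    Positive cash = cents to receive, negative = cents to pay.
    """
    securities = defaultdict(int)  # (participant, security) -> net units
    cash = defaultdict(int)        # participant -> net cents
    for t in trades:
        securities[(t.buyer, t.security)] += t.quantity
        securities[(t.seller, t.security)] -= t.quantity
        amount = t.quantity * t.price_cents
        cash[t.buyer] -= amount
        cash[t.seller] += amount
    # Positions that net to zero require no movement at settlement.
    net_sec = {k: v for k, v in securities.items() if v != 0}
    net_cash = {k: v for k, v in cash.items() if v != 0}
    return net_sec, net_cash


trades = [
    Trade("A", "B", "XYZ", 100, 5_000),  # A buys 100 from B at $50.00
    Trade("B", "C", "XYZ", 100, 5_000),  # B buys 100 from C at $50.00
    Trade("C", "A", "XYZ", 40, 5_100),   # C buys 40 from A at $51.00
]
net_sec, net_cash = net_obligations(trades)
print(net_sec)   # {('A', 'XYZ'): 60, ('C', 'XYZ'): -60}
print(net_cash)  # {'A': -296000, 'C': 296000}
```

Three gross trades reduce to two movements: A receives 60 units and pays $2,960, C delivers 60 units and receives $2,960, and B, whose purchase and sale offset, has nothing to settle.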

When this machinery falters, costs escalate quickly. A global settlement fail rate of roughly 2 percent equates to approximately $3 billion in annual losses (Depository Trust & Clearing Corporation, 2020). A single failed trade often consumes far more time and expense than a successful settlement.

The timeline is tighter than it used to be. The United States moved to a T+1 standard settlement cycle on May 28, 2024. Canada and Mexico aligned one day earlier, and other jurisdictions are assessing similar moves. The goal is faster settlement and lower counterparty risk.

With less buffer time, firms need continuous visibility across the post-trade chain.
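
Because T+1 counts business days rather than calendar days, the real window depends on the calendar. The sketch below computes a settlement date under that rule. It is a simplified illustration: the function name is hypothetical, and the holiday set is an assumed input, since real holiday calendars differ by market and are maintained as reference data.

```python
from datetime import date, timedelta


def settlement_date(trade_date, cycle_days=1, holidays=frozenset()):
    """Return trade_date plus `cycle_days` business days (T+1 by default).

    Weekends are skipped, and so is any date in `holidays`, which stands in
    for a market holiday calendar that a real system would load per market.
    """
    current = trade_date
    remaining = cycle_days
    while remaining > 0:
        current += timedelta(days=1)
        if current.weekday() < 5 and current not in holidays:  # Mon=0 .. Fri=4
            remaining -= 1
    return current


# A Friday trade settles the following Monday under T+1.
print(settlement_date(date(2024, 5, 31)))  # 2024-06-03
# A holiday pushes settlement out further: Thursday trade, Friday holiday.
print(settlement_date(date(2025, 7, 3), holidays=frozenset({date(2025, 7, 4)})))  # 2025-07-07
```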

Why Legacy Systems Struggle

The weakness lies in the architecture. For decades, post-trade platforms have been built as large, tightly coupled applications. Scaling is inefficient because the whole application must be scaled even when only one function is under load. Upgrades are slow and risky. A failure in one component can disrupt the whole system.

Batch processing adds another constraint. Instead of continuous oversight, firms are left with blind spots between scheduled runs. That approach does not match real-time trading volumes or compressed settlement deadlines.
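
The size of that blind spot follows directly from the schedule. Assuming a job that runs every I seconds, an event is not visible until the next run, so its detection delay ranges from zero to just under I and, for events arriving uniformly, averages I/2. In a streaming design the delay is instead the pipeline's own processing latency. A minimal sketch, with a hypothetical function name and an assumed hourly schedule:

```python
import math


def batch_detection_delay(event_time_s, interval_s):
    """Seconds until the next scheduled run (at 0, I, 2I, ...) can see an event."""
    next_run = math.ceil(event_time_s / interval_s) * interval_s
    return next_run - event_time_s


HOURLY = 3600.0
# A settlement break that appears one minute after a run waits almost an hour.
print(batch_detection_delay(60.0, HOURLY))    # 3540.0
# One that appears just before a run is caught almost immediately.
print(batch_detection_delay(3599.5, HOURLY))  # 0.5
```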

These constraints make it hard to achieve the required scale, resilience, and transparency.

A New Architectural Approach

To handle today’s data intensity, post-trade systems need a new foundation built on three principles.

1) Event-driven systems. Every trade is captured as a discrete event and stored durably. Downstream services consume events asynchronously, each at its own pace, so the system keeps operating even if one service fails; once the failed service recovers, it resumes from the last event it processed.

2) Microservices. Functions are broken into independent services. Each performs one role, such as generating settlement instructions or preparing regulatory reports. Services run in parallel, scale individually, and contain failures.

3) Cloud-native infrastructure. Elastic capacity expands when volumes spike and contracts when activity slows. This improves efficiency and provides headroom during stress.
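
The first two principles can be illustrated with a toy model. The sketch below assumes an in-memory list standing in for a durable broker topic; the class and field names are hypothetical, and a production system would persist both the log and each consumer's offset. Two independent services read the same events, and because each tracks its own position, one can fall behind or fail without affecting the other.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class EventLog:
    """In-memory stand-in for a durable, append-only broker topic."""
    events: list = field(default_factory=list)

    def append(self, event: dict) -> int:
        self.events.append(event)
        return len(self.events) - 1  # offset assigned to the new event


@dataclass
class Consumer:
    """One independent service that reads the log at its own pace."""
    name: str
    handler: Callable[[dict], Any]
    offset: int = 0  # next offset to read; a real system would persist this
    outputs: list = field(default_factory=list)

    def poll(self, log: EventLog, max_events: int = 100) -> int:
        batch = log.events[self.offset:self.offset + max_events]
        for event in batch:
            self.outputs.append(self.handler(event))
            self.offset += 1  # advance only after the handler succeeds
        return len(batch)


log = EventLog()
settlement = Consumer("settlement", lambda e: f"SI-{e['trade_id']}")
reporting = Consumer("reporting", lambda e: f"RPT-{e['trade_id']}")

for trade_id in range(3):
    log.append({"trade_id": trade_id})
settlement.poll(log)  # settlement keeps up in real time
# ...reporting is down while two more trades arrive...
for trade_id in range(3, 5):
    log.append({"trade_id": trade_id})
settlement.poll(log)
reporting.poll(log)   # on recovery, reporting catches up from offset 0
print(settlement.offset, reporting.offset)  # 5 5
print(reporting.outputs[-1])                # RPT-4
```

If a handler raises, the consumer's offset stays on the failed event, so the event is retried on the next poll rather than skipped.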

Together, these principles offer a framework that can absorb surges without sacrificing accuracy.
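
The elastic capacity described in principle 3 usually rests on a scaling rule. The sketch below shows one plausible rule, assuming each consumer replica processes a known, steady number of messages per second: run enough replicas to keep up with new arrivals and also drain any backlog within a target time, bounded by a floor and a ceiling. The function name, parameters, and limits are hypothetical; production autoscalers apply their own configurable policies.

```python
import math


def desired_replicas(arrival_rate, backlog, per_replica_rate, drain_target_s,
                     min_replicas=2, max_replicas=64):
    """Replicas needed to absorb new arrivals and clear the backlog within the target time.

    arrival_rate and per_replica_rate are messages per second; backlog is a message count.
    """
    required_rate = arrival_rate + backlog / drain_target_s
    needed = math.ceil(required_rate / per_replica_rate)
    # Keep a floor for redundancy and a ceiling for cost control.
    return max(min_replicas, min(max_replicas, needed))


print(desired_replicas(20_000, 0, 50_000, 30))             # quiet period -> 2
print(desired_replicas(1_000_000, 3_000_000, 50_000, 30))  # spike with backlog -> 22
print(desired_replicas(5_000_000, 0, 50_000, 30))          # beyond the ceiling -> 64
```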

| Architecture | Description |
| --- | --- |
| Monolithic | A single application where all parts are tied together. |
| Microservices | Independent services, each focused on one role, connected yet resilient. |

Building the Backbone

Design principles need the right technology.

- Message brokers. Reliable event transport is essential. Apache Kafka remains widely used. Apache Pulsar offers a two-layer design that separates compute from storage through stateless brokers and Apache BookKeeper, which can simplify scaling and help avoid wholesale rebalancing during growth.

- In-memory computing. Processing in RAM avoids disk I/O bottlenecks. Disk access latencies are orders of magnitude higher than main-memory latencies, which makes in-memory engines well suited to real-time risk checks and fraud detection, although in-memory designs are not automatically faster for every workload.

- Observability. Distributed platforms require integrated logs, metrics, and traces to detect issues before they escalate. This is a core practice in site reliability engineering and is table stakes for complex financial systems.
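
One broker capability matters in particular for post-trade: Kafka and Pulsar can route messages by key, so all events for one key (here, an account) land in the same partition and are consumed in order, while different keys spread across partitions for parallelism. The sketch below illustrates the idea only. It uses SHA-256 rather than the hash function either broker actually applies, and the function names and event fields are hypothetical.

```python
import hashlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Deterministically map a key to a partition.

    Python's built-in hash() is salted per process, so a stable digest is used instead.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def route(events, num_partitions):
    """Append each event to its key's partition, preserving arrival order within a key."""
    partitions = defaultdict(list)
    for event in events:
        partitions[partition_for(event["account"], num_partitions)].append(event)
    return partitions


events = [{"account": f"ACC-{i % 3}", "seq": i} for i in range(9)]
partitions = route(events, num_partitions=8)
for p, items in sorted(partitions.items()):
    print(p, [(e["account"], e["seq"]) for e in items])
```

Ordering is guaranteed per key, not across keys, which is usually what settlement and position keeping need.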

These choices turn architectural intent into working infrastructure.

Project Velocity: A Modern Example

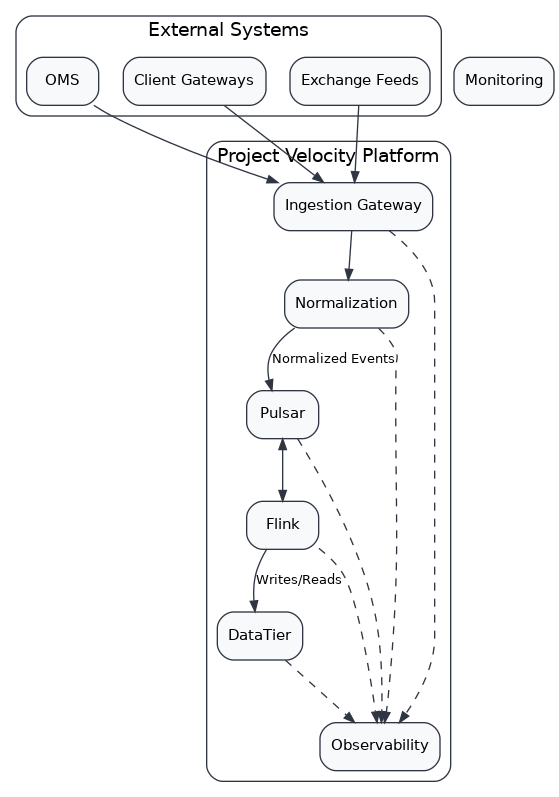

A modern platform, call it Project Velocity, illustrates how this approach works.

Trades arrive from exchanges, client gateways, and order systems. A normalization layer translates all inputs into a consistent event model. This ensures downstream services operate on clean, standardized data.
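
A normalization layer can be as simple as one adapter per source that emits the same event type. The sketch below assumes two hypothetical input formats, an exchange feed using FIX-style field names and a client gateway sending lower-case JSON fields; the `TradeEvent` schema and adapter names are illustrative, not a prescribed model.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class TradeEvent:
    """Hypothetical canonical trade event shared by all downstream services."""
    trade_id: str
    source: str
    instrument: str
    side: str  # "BUY" or "SELL"
    quantity: int
    price: Decimal


def from_exchange(msg: dict) -> TradeEvent:
    # Assumed exchange feed using FIX-style field names; Side "1" = buy, "2" = sell.
    return TradeEvent(
        trade_id=msg["ExecID"],
        source="exchange",
        instrument=msg["Symbol"],
        side={"1": "BUY", "2": "SELL"}[msg["Side"]],
        quantity=int(msg["LastQty"]),
        price=Decimal(msg["LastPx"]),
    )


def from_gateway(msg: dict) -> TradeEvent:
    # Assumed client gateway sending lower-case JSON fields with the side spelled out.
    return TradeEvent(
        trade_id=msg["id"],
        source="gateway",
        instrument=msg["ticker"].upper(),
        side=msg["side"].upper(),
        quantity=int(msg["qty"]),
        price=Decimal(str(msg["price"])),  # via str() so 50.25 stays exactly 50.25
    )


NORMALIZERS = {"exchange": from_exchange, "gateway": from_gateway}


def normalize(source: str, msg: dict) -> TradeEvent:
    return NORMALIZERS[source](msg)


a = normalize("exchange", {"ExecID": "E1", "Symbol": "XYZ", "Side": "1",
                           "LastQty": "100", "LastPx": "50.25"})
b = normalize("gateway", {"id": "G7", "ticker": "xyz", "side": "sell",
                          "qty": 100, "price": 50.25})
print(a.instrument == b.instrument, a.price == b.price)  # True True
```

Using `Decimal` for prices avoids binary floating-point rounding, which matters once events are compared or summed downstream.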

Events then enter Pulsar, the messaging backbone. Stream processors such as Apache Flink apply business rules, including validation, enrichment, and matching. Outputs are routed to purpose-fit data stores.

- In-memory grids provide rapid enrichment and reference lookups.

- Time-series databases create immutable audit trails.

- Relational databases maintain definitive records.

- Cloud object storage archives long-term data at scale.
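
The per-event logic inside the stream processing stage can be sketched independently of any framework. Flink jobs are written against Flink's own APIs; the plain Python below only mimics the validate, enrich, and match steps described above. The reference data, field names, and the assumption that every trade has exactly two sides ("internal" booking and "counterparty" confirmation) sharing a `match_ref` are all hypothetical.

```python
from decimal import Decimal

# Hypothetical reference data; in production this would live in an in-memory grid.
REFERENCE = {"XYZ": {"currency": "USD", "asset_class": "EQUITY"}}


def validate(event):
    """Return a list of rule violations; an empty list means the event is valid."""
    errors = []
    if event["quantity"] <= 0:
        errors.append("non-positive quantity")
    if event["price"] <= 0:
        errors.append("non-positive price")
    if event["instrument"] not in REFERENCE:
        errors.append("unknown instrument")
    return errors


def enrich(event):
    """Attach reference attributes with a single in-memory lookup."""
    return {**event, **REFERENCE[event["instrument"]]}


class Matcher:
    """Pairs the internal booking with the counterparty confirmation by match_ref."""

    def __init__(self):
        self.waiting = {}  # match_ref -> {origin: event}

    def offer(self, event):
        sides = self.waiting.setdefault(event["match_ref"], {})
        sides[event["origin"]] = event  # a resend from the same side replaces the earlier copy
        if len(sides) < 2:
            return None  # still waiting for the other side
        del self.waiting[event["match_ref"]]
        first, second = sides.values()
        agree = (first["quantity"], first["price"]) == (second["quantity"], second["price"])
        return ("MATCHED" if agree else "BREAK", event["match_ref"])


def process(event, matcher):
    errors = validate(event)
    if errors:
        return ("REJECTED", errors)
    return matcher.offer(enrich(event))


matcher = Matcher()
base = {"match_ref": "M1", "instrument": "XYZ", "quantity": 100, "price": Decimal("50.25")}
print(process({**base, "origin": "internal"}, matcher))      # None
print(process({**base, "origin": "counterparty"}, matcher))  # ('MATCHED', 'M1')
```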

A centralized observability stack monitors each stage with unified logs, metrics, and traces. Processing from ingestion to a settlement-ready record can be engineered to complete in under a second for most flows, and critical paths can be designed to stay within that range even under load, although actual results depend on infrastructure and workload. Settlement itself still follows the T+1 cycle, and that compressed window makes this continuous view a necessity rather than a convenience.

Visual 1. Project Velocity architecture diagram

This model reduces manual intervention and scales reliably during volume spikes.
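
A target such as "sub-second for most flows" becomes testable once it is written as a service level objective. The sketch below assumes a hypothetical objective of 99 percent of flows completing within 1,000 ms and uses the nearest-rank percentile method; the function names, thresholds, and sample latencies are illustrative assumptions.

```python
import math


def percentile(values, p):
    """Nearest-rank percentile for p in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def slo_report(latencies_ms, target_ms=1000.0, objective=0.99):
    """Compare end-to-end latencies against a 'sub-second for most flows' objective."""
    within = sum(1 for x in latencies_ms if x <= target_ms)
    attainment = within / len(latencies_ms)
    return {
        "p50_ms": percentile(latencies_ms, 50),
        "p99_ms": percentile(latencies_ms, 99),
        "attainment": attainment,
        "meets_slo": attainment >= objective,
    }


latencies = [100.0] * 97 + [900.0, 1500.0, 2000.0]
print(slo_report(latencies))
# {'p50_ms': 100.0, 'p99_ms': 1500.0, 'attainment': 0.98, 'meets_slo': False}
```

A healthy median can hide a tail that breaks the objective, which is why tracking tail percentiles alongside attainment is worthwhile.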

Implementation Notes From Practice

These notes reflect lessons commonly observed in large-scale capital markets programs, where small inefficiencies can grow into major operational and regulatory risks. A few themes consistently prove decisive in building systems that scale under pressure.

1) Start with the canonical event model. The fastest way to slow down a program is to let formats diverge at the edges. A stable schema for core events simplifies routing, replay, and audit.

2) Engineer for backpressure. Event backlogs are normal under stress. Design consumers to throttle gracefully and recover without dropping events.

3) Right-size the data tier. One database rarely fits all tasks. Use in-memory for microsecond lookups, time-series for audit, relational systems for core records, and object storage for cost-efficient history.

4) Instrument first, optimize next. You cannot improve what you cannot see. Establish golden signals, set service level objectives, and trace the full path from ingestion to settlement before tuning.
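
Backpressure (item 2) can be demonstrated in-process with a bounded buffer: when the buffer is full, the producer waits instead of dropping events, so a slow consumer throttles its upstream. The sketch below uses Python's standard `queue.Queue`; the function name, capacity, and simulated processing time are hypothetical, and brokers provide their own flow-control mechanisms across process boundaries.

```python
import queue
import threading
import time


def run_pipeline(num_events=200, capacity=10, work_s=0.0005):
    """Move events through a bounded buffer; a full buffer throttles the producer."""
    buffer = queue.Queue(maxsize=capacity)
    received = []
    stop = object()  # sentinel marking the end of the stream

    def consumer():
        while True:
            item = buffer.get()
            if item is stop:
                break
            time.sleep(work_s)  # simulate a slower downstream service
            received.append(item)

    worker = threading.Thread(target=consumer)
    worker.start()
    throttled = 0
    for seq in range(num_events):
        try:
            buffer.put_nowait(seq)
        except queue.Full:
            throttled += 1
            buffer.put(seq)  # block until space frees up instead of dropping the event
    buffer.put(stop)
    worker.join()
    return received, throttled


received, throttled = run_pipeline()
print(len(received), received == list(range(200)))  # 200 True
print(f"producer throttled {throttled} times")
```

Every event arrives, in order, despite the consumer being slower than the producer; the `throttled` count shows how often the producer had to wait.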

The Future: Intelligent and Shared

Scale and speed are the baseline. The next step is intelligence and shared infrastructure.

1) Artificial intelligence and machine learning. Clean, real-time event streams power models that flag anomalies early. These models can identify fraud patterns, highlight stress in funding and liquidity, and predict settlement fails that require attention before cutoff times.

2) Distributed ledger technology. Shared, append-only records reduce reconciliation overhead and support near-instant settlement in specific use cases. Several markets are studying this as a way to reduce operational drag and counterparty exposure.

3) Convergence. AI depends on reliable data. A shared ledger strengthens data quality. Together, these tools can shift post-trade from reactive processing to proactive control.
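
Item 1 describes models that flag anomalies early. As a deliberately simple stand-in, the sketch below applies a rolling z-score to a daily series such as settlement fails. It is a statistical baseline, not machine learning, and a trained model would be expected to improve on it; the window, threshold, and data are hypothetical.

```python
from statistics import mean, stdev


def flag_anomalies(series, window=20, threshold=3.0):
    """Indices whose value lies more than `threshold` sample standard deviations
    from the mean of the preceding `window` values."""
    flagged = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(series[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical daily settlement-fail counts: a stable baseline, then a spike.
daily_fails = [10, 12] * 15 + [40, 11]
print(flag_anomalies(daily_fails))  # [30]
```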

Conclusion

Trading volumes have outgrown traditional post-trade infrastructure.

A practical path forward exists. Event-driven design, microservices, and cloud-native platforms, supported by the right backbone of brokers, in-memory processing, and observability, can deliver the required throughput while improving control.

The goal is not only to process a million messages per second. It is to build systems that respond to demand, anticipate risk, and support market confidence under stress. The jurisdictions that moved to T+1 made the direction clear. The technology to meet that standard is available today.

About the Author

Pratheep Ramanujam is a senior data engineer and data strategy leader with more than 16 years of experience in capital markets technology. He has led large-scale initiatives in regulatory reporting, cloud modernization, and analytics platforms that support stability and efficiency across global financial systems.

References

1) Office of Financial Research (2023). Data Analysis Shows High Growth, High Concentration in Digital Asset Market. U.S. Department of the Treasury. https://www.financialresearch.gov/the-ofr-blog/2023/05/30/data-analysis-shows-high-growth-high-concentration-in-digital-asset-market

2) Depository Trust & Clearing Corporation (2020). Impact of Trade Fails. DTCC. https://www.dtcc.com/itp-hub/dist/downloads/Impact_of_fails_Infographic_2020.pdf

3) Reuters (2024). US moves towards faster stock settlement: where are other countries? https://www.reuters.com/markets/us/us-moves-towards-faster-stock-settlement-where-are-other-countries-2024-03-21/

4) Apache Software Foundation (2024). Apache Pulsar Documentation: Architecture Overview. Version 4.0.x. https://pulsar.apache.org/docs/4.0.x/concepts-architecture-overview/

5) Karimi, K., Krishnamurthy, D., and Mirjafari, P. (2015). When In-Memory Computing Is Slower Than Heavy Disk Usage. arXiv preprint arXiv:1503.02678. https://arxiv.org/pdf/1503.02678v1

6) IBM (2023). The Three Pillars of Observability. IBM Think Insights. https://www.ibm.com/think/insights/observability-pillars

7) Google SRE (2016). Monitoring Distributed Systems. In: Beyer, B., Jones, C., Petoff, J. and Murphy, N.R. (eds.) Site Reliability Engineering: How Google Runs Production Systems. O’Reilly Media. https://sre.google/sre-book/monitoring-distributed-systems