Twenty years ago, the late physicist Stephen Hawking relied on a single cheek-muscle twitch to tell the world what he was thinking. A cursor scanned across an on-screen keyboard, and a tiny infrared sensor on his glasses detected the twitch that told it which letter to pick, so he built words one letter at a time. When he finally finished a sentence, a speech synthesizer read it aloud in his famous metallic voice. The process worked, but it produced only about one word per minute. It also stripped away much of what makes speaking feel human: tone, pauses, humor, even the chance to interrupt someone mid-conversation. That slow, flattened style of communication became the benchmark researchers set out to beat.

This review was prepared by Dmitry Baraishuk, a partner and Chief Innovation Officer at Belitsoft, a custom AI software development company.

The first big leap came when scientists decided to read signals straight from the brain instead of watching leftover muscle movement. In 2004, a team at Brown University implanted a small array of needle-like electrodes into the motor area of a paralyzed man’s brain. The electrodes picked up electrical pulses each time he imagined moving his hand. By letting a computer decode those pulses, the man could move a cursor purely by thought. It was a historic moment, but the hardware was bulky, connected to the computer through a port and cable that passed through the skin, and could handle fewer than a hundred signals at once. Information moved slowly, and the opening in the skin carried an infection risk. Even so, it proved that direct “brain-computer interfaces,” or BCIs, were possible.
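The article does not describe the decoder the Brown team used. As a rough illustration of how imagined hand movement can become cursor motion, the sketch below uses population-vector decoding, a classic textbook approach: each recorded neuron is assumed to have a preferred movement direction, and the cursor’s velocity is the sum of those directions weighted by how far each neuron’s firing rate rises above its resting level. The four-neuron population, baselines, gain, and 50 ms time step are hypothetical values chosen for readability.

```python
from typing import List, Tuple

Vec = Tuple[float, float]


def population_vector(rates: List[float],
                      baselines: List[float],
                      preferred_dirs: List[Vec],
                      gain: float = 1.0) -> Vec:
    """Decode a 2-D cursor velocity from firing rates (spikes/s).

    Each neuron "votes" for its preferred direction, weighted by how far
    its rate sits above (positive) or below (negative) its resting rate.
    """
    vx = vy = 0.0
    for rate, base, (dx, dy) in zip(rates, baselines, preferred_dirs):
        weight = rate - base
        vx += weight * dx
        vy += weight * dy
    return gain * vx, gain * vy


def step_cursor(pos: Vec, velocity: Vec, dt: float) -> Vec:
    """Move the cursor by the decoded velocity over one time bin of dt seconds."""
    return pos[0] + velocity[0] * dt, pos[1] + velocity[1] * dt


# Hypothetical population: neurons tuned to right, up, left, and down.
PREFERRED: List[Vec] = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.0, -1.0)]
BASELINE = [10.0, 10.0, 10.0, 10.0]  # illustrative resting rates, spikes/s

if __name__ == "__main__":
    pos: Vec = (0.0, 0.0)
    # Imagined rightward hand movement: the "right" neuron speeds up and
    # the "left" neuron slows down; the other two stay at rest.
    rates = [30.0, 10.0, 5.0, 10.0]
    for _ in range(10):  # ten 50 ms bins, i.e. half a second
        velocity = population_vector(rates, BASELINE, PREFERRED, gain=0.01)
        pos = step_cursor(pos, velocity, dt=0.05)
    print(pos)  # the cursor has drifted right, with no vertical motion
```

Practical systems typically fit the weights to calibration data and smooth the output over time, but the underlying idea of many neurons voting on a direction is the same.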

Over the next fifteen years, most BCIs recorded brain signals, turned them into text, and then sent that text to a speech program. Each step added delay. The computer had to wait until the user mentally finished a full word, or sometimes a whole sentence, before it could show or speak anything, and that wait broke the natural back-and-forth rhythm of conversation. Because the decoding software relied on a fixed vocabulary, the user could say only words stored in the system, usually about 1,300 of them. Unusual names, slang, and quick filler sounds like “uh-huh” or “hmm” all failed. Some research groups pushed word accuracy to around 97 percent, but speed and expressiveness did not improve. Users still sounded like slow, monotone robots, and most found the process too tiring for daily life.
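To see why a fixed vocabulary and end-of-word waiting tend to go together, consider a deliberately simplified word decoder. The sketch below is a toy nearest-template classifier, not any published system (real decoders used neural networks and language models). It averages neural features over the entire attempted word, so it cannot answer until the word is over, and it must return one of the stored words, so an unfamiliar name is silently forced onto the closest match. The three-word vocabulary and its three-number “templates” are invented for illustration.

```python
import math
from typing import Dict, List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def classify_word(window: List[List[float]],
                  templates: Dict[str, List[float]]) -> Tuple[str, float]:
    """Pick the stored word whose template best matches the whole window.

    `window` holds every feature frame recorded during one attempted word,
    so the decoder cannot produce anything until the word has ended.
    """
    n_frames, n_features = len(window), len(window[0])
    mean = [sum(frame[i] for frame in window) / n_frames for i in range(n_features)]
    best = max(templates, key=lambda word: cosine(mean, templates[word]))
    return best, cosine(mean, templates[best])


# Hypothetical three-word vocabulary with made-up neural templates.
VOCAB = {
    "yes": [1.0, 0.0, 0.0],
    "no": [0.0, 1.0, 0.0],
    "water": [0.0, 0.0, 1.0],
}

if __name__ == "__main__":
    # A pattern for a word that is not in the vocabulary (say, a friend's name)
    # still comes out as whichever stored word it most resembles.
    attempted_name = [[0.5, 0.0, 0.9], [0.7, 0.0, 0.7]]
    print(classify_word(attempted_name, VOCAB))
```

Here the out-of-vocabulary pattern is reported as “water,” with no signal that anything went wrong, which mirrors how unusual names and filler sounds failed in word-level systems.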

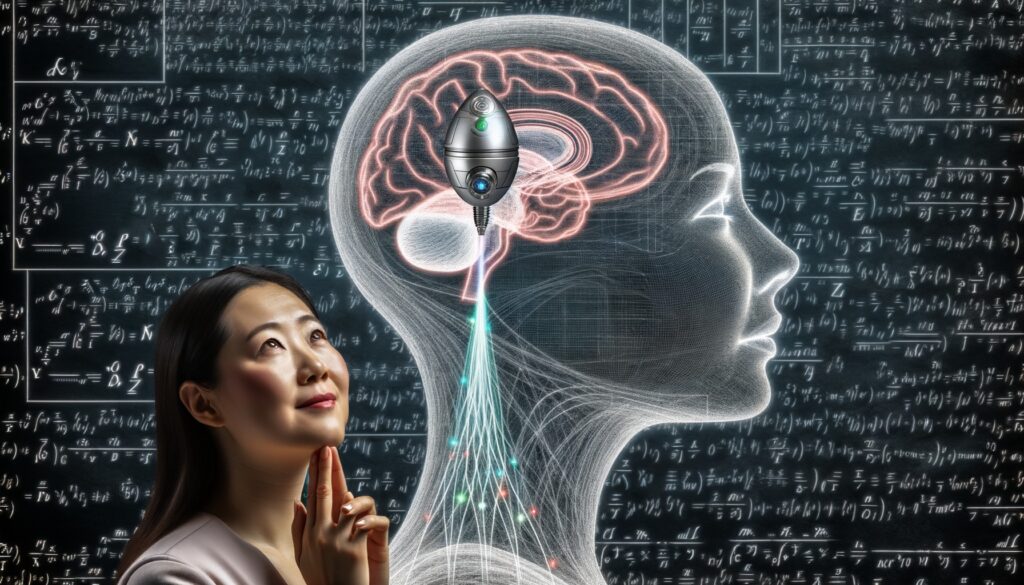

A group at the University of California, Davis, decided to bypass text altogether. They asked a simpler question: instead of guessing whole words, what if we decode the small muscle commands that shape every sound? In mid-2025, they published results from a first patient with advanced amyotrophic lateral sclerosis (ALS). The team implanted four tiny grids, each about the size of a baby aspirin, into the narrow strip of brain that controls lips, tongue, and voice box. Altogether those grids carried 256 microscopic electrodes, small enough to listen to single brain cells firing. Every few milliseconds, the implant sent a flood of electrical data to an artificial intelligence program that had been trained to link those patterns to basic sound features like pitch and volume. The AI produced a stream of numbers that a second program – called a vocoder – turned into audio. Total turnaround time from thought to sound: roughly ten milliseconds, shorter than the blink of an eye.
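The pipeline described above (neural features in, sound parameters out, audio synthesized immediately) can be sketched frame by frame. This is a minimal sketch under loud assumptions, not the UC Davis code: a hypothetical linear map stands in for the trained AI model, a single sine oscillator stands in for the vocoder, and the 10 ms frame length, 16 kHz sample rate, and weights are illustrative. What it shows is structural: each frame of electrode data becomes a matching slice of sound right away, with no word boundary to wait for.

```python
import math
from typing import Iterable, Iterator, List, Tuple

SAMPLE_RATE = 16_000  # audio samples per second (assumed)
FRAME_MS = 10         # one frame of neural features every 10 ms (assumed)
SAMPLES_PER_FRAME = SAMPLE_RATE * FRAME_MS // 1000  # 160 samples

# (pitch weights, pitch bias, loudness weights, loudness bias)
DecoderParams = Tuple[List[float], float, List[float], float]


def decode_frame(features: List[float], params: DecoderParams) -> Tuple[float, float]:
    """Hypothetical linear stand-in for the trained model: map one frame of
    per-electrode features to a pitch in Hz and a loudness between 0 and 1."""
    w_pitch, b_pitch, w_loud, b_loud = params
    pitch = b_pitch + sum(w * x for w, x in zip(w_pitch, features))
    loud = b_loud + sum(w * x for w, x in zip(w_loud, features))
    return max(pitch, 50.0), min(max(loud, 0.0), 1.0)  # clamp to a sane range


class ToyVocoder:
    """Turns (pitch, loudness) into audio samples. It carries the oscillator
    phase across frames so consecutive frames join without clicks."""

    def __init__(self) -> None:
        self.phase = 0.0

    def synthesize(self, pitch: float, loud: float) -> List[float]:
        step = 2 * math.pi * pitch / SAMPLE_RATE
        samples = []
        for _ in range(SAMPLES_PER_FRAME):
            samples.append(loud * math.sin(self.phase))
            self.phase = (self.phase + step) % (2 * math.pi)
        return samples


def stream(frames: Iterable[List[float]], params: DecoderParams,
           vocoder: ToyVocoder) -> Iterator[List[float]]:
    """Yield one chunk of audio per neural frame, as soon as that frame arrives."""
    for features in frames:
        pitch, loud = decode_frame(features, params)
        yield vocoder.synthesize(pitch, loud)


if __name__ == "__main__":
    n_electrodes = 256
    # Illustrative weights: electrode 0 raises pitch, electrode 1 raises loudness.
    w_pitch = [0.0] * n_electrodes
    w_pitch[0] = 2.0
    w_loud = [0.0] * n_electrodes
    w_loud[1] = 0.01
    params: DecoderParams = (w_pitch, 100.0, w_loud, 0.0)

    # Fake input: electrode 0 ramps up over 50 frames (a rising, question-like pitch).
    frames = [[float(t), 50.0] + [0.0] * (n_electrodes - 2) for t in range(50)]
    audio = [s for chunk in stream(frames, params, ToyVocoder()) for s in chunk]
    print(len(audio) / SAMPLE_RATE, "seconds of audio")
```

Because the output is a continuous pitch and loudness track rather than a word label, nothing in this structure limits the speaker to a stored dictionary.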

In practice, the system worked like this. A word or short phrase appeared on a screen. The patient tried to say it. Even though his mouth could no longer form clear sounds, his brain still sent the right control signals. The electrodes picked up those signals, the AI decoded them, and the vocoder spoke. Because the team had old home videos of the man talking before ALS stole his voice, they trained the vocoder to reproduce his natural tone. For him, hearing “his own” voice again carried huge emotional weight – friends and family recognized it instantly.

Accuracy must still improve before the system can hold its own in casual conversation around a kitchen table, yet the jump in clarity over his unaided speech is already life-changing for a person whose spoken words had become unintelligible.

The gains go beyond accuracy. Because the device spits out sound continuously, the patient can stretch a vowel for emphasis, ask a question with a rising pitch, or toss in a quick “mm-hmm” while someone else is talking. There is no fixed dictionary, so invented words, foreign phrases, and even simple melodies come through. In short, it moves communication closer to natural speech than any text-based BCI ever managed.

None of this is free of challenges. Implanting electrodes requires brain surgery, which carries infection and bleeding risks. Over time, the brain also forms a thin layer of scar tissue around foreign objects, a process called gliosis, which slowly muffles the signals. Engineers are experimenting with softer materials and drug-coated surfaces to delay scarring, but no one yet knows how long today’s implants will keep recording clearly: five years, ten, maybe longer. And because ALS gradually destroys the very neurons that generate these signals, an ALS patient’s usable window could be shorter than that of someone paralyzed by a spinal cord injury, whose brain cells remain healthy.

Scale is another factor. The Davis study used 256 electrodes. Startups such as Paradromics aim for 1,600 or more, arguing that extra channels will cut errors and give future users near-perfect speech. Paradromics recently tested its array in a volunteer already scheduled for epilepsy surgery. They inserted the device, captured clean signals within minutes, then removed it before closing. Data rates reached over 130 bits per second – ten times higher than published figures from Neuralink, Elon Musk’s company. Paradromics has started the long U.S. Food and Drug Administration (FDA) process for a clinical trial focused on restoring speech, with UC Davis slated as a lead site.
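“Bits per second” is easier to interpret with a formula in hand. The article does not say how the 130-bit figure or Neuralink’s numbers were measured, and companies use different tasks and definitions, so any head-to-head comparison deserves care. One widely used yardstick in BCI research is Wolpaw’s information transfer rate, which scores each selection among N equally likely targets made with accuracy P and then multiplies by the number of selections per second. The sketch below implements that formula; reporting zero at or below chance accuracy is a common convention and an assumption here, and the example numbers are purely illustrative.

```python
import math


def wolpaw_bits_per_selection(n_targets: int, accuracy: float) -> float:
    """Wolpaw information per selection, in bits:

        B = log2(N) + P*log2(P) + (1 - P)*log2((1 - P) / (N - 1))

    Assumes every target is equally likely and errors are spread evenly
    over the wrong targets. Returns 0.0 at or below chance (P <= 1/N),
    where the formula stops being meaningful.
    """
    if n_targets < 2:
        raise ValueError("need at least two targets")
    if not 0.0 < accuracy <= 1.0:
        raise ValueError("accuracy must be in (0, 1]")
    n, p = n_targets, accuracy
    if p <= 1.0 / n:
        return 0.0
    bits = math.log2(n) + p * math.log2(p)
    if p < 1.0:  # the error term vanishes at perfect accuracy
        bits += (1.0 - p) * math.log2((1.0 - p) / (n - 1))
    return bits


def bits_per_second(n_targets: int, accuracy: float, selections_per_s: float) -> float:
    """Information transfer rate: bits per selection times selection rate."""
    return wolpaw_bits_per_selection(n_targets, accuracy) * selections_per_s


if __name__ == "__main__":
    # Illustrative numbers only: a 32-target grid, 90% accuracy, 2 picks per second.
    print(round(bits_per_second(32, 0.9, 2.0), 2))
```

A speech decoder that emits continuous sound does not map neatly onto discrete selections, which is one more reason headline bit rates from different teams are hard to compare directly.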

Neuralink remains the most publicized player thanks to its outspoken founder, but in its first human implant some of the electrode threads pulled back from the brain, reducing performance. Precision Neuroscience takes a different route: a wafer-thin sheet that sits on the surface of the brain rather than piercing it. That approach may win FDA approval more easily but probably captures weaker signals. Synchron avoids opening the skull entirely, threading its electrodes through the bloodstream into a large vein that runs alongside the motor cortex; it is the least invasive option but also the lowest in resolution. Each design balances surgical risk, recording quality, and regulatory hurdles, and no one yet knows which trade-off will dominate the market.

Why is now the moment for executive attention? Two converging trends – better electronics and better artificial intelligence – have lowered the technical walls that kept BCIs in university labs. Tiny amplifiers and wireless chips can tuck under a skull without overheating. Transformer-based AI models, the same family that powers modern language tools, can digest thousands of neural pulses each second and still run on a laptop-class processor. Vocoders that once required data center servers now fit on a tablet. In other words, the pieces needed for a portable, cable-free speech prosthesis are available off the shelf – the remaining task is product integration and clinical validation.
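A back-of-the-envelope calculation suggests why processing signals on or near the implant matters for going cable-free. The figures below are assumptions for illustration, not specifications of any device in this article: the raw signal is taken as 256 channels sampled 30,000 times per second at 16 bits, and the processed stream as two summary features per channel every 10 ms, also at 16 bits.

```python
def data_rate_bits_per_s(channels: int, values_per_channel_per_s: int,
                         bits_per_value: int) -> int:
    """Bits per second needed to stream every channel's values."""
    return channels * values_per_channel_per_s * bits_per_value


CHANNELS = 256  # electrode count from the UC Davis study

# Assumption: raw voltage sampled at 30 kHz, 16 bits per sample.
raw = data_rate_bits_per_s(CHANNELS, 30_000, 16)

# Assumption: 2 summary features per channel, once every 10 ms (100 per second).
features = data_rate_bits_per_s(CHANNELS, 2 * 100, 16)

if __name__ == "__main__":
    print(f"raw: {raw / 1e6:.2f} Mbit/s")            # raw: 122.88 Mbit/s
    print(f"features: {features / 1e6:.2f} Mbit/s")  # features: 0.82 Mbit/s
    print(f"reduction: {raw // features}x")          # reduction: 150x
```

Under these assumptions, summarizing on the chip shrinks the stream about 150-fold, to under a megabit per second; real devices will use their own sampling rates and feature sets.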

Regulatory costs will be high. Companies predict that pivotal trials alone could consume several hundred million dollars. Yet the potential market is large. Millions of people worldwide live with conditions that steal speech – ALS, brainstem stroke, traumatic injury, head-and-neck cancers. Even a small initial slice of that population could support a viable business, and success in speech will pave the way for related products such as thought-controlled wheelchairs or computer cursors.

Payers and hospital systems will want proof of real-world benefit. Does faster, clearer communication shorten hospital stays? Does it cut medication errors because patients can explain pain levels sooner? Does it let some people return to desk jobs, reducing long-term disability payouts? Early pilot programs need to measure these outcomes, not just lab accuracy.

Privacy concerns must also be addressed. The electrodes listen only to the small patch of cortex that controls the vocal tract, which sharply limits what they can pick up, although recent research suggests that some silently imagined speech may also register there. Still, companies will have to encrypt data in transit and spell out who owns the raw recordings. A simple rule, no cloud storage without explicit consent, would calm many fears.

The BCIs of 2025 are not yet perfect, but they have crossed a threshold that matters.

About the Author:

Dmitry Baraishuk is a partner and Chief Innovation Officer at Belitsoft (a Noventiq company), a healthcare software development company. For 20 years he has led a department specializing in custom software development. The department has completed hundreds of successful projects in AI software development, healthcare and finance IT consulting, application modernization, cloud migration, data analytics implementation, and more for startups and enterprises in the US, UK, and Canada.