Enterprise networks are being restructured to meet the demands of AI and machine learning, which generate more intensive traffic than conventional applications. With the global AI market projected to reach $244.22 billion in 2025 and grow to $1.01 trillion by 2031, businesses face pressure to manage rising data volumes and complex communication patterns. As organizations integrate AI into products and workflows, network performance becomes a crucial limiting factor, prompting a renewed focus on scalable infrastructure for next-generation compute.

As enterprises accelerate AI adoption, existing networks are reaching critical stress points. Unlike traditional IT workloads, AI applications can trigger intermittent data surges, moving terabytes or even petabytes between training systems, data lakes, and inference endpoints.

AI workloads require extremely low latency and rapid data movement. In fact, up to 50% of AI processing time may be spent waiting on network transport, exposing the limits of legacy architectures. The impact extends beyond performance, introducing unexpected costs, security challenges, and even sustainability concerns.

In response, forward-thinking organizations are now considering foundational changes, such as Clos network architectures and deterministic routing solutions designed to support AI’s scale and unpredictability.

Architectural Requirements for AI-Optimized Networks

Most enterprise network architectures were built around steady-state workloads or structured business applications with predictable traffic flows. These legacy systems are quickly reaching their limits in the face of AI-driven operations, where sudden, large-scale data transfers and extremely low latency requirements are the norm.

To meet these challenges, organizations must rethink their infrastructure along several critical dimensions: extremely low latency, deterministic performance, capacity for horizontal scaling, security that protects high-volume interconnects, and high availability.

Rather than incremental adjustments to aging systems, the transition calls for a fundamentally different design model. AI environments require something closer to a distributed, high-capacity intercity transport grid, where traffic routing, load balancing, and fault management are engineered into the foundation, not added after the fact.

Let’s break down the core technical pillars of building a network infrastructure that can reliably support AI-scale demands.

1. Clos Architecture for Scalable, Predictable Performance

Networks built to support AI workloads increasingly rely on nonblocking three-stage or five-stage Clos network architectures. These topologies can deliver an eight- to sixteen-fold increase in port density over conventional designs, enabling rapid data transfer between GPUs and storage systems.
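The port-density argument follows from how a folded Clos grows with switch radix. The sketch below assumes identical switches with k ports each, split half down and half up at every non-top tier, which is the textbook nonblocking case; the radix values are illustrative and are not taken from the benchmarks cited in this article.

```python
def leaf_spine_hosts(k: int) -> int:
    """Host ports in a nonblocking three-stage (leaf-spine) Clos.

    Each leaf uses k/2 ports for hosts and k/2 uplinks (one per spine),
    and each spine's k ports can serve up to k leaves.
    """
    if k % 2:
        raise ValueError("radix must be even")
    return k * (k // 2)


def fat_tree_hosts(k: int) -> int:
    """Host ports in a nonblocking five-stage (three-tier) fat tree: k^3 / 4."""
    if k % 2:
        raise ValueError("radix must be even")
    return k ** 3 // 4


for radix in (32, 64, 128):
    print(f"radix {radix:>3}: 3-stage {leaf_spine_hosts(radix):>7,} hosts, "
          f"5-stage {fat_tree_hosts(radix):>9,} hosts")
```

At the same radix, adding the extra stages multiplies host ports by k/2, which is why very large fabrics tend to add stages rather than rely on ever-larger single switches.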

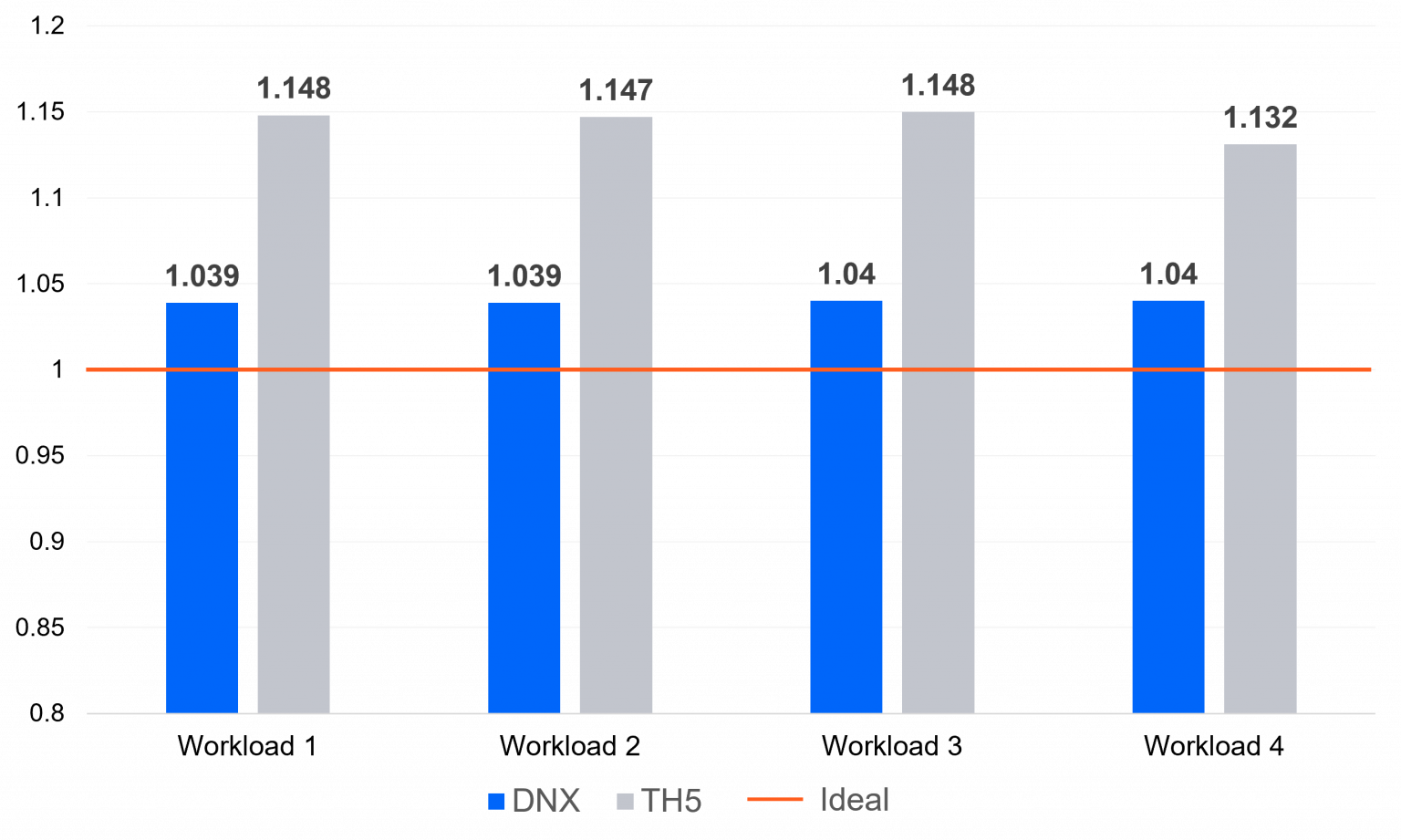

- Parallel Processing Efficiency: In a nonblocking Clos fabric, every leaf connects to every spine, allowing consistent, rapid GPU-to-GPU data transfers. In recent benchmarks, a Distributed Disaggregated Chassis (DDC) architecture built on Broadcom DNX silicon achieved near-ideal Job Completion Time (JCT), while an Ethernet Clos design built on Broadcom Tomahawk 5 (TH5) showed JCTs 10% higher than DDC and 14% above ideal.

- Predictable Low-Latency Performance: DDC-based solutions can scale to 32,000 ports at 800 Gbps each, supporting line-rate throughput and lossless traffic delivery. This matters for large-scale AI model training, where datasets can exceed a petabyte and high bandwidth is essential to keep GPUs fully utilized.

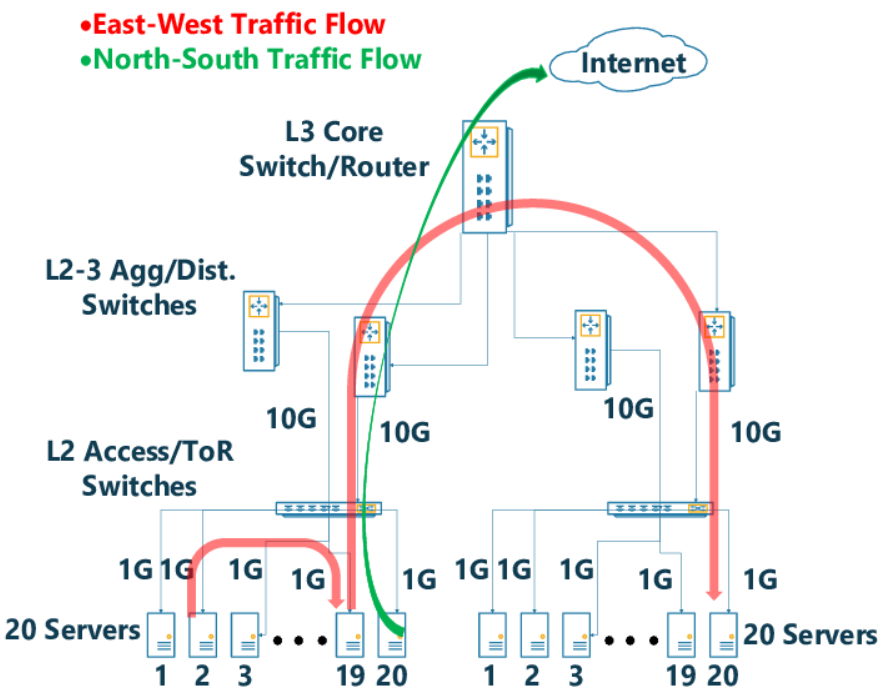

- East-West Optimization: Unlike traditional networks built primarily for north-south traffic (between clients and servers), Clos architectures are optimized for east-west traffic. This design ensures low-latency, high-throughput performance across distributed compute nodes, minimizing congestion and accelerating data exchange during training and inference.

- High Availability: Deploying four devices per layer (leaf and spine) in a Clos topology limits the impact of any single failure compared with legacy designs that use two devices per layer. This redundancy keeps traffic flowing even during individual node or path outages.
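The bandwidth and redundancy points above reduce to simple arithmetic. This sketch assumes ideal line-rate links with no protocol overhead and traffic spread evenly across spines; the 1 PB dataset size is an illustrative assumption, while the 32,000-port and 800 Gbps figures come from the text.

```python
def transfer_seconds(num_bytes: float, link_gbps: float) -> float:
    """Ideal time to move num_bytes over one link, ignoring protocol overhead."""
    return num_bytes * 8 / (link_gbps * 1e9)


def aggregate_pbps(ports: int, port_gbps: float) -> float:
    """Total fabric port bandwidth in petabits per second."""
    return ports * port_gbps / 1e6


def surviving_capacity(num_spines: int, failed: int = 1) -> float:
    """Fraction of leaf uplink capacity left after `failed` spines go down,
    assuming traffic is spread evenly across all spines."""
    return (num_spines - failed) / num_spines


print(f"1 PB over one 800G link: {transfer_seconds(1e15, 800) / 3600:.2f} h")
print(f"32,000 x 800G ports: {aggregate_pbps(32_000, 800):.1f} Pbps aggregate")
for spines in (2, 4):
    print(f"{spines} spines, one failed: {surviving_capacity(spines):.0%} capacity left")
```

With two spines, a single failure halves uplink capacity; with four, three quarters remains, which is the practical meaning of "limiting the impact" of a failure.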

2. VXLAN and EVPN for Multi-Cloud Flexibility

With 35% of global enterprises already deploying AI and 42% actively exploring adoption, organizations are rapidly turning to multi-cloud strategies to scale their AI initiatives. A VXLAN-EVPN overlay addresses the key challenge of connecting distributed cloud, data center, and edge environments efficiently and securely.

- Scalable, Transport-Neutral Networking: VXLAN allows the creation of roughly 16 million (2^24) virtual networks via MAC-in-UDP encapsulation, dwarfing the 4,094 usable IDs of a legacy 12-bit VLAN tag. These overlays run over the public internet, MPLS, leased circuits, and dark fiber alike.

- Dynamic Routing: EVPN uses MP-BGP to distribute MAC and IP reachability across Clos layers, streamlining workload mobility between environments with far less manual configuration.

- Traffic Segmentation and Encryption: VXLAN/VLAN segmentation isolates sensitive workloads, while TLS 1.3 secures data in transit with minimal overhead, supporting compliance with little performance penalty.
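To make the encapsulation concrete, the sketch below packs and parses the 8-byte VXLAN header defined in RFC 7348, whose 24-bit VXLAN Network Identifier (VNI) is what yields roughly 16 million segments. It covers only the VXLAN header itself; the outer Ethernet/IP/UDP headers and the EVPN control plane are out of scope, and VNI 5000 is an arbitrary example value.

```python
import struct

VXLAN_UDP_PORT = 4789        # IANA-assigned destination UDP port (RFC 7348)
VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field is valid
MAX_VNI = 2 ** 24 - 1        # 24-bit VXLAN Network Identifier


def build_vxlan_header(vni: int) -> bytes:
    """Return the 8-byte header: flags, 24 reserved bits, VNI, 8 reserved bits."""
    if not 0 <= vni <= MAX_VNI:
        raise ValueError(f"VNI must fit in 24 bits, got {vni}")
    # '!B3xI': flags byte, 3 zero pad bytes, then a 32-bit word whose top 24 bits are the VNI
    return struct.pack("!B3xI", VXLAN_FLAG_VNI_VALID, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking the I flag."""
    flags, word = struct.unpack("!B3xI", header[:8])
    if not flags & VXLAN_FLAG_VNI_VALID:
        raise ValueError("I flag not set")
    return word >> 8


hdr = build_vxlan_header(5000)
print(hdr.hex())       # 0800000000138800
print(parse_vni(hdr))  # 5000
print(f"VXLAN segments: {MAX_VNI + 1:,} vs usable VLAN IDs: {4096 - 2:,}")
```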

Together, VXLAN and EVPN form a resilient, scalable backbone for AI workloads, enabling enterprises to extend Clos-based data center networks into cloud and edge environments with agility and confidence.

Structural Trends Influencing AI Networking

Recent developments in enterprise networking reflect several trends that are influencing how organizations approach AI infrastructure:

AI-Driven Self-Healing Network Systems

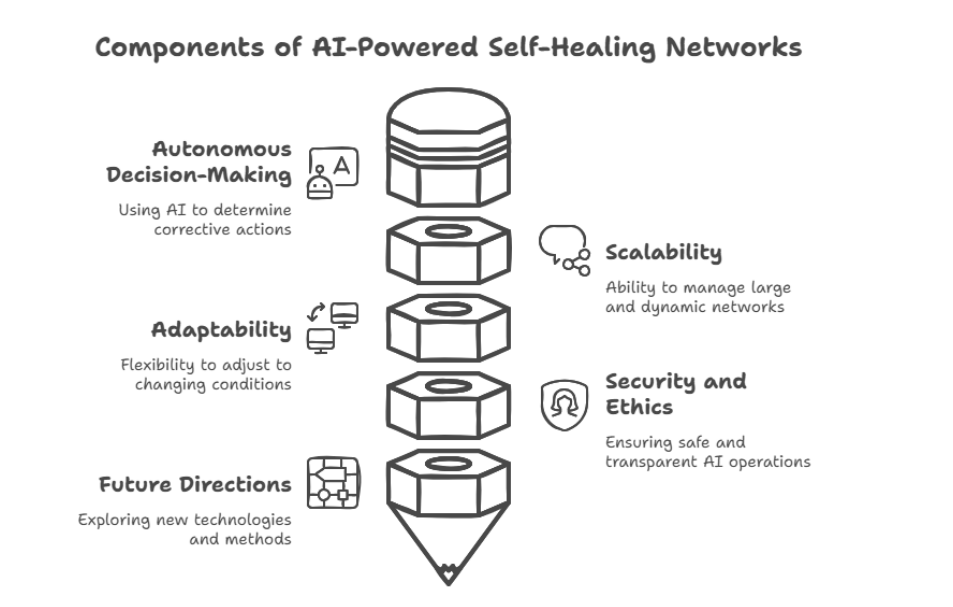

The operational complexity of network topologies is driving the rise of autonomous, self-healing systems. AI-powered orchestration tools continuously monitor network health, automatically reconfiguring paths and reallocating resources to maintain optimal performance. These tools replace fragmented, manual processes with unified configuration management across the entire network fabric, anticipating potential conflicts, ensuring consistency, and proactively preventing failures.

More advanced deployments go beyond automation to implement full AI-driven traffic engineering. In these systems, network paths are dynamically restructured in response to real-time telemetry, enabling continuous optimization without human intervention.
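The detect-and-reroute loop behind self-healing fabrics can be illustrated with a deliberately simple sketch. Here a fixed loss threshold stands in for the AI-driven anomaly detection described above, the four-switch topology and telemetry values are hypothetical, and path selection is a plain breadth-first search rather than any vendor's traffic-engineering algorithm.

```python
from collections import deque

# Hypothetical two-leaf, two-spine fabric; every leaf links to every spine.
LINKS = [("leaf1", "spine1"), ("leaf1", "spine2"),
         ("leaf2", "spine1"), ("leaf2", "spine2")]


def healthy_adjacency(links, telemetry, max_loss=0.01):
    """Build an adjacency map that excludes links reported down or lossy."""
    adj = {}
    for link in links:
        stats = telemetry.get(link, {"up": True, "loss": 0.0})
        if not stats["up"] or stats["loss"] > max_loss:
            continue  # treat the link as failed and route around it
        a, b = link
        adj.setdefault(a, []).append(b)
        adj.setdefault(b, []).append(a)
    return adj


def shortest_path(adj, src, dst):
    """Breadth-first search; returns the hop list, or None if unreachable."""
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nxt in sorted(adj.get(node, [])):
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    return None


def reconcile(telemetry, src="leaf1", dst="leaf2"):
    """One pass of the monitor -> detect -> reroute loop."""
    return shortest_path(healthy_adjacency(LINKS, telemetry), src, dst)


print(reconcile({}))                                                 # ['leaf1', 'spine1', 'leaf2']
print(reconcile({("leaf1", "spine1"): {"up": True, "loss": 0.05}}))  # ['leaf1', 'spine2', 'leaf2']
```

A production system would run this continuously against streaming telemetry and push the resulting paths to the fabric; the sketch only shows the decision step.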

IPv6 Implementation for Enterprise IoT Integration

As enterprise IoT platforms scale, IPv6 adoption is becoming a practical necessity. IPv6’s 128-bit address scheme allows every device to be individually addressed without the complexity of network address translation, reducing latency and simplifying security configurations. Networks running on IPv6 report improved reliability, better encryption support via IPsec, and fewer misconfigurations, all of which support smoother AI-to-device communication.
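The addressing argument is easy to check with Python's standard `ipaddress` module. The sketch uses a hypothetical /48 site prefix from the 2001:db8::/32 documentation range; the point is only that a single /64 leaves room to give every device its own address without NAT.

```python
import ipaddress

# Total address space of each protocol family.
ipv4_total = ipaddress.ip_network("0.0.0.0/0").num_addresses
ipv6_total = ipaddress.ip_network("::/0").num_addresses
print(f"IPv4: 2^{ipv4_total.bit_length() - 1}, IPv6: 2^{ipv6_total.bit_length() - 1}")

# Hypothetical site prefix from the documentation range.
site = ipaddress.ip_network("2001:db8:1234::/48")
print(f"/64 subnets available: {2 ** (64 - site.prefixlen):,}")

# Assign the first /64 to an IoT sensor segment and address a device directly.
sensor_net = next(site.subnets(new_prefix=64))
sensor = sensor_net[0x10]
print(sensor_net, sensor)
```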

Hardware-Accelerated Encryption for Edge Security

As IoT systems carry an increasing share of AI workloads, network infrastructure is being pushed to handle higher port density and greater thermal output. Co-packaged optics (CPO) are emerging as a response to these constraints, reducing heat and power needs per port, while hardware-based encryption offloads cryptographic processing from CPUs. This results in better throughput, stronger security, and longer device life, especially in resource-constrained environments.

Image: Shutterstock

Cloud-Native Network Security Services

Centralized firewalls no longer meet the demands of AI systems spread across cloud, edge, and on-premises environments. Enterprises are turning to zero-trust frameworks, enforced through cloud-native services, which deliver:

- Scalability for high-volume encrypted traffic

- Unified policy control across hybrid environments

- Deep traffic visibility, including encrypted flows

Adopting cloud-native network security provides flexible, resilient protection as AI models span edge, cloud, and data center environments.
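Zero-trust enforcement ultimately comes down to a default-deny decision made per request, based on identity and context rather than network location. The sketch below is a hypothetical, minimal policy check: the workload names, the device-compliance and mutual-TLS signals, and the rule format are all illustrative assumptions, not the API of any particular cloud security service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    identity: str           # authenticated workload identity
    service: str            # destination service
    environment: str        # "edge", "cloud", or "datacenter"
    device_compliant: bool  # posture signal from the endpoint
    mtls_verified: bool     # peer certificate validated


@dataclass(frozen=True)
class Rule:
    identity: str
    service: str
    environments: frozenset


# Hypothetical policy applied uniformly across hybrid environments.
POLICY = (
    Rule("training-pipeline", "feature-store", frozenset({"cloud", "datacenter"})),
    Rule("edge-inference", "model-registry", frozenset({"edge"})),
)


def authorize(req: Request, policy=POLICY) -> bool:
    """Default deny: allow only verified, compliant callers that match a rule."""
    if not (req.mtls_verified and req.device_compliant):
        return False
    return any(
        rule.identity == req.identity
        and rule.service == req.service
        and req.environment in rule.environments
        for rule in policy
    )


print(authorize(Request("edge-inference", "model-registry", "edge", True, True)))   # True
print(authorize(Request("edge-inference", "model-registry", "cloud", True, True)))  # False
```

Because the same rule set is evaluated wherever the request lands, this style of check is what lets one policy span edge, cloud, and data center.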

Conclusion: Designing for AI-Capable Infrastructure

Enterprise networks, once designed for modest client-server transactions, now face the challenge of high-density, parallel data movement. The bandwidth and latency thresholds required by AI applications far exceed those of traditional workloads.

Clos topologies, deterministic routing, and VXLAN/EVPN overlays provide the technical capabilities required for managing AI workloads efficiently. These frameworks support intensive lateral data flows and hybrid deployments, and reduce disruptions that delay training cycles and inference outputs. Their economic benefits are also measurable: for a fixed GPU allocation, trimming JCT by 10% cuts the GPU-hours consumed per job by roughly 10%, a direct cost saving at scale.
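The cost argument is simple arithmetic. The sketch below assumes a fixed GPU allocation per job, so GPU-hours scale linearly with JCT; the cluster size, job length, and hourly rate are hypothetical. It also shows that removing a "10% higher" JCT gap, like the one in the benchmark cited earlier, shortens jobs by about 9.1% rather than 10%, because 1 - 1/1.10 ≈ 0.091.

```python
def gpu_hours(num_gpus: int, jct_hours: float) -> float:
    """GPU-hours consumed by one job when all GPUs are held for the full JCT."""
    return num_gpus * jct_hours


def reduction_from_gap(gap: float) -> float:
    """JCT reduction from removing a relative gap (e.g. 0.10 for '10% higher')."""
    return 1 - 1 / (1 + gap)


def savings(num_gpus: int, baseline_jct_h: float, jct_reduction: float,
            usd_per_gpu_hour: float) -> tuple[float, float]:
    """GPU-hours and dollars saved per job for a given fractional JCT reduction."""
    before = gpu_hours(num_gpus, baseline_jct_h)
    after = gpu_hours(num_gpus, baseline_jct_h * (1 - jct_reduction))
    saved = before - after
    return saved, saved * usd_per_gpu_hour


# Hypothetical: 1,024 GPUs, 100-hour training job, $2.50 per GPU-hour.
hours, dollars = savings(1024, 100.0, 0.10, 2.50)
print(f"10% shorter JCT saves {hours:,.0f} GPU-hours (${dollars:,.0f}) per job")
print(f"Closing a 10% JCT gap shortens jobs by {reduction_from_gap(0.10):.1%}")
```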

The future of enterprise networking lies in architectural models that enable AI innovation while optimizing resource use. Organizations that modernize now stand to avoid slowdowns, reduce waste, and build infrastructure that can meet demand without compromise.

About the Author:

Jigar Babaria is a Senior Network Development Engineer with over 19 years of experience designing, implementing, and securing enterprise network infrastructures for global organizations. He holds US Patent #10931624, which outlines a method for deterministic routing over public internet infrastructure. His technical background includes deep specialization in zero-trust architecture, network segmentation, and secure cloud integration. Babaria also holds certifications such as CCIE SP (Written), CCIP, and JNCIS-M, and has served in evaluation roles for enterprise technology partners, helping define implementation benchmarks across the industry.

References:

Statista. (2025, March). Artificial intelligence – worldwide: Market forecast. https://www.statista.com/outlook/tmo/artificial-intelligence/worldwide

DriveNets. (2024, January 23). Understanding AI workloads in hyperscaler networking. https://drivenets.com/resources/education-center/what-is-ai-workload/

Froehlich, A. (2024, April 11). Building networks for AI workloads. TechTarget. https://www.techtarget.com/searchnetworking/tip/Building-networks-for-AI-workloads

Cohen, D. (2023, November 22). Network Cloud AI: practical applications and benefits. DriveNets. https://drivenets.com/blog/network-cloud-ai-from-theory-to-practice/

UfiSpace. (2024, December 27). Optimizing networking for AI workloads: A comprehensive guide. https://www.ufispace.com/company/blog/networking-for-ai-workloads

Khattak, M. K., Tang, Y., Fahim, H., Rehman, E., & Majeed, M. F. (2020, February). Effective routing technique: augmenting data center switch fabric performance. IEEE Access, 8, 37372–37382. https://doi.org/10.1109/access.2020.2973932

Salminen, M., & Mauladhika, B. F. (2025, February 7). AI statistics and trends: New research for 2025. Hostinger. https://www.hostinger.com/tutorials/ai-statistics

Katragadda, S., & D, G. (2023). Self-Healing Networks: Implementing AI-Powered Mechanisms to Automatically Detect and Resolve Network Issues with Minimal Human Intervention. International Journal of Scientific Research and Engineering Development. https://ijsred.com/volume6/issue6/IJSRED-V6I6P123.pdf

Taylor, J. (2025, March 28). Co-Packaged optics – end of pluggables? What it is, why it matters, and who needs to pay attention. CABLExpress®. https://www.cablexpress.com/blog/co-packaged-optics-end-of-pluggables-what-it-is-why-it-matters-and-who-needs-to-pay-attention

Hashiyana, V., Haiduwa, T., Suresh, N., Bratha, A., & Ouma, F. (2020, May 1). Design and implementation of an IPsec virtual private network: A case study at the University of Namibia. IEEE Xplore. https://ieeexplore.ieee.org/document/9144027

Featured Image: FAMILY STOCK | Shutterstock