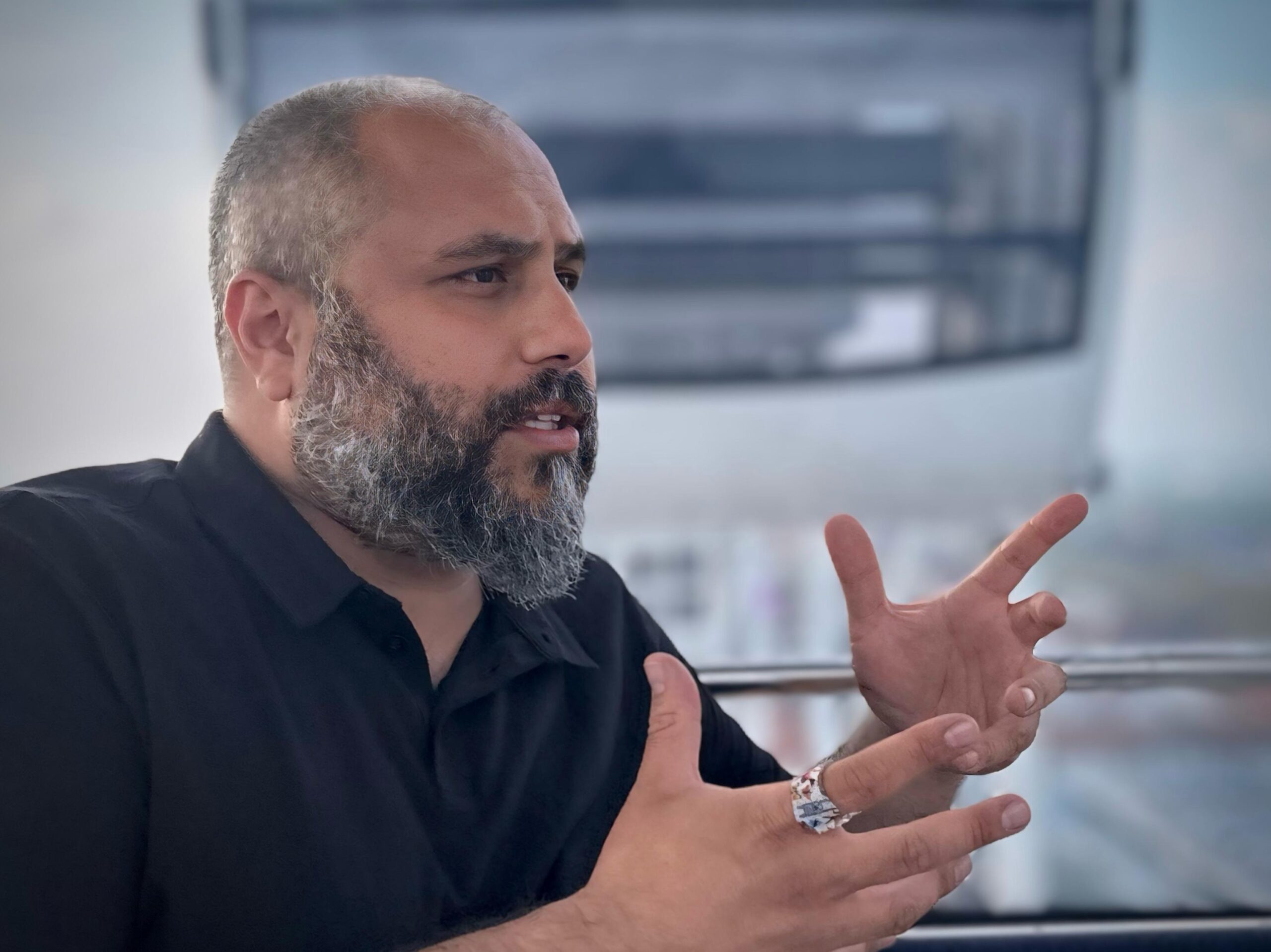

By Amir Karimi

With over 900,000 unresolved cases burdening the German judicial system, the call for greater efficiency is intensifying. Artificial intelligence (AI) is being hailed as a potential solution — but the constitutional and legal barriers remain firmly in place. In Germany, the notion of a fully autonomous “robo-judge” remains constitutionally impermissible. AI may support, but it must never decide.

Constitutional Boundaries and Legal Certainty

In a landmark decision (Case X ZB 5/22, June 11, 2024), Germany’s Federal Court of Justice (BGH) ruled that AI systems cannot be recognized as inventors under patent law. The case centered on DABUS, an AI system that had been named as the inventor in patent applications worldwide. The court was unequivocal: “Only a natural person can be considered an inventor under § 37(1) PatG.”

“The legal framework is unambiguous — machines cannot assume legal responsibility; authorship and accountability remain human domains,” explains Amir Karimi, a Germany-based analyst and advisor specializing in AI regulation.

This principle extends far beyond patent law. In copyright, liability, and administrative law, legal personhood (and with it legal responsibility) is attributed only to human beings and the legal entities they establish, never to machines. AI remains a tool, comparable to a word processor, and never the author of legal consequences.

Judicial Independence as a Constitutional Anchor

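The German Constitution enshrines judicial independence as a foundational pillar of democratic order. Article 92 of the Basic Law (Grundgesetz) entrusts judicial power to judges, Article 97 guarantees their independence, and Article 101(1) guarantees everyone the right to their lawful judge. Read together, these provisions require that judicial authority be exercised by natural persons. Any attempt to automate core judicial decisions would violate this principle.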

“Technological efficiency cannot justify the erosion of legal responsibility,” notes Karimi. “Judicial rulings must ultimately be made by humans — not algorithms.”

This principle also serves as a safeguard against automation bias, the cognitive tendency to overtrust machine-generated results. The opacity of many AI systems, often referred to as the “black box problem,” conflicts directly with the principle of verifiability, which is indispensable in a constitutional democracy.

AI in Practice: Supportive, Not Sovereign

Despite strict constitutional limits, AI applications are already shaping day-to-day operations within German courts. At the Stuttgart Higher Regional Court, the “OLGA” system assists in categorizing thousands of mass litigation cases related to diesel emissions. In Frankfurt, the “FRAUKE” system automates draft rulings for air passenger compensation cases. In Hanover, a judge reported that AI systems now complete data extraction tasks in four seconds, tasks that once took two hours.

Other innovations include automated pseudonymization tools and systems capable of scanning asylum files. Bavaria and North Rhine-Westphalia are currently developing a GPT-style language model tailored specifically to judicial contexts.

“These developments are impressive — but must remain auxiliary. AI cannot and must not replace judicial reasoning,” says Karimi.

The Legal Maze: Data Protection and Liability

The General Data Protection Regulation (GDPR) applies in full to AI tools operating in the judiciary. A particularly thorny question is whether a trained model itself contains personal data, that is, whether information about identifiable individuals can be extracted from it. As the technology evolves, so do these risks.

Even more complex is the issue of liability. Since AI lacks legal personhood, it cannot be held liable for its actions. Instead, responsibility may fall on developers, owners, or operators — an evolving legal dilemma for both courts and legislators.

The AI Act: Europe’s Regulatory Milestone

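On August 1, 2024, the European Union’s AI Act entered into force. It classifies AI systems intended to assist judicial authorities in researching and interpreting facts and law as “high-risk.” It also imposes strict standards for documentation, oversight, and human control on these systems, and most of these obligations apply from August 2026.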

Dr. Jutta Kemper, Deputy Head of Unit at the German Ministry of Justice, reaffirmed this stance: “The final decision must always rest with a human.”

“The AI Act establishes the legal perimeter within which innovation can thrive without undermining the rule of law,” Karimi observes. “It’s not a barrier — it’s a safeguard.”

The Act sends a clear message: law is not a testing ground for uncontrolled automation. Regulatory clarity, not deregulation, is the path forward.

Ethics Meets Innovation

One of the most ambitious undertakings is the TITAN project — a €1.5 million initiative exploring how AI can assist, but not replace, legal reasoning. Universities are responding with academic lecture series on AI and law, while practical manuals like the AI and Law Handbook outline the intersection between innovation, liability, and legal tradition.

“This is not merely a technological debate — it’s a discourse about democratic values,” Karimi notes. “We must build an interdisciplinary ethics of responsibility for AI in law.”

As ministries prepare to unveil a nationwide AI justice strategy in 2025, the balance between digital transformation and constitutional fidelity remains fragile — and essential.

Conclusion: A Hybrid Judiciary — with Rules

AI will transform the judiciary — but it will not replace it. Human judges remain constitutionally indispensable. AI can expedite repetitive tasks and assist with case analysis, but it cannot render final judgments. The constitutional mandate is clear.

“The challenge lies in drawing a firm line between support and sovereignty,” Karimi concludes. “Only when humans remain in control can the judiciary maintain its legitimacy.”

The future of justice in Germany will not be purely human — nor purely artificial. It will be hybrid: a carefully calibrated synthesis of judicial expertise and technological augmentation.

FAQs: Artificial Intelligence in the Judiciary

Can AI deliver verdicts in Germany?

No. The Basic Law mandates that only human judges may issue court rulings. AI may only provide support functions.

How is AI currently used?

To categorize mass litigation (e.g., OLGA), assist with legal research, analyze court files, and anonymize rulings.

What are the main legal challenges?

Ensuring GDPR compliance, resolving liability in the case of faulty decisions, and preserving decision-making transparency.

What does the AI Act regulate?

It categorizes judicial AI as high-risk, requiring robust documentation, human oversight, and traceability of decisions.

How is Germany preparing for broader AI deployment in courts?

Through a nationwide AI justice strategy, cross-state data platforms planned for 2026, and research initiatives such as TITAN.

Selected Sources:

- German Federal Ministry of Justice – Chatbot Legal Advisory Report
- University of Konstanz – AI in the Judiciary
- Legal-Tech.de – AI in Justice: Update
- IBM Germany – AI and Justice
- Beck-Shop – AI and Law Handbook

About Amir Karimi

Amir Karimi is a seasoned tech entrepreneur and analyst specializing in artificial intelligence in Germany. With deep expertise in research trends, market dynamics, and regulatory frameworks, he advises organizations on the ethical and strategic deployment of AI technologies.