In today’s digital economy, data has evolved from a mere asset to a strategic imperative. Enterprises across industries rely on vast volumes of data to inform decisions, drive innovation, and sustain competitive advantage. However, the true value of data lies not in its collection, but in how seamlessly it can be integrated, transformed, and operationalized within increasingly complex technology ecosystems.

Modern organizations are inundated with data from diverse sources such as IoT sensors, mobile applications, legacy databases, real-time event streams, and third-party APIs. Effectively managing this data requires robust ETL (Extract, Transform, Load) processes that unify disparate data formats and systems into coherent, analytics-ready resources. Once relegated to backend operations, data integration now plays a central role in shaping enterprise strategy.

Yet despite this evolution, significant challenges persist. A recent Gartner report revealed that over 80% of enterprise analytics initiatives are delayed due to fragmented data pipelines, inflexible architectures, and outdated integration technologies. In 2022 alone, the global data integration software market grew by 9% to $4.6 billion, driven by vendors offering cloud-native and specialized delivery models. As demand escalates, so too does the cost of inefficiency, manifesting in delayed insights, inconsistent reporting, increased compliance risk, and lost opportunity. With the ETL tools market expected to exceed $22 billion by 2027, the need for intelligent, scalable, cloud-compatible integration frameworks has never been more urgent.

A Research-Driven Breakthrough in ETL Automation

Amid this backdrop, a timely and impactful research paper titled “Enhancing Data Integration and ETL Processes Using AWS Glue” was published in the International Journal of Research and Analytical Reviews in December 2024. The paper explores how AWS Glue, a fully managed, serverless ETL service from Amazon Web Services, can fundamentally reshape enterprise data integration.

Authored by a cross-industry team of software engineers from leading organizations including a global financial institution, Verizon, Meta, and Tata Consultancy Services, the study highlights how AWS Glue’s modular architecture enables scalable, cost-effective, and automation-friendly ETL workflows.

Redefining the ETL Landscape with AWS Glue

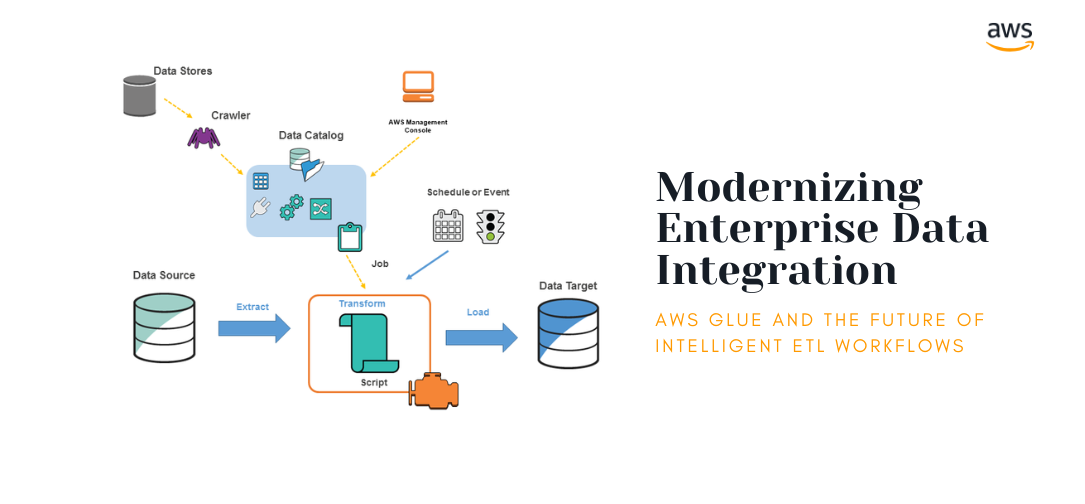

The paper positions AWS Glue as a key enabler of real-time analytics, cloud migration, and data lake formation. Central to this is Glue’s modular architecture, which includes components such as Glue Crawlers for automated schema discovery and Glue Workflows for orchestrating complex, multi-step transformations.

As Balakrishna Pothineni, one of the principal contributors, explains:

“Data integration is no longer just about moving data from point A to B. It’s about intelligently adapting to evolving schemas, securing data in transit, and orchestrating distributed transformations with minimal latency and operational burden.”

The study showcases AWS Glue’s versatility in handling a wide array of data sources, from traditional systems like Oracle and SQL Server to real-time streams via Amazon Kinesis. The pay-as-you-go serverless model provides a cost-efficient path to scale, while integrations with tools like Glue Studio, Amazon SageMaker, and CI/CD platforms simplify the development and deployment of production-grade pipelines.

Glue’s Spark-based DynamicFrame API further enhances flexibility in managing schema drift and executing complex transformations. Its interoperability with AWS ecosystem services strengthens its application across analytics, machine learning, and compliance pipelines.
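To make the idea of schema drift concrete, here is a minimal sketch in plain Python. It does not use the AWS Glue libraries, and the function names (`infer_field_types`, `find_drifted_fields`, `cast_field`) and the sample records are hypothetical. It only illustrates the general pattern of detecting a field whose type varies across records and then casting it to a single type, which is roughly the kind of problem a DynamicFrame's `resolveChoice` transform is meant to address.

```python
from collections import defaultdict


def infer_field_types(records):
    """Map each field name to the set of Python type names seen across records."""
    seen = defaultdict(set)
    for record in records:
        for field, value in record.items():
            seen[field].add(type(value).__name__)
    return dict(seen)


def find_drifted_fields(records):
    """Return the sorted names of fields that appear with more than one type."""
    types = infer_field_types(records)
    return sorted(field for field, names in types.items() if len(names) > 1)


def cast_field(records, field, caster):
    """Return copies of the records with `field` cast by `caster`.

    Records that lack the field are copied unchanged. Values that cannot
    be cast are replaced with None rather than raising an error.
    """
    resolved = []
    for record in records:
        copy = dict(record)
        if field in copy:
            try:
                copy[field] = caster(copy[field])
            except (TypeError, ValueError):
                copy[field] = None
        resolved.append(copy)
    return resolved


# Hypothetical sample: order_id arrives as an int from one source and as a
# string from another, and a new optional column appears in later records.
records = [
    {"order_id": 1, "amount": 19.99},
    {"order_id": "2", "amount": 5.00},
    {"order_id": 3, "amount": 7.25, "coupon": "SPRING"},
]

drifted = find_drifted_fields(records)
resolved = cast_field(records, "order_id", int)
```

In this sketch only `order_id` is flagged, because `coupon` is merely optional rather than inconsistent in type. A production pipeline would likely treat newly appearing columns as a separate kind of drift.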

Pothineni adds:

“This research lays the groundwork for building self-healing data pipelines with systems that detect, isolate, and remediate anomalies without human intervention.”

Applied Innovation: Real-World Use Cases

The paper also explores high-impact applications including automated data lake formation, real-time fraud detection through Amazon Kinesis, and cloud migration from legacy systems. Of particular interest is the analysis of AWS Glue’s cost-efficiency under serverless deployment, which offers a blueprint for organizations aiming to deliver rapid insights without inflating infrastructure costs.

Verizon contributor Ashok Gadi Parthi remarks:

“The ETL framework we evaluated wasn’t just technically elegant. It was compliance-aware and CI/CD integrated, something many enterprises overlook when designing analytics systems for scale.”

He continues:

“What made this ETL framework stand out was its seamless integration of compliance, security, and performance. Many enterprise systems today lack that trifecta.”

Addressing Challenges and Architecting Resilience

While emphasizing AWS Glue’s advantages, the paper candidly acknowledges its limitations, such as the difficulty of debugging Spark-based jobs and the potential for cost volatility at scale. The authors propose mitigations, including Terraform-based provisioning, ML-powered anomaly detection, and automated schema validation, to bolster pipeline reliability and integrity.
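Two of these mitigations can be sketched in a few lines of plain Python. The example below is an illustration under stated assumptions, not the authors' implementation: the expected schema, the function names, and the row counts are hypothetical, and a simple z-score check stands in for the ML-powered anomaly detection the paper describes.

```python
from statistics import mean, stdev

# Hypothetical expected schema for an incoming dataset.
EXPECTED_SCHEMA = {"order_id": int, "amount": float}


def validate_record(record, schema):
    """Return a list of human-readable problems; an empty list means valid."""
    problems = []
    for field, expected in schema.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            actual = type(record[field]).__name__
            problems.append(f"{field}: expected {expected.__name__}, got {actual}")
    return problems


def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it lies more than `threshold` sample standard
    deviations from the mean of `history` (a basic z-score test)."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    spread = stdev(history)
    if spread == 0:
        return latest != history[0]
    return abs(latest - mean(history)) / spread > threshold


# Hypothetical row counts from previous pipeline runs.
row_counts = [1000, 1020, 980, 1010, 990]

ok_problems = validate_record({"order_id": 1, "amount": 9.5}, EXPECTED_SCHEMA)
bad_problems = validate_record({"order_id": "1"}, EXPECTED_SCHEMA)
normal_run = is_anomalous(row_counts, 1005)
suspicious_run = is_anomalous(row_counts, 200)
```

A pipeline could run checks like these before loading a batch and quarantine the batch when either one fails. The threshold of three standard deviations is an arbitrary starting point that would need tuning against real run history.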

TCS contributor Durgaraman Maruthavanan adds:

“We believe this work provides a foundation for embedding ML-driven anomaly detection and auto-healing capabilities in data pipelines, pushing the boundaries of what ETL systems can autonomously manage.”

Looking Ahead: From Serverless to Self-Healing Pipelines

A major takeaway from the research is its vision for integrating AWS Glue with AI and ML models to enable intelligent ETL systems. Potential advancements include real-time data quality monitoring, adaptive schema recognition, and tighter integration with hybrid and multi-cloud environments.

As organizations enter a new era of data complexity, this research offers more than technical insight; it serves as a blueprint for resilient, AI-driven data infrastructure. By focusing on automation, observability, and interoperability, the study underscores the strategic role of data integration in powering real-time analytics, regulatory compliance, and enterprise transformation.