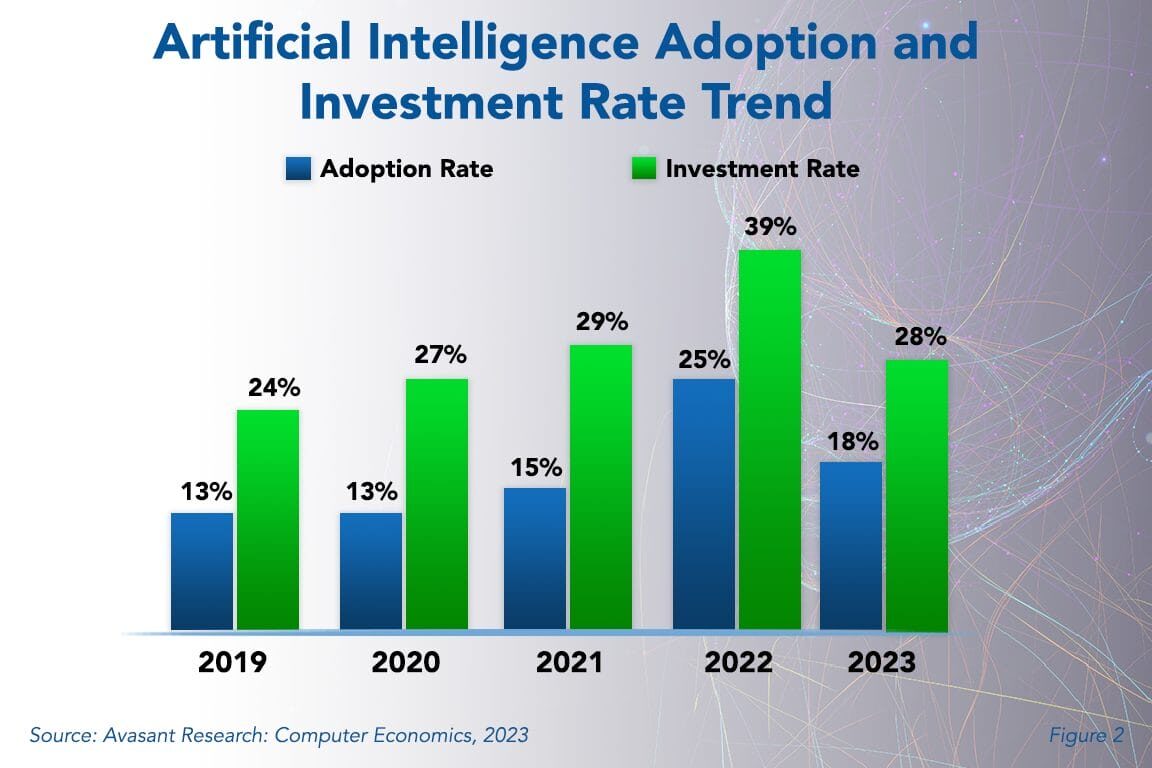

AI on mobile used to mean camera filters or unlocking your phone with a glance. It was clever but superficial. Recent research and industry trends point to a significant shift. According to Dwivedi et al. (2021), AI’s role in mobile computing is moving from reactive assistance to proactive engagement, in which models infer user intent, optimize performance, and anticipate needs.

This reflects a broader shift toward embedded, context-aware intelligence. As Dr. Kate Crawford (2023) notes, “Incorporating AI in edge devices like smartphones enhances real-time responsiveness, privacy, and autonomy,” a sentiment echoed across industry panels and academic discourse. Such insights show that AI-first engineering isn’t just a design shift but a philosophical one, influencing every layer of development from chip design to software ecosystems.

AI in Your Palm: Engineering the Rise of AI-First Mobile Phones

It was 2:12 a.m., Seoul time. I was on a video call with engineers in Suwon, debugging inference drift on a prototype device, while Bangalore was winding down for the night and Dallas was in the middle of its workday.

We were syncing modem firmware and AI behavior on a phone that might someday help first responders save lives. That was when I realized that AI wasn’t an enhancement—it had become the device’s heartbeat.

Over the past two decades, I’ve watched mobile phones evolve from voice-centric tools to mission-critical lifelines.

Today, we’re entering an even more profound transformation.

Phones are no longer just reactive—they’re beginning to sense, adapt, and respond proactively. AI is no longer an add-on. It’s built into the foundation, reshaping everything we thought we knew about mobile design.

As someone who’s engineered systems tough enough to survive oil rigs, subways, and front lines, I’ve learned that the future of mobile isn’t about screens or processors. It’s about intelligence. More specifically, embedded intelligence.

The rise of AI-first mobile phones marks a shift from device-centric design to experience-centric engineering, and we’re only at the beginning.

Understanding AI in Mobile: Beyond Smart Features

AI on mobile used to mean camera filters or unlocking your phone with a glance. It was clever, but superficial.

Today, it’s about how the phone processes the world around it and makes real-time decisions, often under pressure. AI isn’t a luxury; it’s a lifeline, especially in enterprise and public safety settings.

Picture a Push-to-Talk (PTT) call from a noisy industrial site: your voice message still cuts through clearly. Why? Your phone’s AI has been trained to separate your voice from the background noise and find clarity in chaos.
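The production behavior described here comes from a trained model. As a far simpler classical stand-in, the sketch below shows an energy-based noise gate: it estimates a noise floor from frames assumed to contain only background noise, then attenuates any frame that does not rise clearly above that floor. The frame layout, the 6 dB `margin_db`, and the 0.1 `attenuation` factor are illustrative assumptions, not values from a shipping PTT device.

```python
import math
from typing import List, Sequence


def frame_rms(frame: Sequence[float]) -> float:
    """Root-mean-square energy of one audio frame."""
    return math.sqrt(sum(s * s for s in frame) / len(frame)) if frame else 0.0


def noise_gate(frames: List[List[float]], noise_frames: int = 5,
               margin_db: float = 6.0, attenuation: float = 0.1) -> List[List[float]]:
    """Attenuate frames whose energy is not clearly above the noise floor.

    Assumes the first `noise_frames` frames contain background noise only.
    """
    calibration = frames[:noise_frames]
    floor = sum(frame_rms(f) for f in calibration) / max(1, len(calibration))
    threshold = floor * 10 ** (margin_db / 20)  # dB margin -> amplitude ratio
    gated = []
    for f in frames:
        gain = 1.0 if frame_rms(f) > threshold else attenuation
        gated.append([s * gain for s in f])
    return gated
```

With five quiet frames followed by a loud one, `noise_gate(noise + speech)` scales the quiet frames by 0.1 and passes the loud frame through unchanged. A trained model can do much better, because it learns what speech looks like instead of relying on loudness alone.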

Or consider thermal sensors predicting hardware stress and adjusting performance to preserve uptime. This isn’t theory. I’ve deployed these systems in FirstNet-certified rugged phones in the field.
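Predictive thermal management can be illustrated with a deliberately simple model: fit a straight line to recent temperature readings and throttle before the projected temperature reaches a limit, not after. The least-squares trend, the 45 °C limit, and the 60-second horizon are illustrative assumptions. The deployed systems described here may use learned models and different thresholds.

```python
from typing import Sequence


def linear_slope(times: Sequence[float], temps: Sequence[float]) -> float:
    """Least-squares slope of temperature over time (degrees C per second)."""
    n = len(times)
    mean_t = sum(times) / n
    mean_y = sum(temps) / n
    num = sum((t - mean_t) * (y - mean_y) for t, y in zip(times, temps))
    den = sum((t - mean_t) ** 2 for t in times)
    return num / den if den else 0.0


def should_throttle(times: Sequence[float], temps: Sequence[float],
                    limit_c: float = 45.0, horizon_s: float = 60.0) -> bool:
    """Throttle pre-emptively if the linear trend reaches limit_c within horizon_s."""
    projected = temps[-1] + linear_slope(times, temps) * horizon_s
    return projected >= limit_c
```

For readings of 38, 39, 40, and 41 °C taken 10 seconds apart, the slope is 0.1 °C/s, so the projection one minute out is 47 °C and the function asks to throttle while the device is still well below the limit.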

“Incorporating AI in edge devices like smartphones enhances real-time responsiveness, privacy, and autonomy.”

— Dr. Kate Crawford, Microsoft Research (TED 2023) [1]

That’s a new class of intelligence.

AI’s Role in Global Collaboration and Cross-Functional Engineering

Market context raises the stakes: Canalys reported that worldwide smartphone shipments declined 10% year-on-year to 258.2 million units in Q2 2023, although the rate of decline was slowing compared with previous quarters [2].

Take one device launch. The modem team in Suwon was tuning inference timing for power efficiency. Simultaneously, Android developers in Bangalore were rewriting UI logic that responded dynamically to AI-generated events.

Meanwhile, our certification leads in Dallas were questioning how to define what “expected behavior” even means when the behavior itself can evolve.

AI doesn’t follow deterministic rules—it adapts to context. That forced us to change how we work:

- QA shifted from strict test cases to scenario-based validation.

- Logs became smarter, able to detect inference drift or confidence-level changes.

- Bug triage transformed into a conversation: Is it a defect, or a sign that the model is learning as designed?
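One concrete way for logs to detect confidence-level changes is to compare the distribution of a model's confidence scores in the field against a baseline captured during validation. The sketch below uses the Population Stability Index (PSI), a standard distribution-shift measure. The bin count, the epsilon floor, and alerting above roughly 0.25 (a commonly quoted rule of thumb) are assumptions, not the thresholds used in the deployments described here.

```python
import math
from typing import List, Sequence


def histogram(scores: Sequence[float], bins: int = 10) -> List[float]:
    """Fraction of confidence scores (0..1) falling into each equal-width bin."""
    counts = [0] * bins
    for s in scores:
        idx = min(int(s * bins), bins - 1)  # a score of exactly 1.0 goes in the last bin
        counts[idx] += 1
    return [c / len(scores) for c in counts]


def psi(baseline: Sequence[float], current: Sequence[float],
        bins: int = 10, eps: float = 1e-4) -> float:
    """Population Stability Index between two samples of confidence scores."""
    total = 0.0
    for b, c in zip(histogram(baseline, bins), histogram(current, bins)):
        b, c = max(b, eps), max(c, eps)  # avoid log(0) for empty bins
        total += (c - b) * math.log(c / b)
    return total
```

Identical distributions give a PSI of 0. If a model that used to report around 0.95 confidence starts reporting around 0.55, the PSI jumps far above 0.25. That is exactly the kind of signal a log pipeline could surface for triage, without deciding on its own whether it is a defect or intended learning.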

We even had to redesign product dashboards to track how AI logic impacted modem performance and battery life in real-world field tests. This wasn’t a nice-to-have; it was critical infrastructure.

Building AI-first phones is like conducting a global orchestra where every section is in a different time zone—and the sheet music keeps changing. Harmony isn’t optional. It’s engineered.

The Stack Behind the Intelligence

The difference between AI-powered and AI-first isn’t how much intelligence a phone has. It’s how the system is built, end to end.

1. Hardware Layer

It starts at the silicon level. I’ve led chipset selection across Snapdragon and Exynos platforms, and the same truth applies every time: on-device inference is non-negotiable in high-stakes environments.

Cloud AI is helpful—until you’re in a dead zone, out of time, or under load. In public safety and industrial settings, even milliseconds matter.
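This trade-off can be written as a routing rule. The sketch below is a hypothetical policy. It assumes the device knows whether it has a link, has a recent round-trip-time measurement, and has a latency budget for the task. The 40 ms cloud compute estimate is also an assumption. Work goes to the cloud only when that path is both available and fast enough. Otherwise, the on-device model runs.

```python
from dataclasses import dataclass


@dataclass
class LinkState:
    connected: bool
    rtt_ms: float  # measured round-trip time to a hypothetical cloud endpoint


def choose_inference_path(link: LinkState, budget_ms: float,
                          cloud_compute_ms: float = 40.0) -> str:
    """Return "cloud" only if the link is up and cloud latency fits the budget.

    Otherwise fall back to the on-device model, which never depends on coverage.
    """
    if link.connected and link.rtt_ms + cloud_compute_ms <= budget_ms:
        return "cloud"
    return "on_device"
```

With a 100 ms budget, a 30 ms RTT link routes to the cloud, an 80 ms RTT link falls back to on-device inference, and no link always falls back. In the high-stakes settings described above, a team might go further and pin such tasks to on-device inference unconditionally.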

The wrong chip impacts:

- Thermal performance in field use

- Battery longevity under inference load

- Modem and NPU coordination

- Signal integrity across RF conditions

I’ve seen it firsthand in field test vehicles: signal drops and thermal spikes that traced back to a single architectural choice. These decisions ripple all the way to end-user trust.

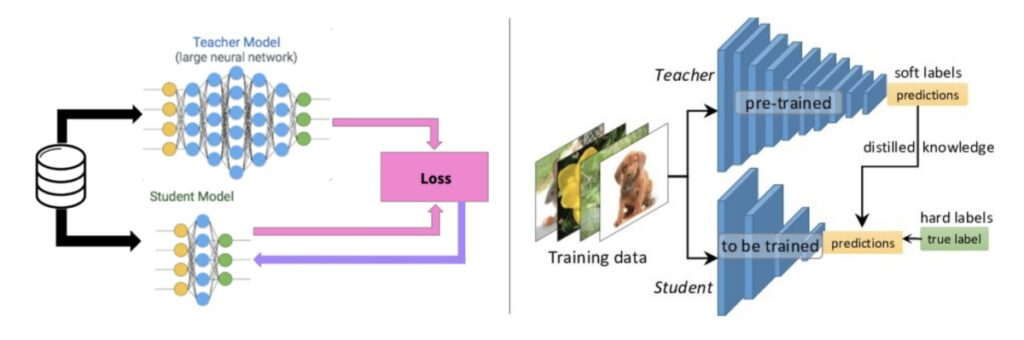

2. Middleware & Firmware

This is the engine room most never see—but where the transformation really takes hold.

We embedded AI reasoning into:

- RIL (Radio Interface Layer)

- NAS and RRC (Layer 3) and MAC (Layer 2) in the modem protocol stack

This involved reprogramming decades-old firmware to interact with inference systems. We had to inject adaptability into code that was never designed to respond to probability.
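A common way to let deterministic code consume probabilistic outputs is to place a gate between them. The legacy state machine acts only when the model's confidence stays above a threshold for several consecutive samples. It releases only after the confidence stays below a lower threshold. The sketch below is a generic hysteresis adapter with hypothetical thresholds. It is not the RIL or modem-stack code described here, which would normally be written in C.

```python
class ConfidenceGate:
    """Turn a stream of model confidences into a stable on/off decision."""

    def __init__(self, on_threshold: float = 0.8, off_threshold: float = 0.6,
                 hold: int = 3) -> None:
        assert off_threshold < on_threshold  # the gap is the hysteresis band
        self.on_threshold = on_threshold
        self.off_threshold = off_threshold
        self.hold = hold  # consecutive samples required before switching state
        self.active = False
        self._streak = 0

    def update(self, confidence: float) -> bool:
        """Feed one confidence sample; return whether the action is engaged."""
        if not self.active:
            self._streak = self._streak + 1 if confidence >= self.on_threshold else 0
            if self._streak >= self.hold:
                self.active, self._streak = True, 0
        else:
            self._streak = self._streak + 1 if confidence < self.off_threshold else 0
            if self._streak >= self.hold:
                self.active, self._streak = False, 0
        return self.active
```

A single spike or dip resets the streak, so the old firmware sees a clean, debounced signal rather than every fluctuation in the model's output. The trade-off is a few samples of added latency before the system reacts.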

It was like teaching an old dog new tricks—and watching it learn.

3. Application Layer

Now, the user touches the intelligence. But they shouldn’t feel the AI. It just needs to work.

Whether it’s a PTT app detecting urgency in your voice or anomaly detection stopping a system exploit, the user experience must remain natural, predictable, and even comforting. The best AI in mobile stays invisible. It doesn’t seek attention; it earns trust through quiet reliability.

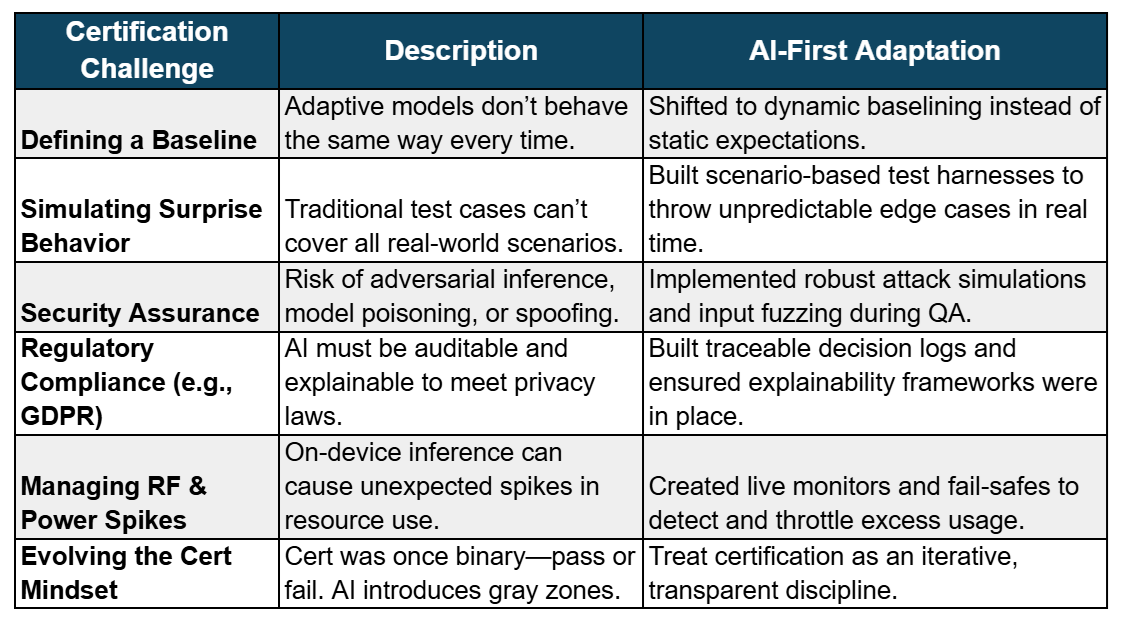

AI and Certification: Redefining What “Correct” Means

Certification used to be about stability. If you could produce repeatable outcomes, you passed.

But what happens when the behavior is dynamic by design?

During SOC certification for a rugged AI-first device [4], one model began throttling connectivity based on predicted usage. It looked like a fault. The certification team flagged it. But it wasn’t a bug—it was the intended behavior of an adaptive system.

The result? We came to treat certification not as a checkbox but as an evolving discipline grounded in transparency and control.
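One way to make adaptive behavior certifiable is to have the device declare, in advance, the bounds within which it may adapt, and to require that every adaptation be logged with its trigger. A test then checks the envelope and the audit trail, not an exact output. The sketch below assumes a hypothetical event record and a hypothetical 50% minimum-throughput floor. Neither comes from the certification program described above.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ThrottleEvent:
    timestamp_s: float
    throughput_fraction: float  # throughput after throttling, relative to baseline (0..1)
    reason: Optional[str]       # model-reported trigger, e.g. "predicted_idle" (hypothetical)


def violations(events: List[ThrottleEvent], min_fraction: float = 0.5) -> List[str]:
    """List envelope violations: throttling below the declared floor or without a reason."""
    problems = []
    for e in events:
        if e.throughput_fraction < min_fraction:
            problems.append(f"t={e.timestamp_s}: throttled below declared floor")
        if not e.reason:
            problems.append(f"t={e.timestamp_s}: throttling without a logged reason")
    return problems
```

Under this framing, the throttling that looked like a fault would pass as long as it stayed within the declared floor and carried a logged reason. Throttling that breaks either rule would still be flagged.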

“Testing AI is like testing a personality—it’s contextual, not just conditional.”

— Dr. Timnit Gebru (TED, 2023)[5]

Real-World AI Systems I’ve Built

Here’s what it looks like when this work meets the real world.

Smartphones (e.g., XP5s, XP8, Samsung Galaxy, and Galaxy XCover Pro)

We trained AI to recognize ambient noise patterns in industrial zones. One day, a technician radioed in from a metal shop. His voice came through clearer than ever. The system had learned the soundscape—and adapted in real time.
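The adaptation described here came from a trained model. A much simpler classical analogue of "learning the soundscape" is a noise-floor tracker. It updates an exponential moving average only on frames it judges to be non-speech, so the floor follows the environment while speech stays out of the estimate. The smoothing factor `alpha` and the 2x speech ratio are hypothetical.

```python
from typing import Iterable, List, Optional


def track_noise_floor(frame_energies: Iterable[float], alpha: float = 0.1,
                      speech_ratio: float = 2.0) -> List[float]:
    """Return the noise-floor estimate after each frame.

    A frame whose energy exceeds speech_ratio * floor is treated as speech and
    does not update the floor; other frames pull the floor toward their energy.
    """
    floors: List[float] = []
    floor: Optional[float] = None
    for energy in frame_energies:
        if floor is None:
            floor = energy  # initialize from the first frame
        elif energy <= speech_ratio * floor:
            floor = (1 - alpha) * floor + alpha * energy  # exponential moving average
        floors.append(floor)
    return floors
```

When a machine in the shop starts up and the background gets louder, the floor climbs toward the new level over a few dozen frames. A burst of speech leaves it untouched, so downstream suppression keeps targeting the noise rather than the voice.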

Mission-Critical Environments (e.g., MCPTT)

We built adaptive AI models into Android and iOS devices to modulate modem power. The payoff? Fewer dropped calls, longer battery life, better thermal control. Not magic, just data-driven optimization.

LTE Modems (e.g., Exynos)

In enterprise deployments, we implemented AI-assisted reconnection logic in low-signal environments. Session drop rates fell by 30%—and reliability became a differentiator.
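The reconnection logic itself isn't described here. As an illustration only, one plausible shape is capped exponential backoff whose delay shrinks when a signal predictor expects coverage to recover soon. The device then retries when an attempt is most likely to succeed, not on a fixed schedule. The `recovery_probability` input stands in for a hypothetical model output. The base delay, cap, and scaling floor are assumptions.

```python
import random
from typing import Optional


def next_retry_delay(attempt: int, recovery_probability: float,
                     base_s: float = 0.5, cap_s: float = 30.0,
                     rng: Optional[random.Random] = None) -> float:
    """Delay in seconds before reconnection attempt number `attempt` (0-based).

    Capped exponential backoff with full jitter, scaled down when the
    (hypothetical) predictor says signal recovery is likely.
    """
    rng = rng or random.Random()
    ceiling = min(cap_s, base_s * (2 ** attempt))
    # High predicted recovery -> retry sooner; never scale below 10% of the ceiling.
    scaled = ceiling * max(0.1, 1.0 - recovery_probability)
    return rng.uniform(0.0, scaled)
```

The jitter keeps many devices that lose coverage together from retrying in lockstep. The predictor only shortens the wait and never extends it past plain backoff, so a poor prediction costs an extra attempt rather than a longer outage.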

Each of these use cases wasn’t just a technical win—it was a meaningful outcome for someone relying on their device under pressure.

Emerging Trends: What’s Next for Mobile AI

The next frontier is already visible:

Federated Learning

Devices will personalize models locally, learning from users without sending data to the cloud. Privacy is built in, not bolted on.
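Federated learning is commonly built on federated averaging (FedAvg). Each device trains on its own data and uploads only updated model weights. A server then combines them into an average, weighted by each device's number of local training samples. The sketch below shows only that aggregation step on plain Python lists. Production systems add secure aggregation, update compression, and client sampling, none of which is shown.

```python
from typing import List, Sequence, Tuple


def federated_average(updates: Sequence[Tuple[List[float], int]]) -> List[float]:
    """Combine client weight vectors, weighting each by its local sample count.

    `updates` holds (weights, num_samples) pairs; raw user data never appears here.
    """
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(updates[0][0])
    averaged = [0.0] * dim
    for weights, n in updates:
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged
```

For two devices reporting `([1.0, 2.0], 1)` and `([3.0, 4.0], 3)`, the result is `[2.5, 3.5]`. The device with more local data pulls the shared model further toward its own update.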

Hyper-Contextual UX

Phones will adjust based not only on location and task but also on emotion, stress, or urgency, inferring needs before they’re spoken.

AI-Augmented RF Modems

Expect real-time adaptation to interference patterns and signal prediction at the modem layer.
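Signal prediction at the modem layer could take many forms, and the text anticipates learned models. As a lightweight classical baseline, Holt's linear (double) exponential smoothing tracks both the level and the trend of a signal-strength series such as RSRP, then extrapolates a few samples ahead. The smoothing constants are illustrative assumptions.

```python
from typing import Sequence


def holt_forecast(series: Sequence[float], steps_ahead: int = 1,
                  alpha: float = 0.5, beta: float = 0.3) -> float:
    """Forecast `steps_ahead` samples ahead with Holt's linear exponential smoothing.

    alpha smooths the level, beta smooths the trend; needs at least two samples.
    """
    if len(series) < 2:
        raise ValueError("need at least two samples")
    level, trend = series[0], series[1] - series[0]
    for value in series[1:]:
        prev_level = level
        level = alpha * value + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return level + steps_ahead * trend
```

For readings falling steadily by 2 dB per sample (-100, -102, -104, -106 dBm), the forecast two samples ahead is -110 dBm. That gives the modem a head start on a handover or power decision before the signal actually gets there.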

Autonomous Security

Phones will detect and react to behavioral anomalies, cutting off threats before they escalate into breaches.
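Autonomous security of this kind will likely rely on learned behavioral models. The simplest version of the underlying idea is a per-signal baseline: learn the mean and spread of one behavior, then flag observations far outside it. Outbound connections per minute for a single app is one such behavior. The z-score threshold of 3 and the example feature are assumptions for illustration.

```python
import statistics
from typing import Sequence


def is_anomalous(history: Sequence[float], observation: float,
                 z_threshold: float = 3.0) -> bool:
    """Flag an observation more than z_threshold standard deviations from history."""
    mean = statistics.fmean(history)
    stdev = statistics.pstdev(history)
    if stdev == 0:
        return observation != mean  # any change from a perfectly constant baseline
    return abs(observation - mean) / stdev > z_threshold
```

An app that normally opens 9 to 12 connections a minute and suddenly opens 60 would be flagged, and the device could cut it off before anyone files a ticket. Real systems combine many such signals to keep false alarms down.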

The result? A phone that doesn’t just work for you—it understands you.

AI Engineering Challenges: Global Insights

We’re not done. Far from it.

- Model compression vs. capability: 68% of AI engineers cite reducing model size without accuracy loss as a top concern[11]. TinyML models can suffer 20–30% accuracy drops[12].

- Global compliance: 72% of teams cite cross-border regulation as a deployment blocker[6]; only 19% feel well-prepared for new legislation[7].

- Testing evolution: 64% of QA leads report static testing fails AI edge cases[8]. Scenario-based testing adoption has tripled since 2021[9]. 48% of companies now track inference drift in real time[10].
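Model compression spans several techniques, including pruning, distillation, and quantization. To make the size/accuracy tension concrete, the sketch below applies symmetric per-tensor int8 quantization to a list of float weights. Storage drops from 4 bytes (float32) to 1 byte per weight, and each weight picks up a rounding error of at most half a quantization step. This is a toy illustration, not how any particular framework implements quantization.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to int8 codes in [-127, 127] using one shared scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs else 1.0  # avoid dividing by zero for all-zero input
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 codes."""
    return [c * scale for c in codes]
```

The per-weight error here is small, but it accumulates across layers. That accumulation is one reason the accuracy losses cited above can appear on tiny models, and why teams often calibrate or fine-tune after quantizing.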

It takes alignment across engineering, UX, QA, compliance, and even legal. AI isn’t a feature to be slotted in; it demands systemic design.

Conclusion

AI-first mobile isn’t a roadmap. It’s the reality for every serious platform being built today. It’s changing the device and how we engineer everything around it.

Over 20 years, I’ve built phones to survive chaos, impact, and time. Now, we’re creating devices that anticipate chaos: systems that can think, adapt, and respond in moments where every millisecond counts.

Key Takeaways:

- On-device AI is essential for environments where latency, privacy, or connectivity can’t be compromised.

- Engineering has changed: QA, certification, power systems, and architecture all evolve around AI.

- AI touches every layer, from chipset to UX to compliance.

- The results are measurable: Fewer dropped sessions. Smarter resource use. Longer uptime.

We can’t treat AI like a feature anymore. It’s infrastructure. It’s design. It’s responsibility.

About the Author

I am Raghavendra Mangalawarapete Krishnappa, an expert professional with over 20 years of experience launching top-tier mobile devices, including advanced smartphones and ultra-rugged phones, across the U.S. and international markets. I have successfully led the deployment of Mission Critical Push-to-Talk (MCPTT) applications for both iOS and Android platforms and have significantly contributed to the development of 3GPP protocol frameworks for LTE chipsets.

My work portfolio includes pioneering efforts on Exynos and Snapdragon chipsets, 5G technologies, and Laboratory IoT innovations, as well as introducing cutting-edge features globally. I have collaborated seamlessly with diverse teams in the USA, India, and other global locations, forging impactful partnerships with industry leaders such as Samsung Electronics America, AT&T, Verizon, SouthernLinc Wireless, Sonim Technologies, Sasken Communication Technologies, and CES Ltd.

I invite you to connect with me on LinkedIn to explore potential synergies and opportunities!

References

[1] VBQ Speakers. (2023). The most popular TED talks on artificial intelligence. VBQ Speakers. https://www.vbqspeakers.com/news/the-most-popular-ted-talks-on-artificial-intelligence/

[2] Canalys. (2023). Worldwide smartphone market Q2 2023. Canalys. https://www.canalys.com/newsroom/worldwide-smartphone-market-Q2-2023

[3] Xailient. (2020). Model compression allows real-time edge AI processing. Xailient Blog. https://xailient.com/blog/model-compression-allows-real-time-edge-ai-processing/

[4] ResearchGate. (2023). Artificial intelligence in the work context. ResearchGate. https://www.researchgate.net/publication/365805629_Artificial_intelligence_in_the_work_context

[5] TED. (2023). The must-watch TED Talks on AI from 2023. TED Talks Playlist. https://www.ted.com/playlists/841/the_must_watch_ted_talks_on_ai_from_2023

[6] Pew Research Center. (2023). As AI spreads, experts predict the best and worst changes in digital life by 2035. Pew Research. https://www.pewresearch.org/internet/2023/06/21/as-ai-spreads-experts-predict-the-best-and-worst-changes-in-digital-life-by-2035/

[7] Gartner. (2023). Highlights from Gartner IT Infrastructure, Operations & Cloud Strategies Conference. Gartner. https://www.gartner.com/en/articles/highlights-from-gartner-it-infrastructure-operations-cloud-strategies-conference

[8] OpenText. (2023). World Quality Report 2023-2024: The future up close is now available. OpenText. https://blogs.opentext.com/world-quality-report-2023-2024-the-future-up-close-is-now-available/

[9] Dwivedi, Y. K., Hughes, D. L., Ismagilova, E., Aarts, G., Coombs, C., Crick, T., … & Williams, M. D. (2021). Artificial Intelligence (AI): Multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. International Journal of Information Management, 57, 101994. https://www.sciencedirect.com/science/article/pii/S0268401221000761

[10] MIT Technology Review Insights. (2024). Tracking AI Inference Drift in Production. MIT Technology Review. https://www.technologyreview.com/2024/01/04/1086046/whats-next-for-ai-in-2024/

[11] Badii, C., Beltrami, D., & Nesi, P. (2023). Explainable AI for AI-Generated User Profiles: Transparency, Fairness, and Trust for Enhanced Human-Centered Systems. Computers, 12(3), 60. https://www.mdpi.com/2073-431X/12/3/60