Cristiano Amon, the CEO of Qualcomm, said the company's latest modem will significantly outperform Apple's, which has only just begun making its own.

Takeaway Points:

- Qualcomm’s newest high-end modem, the X85, was revealed.

- Apple has been slow to deploy its first in-house modem, the C1, which it launched in a low-key manner last month with the iPhone 16e.

- Google has notified Australian authorities that it received more than 250 complaints worldwide over nearly a year about its AI software being used to create deepfake terrorist content.

X85 modem

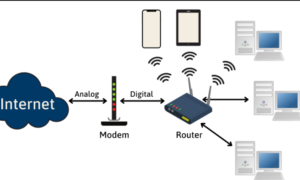

Modems are a key component of smartphones that connect the device to the mobile network. Qualcomm is one of the biggest modem suppliers in the world and, for years, has been the go-to company for Apple’s iPhones.

But in 2019, Apple bought Intel's modem business with a view to designing its own modem in-house, much as it does with its smartphone processors.

It has taken some time for Apple to release its first modem, and it did so quietly with the launch of the iPhone 16e last month. The cellular modem is called the C1.

This week, Qualcomm announced the X85, its latest high-end modem. In an interview on Tuesday, Amon touted the modem's improved performance, suggesting it would open a wide gap between Qualcomm and Apple.

“It’s the first modem that has so much AI; it actually increases the range of performance of the modem so the modem can deal with weaker signals,” Amon said.

“What that will do will set a huge delta between the performance of premium Android devices and iOS devices when you compare what Qualcomm can do versus what Apple is doing.”

The iPhone 16e is Apple’s cheapest smartphone in the latest range. Several reports suggest Apple is working on modems for its higher-end iPhones.

Amon reiterated his earlier prediction that Qualcomm will not supply Apple with modems in 2027.

Asked about potential technological advances in Apple's modem, Amon said modems will be key for AI and that Qualcomm will be able to meet that need.

“If modem is relevant, there’s always a place for Qualcomm technology,” Amon said.

“In the age of AI, modems are going to be more important than they have ever been. And I think that’s going to drive consumer preference about whether they want the best possible modem in the computer that’s in their hand all the time,” Amon added.

Google reports complaints about AI deepfake terrorism content

Google has informed Australian authorities it received more than 250 complaints globally over nearly a year that its artificial intelligence software was used to make deepfake terrorism material.

The Alphabet-owned tech giant also said it had received dozens of user reports warning that its AI program, Gemini, was being used to create child abuse material, according to the Australian eSafety Commission.

Under Australian law, tech firms must supply the eSafety Commission periodically with information about harm minimisation efforts or risk fines. The reporting period covered April 2023 to February 2024.

Since OpenAI’s ChatGPT exploded into the public consciousness in late 2022, regulators around the world have called for better guardrails so AI can’t be used to enable terrorism, fraud, deepfake pornography and other abuse.

The Australian eSafety Commission called Google's disclosure a "world-first insight" into how users may be exploiting the technology to produce harmful and illegal content.

“This underscores how critical it is for companies developing AI products to build in and test the efficacy of safeguards to prevent this type of material from being generated,” eSafety Commissioner Julie Inman Grant said in a statement.

In its report, Google said it received 258 user reports about suspected AI-generated deepfake terrorist or violent extremist content made using Gemini, and another 86 user reports alleging AI-generated child exploitation or abuse material.

It did not say how many of the complaints it verified, according to the regulator.

A Google spokesperson said it did not allow the generation or distribution of content related to facilitating violent extremism or terror, child exploitation or abuse, or other illegal activities.

“We are committed to expanding on our efforts to help keep Australians safe online,” the spokesperson said by email.

“The number of Gemini user reports we provided to eSafety represent the total global volume of user reports, not confirmed policy violations.”

Google used hash-matching, a system that automatically matches newly uploaded images against already-known images, to identify and remove child abuse material made with Gemini.

But it did not use the same system to weed out terrorist or violent extremist material generated with Gemini, the regulator added.

The regulator has fined Telegram and Twitter, later renamed X, for what it called shortcomings in their reports. X has lost one appeal against its fine of A$610,500 ($382,000) but plans to appeal again. Telegram also plans to challenge its fine.