A distinguished researcher in data engineering, Pradeep Kumar Vattumilli sheds light on a transformative approach to modernizing ETL processes through metadata-driven architectures. The method uses metadata to streamline workflows, automate schema adjustments, and improve scalability, with the aim of increasing efficiency and reducing complexity in evolving data ecosystems.

A New Era for ETL Architectures

In the fast-paced realm of data management, traditional ETL (Extract, Transform, Load) systems often struggle to keep up with growing demands for scalability, flexibility, and real-time adaptability. Metadata-driven ETL pipelines change this by separating what a pipeline does, which is described in metadata, from the code that executes it. Because transformations and workflows are configured dynamically from metadata, these pipelines can adapt quickly to evolving business requirements and data formats, often through a metadata update rather than a code change. This approach minimizes manual intervention, improves reusability, and supports rapid scaling, which makes it a strong fit for modern enterprises navigating complex data ecosystems.

The Foundations of Metadata-Driven Design

Metadata-driven ETL takes a transformative approach by decoupling data processing logic from configuration, enabling dynamic and flexible pipeline orchestration. Central to this framework is the organization of metadata into distinct layers (semantic, structural, and operational) that define, respectively, what the data means, how it is formatted, and how it should be processed. This layered architecture supports reusable, adaptable pipeline components and significantly reduces reliance on hardcoded logic. As a result, development cycles are shorter, and maintenance and modifications become simpler. The abstraction improves scalability and agility and lets ETL processes adapt to evolving business requirements and changes in the data landscape with less rework.
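To make the layered idea concrete, here is a minimal Python sketch, assuming a pipeline engine that reads a metadata record instead of containing dataset-specific logic. The class names (`SemanticLayer`, `StructuralLayer`, `OperationalLayer`, `DatasetMetadata`) and the `run_pipeline` function are hypothetical illustrations, not part of any framework described in the original work. The semantic layer is carried along for catalogs and governance but is not consumed by the engine; the structural layer drives type casting, and the operational layer drives null handling and column renaming.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Callable


# Hypothetical metadata layers; the names and fields are illustrative assumptions.
@dataclass
class SemanticLayer:
    description: str  # business meaning of the dataset
    owner: str        # accountable team, useful for governance


@dataclass
class StructuralLayer:
    columns: dict[str, str]  # column name -> declared type ("int", "float", "str")


@dataclass
class OperationalLayer:
    rename: dict[str, str] = field(default_factory=dict)  # source name -> target name
    drop_nulls: bool = True


@dataclass
class DatasetMetadata:
    semantic: SemanticLayer
    structural: StructuralLayer
    operational: OperationalLayer


CASTS: dict[str, Callable[[Any], Any]] = {"int": int, "float": float, "str": str}


def run_pipeline(rows: list[dict[str, Any]], meta: DatasetMetadata) -> list[dict[str, Any]]:
    """Generic engine: identical code processes any dataset described by `meta`."""
    out = []
    for row in rows:
        # Operational layer: skip rows with a null in any declared column.
        if meta.operational.drop_nulls and any(row.get(c) is None for c in meta.structural.columns):
            continue
        # Structural layer: cast each declared column to its declared type.
        typed = {c: CASTS[t](row[c]) for c, t in meta.structural.columns.items()}
        # Operational layer: rename columns to their target names.
        out.append({meta.operational.rename.get(c, c): v for c, v in typed.items()})
    return out


meta = DatasetMetadata(
    SemanticLayer(description="Individual customer orders", owner="sales-analytics"),
    StructuralLayer(columns={"order_id": "int", "amount": "float"}),
    OperationalLayer(rename={"amount": "order_amount"}),
)
rows = [{"order_id": "1", "amount": "9.5"}, {"order_id": "2", "amount": None}]
print(run_pipeline(rows, meta))  # [{'order_id': 1, 'order_amount': 9.5}]
```

In this sketch, onboarding a new dataset means writing a new `DatasetMetadata` record; `run_pipeline` itself does not change.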

Overcoming Contemporary Challenges

Modern data ecosystems face the challenge of integrating diverse data sources, maintaining comprehensive metadata repositories, and ensuring compliance with stringent regulatory requirements. Metadata-driven approaches address these complexities by enabling efficient data lineage tracking, which maps the flow and transformations of data across the pipeline, ensuring transparency and accountability. Automated governance mechanisms streamline policy enforcement, improve audit readiness, and enhance security controls. Additionally, robust metadata management systems organize and catalog information, enabling efficient data discovery and usage. These advancements provide a systematic framework for handling growing data complexities, empowering organizations to achieve scalability, regulatory compliance, and operational efficiency in dynamic environments.
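Lineage tracking falls out naturally when each pipeline step is itself described by metadata: the engine can record which inputs produced which output as it runs. The sketch below is a minimal, in-memory illustration under that assumption. The `LineageGraph` class, the step dictionaries, and the dataset names are hypothetical, and a production system would persist these edges in a metadata repository or catalog rather than in memory.

```python
from __future__ import annotations

from collections import defaultdict


class LineageGraph:
    """In-memory lineage store: each edge links an input dataset to an output dataset."""

    def __init__(self) -> None:
        self._upstream: defaultdict[str, set[str]] = defaultdict(set)
        self._steps: dict[tuple[str, str], str] = {}

    def record(self, inputs: list[str], output: str, transformation: str) -> None:
        # Called by the engine each time a metadata-described step runs.
        for src in inputs:
            self._upstream[output].add(src)
            self._steps[(src, output)] = transformation

    def upstream_of(self, dataset: str) -> set[str]:
        """All datasets that feed `dataset`, directly or transitively."""
        seen: set[str] = set()
        stack = [dataset]
        while stack:
            # .get() avoids inserting empty entries into the defaultdict.
            for parent in self._upstream.get(stack.pop(), set()):
                if parent not in seen:
                    seen.add(parent)
                    stack.append(parent)
        return seen

    def transformation(self, src: str, dst: str) -> str | None:
        """The transformation recorded for the edge src -> dst, if any."""
        return self._steps.get((src, dst))


# Hypothetical step metadata, as a pipeline definition might declare it.
steps = [
    {"inputs": ["crm.customers", "erp.orders"], "output": "staging.customer_orders",
     "transform": "join on customer_id"},
    {"inputs": ["staging.customer_orders"], "output": "mart.revenue_by_customer",
     "transform": "sum(amount) group by customer_id"},
]

lineage = LineageGraph()
for step in steps:
    lineage.record(step["inputs"], step["output"], step["transform"])

print(sorted(lineage.upstream_of("mart.revenue_by_customer")))
# ['crm.customers', 'erp.orders', 'staging.customer_orders']
```

An auditor asking where a reported figure came from can then walk the graph upstream and see the transformation applied at each hop.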

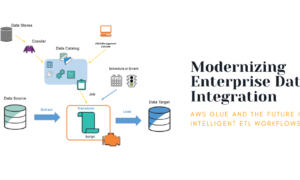

Designing for Flexibility and Scalability

Effective metadata schema design is crucial for achieving scalability in modern ETL systems. By including source-to-target mappings, transformation logic, and data quality rules within the metadata, organizations can create pipelines that adapt seamlessly to evolving requirements. These metadata-driven pipelines support both full and incremental processing patterns, enabling efficient handling of diverse workloads. Parameterized pipeline templates further enhance scalability by allowing reusable components to be dynamically configured based on specific use cases. Additionally, dynamic execution flows leverage metadata to optimize resource utilization and streamline operations, ensuring that the system maintains high performance and operational efficiency even as data volumes and complexity grow.
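The following sketch shows one way a single metadata record might carry a source-to-target mapping, data quality rules, and a load mode that switches between full and incremental processing. It assumes a timestamp watermark for incremental loads, a common pattern but not one the original specifies. The `MAPPING` keys, the `non_negative` check, and the `load` function are hypothetical.

```python
from __future__ import annotations

from datetime import datetime
from typing import Any

# Hypothetical mapping metadata for one target table.
MAPPING: dict[str, Any] = {
    "source": "orders_raw",    # documented for catalogs; not used by load() below
    "target": "orders_clean",
    "columns": {"id": "order_id", "amt": "amount", "ts": "updated_at"},  # source -> target
    "quality_rules": [{"column": "amount", "check": "non_negative"}],
    "load_mode": "incremental",  # "full" or "incremental"
    "watermark_column": "updated_at",
}

# Named checks that metadata may reference.
CHECKS = {"non_negative": lambda v: v is not None and v >= 0}


def load(rows: list[dict[str, Any]], mapping: dict[str, Any],
         last_watermark: datetime | None) -> tuple[list[dict], list[dict], datetime | None]:
    """Apply the mapping; return (accepted rows, rejected rows, new watermark)."""
    accepted: list[dict] = []
    rejected: list[dict] = []
    wm_col = mapping["watermark_column"]
    new_wm = last_watermark
    incremental = mapping["load_mode"] == "incremental"
    for raw in rows:
        # Source-to-target mapping: rename source columns to target columns.
        row = {tgt: raw[src] for src, tgt in mapping["columns"].items()}
        # Incremental mode skips rows already covered by the previous watermark.
        if incremental and last_watermark is not None and row[wm_col] <= last_watermark:
            continue
        # Track the highest timestamp read, so rejected rows are not re-read next run.
        if new_wm is None or row[wm_col] > new_wm:
            new_wm = row[wm_col]
        # Data quality rules decide whether a row is accepted or quarantined.
        if all(CHECKS[rule["check"]](row[rule["column"]]) for rule in mapping["quality_rules"]):
            accepted.append(row)
        else:
            rejected.append(row)
    return accepted, rejected, new_wm


rows = [
    {"id": 1, "amt": 10.0, "ts": datetime(2024, 1, 1)},
    {"id": 2, "amt": -5.0, "ts": datetime(2024, 1, 2)},
    {"id": 3, "amt": 7.5, "ts": datetime(2024, 1, 3)},
]
ok, bad, wm = load(rows, MAPPING, last_watermark=datetime(2024, 1, 1))
print([r["order_id"] for r in ok], [r["order_id"] for r in bad], wm)
# [3] [2] 2024-01-03 00:00:00
```

Because `load_mode` is just a parameter, the same function acts as a reusable template: switching a table from incremental to full reloads is a metadata change, not a code change.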

Enhancing Performance and Resource Optimization

Benchmarks reported in this work indicate that metadata-driven systems outperform conventional ETL on throughput, latency, and resource utilization, and that they sustain consistent performance under high workloads. Smart resource allocation and GPU-accelerated components further improve operational efficiency, making these systems well suited to large-scale deployments.
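Throughput and latency can be measured with a small harness that runs the same transformation over a series of batches, which makes it possible to compare a hardcoded pipeline against a metadata-driven one on identical inputs. This is a generic sketch using only the Python standard library. The `benchmark` function and the sample transform are hypothetical, the harness does not measure CPU or memory utilization, and it says nothing about the specific results reported in the original work.

```python
from __future__ import annotations

import statistics
import time
from typing import Any, Callable, Iterable


def benchmark(transform: Callable[[list[Any]], Any],
              batches: Iterable[list[Any]]) -> dict[str, float]:
    """Run `transform` on each batch; report row count, throughput, and latency."""
    latencies: list[float] = []
    total_rows = 0
    start = time.perf_counter()
    for batch in batches:
        t0 = time.perf_counter()
        transform(batch)
        latencies.append(time.perf_counter() - t0)  # per-batch latency in seconds
        total_rows += len(batch)
    elapsed = time.perf_counter() - start
    if not latencies:
        raise ValueError("benchmark needs at least one batch")
    # statistics.quantiles(n=20) returns 19 cut points; index 18 is the 95th percentile.
    p95 = statistics.quantiles(latencies, n=20)[18] if len(latencies) >= 2 else latencies[0]
    return {
        "rows": total_rows,
        "throughput_rows_per_s": total_rows / elapsed if elapsed > 0 else float("inf"),
        "p50_latency_s": statistics.median(latencies),
        "p95_latency_s": p95,
        "max_latency_s": max(latencies),
    }


# Hypothetical transform standing in for one pipeline step.
def double_amounts(batch: list[dict[str, float]]) -> list[dict[str, float]]:
    return [{**r, "amount": r["amount"] * 2} for r in batch]


batches = [[{"amount": float(i)} for i in range(1_000)] for _ in range(50)]
report = benchmark(double_amounts, batches)
print(report["rows"])  # 50000
```

Timings depend on hardware and input shape, so the numbers are only meaningful when both pipeline variants run through the same harness on the same data.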

Fostering Reusability and Maintainability

The reusability of pipeline components is a hallmark of metadata-driven design. Metadata templates structured with hierarchical patterns enable the creation of complex workflows from modular, well-tested elements. Coupled with robust version control mechanisms, these templates ensure compatibility across pipeline versions while facilitating smooth evolution.
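Hierarchical templates can be modeled as templates that extend a parent and override selected parameters. The sketch below resolves such a chain by deep-merging parameters and applies an assumed compatibility rule borrowed from semantic versioning: a child template may only extend a parent with the same major version. The template names, keys, and the `resolve` function are hypothetical, and the sketch assumes the inheritance chain contains no cycles.

```python
from __future__ import annotations

import copy
from typing import Any

# Hypothetical template registry: each template may extend a parent by name.
TEMPLATES: dict[str, dict[str, Any]] = {
    "base_ingest": {
        "version": "1.2.0",
        "params": {"format": "csv", "retries": 3, "quality": {"fail_on_error": True}},
    },
    "daily_sales_ingest": {
        "version": "1.3.0",
        "extends": "base_ingest",
        "params": {"format": "parquet", "quality": {"max_null_ratio": 0.01}},
    },
}


def deep_merge(parent: dict[str, Any], child: dict[str, Any]) -> dict[str, Any]:
    """Child values override parent values; nested dicts are merged recursively."""
    merged = copy.deepcopy(parent)
    for key, value in child.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


def major(version: str) -> int:
    return int(version.split(".")[0])


def resolve(name: str, templates: dict[str, dict[str, Any]] = TEMPLATES) -> dict[str, Any]:
    """Flatten a template and its ancestors into one parameter set."""
    tmpl = templates[name]
    parent_name = tmpl.get("extends")
    if parent_name is None:
        return copy.deepcopy(tmpl["params"])
    parent = templates[parent_name]
    # Assumed rule: a child may only extend a parent with the same major version.
    if major(tmpl["version"]) != major(parent["version"]):
        raise ValueError(f"{name} {tmpl['version']} is incompatible with "
                         f"{parent_name} {parent['version']}")
    return deep_merge(resolve(parent_name, templates), tmpl["params"])


print(resolve("daily_sales_ingest"))
# {'format': 'parquet', 'retries': 3, 'quality': {'fail_on_error': True, 'max_null_ratio': 0.01}}
```

Deep copies keep resolution side-effect free, so resolving a child never mutates the shared parent template.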

Integrating Business Rules and Governance

When business rules are encoded as metadata, they can be integrated directly into pipeline workflows. Metadata-driven rule engines execute complex logic dynamically while maintaining compliance with regulatory standards. Governance models, in turn, keep technical teams and business stakeholders aligned, fostering collaboration and adherence to organizational policies.
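A minimal rule engine can treat each business rule as data: a condition on a field plus an action to take when the condition holds. The sketch below assumes that rule shape. The `RULES` entries, the VAT and review thresholds, and `apply_rules` are hypothetical examples, not rules from the original work. Restricting conditions to a whitelist of operators keeps rule authors from injecting arbitrary code, and returning the ids of fired rules gives an audit trail for compliance reviews.

```python
from __future__ import annotations

import operator
from typing import Any

# Hypothetical rule metadata, e.g. loaded from a table maintained by business owners.
RULES: list[dict[str, Any]] = [
    {"id": "R1", "when": {"field": "country", "op": "eq", "value": "DE"},
     "then": {"set": "vat_rate", "to": 0.19}},
    {"id": "R2", "when": {"field": "amount", "op": "gt", "value": 10_000},
     "then": {"set": "needs_review", "to": True}},
]

# Only whitelisted comparison operators can be referenced from metadata.
OPS = {"eq": operator.eq, "ne": operator.ne, "gt": operator.gt,
       "ge": operator.ge, "lt": operator.lt, "le": operator.le}


def apply_rules(record: dict[str, Any],
                rules: list[dict[str, Any]]) -> tuple[dict[str, Any], list[str]]:
    """Return an updated copy of the record and the ids of the rules that fired."""
    result = dict(record)
    fired: list[str] = []
    for rule in rules:
        cond = rule["when"]
        # A rule fires only if its field is present and the comparison holds.
        if cond["field"] in result and OPS[cond["op"]](result[cond["field"]], cond["value"]):
            result[rule["then"]["set"]] = rule["then"]["to"]
            fired.append(rule["id"])
    return result, fired


order = {"order_id": 42, "country": "DE", "amount": 12_500}
updated, fired = apply_rules(order, RULES)
print(updated)
# {'order_id': 42, 'country': 'DE', 'amount': 12500, 'vat_rate': 0.19, 'needs_review': True}
print(fired)  # ['R1', 'R2']
```

Changing a threshold or adding a rule here is an update to `RULES`, which business stakeholders can review without reading pipeline code.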

Charting the Path Forward

The adoption of metadata-driven pipelines signifies a paradigm shift in ETL architecture. Organizations can achieve substantial reductions in development overhead, enhanced maintainability, and greater scalability. However, the success of these systems hinges on implementing robust governance frameworks, designing flexible metadata schemas, and leveraging advanced orchestration patterns.

In conclusion, Pradeep Kumar Vattumilli’s work underscores the transformative potential of metadata-driven architectures in the realm of data integration. His insights provide a blueprint for organizations aiming to modernize their ETL systems and achieve data management excellence.