By Yashwanth Kotha, Invited Expert at TechBullion

The seamless integration of personalised content into our lives comes with a significant trade-off: our privacy. As we embrace digital convenience, our data trails become a lucrative resource for targeted advertising, leaving us to ponder the true cost of these personalised experiences.

Are We Designing Our Privacy Away?

Where do we draw the line between personalisation and privacy? Have you ever mentioned a product in conversation, only to find it advertised in your Facebook or Instagram feed shortly after? It’s a familiar scenario for many of us, and it seems to show how companies like Meta harness our search histories, conversations, and casual chats to craft ads with eerie accuracy.

These questions led me to reflect on where the line between the virtual and the real blurs, and on what the new ethics of UX design should be. My name is Yashwanth Kotha, and I am a Senior UX Designer with a deep focus on research who also mentors the next generation of designers. In this article I try to answer a fundamental question: how do we design experiences that feel personal without trespassing on the sacred ground of privacy?

Ethical UX as a New Paradigm

When we speak about product design in 2022, designing solely around user needs isn’t enough. While User-Centric Design focuses on user experiences, it often overlooks broader business and legal considerations, risking commercial viability and personal privacy.

Similarly, Design Thinking fosters innovation, but solutions that ignore business and legal constraints may not be feasible or sustainable in the long term. Attempts to evolve it into Design Thinking 2.0 have improved outcomes but have yet to address emerging challenges such as internationalisation, legal limitations, and user privacy.

Ethical UX design, by contrast, represents a paradigm shift from user exploitation to user empowerment. This philosophy places user needs, rights, and dignity at its core, and it balances the benefits of personalisation with the imperative of privacy. Bear in mind, however, that this approach takes a conscious effort to understand and implement practices that safeguard user data while still providing meaningful, customised experiences.

Designers, and the product team as a whole, need to commit to transparency, consent, and control. Transparency means being clear about how user data is collected and used; consent means permission that is freely given, informed, and unequivocal; and control means users can easily manage their data preferences and privacy settings. These three principles lay the foundation of trust between users and digital platforms.
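To make the three principles more concrete for product teams, here is a minimal Python sketch of how a consent ledger might encode them. It is an illustration under stated assumptions, not any platform’s real implementation: the `ConsentRecord` and `PrivacyPreferences` classes, the purpose names and the notice-version field are all hypothetical. Consent is default-deny (nothing is allowed until the user explicitly opts in), control means the latest choice always wins, and transparency is a plain-language summary of every decision on file.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ConsentRecord:
    """One user decision for one data-use purpose (hypothetical model)."""
    purpose: str          # e.g. "personalised_ads"
    granted: bool         # the user's explicit choice
    notice_version: str   # which plain-language explanation the user was shown
    timestamp: datetime   # when the choice was made (UTC)


@dataclass
class PrivacyPreferences:
    """Per-user consent ledger; a purpose is allowed only if explicitly granted."""
    records: dict[str, ConsentRecord] = field(default_factory=dict)

    def record_choice(self, purpose: str, granted: bool, notice_version: str) -> None:
        # Control: users can change their mind at any time; the latest choice wins.
        self.records[purpose] = ConsentRecord(
            purpose, granted, notice_version, datetime.now(timezone.utc)
        )

    def is_allowed(self, purpose: str) -> bool:
        # Consent: default-deny. No record means no consent; silence is never a "yes".
        record = self.records.get(purpose)
        return record is not None and record.granted

    def explain(self) -> list[str]:
        # Transparency: a readable summary of every decision currently on file.
        return [
            f"{r.purpose}: {'allowed' if r.granted else 'not allowed'} (notice {r.notice_version})"
            for r in self.records.values()
        ]


if __name__ == "__main__":
    prefs = PrivacyPreferences()
    print(prefs.is_allowed("personalised_ads"))  # False: never asked, so not allowed
    prefs.record_choice("personalised_ads", True, "v2")
    print(prefs.is_allowed("personalised_ads"))  # True
    prefs.record_choice("personalised_ads", False, "v2")
    print(prefs.explain())  # ['personalised_ads: not allowed (notice v2)']
```

Storing which version of the explanation the user saw is one way to keep consent informed: if the wording changes, a team could ask again rather than assume the old answer still applies.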

Unethical UX Design

Before going deeper into ethical UX design, let us look at what unethical UX design looks like. The examples below are only a few; in reality there are many more, and some of these practices can be harmful to vulnerable groups such as children and teenagers.

The first example is subscription services such as streaming platforms and gym memberships. Their cancellation processes are often designed so that users must put in considerable effort to overcome one “obstacle” after another before they can finally cancel. The result is ongoing charges and customer frustration.

Then there are social media platforms such as Facebook, Instagram, and TikTok. They all use addictive design features like personalised content and persistent notifications to encourage users to spend more time scrolling through their feeds.

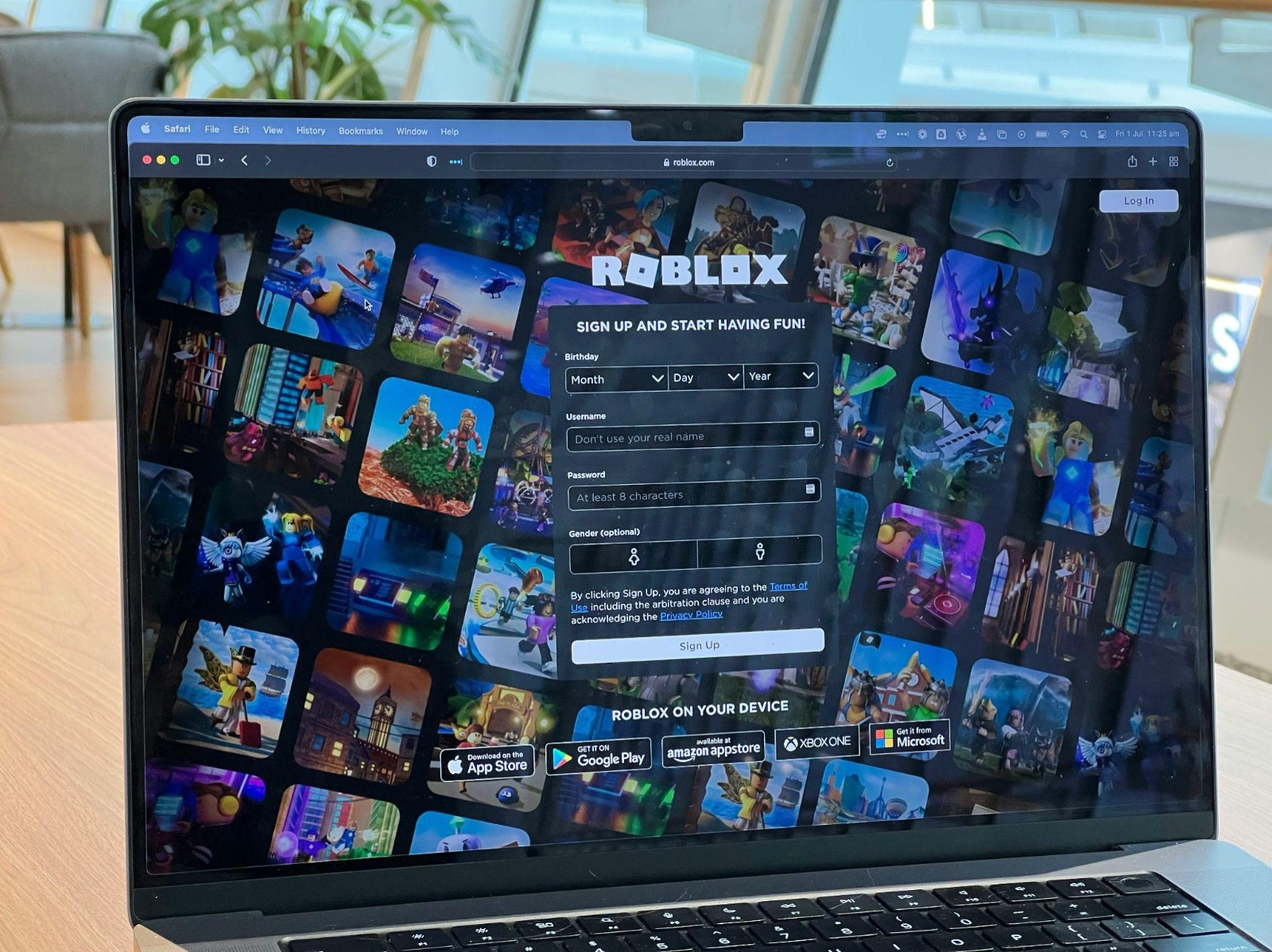

Mobile games form a special category. Many incorporate addictive elements like in-app purchases and reward systems to keep players engaged. While not inherently designed to be addictive, Minecraft’s open-world sandbox gameplay can be highly, even excessively, engaging for children, and it can also tempt them to spend money they do not earn themselves.

Roblox is yet another example. The average age of Roblox users is between 9 and 12. With a vast library of user-generated content and social features, Roblox can be addictive for children who enjoy exploring new games and interacting with friends, especially when their play goes unsupervised. The fact that more than half of all US children now have a Roblox account speaks for itself.

When it comes to data privacy, one of the most frequent ethical concerns is data harvesting and tracking. Social media platforms are often criticised for their extensive data collection practices, including tracking users’ activity across websites and apps without clear consent. This data is then used to build detailed user profiles for targeted advertising, raising concerns about privacy and user exploitation.

Another issue is the use of dark patterns. For example, Yelp, an online platform and mobile app for reviewing local businesses, has been accused of using dark patterns on its website to manipulate users into providing more personal information than they intended. According to these accusations, the platform uses misleading language and confusing opt-out processes to encourage users to share additional data for marketing purposes.

Balance Between Personalisation and Privacy

There is no doubt that users want personalised experiences. They are most loyal to companies that understand their needs, know their preferences, and ultimately save them time. However, this desire does not grant carte blanche access to personal data. The challenge, then, is striking a balance between personalisation and privacy, a journey that requires both wisdom and creativity.

In this context, personalisation must be reimagined. It’s about curating experiences based on what users are willing to share, not what can be covertly extracted from them. Ethical personalisation respects boundaries and seeks to understand where those boundaries lie through clear communication and straightforward options for data sharing.

The Role of Regulation and Self-Governance

While regulations like GDPR and CCPA provide a legal framework for data protection and privacy, ethical UX transcends compliance. It’s about adopting a culture of privacy and ethics that permeates every facet of the design process, even when no regulation explicitly demands it.

Self-governance based on ethical principles can ensure that companies remain ahead of legal mandates, setting new standards for privacy and user respect.

Ethical UX in Action

One example of ethical UX is Fitbit, a popular health and fitness brand known for its app and wearable devices. The company produces smartwatches, fitness trackers, and smart scales, and it collects large amounts of sensitive personal data. Nevertheless, it emphasises user privacy and control over personal information.

Here’s how it achieves this.

First, Fitbit addresses privacy from the very first interaction. It prompts users to set their privacy preferences during the initial setup process, so they can specify their settings right from the start and be comfortable with how their data will be handled.

Second, it explains its data practices transparently. Fitbit’s apps and devices provide clear explanations of how data is collected, used, and shared. Users can access detailed information about the types of data collected, such as activity tracking, sleep patterns, heart rate, and more. This transparency helps users make informed decisions about their privacy settings.

Fitbit also prioritises users’ control over their own health and fitness data. Users decide who can view their data and how it is shared, and granular privacy settings let them customise these preferences according to their comfort level.
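Granular, per-data-type sharing can be sketched as a small access check. This is an illustration rather than Fitbit’s code: the `Audience` levels, the data-type names and the `can_view` function are assumptions chosen for the example. Each data type has its own visibility setting, everything starts private, and a data type without an explicit setting falls back to the most restrictive option.

```python
from enum import Enum


class Audience(Enum):
    """Hypothetical visibility levels a user can pick for each data type."""
    ONLY_ME = "only_me"
    FRIENDS = "friends"
    PUBLIC = "public"


# Privacy by default: every data type starts visible to the owner only.
DEFAULT_SETTINGS: dict[str, Audience] = {
    "heart_rate": Audience.ONLY_ME,
    "sleep": Audience.ONLY_ME,
    "steps": Audience.ONLY_ME,
}


def can_view(settings: dict[str, Audience], data_type: str,
             viewer_is_owner: bool, viewer_is_friend: bool) -> bool:
    """Return True if this viewer may see this data type of the owner's profile."""
    if viewer_is_owner:
        return True  # users can always see their own data
    # Data types without an explicit setting get the most restrictive audience.
    audience = settings.get(data_type, Audience.ONLY_ME)
    if audience is Audience.PUBLIC:
        return True
    if audience is Audience.FRIENDS:
        return viewer_is_friend
    return False


if __name__ == "__main__":
    settings = dict(DEFAULT_SETTINGS)     # each user gets their own copy
    settings["steps"] = Audience.FRIENDS  # share step counts with friends only
    print(can_view(settings, "steps", viewer_is_owner=False, viewer_is_friend=True))       # True
    print(can_view(settings, "heart_rate", viewer_is_owner=False, viewer_is_friend=True))  # False
```

Defaulting to the most restrictive audience means a forgotten or newly added data type is never shared by accident; users widen access only through an explicit choice.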

The company employs robust security measures to protect user data from unauthorised access and breaches. This includes encryption protocols, secure data storage practices, and regular security updates to mitigate potential vulnerabilities.

Overall, Fitbit’s approach to privacy and user control is a positive example in the health and fitness app industry.

Lyft is another product whose team works hard to provide ethical UX.

The popular ridesharing service places a strong emphasis on user safety and privacy through the features below.

The feedback passengers provide on their rides is always anonymous. The feature enables users to share their experiences, report any issues or concerns, and offer suggestions for improvement without revealing their identity. This encourages honest and open communication while protecting user privacy.

Another practice Lyft implements is a phone number masking system that protects the privacy of both drivers and passengers. Rather than exchanging personal phone numbers, the two parties communicate through temporary numbers that Lyft assigns, so they can stay in touch without compromising their privacy.
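The masking idea can be sketched in a few lines. This is a simplified model, not Lyft’s actual system, which is not described here: the `NumberMasker` class, its method names and the one-proxy-per-ride design are assumptions for illustration, and the phone numbers are placeholders. Each active ride borrows a temporary number from a pool, a call to that number is forwarded to the other party, and when the ride ends the mapping is deleted so the temporary number no longer connects the two.

```python
class NumberMasker:
    """Hypothetical sketch of phone number masking, one proxy number per active ride.

    A call to a ride's proxy number is forwarded to the *other* party,
    so neither side ever sees the other's personal number.
    """

    def __init__(self, proxy_pool: list[str]) -> None:
        self._free = list(proxy_pool)                 # proxy numbers not in use
        self._rides: dict[str, tuple[str, str]] = {}  # proxy -> (rider, driver)

    def start_ride(self, rider_phone: str, driver_phone: str) -> str:
        if not self._free:
            raise RuntimeError("no proxy numbers available")
        proxy = self._free.pop()
        self._rides[proxy] = (rider_phone, driver_phone)
        return proxy  # shown to both parties instead of their real numbers

    def route_call(self, proxy: str, caller_phone: str) -> str:
        """Return the real number a call to `proxy` should be forwarded to."""
        if proxy not in self._rides:
            raise LookupError("proxy number is not attached to an active ride")
        rider, driver = self._rides[proxy]
        if caller_phone == rider:
            return driver
        if caller_phone == driver:
            return rider
        raise PermissionError("caller is not part of this ride")

    def end_ride(self, proxy: str) -> None:
        # Delete the mapping so the temporary number stops connecting the two parties.
        del self._rides[proxy]
        self._free.append(proxy)


if __name__ == "__main__":
    masker = NumberMasker(["+1 555 0100"])
    proxy = masker.start_ride(rider_phone="+1 555 0142", driver_phone="+1 555 0199")
    print(proxy)                                    # +1 555 0100
    print(masker.route_call(proxy, "+1 555 0142"))  # +1 555 0199 (rider reaches driver)
    masker.end_ride(proxy)                          # the proxy no longer connects them
```

A production telephony service would also handle text messages, call forwarding itself and reuse of numbers across many simultaneous rides; the sketch only shows the core mapping that keeps personal numbers out of sight.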

Equally important, Lyft gives clear guidelines on data usage. By communicating its practices clearly, Lyft helps users understand how their data is used and empowers them to make informed decisions about their privacy. In this respect, Lyft is well placed to compete with Uber for the top spot in the ride-sharing industry.

The Future of Ethical UX and Privacy

Looking ahead, the future of ethical UX and privacy is not just about implementing the principles but also about fostering an environment where privacy is seen as an integral part of the user experience.

Innovations in technology, such as AI and machine learning, offer new opportunities and challenges in this regard. The ethical use of these technologies in UX design will be a critical frontier in ensuring that personalisation enhances, rather than encroaches upon, user privacy.